Microsoft Edge now supports automatic image tag generation for Narrator and other screen readers

- PHPz (reposted)

- 2023-04-21 20:52:07

A picture is worth a thousand words. Microsoft takes this old adage so seriously that it’s introducing a new feature called “Automatic Image Description” in Edge to help people with visual impairments.

Before we discuss how this technology works, let's look at how websites use images. When a blog publishes an article, the author might attach a screenshot to the post and set attributes on the image. One of these attributes is alt text, a short description of the image that screen readers can read aloud and that search engines also recognize. When a user searches for a keyword that matches an image's alt text, the search engine can surface that image in the results.

Automatic image description in Microsoft Edge

Screen readers, such as Narrator in Windows 10 and 11, are often used by people with visual impairments. These programs use text-to-speech to help users understand what is displayed on the screen, select and execute options, and so on.

Microsoft Edge supports Narrator, which reads the text content of web pages and helps users navigate websites, links, and so on. When the browser loads a page that contains an image, Narrator checks whether the image has alt text assigned to it and, if so, reads it aloud.

According to Microsoft, many websites do not include alt text for images. When the description is blank, screen readers skip the image entirely, and users miss out on any useful information it may contain.
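To make the distinction concrete, here is a minimal sketch that sorts the images on a page by the state of their alt text. It uses only Python's standard `html.parser` module. The class name `ImageAltAudit`, the helper `audit_images`, and the sample markup are all made up for illustration and are not part of Edge or Narrator.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collects <img> tags and sorts them by the state of their alt attribute."""

    def __init__(self):
        super().__init__()
        self.described = []    # (src, alt) pairs with non-empty alt text
        self.empty_alt = []    # alt attribute present but blank
        self.missing_alt = []  # no alt attribute at all

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "")
        if "alt" not in attributes:
            self.missing_alt.append(src)
            return
        # A bare `alt` with no value is reported as None by the parser.
        alt = (attributes["alt"] or "").strip()
        if alt:
            self.described.append((src, alt))
        else:
            self.empty_alt.append(src)


def audit_images(html):
    """Parse an HTML string and return the populated audit object."""
    parser = ImageAltAudit()
    parser.feed(html)
    parser.close()
    return parser


# Hypothetical page fragment with one image in each state.
SAMPLE_PAGE = """
<img src="chart.png" alt="Bar chart of monthly visits">
<img src="divider.png" alt="">
<img src="photo.jpg">
"""

report = audit_images(SAMPLE_PAGE)
print("described:", report.described)
print("blank alt:", report.empty_alt)
print("no alt:", report.missing_alt)
```

Only the first image gives a screen reader something to read aloud. The other two are the kind of image that would otherwise be passed over.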

This is where the new automatic image descriptions in Microsoft Edge come into play. The feature combines image recognition with text-to-speech. When Microsoft Edge detects that an image has no alt text, it sends the image to a machine learning service powered by the Computer Vision API in Azure Cognitive Services.

The service analyzes the content of the image, creates a description in a supported language, and returns it to the browser for Narrator to read aloud. It can also detect text in images through optical character recognition (OCR), with support for 120 languages. Automatic image description works with common image formats such as JPEG, GIF, PNG, and WebP, among others.
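The article does not say what Edge actually sends to the service, so the following is only a sketch of how a caption request to the public Computer Vision REST API could look, assuming its v3.2 `describe` operation and the `Ocp-Apim-Subscription-Key` header. The function names, the example endpoint, the placeholder key, the confidence threshold, and the sample response values are hypothetical. The request is built but never sent.

```python
import json
import urllib.request


def build_describe_request(endpoint, subscription_key, image_url, language="en"):
    """Build (but do not send) a POST request asking for one caption of an image."""
    url = (
        f"{endpoint.rstrip('/')}/vision/v3.2/describe"
        f"?maxCandidates=1&language={language}"
    )
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": subscription_key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def best_caption(response, min_confidence=0.5):
    """Return the highest-confidence caption text, or None if nothing qualifies.

    min_confidence is an illustrative cutoff, not a documented Edge setting.
    """
    captions = response.get("description", {}).get("captions", [])
    if not captions:
        return None
    top = max(captions, key=lambda c: c.get("confidence", 0.0))
    if top.get("confidence", 0.0) < min_confidence:
        return None
    return top.get("text")


# Hypothetical endpoint and key; a real resource has its own values.
request = build_describe_request(
    "https://example.cognitiveservices.azure.com/",
    "YOUR-KEY-HERE",
    "https://example.com/photo.jpg",
)
print(request.get_method(), request.full_url)

# Hand-written response in the shape assumed above; the values are invented.
sample_response = {
    "description": {
        "tags": ["dog", "grass"],
        "captions": [{"text": "a dog running on grass", "confidence": 0.93}],
    }
}
print(best_caption(sample_response))
```

Keeping request construction separate from response parsing lets the parsing logic be exercised offline with a canned response, without credentials or network access.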

Some images are excluded: they are not described, and nothing is read aloud for them. These include images that the site has marked as decorative, images smaller than 50 x 50 pixels, very large images, and photos that may contain gore or adult content.
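The exclusions can be pictured as a simple eligibility check. The sketch below is an illustration under stated assumptions, not Edge's actual logic: the first exception is read as "images the site marks as decorative", the 50 x 50 rule is applied when either side is under 50 pixels, and the upper size limit `MAX_SIDE_PX` is invented because the article only says "very large". `ImageInfo` and `skip_reason` are hypothetical names.

```python
from dataclasses import dataclass

MIN_SIDE_PX = 50    # from the article: images smaller than 50 x 50 are skipped
MAX_SIDE_PX = 4096  # hypothetical cutoff; the article only says "very large"


@dataclass
class ImageInfo:
    width: int
    height: int
    decorative: bool = False      # assumed: site marked the image as decorative
    flagged_unsafe: bool = False  # assumed: classified as gore or adult content


def skip_reason(image):
    """Return why an image gets no automatic description, or None if eligible."""
    if image.decorative:
        return "marked decorative"
    # Assumption: one side under the minimum is enough to count as too small.
    if image.width < MIN_SIDE_PX or image.height < MIN_SIDE_PX:
        return "smaller than 50 x 50"
    if image.width > MAX_SIDE_PX or image.height > MAX_SIDE_PX:
        return "too large"
    if image.flagged_unsafe:
        return "gore or adult content"
    return None


examples = {
    "article photo": ImageInfo(800, 600),
    "toolbar icon": ImageInfo(32, 32),
    "page divider": ImageInfo(900, 60, decorative=True),
    "flagged photo": ImageInfo(640, 480, flagged_unsafe=True),
}
for name, info in examples.items():
    print(name, "->", skip_reason(info) or "eligible for description")
```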

How to enable automatic image descriptions in Microsoft Edge?

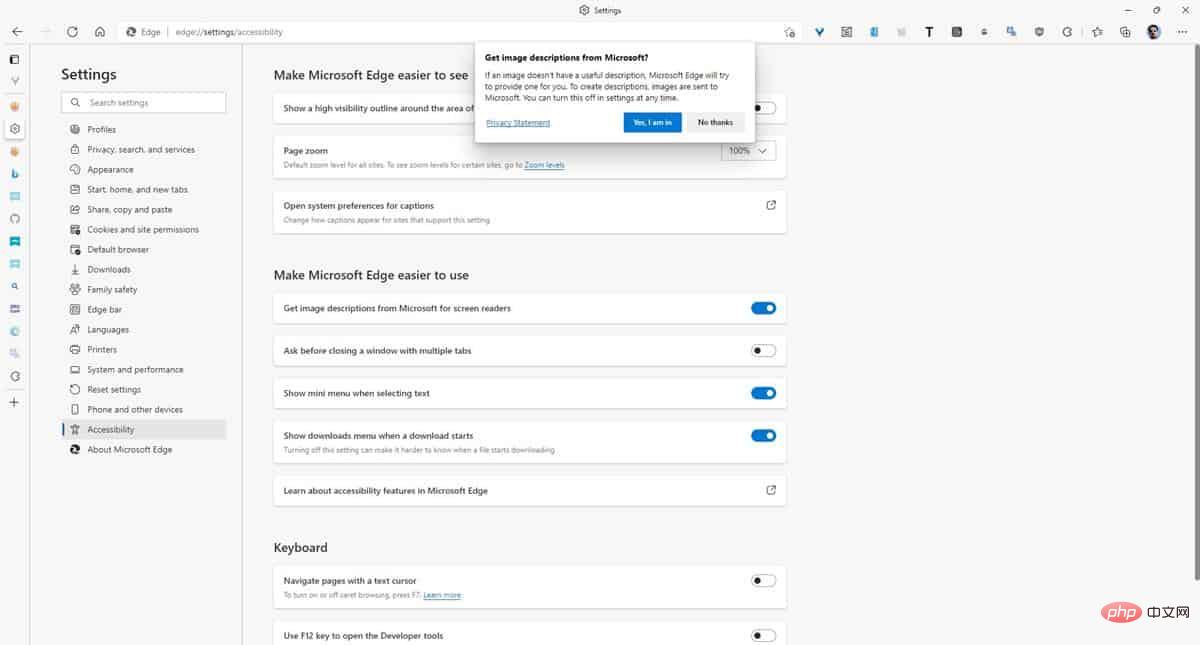

Automatic image description is now available in stable versions of Microsoft Edge for Windows, Linux, and macOS. The option is not enabled by default because it is subject to its own privacy policy. To turn it on, go to the Edge Settings > Accessibility page and click the button next to "Get image descriptions for screen readers from Microsoft." You'll need to accept the privacy policy to use this feature.

Use the hotkey Ctrl + Win + Enter to start Windows Narrator, and the screen reader will read image descriptions aloud as you browse the Internet with Microsoft Edge. You can also toggle this feature from the browser's context menu.
