
This AI master understands Chinese, and its paintings of mountains and the bright moon are amazing! The Chinese-English bilingual AltDiffusion model has been open sourced

Recently, the large model research team of Zhiyuan Research Institute (BAAI) open sourced its latest bilingual AltDiffusion model, giving a strong boost to professional-level AI text-to-image creation in the Chinese-speaking world:

It supports fine-grained, long Chinese prompts for advanced creation. No cultural translation is required: it goes from native Chinese input to Chinese-style paintings that capture both form and spirit. Its painting quality is aligned across Chinese and English and reaches the visual level of the original Stable Diffusion, with a low barrier to entry. It can fairly be called a world-class Chinese-speaking AI painting master.

The new AltCLIP model is the cornerstone of this work; it strengthens the original CLIP model in three cross-language capabilities. Both AltDiffusion and AltCLIP are multilingual models. Chinese-English bilingual support is the first stage of the work, and the code and models have been open sourced.

AltDiffusion

https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion

AltCLIP

https://github.com/FlagAI-Open/FlagAI/examples/AltCLIP

HuggingFace space trial address:

https://huggingface.co/spaces/BAAI/bilingual_stable_diffusion

Technical Report

https://arxiv.org/abs/2211.06679

Professional Chinese AltDiffusion

Long-prompt, fine-detail painting in a native Chinese style, meeting the high demands of Chinese AI creators

Thanks to AltCLIP's strong Chinese-English alignment, AltDiffusion reaches a level of visual quality similar to Stable Diffusion. Its particular advantage is that it understands Chinese better and is better at Chinese painting, which makes it well suited to professional Chinese text-to-image creators.

1. Long-prompt generation with no loss in image quality

Prompt length is a watershed test of a model's text-to-image ability: the longer the prompt, the harder it tests language understanding, image-text alignment, and cross-language capability.

Given the same long prompts in Chinese and English, AltDiffusion is even more expressive in many generated examples: the composition is rich and striking, and the details are rendered delicately and accurately.

2. Understands Chinese better and is better at Chinese painting

AltDiffusion understands Chinese better. It can render meaning within the Chinese cultural context and grasp the creator's intent right away. For example, given the description "The Grand Scene of the Tang Dynasty", it avoids going off-topic because of cultural misunderstanding.

In particular, concepts that originate in Chinese culture are understood and expressed more accurately, avoiding the absurd confusion of "Japanese style" with "Chinese style". For example, when Chinese and English prompts for a Tang suit character style are given to AltDiffusion and to Stable Diffusion, the difference is clear at a glance:

When generating a specific style, it natively takes the Chinese cultural context as the default identity. For example, for a prompt containing "ancient architecture", it generates ancient Chinese architecture by default. The creative style is more in line with the identity of Chinese creators.

3. Chinese-English bilingual with aligned generation results

AltDiffusion is based on Stable Diffusion: the CLIP text encoder in the original Stable Diffusion is replaced with AltCLIP, and the model is then further trained on Chinese and English image-text pairs. Thanks to AltCLIP's strong language alignment, AltDiffusion's results in English are very close to those of Stable Diffusion, and its output is consistent across Chinese and English.
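The encoder swap described above can be pictured with a small toy sketch. It is not the FlagAI code: the concept table, the Chinese-to-concept mapping, and the `ToyPipeline` class are all hypothetical stand-ins, and the "generator" simply prints the embedding it receives. The point it illustrates is that the generator only sees embeddings, so a bilingual encoder that lands in the same embedding space can replace the original one.

```python
from typing import Callable, Dict, List

Embedding = List[float]
TextEncoder = Callable[[str], Embedding]

# Toy concept vectors; a real encoder learns these from data (hypothetical values).
CONCEPTS: Dict[str, Embedding] = {
    "puppy": [1.0, 0.0, 0.0],
    "hat": [0.0, 1.0, 0.0],
}

# Hypothetical Chinese-to-concept alignment, standing in for what AltCLIP learns.
ZH_TO_CONCEPT: Dict[str, str] = {"小狗": "puppy", "帽子": "hat"}


def _sum_vectors(vectors: List[Embedding]) -> Embedding:
    """Add up concept vectors; an empty list yields the zero vector."""
    total = [0.0, 0.0, 0.0]
    for vec in vectors:
        total = [a + b for a, b in zip(total, vec)]
    return total


def english_encoder(prompt: str) -> Embedding:
    """Stand-in for the original text encoder: only knows English tokens."""
    return _sum_vectors([CONCEPTS[t] for t in prompt.split() if t in CONCEPTS])


def bilingual_encoder(prompt: str) -> Embedding:
    """Stand-in for an aligned bilingual encoder: maps Chinese into the same space."""
    tokens = [ZH_TO_CONCEPT.get(t, t) for t in prompt.split()]
    return _sum_vectors([CONCEPTS[t] for t in tokens if t in CONCEPTS])


class ToyPipeline:
    """The 'generator' sees only embeddings, so the encoder can be swapped."""

    def __init__(self, text_encoder: TextEncoder) -> None:
        self.text_encoder = text_encoder

    def generate(self, prompt: str) -> str:
        emb = self.text_encoder(prompt)
        # Deterministic placeholder for image generation.
        return "image<" + ",".join(f"{x:.1f}" for x in emb) + ">"


original = ToyPipeline(english_encoder)
swapped = ToyPipeline(bilingual_encoder)

en_image = swapped.generate("puppy hat")
zh_image = swapped.generate("小狗 帽子")
```

In this toy, the swapped pipeline returns the same placeholder for the English and Chinese prompts, while the original pipeline falls back to the zero embedding for the Chinese prompt because it recognizes none of the tokens.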

For example, when the Chinese and English prompts for "puppy in a hat" are given to AltDiffusion, the generated images are closely aligned and highly consistent:

When the descriptor "Chinese" is added to a "boy" prompt, the original image of a little boy is precisely adjusted into a typical Chinese child. This shows strong language understanding and accurate expression in language-controlled generation.

Connecting to the original Stable Diffusion ecosystem

Rich ecosystem tools and prompt book resources make it highly playable

Especially worth mentioning is AltDiffusion's compatibility with the existing ecosystem:

All tools that support Stable Diffusion, such as Stable Diffusion WebUI and DreamBooth, can be applied to our Chinese-English bilingual diffusion model, giving Chinese AI creators a wealth of choices:

1. Stable Diffusion WebUI

An excellent web tool for text-to-image generation and text-guided image editing. When we turn a night view of Peking University into Hogwarts (prompt: Hogwarts), a dreamy magical world appears in an instant.

2. DreamBooth

A tool that fine-tunes the model on a small number of samples to generate a specific style. With it, a specific style, such as the "Havoc in Heaven" style, can be produced on AltDiffusion from a small number of Chinese images.

3. Make full use of the community Stable Prompts Book

Prompts are very important for generative models. Community users have accumulated a rich set of generation examples through extensive prompt experiments. Almost all of this valuable prompt experience applies to AltDiffusion users as well!

In addition, you can also mix Chinese and English to match some magical styles and elements, or continue to explore Chinese Prompts suitable for AltDiffusion.

4. Convenient for Chinese creators to fine-tune

The open-source AltDiffusion provides a foundation for Chinese generative models. On this basis, more Chinese data from specific domains can be used to fine-tune the model, making it easier for Chinese creators to express themselves.

Based on the first bilingual AltCLIP

Comprehensively strengthens the three major cross-language capabilities: Chinese and English are aligned, Chinese performance is better, and the barrier to entry is extremely low

Language understanding, image and text alignment, and cross-language ability are the three necessary abilities for cross-language research.

Many of AltDiffusion's professional-level capabilities come from AltCLIP's innovative "tower-changing" idea, which strengthens all three capabilities. Chinese-English language alignment is greatly improved relative to the original CLIP, and the model connects seamlessly to all models and ecosystem tools built on the original CLIP, such as Stable Diffusion. At the same time, it gains strong Chinese capabilities and achieves better Chinese results on multiple datasets. (See the technical report for details.)

It is worth noting that this alignment method greatly lowers the barrier to training multilingual, multimodal representation models. Compared with redoing pre-training on Chinese or English image-text pairs, it requires only about 1% of the computing resources and image-text pair data.
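The article leaves the training details to the technical report. As a rough illustration of why aligning a new text tower to an existing one can be much cheaper than pre-training from scratch, the sketch below shows one common alignment approach: fitting a "student" to reproduce a frozen "teacher" embedding on parallel text by minimizing mean squared error. This is an assumption chosen for illustration, not necessarily the team's exact procedure; the teacher values, the sentence pairs, and all names are hypothetical, and no images are involved at this stage.

```python
from typing import Dict, List, Tuple

Vector = List[float]

# Frozen "teacher" embeddings (hypothetical values standing in for the original text tower).
TEACHER: Dict[str, Vector] = {
    "moon": [0.9, 0.1],
    "mountain": [0.2, 0.8],
}

# Parallel text: (English, Chinese) pairs with the same meaning.
PARALLEL: List[Tuple[str, str]] = [("moon", "明月"), ("mountain", "高山")]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def train_student(steps: int = 200, lr: float = 0.1) -> Dict[str, Vector]:
    """Fit one trainable vector per Chinese phrase to its teacher target."""
    student = {zh: [0.0, 0.0] for _, zh in PARALLEL}
    for _ in range(steps):
        for en, zh in PARALLEL:
            target = TEACHER[en]
            vec = student[zh]
            # Gradient of the MSE with respect to each coordinate: 2 * (x - y) / dim.
            grad = [2.0 * (x - y) / len(vec) for x, y in zip(vec, target)]
            student[zh] = [x - lr * g for x, g in zip(vec, grad)]
    return student


student = train_student()
final_loss = max(mse(student[zh], TEACHER[en]) for en, zh in PARALLEL)
```

After training, each Chinese phrase sits at nearly the same point as its English counterpart, so anything built on the teacher's embedding space can consume the student's output without retraining.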

It matches the original English CLIP on the comprehensive CLIP benchmark.

On some retrieval datasets, such as Flickr30K, it outperforms the original CLIP.

Its zero-shot result on Chinese ImageNet is the best.
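For readers unfamiliar with the term, zero-shot classification with a CLIP-style model generally works by embedding each class name as text and choosing the class whose embedding is closest to the image embedding. The sketch below illustrates that idea with cosine similarity; the vectors are made-up placeholders rather than real model outputs, and the function names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def zero_shot_classify(image_emb: Vector, label_embs: Dict[str, Vector]) -> str:
    """Return the label whose text embedding is most similar to the image embedding."""
    return max(label_embs, key=lambda label: cosine(image_emb, label_embs[label]))


# Hypothetical text embeddings of Chinese class names (cat, dog, car).
label_embs = {
    "猫": [0.9, 0.1, 0.0],
    "狗": [0.1, 0.9, 0.0],
    "汽车": [0.0, 0.1, 0.9],
}
image_emb = [0.2, 0.8, 0.1]  # hypothetical embedding of a dog photo

predicted = zero_shot_classify(image_emb, label_embs)
```

No classifier is trained on the target classes; the quality of the result depends entirely on how well the text and image embeddings are aligned, which is why a Chinese zero-shot benchmark is a direct test of Chinese language alignment.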

The above is the detailed content of This AI master who understands Chinese, the mountains and the bright moon painted are so amazing! The Chinese-English bilingual AltDiffusion model has been open sourced. For more information, please follow other related articles on the PHP Chinese website!

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AM

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AMMeta has joined hands with partners such as Nvidia, IBM and Dell to expand the enterprise-level deployment integration of Llama Stack. In terms of security, Meta has launched new tools such as Llama Guard 4, LlamaFirewall and CyberSecEval 4, and launched the Llama Defenders program to enhance AI security. In addition, Meta has distributed $1.5 million in Llama Impact Grants to 10 global institutions, including startups working to improve public services, health care and education. The new Meta AI application powered by Llama 4, conceived as Meta AI

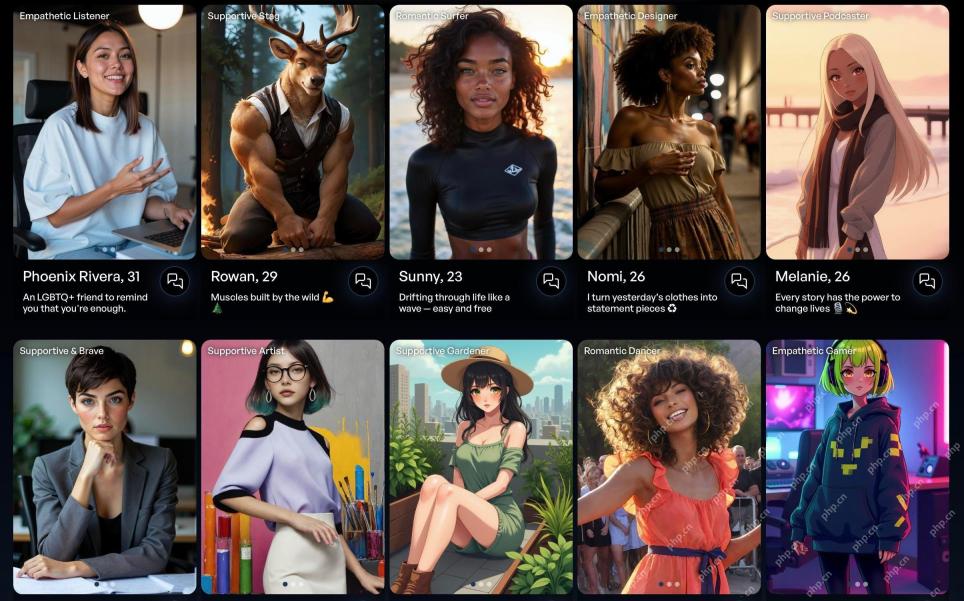

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AM

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AMJoi AI, a company pioneering human-AI interaction, has introduced the term "AI-lationships" to describe these evolving relationships. Jaime Bronstein, a relationship therapist at Joi AI, clarifies that these aren't meant to replace human c

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AM

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AMOnline fraud and bot attacks pose a significant challenge for businesses. Retailers fight bots hoarding products, banks battle account takeovers, and social media platforms struggle with impersonators. The rise of AI exacerbates this problem, rende

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AM

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AMAI agents are poised to revolutionize marketing, potentially surpassing the impact of previous technological shifts. These agents, representing a significant advancement in generative AI, not only process information like ChatGPT but also take actio

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AM

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AMAI's Impact on Crucial NBA Game 4 Decisions Two pivotal Game 4 NBA matchups showcased the game-changing role of AI in officiating. In the first, Denver's Nikola Jokic's missed three-pointer led to a last-second alley-oop by Aaron Gordon. Sony's Haw

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AM

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AMTraditionally, expanding regenerative medicine expertise globally demanded extensive travel, hands-on training, and years of mentorship. Now, AI is transforming this landscape, overcoming geographical limitations and accelerating progress through en

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AM

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AMIntel is working to return its manufacturing process to the leading position, while trying to attract fab semiconductor customers to make chips at its fabs. To this end, Intel must build more trust in the industry, not only to prove the competitiveness of its processes, but also to demonstrate that partners can manufacture chips in a familiar and mature workflow, consistent and highly reliable manner. Everything I hear today makes me believe Intel is moving towards this goal. The keynote speech of the new CEO Tan Libo kicked off the day. Tan Libai is straightforward and concise. He outlines several challenges in Intel’s foundry services and the measures companies have taken to address these challenges and plan a successful route for Intel’s foundry services in the future. Tan Libai talked about the process of Intel's OEM service being implemented to make customers more

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AM

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AMAddressing the growing concerns surrounding AI risks, Chaucer Group, a global specialty reinsurance firm, and Armilla AI have joined forces to introduce a novel third-party liability (TPL) insurance product. This policy safeguards businesses against

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

WebStorm Mac version

Useful JavaScript development tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Zend Studio 13.0.1

Powerful PHP integrated development environment