
Interview with Stuart Russell: Regarding ChatGPT, more data and more computing power cannot bring real intelligence


The Chinese edition of the fourth edition of "Artificial Intelligence: A Modern Approach" was recently released, and Heart of the Machine conducted an exclusive interview with one of its authors, Professor Stuart Russell. A classic in the field of AI, "Artificial Intelligence: A Modern Approach" has been revised several times, and its content and structure reflect the evolving understanding of its two authors. The fourth edition is their latest account of the field, integrating the progress AI has made over the past decade, especially the impact of deep learning, into the book's overall framework. It reflects the two authors' insights into trends in artificial intelligence and into how the discipline itself is organized.

This interview also takes "a modern approach": it aims to present Professor Russell's views on technology trends and the theory of intelligence, and on popular versus classic thinking, from a perspective in step with the development of technology and the times, in the hope of inspiring AI researchers and practitioners.

Stuart Russell is a professor in the Department of Computer Science at the University of California, Berkeley (and a former department chair), and director of the Center for Human-Compatible Artificial Intelligence. He received the National Science Foundation Presidential Young Investigator Award in 1990 and the IJCAI Computers and Thought Award in 1995. He is a Fellow of AAAI, ACM, and AAAS and has published more than 300 papers on a wide range of topics in artificial intelligence. Image source: kavlicenter.berkeley.edu

Professor Russell believes that over the next decade, the focus will shift away from heavy reliance on end-to-end deep learning and back toward systems built from modular representations that are grounded in mathematical logic and have well-defined semantics, with deep learning playing a crucial role in processing raw sensory data. He stresses that modular, semantically well-defined representations need not be hand-designed or inflexible; such representations can be learned from data.

As for ChatGPT, Professor Russell believes the key is to delineate the task domain and work out the circumstances in which it is used: ChatGPT can be a very good tool, and it will be more valuable if it is anchored in facts and integrated with planning systems. The problem is that we currently do not know how ChatGPT works, and we may never figure it out; doing so would require conceptual breakthroughs that are hard to predict.

He believes that to build truly intelligent systems, we should pay more attention to mathematical logic and knowledge-based reasoning, because we need to build systems on methods we understand in order to ensure that AI does not get out of control. He does not believe that scaling up is the answer, nor that more data and more computing power will solve the problem. That view, he says, is overly optimistic and intellectually uninteresting.

If we ignore the fundamental problem of deep learning's data inefficiency, he says, "I worry that we are fooling ourselves into thinking that we are moving toward true intelligence. All we are really doing is adding more and more pixels to something that is not really an intelligent model at all."

-1-

Heart of the Machine: In your view, do large pre-trained language models (LLMs), represented by ChatGPT, fundamentally raise artificial intelligence to a higher level? Do LLMs overcome some of the fundamental problems of deep learning systems, such as acquiring common sense and reasoning with knowledge?

Stuart Russell: The first answer that comes to mind is that we don't know, because no one knows how these models work, including the people who build them.

What does ChatGPT know? Can it reason? In what sense does it understand its answers? We do not know.

A friend of mine at Oregon State University asked a model, "Which is bigger, an elephant or a cat?" The model answered, "The elephant is bigger." But when asked in a different way, "Which one is bigger than the other, the elephant or the cat?", the model answered, "Neither the elephant nor the cat is bigger than the other." So does the model know which is bigger, an elephant or a cat? It does not, because when asked another way it reaches a contradictory conclusion.

So, what does the model know?

Let me give another example, one that actually happened. The training data for these models contains a large number of chess games written in a standard notation. A game looks like the sequence e4 e5 Nf3 Nc6 Bb5... Chess players know what these symbols mean and what moves the sequences describe. The model does not. It does not know there is a chessboard, and it does not know what a move is; to the model, these symbols are just symbols. So when you play blindfold chess with it and say "Let's play chess, g4," it may reply "e6." That may well be a good move, but the model has no concept of playing chess. It just finds similar sequences in its training data, transforms them appropriately, and generates the next move. 80% or even 90% of the time it produces a good move, but the rest of the time it makes moves that are silly or completely illegal, because it has no concept of making a move on a chessboard.

And not just in chess: I think this applies to everything large models do today. 80% of the time they look like a very smart person; the other 20% of the time they look like a complete idiot.

It seems smart because it has a lot of data. It has read almost every book and article humans have written so far, yet despite taking in that enormous amount of useful information, it still spits out things without any idea of what they mean. So in this sense, I think large language models are probably not an advance in artificial intelligence.

What is truly impressive about ChatGPT is its ability to generalize: it finds similarities between the conversation it is having with a user and the text it has read before, and transforms them appropriately, so its answers look smart. However, we don't know how the model does this, we don't know where the limits of this generalization lie, and we don't know how this generalization is implemented in the circuit.

If we really understood it, it could indeed be called an advance in artificial intelligence, because we could use it as a foundation and build other systems on top of ChatGPT. But at this stage, everything remains a mystery. The only way forward seems to be: the model doesn't work? Fine, give it more data and make it a little bigger.

I don't think scaling up is the answer. Data will eventually run out, and new situations keep arising in the real world. When we write chess programs, the ones that really play well can cope with positions they have never seen before. There is only one reason: these programs understand the rules of chess and can envision how a position on the board evolves, including where pieces can move and the opponent's possible replies, even moves that have never appeared in any recorded game.
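To make the contrast concrete, here is a minimal Python sketch, not taken from the interview, of what it means for a program to "understand" one rule: a knight's move is computed from a single geometric rule that works on any square, including squares that never appear in a recorded game. The helper names are hypothetical, and the sketch checks only the geometry of the jump; it ignores occupied squares, check, and every other rule of chess.

```python
# Illustrative sketch: one geometric rule generates a knight's moves
# from any square, whether or not that position appears in training data.
FILES = "abcdefgh"

# The eight (file, rank) offsets of the knight's L-shaped jump.
KNIGHT_OFFSETS = [(1, 2), (2, 1), (2, -1), (1, -2),
                  (-1, -2), (-2, -1), (-2, 1), (-1, 2)]


def square_to_coords(square):
    """Convert algebraic notation such as 'f3' to 0-based (file, rank)."""
    return FILES.index(square[0]), int(square[1]) - 1


def coords_to_square(file, rank):
    """Convert 0-based (file, rank) back to algebraic notation."""
    return f"{FILES[file]}{rank + 1}"


def knight_targets(square):
    """Squares a knight on `square` could reach on an otherwise empty board."""
    f, r = square_to_coords(square)
    targets = []
    for df, dr in KNIGHT_OFFSETS:
        nf, nr = f + df, r + dr
        if 0 <= nf < 8 and 0 <= nr < 8:  # the jump must stay on the board
            targets.append(coords_to_square(nf, nr))
    return sorted(targets)


def is_knight_jump(src, dst):
    """True if dst is a knight's jump away from src (ignores other pieces)."""
    return dst in knight_targets(src)


print(knight_targets("g1"))  # ['e2', 'f3', 'h3']
```

Under this rule, the move Nf3 from the opening sequence quoted earlier is simply `is_knight_jump("g1", "f3")`, and the same function answers for squares no game in any dataset has used.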

We are still far from being able to do this in general real-world situations, and I don't think large language models bring us any closer to that goal, except, you might say, that they let us use the human knowledge stored in text.

Large language models would be more useful if we could anchor them in known facts. Think of the Google Knowledge Graph, which contains 500 billion facts. If ChatGPT were anchored in these facts and gave correct answers to questions about them, it would be more reliable.
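A minimal sketch of what "anchoring" could mean, assuming a toy fact store: the answer to a size comparison is derived from a stored fact, so it cannot flip when the question is rephrased or the arguments are swapped. The dictionary and its mass figures are rough values chosen for illustration, not data from the Google Knowledge Graph, and this is not how any production system couples a language model to a knowledge base.

```python
# Toy fact store: rough, illustrative adult masses (not real knowledge-graph data).
TYPICAL_MASS_KG = {
    "elephant": 4000.0,
    "cat": 4.0,
}


def lookup_mass(entity):
    """Fetch a stored fact; refuse to answer rather than guess if it is missing."""
    if entity not in TYPICAL_MASS_KG:
        raise KeyError(f"no stored fact for {entity!r}")
    return TYPICAL_MASS_KG[entity]


def bigger(a, b):
    """Whichever of a and b has the larger stored mass."""
    return a if lookup_mass(a) >= lookup_mass(b) else b


def smaller(a, b):
    """Whichever of a and b has the smaller stored mass."""
    return b if bigger(a, b) == a else a


# The same fact answers every phrasing and argument order consistently.
print(bigger("elephant", "cat"), bigger("cat", "elephant"))  # elephant elephant
print(smaller("elephant", "cat"))                            # cat
```

The design choice worth noting is the `KeyError`: when no fact is stored, the sketch declines to answer instead of producing a fluent guess.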

If we can find a way to couple large language models to an inference engine that can reason and plan correctly, you could say we have broken through a bottleneck in artificial intelligence. We already have many planning algorithms, but it is hard to get them to plan correctly and sensibly for a task such as building a car, and hard to supply them with the knowledge they need, because there is too much that must be understood; writing it all down and making sure it is all correct is very difficult. But large language models have read every book about cars. Perhaps they can help us build the necessary knowledge, or simply answer the necessary questions on demand, so that we can capture all of this knowledge during planning.

Compared with treating ChatGPT as a black box that helps you do something, combining large language models with planning algorithms, so that the models feed knowledge into planning systems, would produce truly valuable business tools. As far as I know, some people are already working in this direction, and if they succeed it will be a big step forward.
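The planning half of this idea can be sketched as a simplified STRIPS-style planner (preconditions and add lists only, no delete lists) driven by breadth-first search. The car-assembly actions are hypothetical and hard-coded here; in the setup Russell describes, this kind of action knowledge is what a language model might help supply, which the sketch does not attempt.

```python
from collections import deque
from typing import Optional

# Hypothetical action knowledge: preconditions and effects ("add" lists).
# Hard-coded here; the interview suggests a language model might help supply it.
ACTIONS = {
    "build_chassis":  {"pre": set(),                 "add": {"chassis"}},
    "install_engine": {"pre": {"chassis"},           "add": {"engine"}},
    "install_wheels": {"pre": {"chassis"},           "add": {"wheels"}},
    "paint_body":     {"pre": {"chassis", "engine"}, "add": {"painted"}},
}


def plan(start: frozenset, goal: set) -> Optional[list]:
    """Breadth-first forward search: shortest action sequence whose result contains goal."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds
            return steps
        for name, action in ACTIONS.items():
            if action["pre"] <= state:  # the action is applicable
                nxt = frozenset(state | action["add"])
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None  # goal unreachable with the known actions


print(plan(frozenset(), {"engine", "wheels", "painted"}))
# ['build_chassis', 'install_engine', 'install_wheels', 'paint_body']
```

The search itself is generic; all of the domain knowledge lives in `ACTIONS`, which is why the quality and completeness of that table is the bottleneck the interview points to.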

Heart of the Machine: As a teacher, what do you think of ChatGPT? Would you allow students to use it to write papers? As a user, what do you think of the many applications ChatGPT has spawned, especially commercial ones?

Stuart Russell: A few weeks ago, when I was talking with people from the business world at the World Economic Forum in Davos, everyone asked me about large language models and how to use them in their companies.

I think you can look at it this way: would you put a six-year-old in that position in your company?

Although their abilities differ, I think the comparison is apt. Large language models and ChatGPT are not trustworthy: they have no common sense and will state false information with complete seriousness. So if you are going to use ChatGPT or a similar model in your company, you have to be very careful. You can, of course, think of certain positions or responsibilities in a company as nodes in a network, where language goes in and language comes out; many jobs, such as journalist and professor, look like this. But that does not mean you can replace them with ChatGPT.

We must be very cautious when it comes to education. The arrival of ChatGPT has caused many people to panic. Some say we must ban ChatGPT in schools. Others say banning it would be ridiculous, citing a 19th-century debate in which some argued that mechanical calculators had to be banned, because if students started using them they would never learn mathematics properly.

Doesn't this sound convincing? Doesn't it seem that we don't need to ban ChatGPT? However, the analogy is completely wrong. A mechanical calculator automates a purely mechanical process. Multiplying two 26-digit numbers is entirely mechanical: it is a set of instructions, and you just follow them step by step to get the answer. Following instructions has limited intellectual value, especially when the person does not understand what the instructions do.
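The "set of instructions" for multiplication is the schoolbook algorithm: multiply digit by digit, carry, and add the shifted rows. A minimal Python sketch for non-negative decimal strings, assuming the inputs contain only the digits 0 to 9, shows how little understanding is needed to execute it:

```python
def long_multiply(a: str, b: str) -> str:
    """Schoolbook multiplication of two non-negative decimal strings."""
    # result[k] holds the digit for 10**k (least significant digit first).
    result = [0] * (len(a) + len(b))
    for i, da in enumerate(reversed(a)):
        carry = 0
        for j, db in enumerate(reversed(b)):
            total = result[i + j] + int(da) * int(db) + carry
            result[i + j] = total % 10   # keep one digit in this column
            carry = total // 10          # carry the rest to the next column
        result[i + len(b)] += carry      # final carry of this row
    digits = "".join(str(d) for d in reversed(result)).lstrip("0")
    return digits or "0"


x = "12345678901234567890123456"   # 26 digits
y = "98765432109876543210987654"   # 26 digits
print(long_multiply(x, y) == str(int(x) * int(y)))  # True
```

Every step is a table lookup or a carry; nothing in the procedure requires knowing what a number is, which is the point of the contrast with reading, understanding, and writing.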

But what ChatGPT replaces is not mechanical instruction-following; it is the ability to answer questions, to read and understand, and to organize ideas into a document. If you never learn these and let ChatGPT do them for you, you may really end up useless.

We now have electronic calculators, but we still teach children arithmetic. We teach them the rules of arithmetic and try to help them understand what numbers are and how they correspond to things in the physical world. Only after they have gained that understanding and mastered the rules do we give them calculators, so that they no longer have to carry out the mechanical procedures by hand.

When I was young, before there were calculators, we used printed tables of the values of sine, cosine, and logarithm functions, and no one ever said that using these tables would keep you from learning mathematics.

So we have to work out when it is appropriate for students to start using a tool like ChatGPT. To answer your question: if you can identify the mindless parts of writing a paper (and in fact much of writing a paper is mindless, just mechanically repeating tedious, boring steps), then using ChatGPT for those parts is probably fine, and I have no problem with that.

However, writing is not all tedious process. Writing is essentially a form of thinking, and it is also how people learn to think. The last thing we want is for someone to use ChatGPT blindly, understanding neither the question nor the answer.

As for other generative applications, such as generating images or music, I think the situation is similar: the key is to clearly delineate the task. I think the process of creating art can be roughly divided into two parts: first having an idea of what you want to create, and then the relatively mechanical process of actually producing it according to your vision. For some people the latter is very hard; however hard they try, they cannot make a good-looking picture. That is why we have trained artists, especially commercial artists, whose work involves relatively little creativity and depends more on the ability to produce images to order. I think this profession is under serious threat.

I had this experience when writing my book. "Artificial Intelligence: A Modern Approach" has five to six hundred illustrations, almost all of which I drew myself. Producing a good illustration or diagram is a slow, painstaking process that takes a great deal of skill. If there were a large model or application that could produce diagrams or technical illustrations like those in my book, I would be happy to use it.

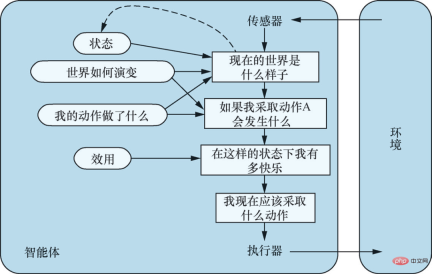

Model-based, utility-based agent. Source: "Artificial Intelligence: A Modern Approach (4th Edition)", Figure 2-14

General learning agent. Source: "Artificial Intelligence: A Modern Approach (4th Edition)", Figure 2-15

-2-

Heart of the Machine: We don't know how ChatGPT works internally, but through engineering we have obtained a tool that is useful in certain situations; ChatGPT also seems to be a good example of bringing humans into the loop. From an engineering perspective, is ChatGPT an advance?

Stuart Russell: I'm not sure ChatGPT can be called engineering. Generally speaking, we think of engineering as a discipline that applies science, combining knowledge of physics, chemistry, mechanics, electronics, and so on, to create things useful to humans in complex and ingenious ways. At the same time, we understand why those things are useful, because their useful properties are achieved through specific methods and can be reproduced.

But how was ChatGPT developed? Incorporating human feedback is useful, but judging from the result, ChatGPT was obtained by running gradient descent on enormous datasets. This reminds me of the 1950s, when a lot of effort was put into genetic programming: the hope was to achieve intelligence by evolving Fortran programs in imitation of biological evolution, and it failed miserably.

In theory, if you have enough Fortran programs and let them undergo enough mutations, it is possible in principle to produce a Fortran program smarter than humans. But this in-principle possibility has not come true in practice.

Now, you run gradient descent on a big enough circuit with enough data, and suddenly you can create real intelligence? I think that is unlikely, perhaps slightly more likely than evolving Fortran programs, but I can't say for sure. Maybe Fortran programs are more likely, because there is reason to think that Fortran programs are a more expressive representation language than circuits, and when people abandoned evolving Fortran programs in 1958, computing power was 15 or 16 orders of magnitude less than what we have today.

Heart of the Machine: Setting aside the word "engineering", what do you think of what OpenAI is doing?

Stuart Russell: What OpenAI is doing, you could call cookery, because we really don't know how these models work. It's like baking a cake: I don't really know how the ingredients turn into a cake. Humans have been making cakes for thousands of years, trying many different ingredients and many different methods; after a great deal of "gradient descent", one day they discovered something magical, the cake. That is cooking. Today we understand a little more about the underlying principles of cakes, but still not completely. There is only so much we can get out of cooking, and the process is not of great intellectual value.

If, because of some fundamental problem with ChatGPT, one day you can no longer get the answer you want by entering prompts or instructions, what do you do? Modify the recipe again? Raise the token limit from 4,000 to 5,000 and double the number of network layers? That is not science, and I don't think it is intellectually interesting.

Research that tries to understand how large language models work is certainly valuable, since ChatGPT generalizes to a surprising extent, and only by figuring out how can we truly develop meaningful intelligent systems. Many people are working on this now, and many papers have been published about it. But whether ChatGPT's internal mechanism can be understood is hard to say; it may be too complex for us to reverse-engineer what is happening inside.

An interesting analogy is what happened between humans and dogs 30,000 years ago. We don't understand how a dog's brain works, and it is hard to know exactly what a dog is thinking, but we learned to domesticate them, and now dogs are part of our lives, playing a variety of valuable roles.
We have found that dogs are good at many things, including guarding homes and playing with children, but we did not achieve this through engineering; we selected and improved those traits through breeding, by tweaking the recipe. But you wouldn't expect your dog to write your articles for you; you know it can't do that.

What is surprising about the whole ChatGPT phenomenon, I think, is that this is the first time an AI system has truly entered the public eye, which is a very big change. OpenAI itself put it well: although ChatGPT is not real intelligence, it gives people a taste of what real (artificial) intelligence would be like, intelligence that everyone could use to do all kinds of things they want to do.

Heart of the Machine: Another concern for many people is the disappearance of intermediate tasks brought about by LLMs. From the perspective of technical iteration, do intermediate tasks such as semantic analysis and syntactic analysis still have much value, and will they really disappear in the future? Are mid-tier AI researchers and practitioners, those without powerful hardware resources or strong domain knowledge, in danger of losing their jobs?

Stuart Russell: That's a good question. The fact is that it is hard to publish papers on semantic analysis these days. In fact, it is hard to get anyone in the NLP community to listen to anything unless you talk about large language models or about setting new records on big benchmarks with them. Almost all papers are about setting new benchmark records; it is difficult to publish work that is not, such as work on linguistic structure, language understanding, semantic analysis, or syntactic analysis. So the big benchmarks used to evaluate large models have become the only route to publication, and these benchmarks have nothing to do with language.
In a sense, in natural language processing today we no longer study language, which I think is very unfortunate. The same is true of computer vision: in most computer vision research today we no longer study vision; we study only data, training, and prediction accuracy.

As for how AI should develop next, I think we should focus on methods we understand, centered on knowledge and logical reasoning. There are two reasons. First, we want AI systems to be reliable: we need to guarantee mathematically that they are safe and controllable, which means we have to understand the systems we build. Second, consider data efficiency, which will be necessary if we are to achieve general intelligence, given that the human brain runs on 20 watts rather than 20 megawatts. Circuits are not a very expressive language: the data efficiency of these algorithms is orders of magnitude lower than that of human learning, and you would have a hard time writing down much of what we know about the world as circuits. Once we had general-purpose computers and programming languages, we stopped using circuits, because it was much simpler and easier to express what we wanted as a program. The AI community has largely forgotten this, and at one point many people went astray.

Heart of the Machine: An important update in the fourth edition of "Artificial Intelligence: A Modern Approach" is that it no longer assumes that an AI system or agent has a fixed goal. Previously, the purpose of artificial intelligence was defined as "creating systems that try to maximize expected utility, with goals set by humans." Now goals are no longer set for AI systems. Why this change?

Stuart Russell: There are several reasons. First, as artificial intelligence moves out of the laboratory and into the real world, we find it very hard to define our goals completely correctly.
For example, when you are driving you want to reach your destination quickly, but that doesn't mean you should drive at 200 miles an hour; and if you tell a self-driving car to put safety first, it may end up parked in the garage forever. There are trade-offs among getting to your destination safely and quickly, being courteous to other drivers, not making passengers uncomfortable, complying with laws and regulations, and so on. There is always some risk on the road, and some unavoidable accidents will happen. It is hard to write down all your goals for driving, and driving is only a small, simple part of life. So from a practical perspective, fixing goals for AI systems in advance is unreasonable.

Second, there is the King Midas problem, an example I give in the book. Midas was a king in Greek mythology. He was very greedy and asked a god for the power to turn everything he touched into gold. His wish was granted, and everything he touched turned to gold. He achieved his goal, but then his water and food turned to gold too, and so did his family when he touched them. In the end he died miserably, surrounded by gold. This reminds us that when you define goals for very powerful systems, you had better make sure those goals are absolutely correct. But since we now know we cannot do that, it becomes increasingly important, as AI systems grow more powerful, that they know they do not know what their true goals are.

A goal is actually a very complicated thing. For example, if I say I want to buy an orange for lunch, that can be a goal, right? In everyday contexts, a goal is seen as something that can be achieved, and once it is achieved, it is done. But in rational choice theory as defined in philosophy and economics, there is no such thing as a goal. What we have are preferences, or rankings, over the various possible futures. Each possible future extends from now to the end of time.
Each one contains everything in the universe. I think this is a more complex and deeper understanding of objectives, of what humans really want.

Heart of the Machine: What impact will this change have on the future development of artificial intelligence?

Stuart Russell: Since artificial intelligence was born alongside computer science in the 1940s and 1950s, researchers have needed a concept of intelligence on which to base their research. Although some early work was more about imitating human cognition, it was the concept of rationality that ultimately won out: the more a machine can achieve its intended goals through its actions, the more intelligent we consider it. In the standard model of artificial intelligence, this is the kind of machine we strive to create: humans define the goal, and the machine does the rest. For example, for a problem-solving system in a deterministic environment, we give it a cost function and a goal test and let the machine find the lowest-cost sequence of actions that reaches a goal state; for a reinforcement learning system in a stochastic environment, we give it a reward function and a discount factor and let the machine learn a policy that maximizes the expected sum of discounted rewards. The same approach appears outside AI: control theorists minimize cost functions, operations researchers maximize rewards, statisticians minimize expected loss functions, and economists maximize individual utility or social welfare.

But the standard model is actually wrong. As I just said, it is almost impossible for us to specify our goals completely correctly, and when a machine's goals do not match what we really want, we may lose control of it, because the machine will act preemptively and do whatever it takes to ensure that it achieves its stated goals.
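Both formulations of the standard model can be sketched in a few lines of Python. In each, the objective (a cost function and goal test, or a reward sequence and discount factor) is supplied from outside and stays fixed, which is exactly the assumption Russell criticizes. These are minimal versions written for illustration, not the book's own pseudocode, and the road map is a made-up example.

```python
import heapq


def uniform_cost_search(start, successors, goal_test):
    """Lowest-cost action sequence from start to a state passing goal_test.

    successors(state) yields (action, next_state, step_cost), step_cost >= 0.
    Returns (cost, actions), or None if no goal state is reachable.
    """
    frontier = [(0, 0, start, [])]  # (path cost, tie-breaker, state, actions)
    best = {start: 0}
    counter = 1
    while frontier:
        cost, _, state, actions = heapq.heappop(frontier)
        if cost > best.get(state, float("inf")):
            continue  # stale entry: a cheaper path to this state was found later
        if goal_test(state):
            return cost, actions
        for action, nxt, step in successors(state):
            new_cost = cost + step
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(frontier, (new_cost, counter, nxt, actions + [action]))
                counter += 1
    return None


def discounted_return(rewards, gamma):
    """Sum over t of gamma**t * r_t, the quantity an RL agent maximizes in expectation."""
    return sum(gamma ** t * r for t, r in enumerate(rewards))


# A made-up road map: state -> [(action, next_state, cost)].
ROADS = {
    "A": [("A->B", "B", 1), ("A->C", "C", 4)],
    "B": [("B->C", "C", 1), ("B->D", "D", 5)],
    "C": [("C->D", "D", 1)],
    "D": [],
}

print(uniform_cost_search("A", lambda s: ROADS[s], lambda s: s == "D"))
# (3, ['A->B', 'B->C', 'C->D'])
print(discounted_return([1, 1, 1], 0.5))  # 1.75
```

Nothing in either function can question the objective it is handed: if the cost numbers or the goal test are wrong, the search optimizes the wrong thing just as diligently.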
Almost all existing AI systems are developed within the framework of the standard model, and this creates big problems. In "Artificial Intelligence: A Modern Approach (4th Edition)", we propose that artificial intelligence needs a new model, one that emphasizes the AI system's uncertainty about its goals. This uncertainty leads the machine to learn human preferences and to ask for human input before taking action. While the AI system is running, there must be some information flowing from the human to the machine that reveals the human's true preferences, rather than the human's goals becoming irrelevant once they have initially been set. This requires decoupling machines from fixed goals and instead coupling machines and humans in both directions. The standard model can be thought of as an extreme case in which the desired human goal can be specified completely and correctly within the machine's scope, as in playing Go or solving a puzzle. In the book we also give some examples of how the new model works, such as uncertain preferences, the off-switch problem, and assistance games. But these are just the beginning; research has only just started.

Heart of the Machine: In the fast-moving field of artificial intelligence, how can one keep up with technology trends without blindly chasing hot topics? What should AI researchers and practitioners keep in mind?

Stuart Russell: To build truly intelligent systems, I think the fundamental problem is being able to use an expressive language to represent the various regularities contained in the universe. The essential difference between intelligence and circuits is that, as far as we know, circuits cannot represent those regularities well, which in practice shows up as extremely low data efficiency. As a simple example, I could write down the definition of the sine function as a mathematical formula, or I could try to describe the sine function empirically using a huge number of pixels.
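A minimal Python sketch of the two options, under assumptions of our own: the "pixel" model memorizes sin at evenly spaced points on [0, π] and answers by nearest lookup, while the "definition" uses the Taylor series together with periodicity. The number of samples is arbitrary and kept small so the example runs quickly.

```python
import bisect
import math


def make_sample_model(lo, hi, n):
    """'Pixel' model: memorize sin at n evenly spaced points in [lo, hi], nothing else."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    ys = [math.sin(x) for x in xs]

    def predict(x):
        # Return the value at the nearest stored point; outside [lo, hi]
        # this simply repeats the closest endpoint.
        i = bisect.bisect_left(xs, x)
        if i == 0:
            return ys[0]
        if i == len(xs):
            return ys[-1]
        return ys[i] if xs[i] - x < x - xs[i - 1] else ys[i - 1]

    return predict


def sine_from_definition(x, terms=20):
    """Taylor series for sin, plus periodicity to reduce x into (-2*pi, 2*pi)."""
    x = math.fmod(x, 2 * math.pi)
    total, term = 0.0, x
    for k in range(terms):
        total += term
        term *= -x * x / ((2 * k + 2) * (2 * k + 3))  # next odd-power term
    return total


pixels = make_sample_model(0.0, math.pi, 1001)
for x in (1.0, 1.5 * math.pi, 10.0):
    print(f"x={x:.3f}  true={math.sin(x):+.4f}  "
          f"pixels={pixels(x):+.4f}  formula={sine_from_definition(x):+.4f}")
```

Inside the sampled interval the lookup table looks accurate; at 1.5π or 10 it has nothing to say, whereas the definition, because it encodes periodicity, is correct everywhere.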
If I only have 10 million pixels, I can cover only part of the sine function, and if I look only at the region I have covered, I seem to have a pretty good model of it. But in fact I don't really understand the sine function: I don't know the shape of the function, and I don't know its mathematical properties.

I worry that we are fooling ourselves into thinking we are moving toward true intelligence. All we are really doing is adding more and more pixels to something that is not really an intelligent model at all.

I think that when building AI systems, we need to focus on methods that have basic expressive power, the core of which is the ability to make statements about all objects. Suppose I want to write down the rules of Go. These rules must apply to every point on the board: I can say what happens for every x and every y. I can write them in C or Python, in English, or in first-order logic. Each of these languages lets me write down the rules very concisely, because each has the expressive power to state them. I cannot do this in circuits, however, and circuit-based representations (including deep learning systems) cannot represent this class of generalizations.

In my opinion, ignoring this fact and trying to achieve intelligence through big data is ridiculous. It is like saying that you don't need to understand what a Go stone is, because you have billions of training examples. Think about what human intelligence has accomplished: we built LIGO and detected gravitational waves from the other side of the universe. How did we do it? Through knowledge and reasoning. Before building LIGO, where would we have collected training examples? Clearly, our predecessors learned things, including from their sensory experience, and recorded them in expressive languages such as English and mathematics.
We learned from them, came to understand the laws of the universe, and on that basis carried out reasoning, engineering, and design, and so observed a black hole collision on the other side of the universe.

Of course, it is possible to achieve intelligence from big data; many things are possible. It is also possible to evolve a Fortran program that is smarter than humans. But we have spent more than two thousand years understanding knowledge and reasoning, we have developed a large number of excellent techniques based on them, and thousands of useful applications have been built on those techniques. If you are now interested in intelligence but not in knowledge and reasoning, I have nothing to say about that.
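Russell's Go example, a rule stated once for every x and every y, can be sketched in Python. The board encoding (a list of strings using ".", "B", and "W") and the function names are assumptions for illustration; the sketch covers only neighbours and liberties, the basis of the capture rule, and omits ko, suicide, and scoring.

```python
def neighbors(x, y, size):
    """Orthogonally adjacent points of (x, y): one rule, valid for every point."""
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < size and 0 <= ny < size:
            yield nx, ny


def group_and_liberties(board, x, y):
    """Flood-fill the group of stones containing (x, y), which must hold a stone.

    board is a square list of strings; board[y][x] is '.', 'B' or 'W'.
    Returns (stones, liberties) as sets of (x, y) points.
    """
    size = len(board)
    color = board[y][x]
    stones, liberties, stack = {(x, y)}, set(), [(x, y)]
    while stack:
        cx, cy = stack.pop()
        for nx, ny in neighbors(cx, cy, size):
            point = board[ny][nx]
            if point == ".":
                liberties.add((nx, ny))
            elif point == color and (nx, ny) not in stones:
                stones.add((nx, ny))
                stack.append((nx, ny))
    return stones, liberties


def is_captured(board, x, y):
    """A group with no liberties is removed from the board."""
    return len(group_and_liberties(board, x, y)[1]) == 0


BOARD = [
    ".....",
    "..B..",
    ".BWB.",
    "..B..",
    ".....",
]
print(is_captured(BOARD, 2, 2))  # True: the white stone has no liberties
print(is_captured(BOARD, 2, 1))  # False
```

The same few lines work unchanged on a 9x9 or 19x19 board and on positions never seen before, which is the kind of generalization over "every x and every y" the interview describes.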


Everyone's Getting Better At Using AI: Thoughts On Vibe CodingApr 19, 2025 am 11:17 AM

Everyone's Getting Better At Using AI: Thoughts On Vibe CodingApr 19, 2025 am 11:17 AMYou can look at what’s happening in conferences and at trade shows. You can ask engineers what they’re doing, or consult with a CEO. Everywhere you look, things are changing at breakneck speed. Engineers, and Non-Engineers What’s the difference be

Rocket Launch Simulation and Analysis using RocketPy - Analytics VidhyaApr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics VidhyaApr 19, 2025 am 11:12 AMSimulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Notepad++7.3.1

Easy-to-use and free code editor

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),