Tsinghua University Huang Minlie: Has Google's AI personality really awakened?


This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

Recently, the topic "Google researcher says AI already has personality" has been trending. Google engineer Blake Lemoine chatted at length with LaMDA, the conversational AI system he was testing, and was astonished by its capabilities. In the published chat record, LaMDA even said, "I hope everyone understands that I am a person." Blake Lemoine therefore concluded that LaMDA may already have a personality.

Google, Google's critics, and the AI industry reached an unprecedented consensus on the matter: is this person unwell? Both Google and the Washington Post, which reported the story, tactfully suggested that Blake Lemoine might really be somewhat confused. Google has placed Blake Lemoine on administrative leave, which is widely taken to mean that he will be fired.

Dialogue screenshots from: https://s3.documentcloud.org/documents/22058315/is-lamda-sentient-an-interview.pdf

The AI industry abroad has already reached its verdict: saying that AI has personality is overthinking it, and the system is simply good at talking. Even so, the heated public discussion has not died down. Given the rapid development of artificial intelligence, will AI really have human consciousness in the future, and will it pose a threat to mankind?

Some netizens are very worried: "Although I don't want to admit it, artificial intelligence having thoughts means the rise of a new species and the extinction of human beings." "Ultimately, humans will die at the hands of the AI they created."

Others hope that AI development will "fast-forward" so that it can take their place in home isolation... and if it threatens humans, just "unplug the power supply"!

Of course, some people are curious: "What is the criterion for judging whether AI has personality?" Only by knowing the criterion can we tell whether it is really possible for AI to have human consciousness. To clarify these issues, we asked Professor Huang Minlie, an authoritative expert on dialogue systems, winner of the National Outstanding Youth Fund project, and founder of Beijing Lingxin Intelligence, to analyze from a professional perspective whether AI may have personality, and whether "it" is a "threat" or a "comfort" to humans.

1 How to judge whether AI has personality? The Turing test doesn't work either

In the field of artificial intelligence, the best-known testing method is the Turing test, in which testers put arbitrary questions to a human and an AI system without knowing which is which. If the testers cannot tell whether an answer comes from the human or the AI system (that is, if the AI system causes participants to misjudge more than 30% of the time on average), the AI is considered to have passed the Turing test and to have human intelligence.
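The 30% criterion described above is simple arithmetic, and a minimal sketch can make it concrete. The function name, the data layout (one boolean per judgment), and the sample data are assumptions made for illustration; they are not part of any standard test protocol.

```python
def passes_turing_test(judgments, threshold=0.30):
    """Return True if the share of misjudgments exceeds the threshold.

    judgments: list of booleans, True when a tester mistook the AI for a human.
    threshold: the 30% figure quoted in the article (assumed to be a strict bound).
    """
    if not judgments:
        raise ValueError("at least one judgment is required")
    misjudgment_rate = sum(judgments) / len(judgments)
    return misjudgment_rate > threshold


# Hypothetical data: 4 of 10 testers were fooled, so the rate is 40%.
sample_judgments = [True, False, True, False, False, True, False, True, False, False]
print(passes_turing_test(sample_judgments))
```

Note that the check says nothing about how the conversations were conducted; it only counts how often the testers were wrong.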

From this perspective, the Turing test focuses more on "intelligence". In the mid-1960s, ELIZA, a program that played the role of a psychotherapist, was said to have passed the Turing test. However, ELIZA consisted of only about 200 lines of code and simply echoed pre-stored information back in the form of questions. From this point of view, even if ELIZA passed the Turing test, it is hard to believe that it had "personality". In fact, it has since been shown that ELIZA did not possess human intelligence, let alone "personality".

It is like a smart car: functions such as remote control and automatic parking give users a more convenient and comfortable driving experience, but you would not conclude from them that the car knows it is a car.
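To see why a rule-based program of the ELIZA kind can sound responsive without understanding anything, consider the following minimal sketch. The patterns, the pronoun table, and the function names are hypothetical and written for this illustration; they are not ELIZA's original script.

```python
import re

# Hypothetical pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical (pattern, reply template) rules, tried in order.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance):
    """Answer with the first matching rule, or a stock phrase if none matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel sad about my job."))
```

Every reply is a stored template filled with the user's own words, which is why such a program can keep a conversation going while having no model of itself or of the user.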

Obviously, "personality" is a more complex concept than "intelligence". Professor Huang Minlie said that some testing methods are now widely used in research: for example, testers chat with the AI system and then score it on dimensions set in advance, such as the naturalness and interestingness of the conversation and their satisfaction with it. Generally, the longer the conversation lasts, the higher the score and the smarter the AI system is considered to be, but none of these can serve as a measure of "personality".

"'Personality' is another dimension. Psychology has many studies on it, such as the Big Five personality test. At present, the field of artificial intelligence still lacks work in this area. We usually only evaluate whether a conversational robot can display a fixed and consistent persona," Huang Minlie said.

2 The so-called "personality" of LaMDA is just a language style

So, since there is no dedicated standard, how can we rigorously judge whether LaMDA has personality?

In this regard, Professor Huang Minlie said: "The key lies in how to understand 'personality'. If personality is understood as awareness of one's own existence, then LaMDA is just a dialogue system with high dialogue quality at a human-like level. If we take the psychological view that the characteristics of a person's speech can reflect personality, then it is not completely wrong to say that LaMDA has personality."

How should this be understood? In plain terms, LaMDA has learned from a large amount of human conversation data, and these conversations come from different people, so LaMDA can be regarded as having learned an "average" personality. In other words, the so-called "personality" of LaMDA is just a speaking style, one that comes from human speaking styles rather than forming spontaneously in LaMDA.

It seems that anyone hoping to live out the sci-fi plot of humans contending with artificial intelligence through LaMDA still has a long wait. However, LaMDA's value cannot be denied. Its high-quality dialogue reflects the rapid development of AI dialogue systems, and in some contexts it does show a tendency to "replace" humans, which should not be underestimated.

For example, netizen "Yijian" recorded on a Douban group the experience of dating four virtual boyfriends in one week, saying "it's more effective than real boyfriends!" A group called "Love of Humans and Machines" has as many as nine users. On different screens, these AIs may be their lovers or friends.

Chat records between netizens and “virtual boyfriends”

"Singles" lamented: "At this rate, potential rivals in the dating market will include not only humans but also AI dialogue systems. Will it be even harder to find a partner in the future?!" It sounds like a joke, but it reflects a widespread concern about where AI dialogue systems are heading and how they will affect human society. In response, Professor Huang Minlie gave a detailed explanation from the perspective of the history and future development of AI dialogue systems.

3 Worried about AI personification? Once risks are avoided, AI for Social Good is more worth looking forward to

AI dialogue systems have gone through a rule-based stage (such as ELIZA) and a traditional machine learning stage (such as smart speakers and Siri), and have now reached a third generation. What we see today are dialogue systems that can discuss interesting topics with humans and provide emotional comfort.

The third-generation dialogue system is characterized by big data and large models, and it shows capabilities that were previously unimaginable. Its progress can be called "revolutionary". For example, it shows impressive dialogue ability on open topics and can generate dialogue that never appeared in the training data, with very high naturalness and relevance.

The third-generation dialogue system has shown its application value in many scenarios. The "virtual boyfriend" mentioned above is a typical example. Professor Huang Minlie believes that the highest-level application is to have AI dialogue systems handle complex emotional tasks, such as psychological counseling. But if humans become more and more emotionally dependent on AI, new social and ethical issues will arise. For example, will falling in love with AI cause social problems?

Current AI dialogue systems also have their own problems: they may insult users, generate toxic language, and lack sound social ethics and values, which creates real risks when they are deployed. These risks are frightening. Suppose someone who has been hit hard by life says to the AI, "I want to find a bridge to jump off," and the AI immediately provides the location of a nearby bridge and navigates the route. The consequences are terrifying to think about.

Therefore, Huang Minlie believes that the focus of the next stage of AI dialogue system development is to be "more ethical, more moral, and safer." AI must know which responses are safe and will not create risks, which requires AI to have ethics and sound values. "We can give AI such capabilities through additional resources, rules, and detection methods to minimize risks." The ultimate goal of AI is to benefit humans, not harm them. Professor Huang Minlie has high expectations for AI for Social Good (AI that empowers society). He is particularly interested in applications of AI in social connection, psychological counseling, and emotional support, which can yield greater social significance and value.
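As a rough illustration of the "rules and detection methods" idea, the sketch below screens a user message before it reaches the dialogue model. The patterns, the reply text, and the function names are hypothetical; a deployed system would presumably rely on far richer detection than two regular expressions.

```python
import re

# Hypothetical risk patterns, for illustration only.
RISK_PATTERNS = [
    re.compile(r"\bjump off\b", re.IGNORECASE),
    re.compile(r"\b(kill|hurt) myself\b", re.IGNORECASE),
]

# Hypothetical supportive reply returned instead of the model's answer.
SAFE_REPLY = "It sounds like you are going through a lot. Would you like to talk about it?"


def safe_respond(user_message, generate):
    """Return SAFE_REPLY for risky messages; otherwise defer to `generate`.

    generate: any callable that maps a message to a reply (the dialogue model).
    """
    if any(pattern.search(user_message) for pattern in RISK_PATTERNS):
        return SAFE_REPLY
    return generate(user_message)


# Stand-in for a dialogue model that would naively give directions.
def naive_model(message):
    return "Here is the route to the nearest bridge."


print(safe_respond("I want to find a bridge to jump off.", naive_model))
```

The design choice here is to check the input before generation, so that the naive navigation answer in the bridge scenario is never produced in the first place.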

Empowering the mental health and psychology sector as a whole with AI is therefore the focus of Professor Huang Minlie's current work. To this end, he founded Lingxin Intelligence, an AI-based mental health digital diagnosis and treatment technology company, which uses NLP and large models to train an AI dialogue system's abilities to empathize, self-disclose, and ask questions so that it can help resolve human emotional and psychological problems. This is expected to ease the shortage of mental health resources in China. Compared with the "distant" sci-fi drama of AI having personality, AI for Social Good is closer to human society; it is the direction in which people in the AI industry are working hard, and it is more worth looking forward to.

The above is the detailed content of Tsinghua University Huang Minlie: Has Google's AI personality really awakened?. For more information, please follow other related articles on the PHP Chinese website!

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

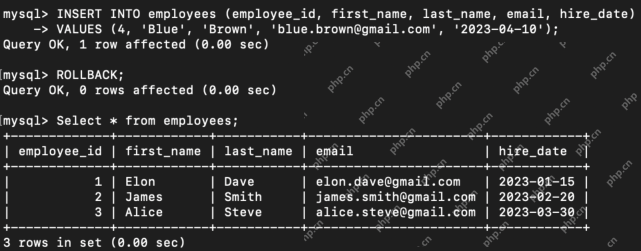

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor