A trend is gradually emerging in which general-purpose large models replace proprietary models customized for specific tasks, and this has significantly reduced the marginal cost of applying AI models. This raises a question: is it feasible to perform zero-shot information extraction without any training?

Information extraction is an important part of building a knowledge graph. If it could be done without any training at all, it would greatly lower the barrier to data analysis and help automate knowledge base construction.

Using prompt engineering on GPT-3.5, we built a general zero-shot IE system, GPT4IE (GPT for Information Extraction), and found that GPT-3.5 can automatically extract structured information from raw sentences. The tool supports both Chinese and English, and its code is open source.

Tool URL: https://cocacola-lab.github.io/GPT4IE/

Code: https://github.com/cocacola-lab/GPT4IE

## 1 Background

The goal of information extraction (IE) is to extract structured information from unstructured text. Typical IE tasks include entity-relation triple extraction (RE), named entity recognition (NER) and event extraction (EE) [1][2][3][4][5]. Many studies have begun to explore zero-shot and few-shot IE in order to automate such work, for example clinical IE [6].

Recently, large-scale pre-trained language models (LLMs) have performed extremely well on many downstream tasks, often needing only a few examples as guidance and no fine-tuning. This raises a question: is it feasible to perform zero-shot IE purely through prompts? We use prompting to build a general zero-shot IE system for GPT-3.5, GPT4IE (GPT for Information Extraction). By combining GPT-3.5 with prompts, it can automatically extract structured information from raw sentences.

## 2 Technical Framework

We design a task-specific prompt template. The slots in the template are filled with the user's input to form a prompt, which is fed into GPT-3.5 to perform IE. Three tasks are supported, RE, NER and EE, and each works in both Chinese and English. The user enters a sentence and specifies the lists of extraction types (i.e., a relation type list, head entity type list, tail entity type list, entity type list, or event type list). The details are as follows:
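The template-and-slot step can be sketched in Python as below. This is a minimal illustration under assumptions, not GPT4IE's actual code: the template wording, the `TEMPLATES` dict and the `build_prompt`/`build_request` helpers are hypothetical, and the request body assumes OpenAI's Chat Completions format with the `gpt-3.5-turbo` model.

```python
import json

# Hypothetical task-specific templates; GPT4IE's real prompt wording may differ.
# Each {name} is a slot that is filled from the user's input.
TEMPLATES = {
    "RE": (
        "Relation types: {rtl}\nSubject types: {stl}\nObject types: {otl}\n"
        "Extract every (subject, relation, object) triple from the sentence.\n"
        "Sentence: {sentence}"
    ),
    "NER": (
        "Entity types: {etl}\n"
        "Extract every (entity type, entity) pair from the sentence.\n"
        "Sentence: {sentence}"
    ),
    "EE": (
        "Event types and argument roles: {etl}\n"
        "Extract every event and its arguments from the sentence as JSON.\n"
        "Sentence: {sentence}"
    ),
}


def build_prompt(task: str, sentence: str, **slots) -> str:
    """Fill the slots of the task-specific template to form the prompt.

    Raises KeyError if the task is unknown or a required slot is missing.
    """
    return TEMPLATES[task].format(sentence=sentence, **slots)


def build_request(prompt: str, model: str = "gpt-3.5-turbo") -> str:
    """Wrap the prompt in a Chat Completions request body (assumed format)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,  # a low temperature makes the output more stable
    }
    return json.dumps(body, ensure_ascii=False)


if __name__ == "__main__":
    prompt = build_prompt(
        "NER",
        "Bob worked for Google in Beijing, the capital of China.",
        etl=["LOC", "MISC", "ORG", "PER"],
    )
    print(prompt)
    print(build_request(prompt))
```

Keeping the templates as plain format strings means adding a task or a language only requires a new template entry; the request body is built but not sent, so the API key handling stays separate.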

The goal of the RE task is to extract triples from text, such as "(China, capital, Beijing)" and "(Ruyi's Royal Love in the Palace, starring, Zhou Xun)". The required input format is as follows (items marked with "*" are optional; we set default values for them, but for flexibility users can also supply their own lists; the same applies below):

- Input Sentence: Input text

- Relation type list (rtl)* : ['Relation type 1', 'Relation type 2', ...]

- Subject type list (stl)* : ['Head entity type 1', 'Head entity type 2', ...]

- Object type list (otl)* : ['Tail entity type 1', 'Tail entity type 2', ...]

- OpenAI API key: your OpenAI API key (some of our available keys are provided on GitHub for trying out the examples)
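Because GPT-3.5 answers in free text, its reply has to be turned back into structured triples. A minimal Python sketch, assuming the model lists triples in the "(head, relation, tail)" form shown above; `parse_triples` is a hypothetical helper, and its regular expression assumes no commas or parentheses inside an entity or relation name. Triples whose relation is not in the user's relation type list are discarded.

```python
import re

# One "(head, relation, tail)" group; fields may not contain commas or parentheses.
TRIPLE_RE = re.compile(r"\(\s*([^(),]+?)\s*,\s*([^(),]+?)\s*,\s*([^(),]+?)\s*\)")


def parse_triples(reply, allowed_relations=None):
    """Extract (head, relation, tail) tuples from a free-text model reply.

    If allowed_relations is given, triples with any other relation are dropped.
    """
    triples = TRIPLE_RE.findall(reply)  # three groups -> list of 3-tuples
    if allowed_relations is not None:
        allowed = set(allowed_relations)
        triples = [t for t in triples if t[1] in allowed]
    return triples


if __name__ == "__main__":
    reply = "(China, capital, Beijing)\n(Bob, friend_of, Alice)"
    print(parse_triples(reply, ["capital"]))  # [('China', 'capital', 'Beijing')]
```

Filtering against the relation type list is a cheap guard against the model inventing relations outside the requested schema.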

The NER task extracts entities from text, such as "(LOC, Beijing)" and "(Person, Zhou Enlai)". Its input format is as follows:

- Input Sentence: Input text

- Entity type list (etl)* : ['Entity type 1', 'Entity type 2', ...]

- OpenAI API key: OpenAI API key
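For NER, the same idea applies to "(entity type, entity)" pairs. The sketch below is an assumption about how such a reply could be post-processed, not GPT4IE's code: the hypothetical `parse_entities` helper groups mentions by type, keeps only the types in the user's entity type list, and drops duplicate mentions.

```python
import re

# One "(entity type, entity)" pair; fields may not contain commas or parentheses.
PAIR_RE = re.compile(r"\(\s*([^(),]+?)\s*,\s*([^(),]+?)\s*\)")


def parse_entities(reply, entity_types):
    """Group entities from a free-text reply by type, keeping only requested types."""
    allowed = set(entity_types)
    grouped = {}
    for etype, mention in PAIR_RE.findall(reply):
        if etype not in allowed:
            continue  # the model proposed a type the user did not ask for
        mentions = grouped.setdefault(etype, [])
        if mention not in mentions:  # drop duplicate mentions
            mentions.append(mention)
    return grouped


if __name__ == "__main__":
    reply = "(LOC , Beijing) (ORG, Google) (PER, Bob) (DATE, today) (LOC, Beijing)"
    print(parse_entities(reply, ["LOC", "MISC", "ORG", "PER"]))
    # {'LOC': ['Beijing'], 'ORG': ['Google'], 'PER': ['Bob']}
```

The regular expression tolerates stray spaces such as "(LOC , Beijing)", which free-form model output may contain.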

The EE task extracts events from plain text, such as "{Life-Divorce: {Person: Bob, Time: today, Place: America}}" and "{Competition Behavior-Promotion: {Time: None, Promotion Party: Northwest Wolves, Promotion Event: Battle for the top spot in the Chinese Premier League}}". Its input format is as follows:

- Input Sentence: Input text

- Event type list (etl)* : {'Event type 1': ['Argument role 1', 'Argument role 2', ...], ...}

- OpenAI API key: OpenAI API key
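For EE, the user's event type list doubles as a schema that the model's answer can be checked against. A minimal sketch, assuming the model has been asked to reply in JSON (for example `{"Life:Divorce": {"Person": "Bob"}}`); `normalize_events` is a hypothetical helper. It drops event types outside the list, keeps only each type's declared argument roles, and fills missing roles with `None`, mirroring the "Time: None" in the example above.

```python
import json


def normalize_events(reply: str, schema: dict) -> dict:
    """Check a JSON event reply against the user's event type list.

    schema maps each event type to its list of argument roles.
    """
    try:
        events = json.loads(reply)
    except json.JSONDecodeError:
        return {}  # the reply was not valid JSON; treat it as no events
    if not isinstance(events, dict):
        return {}
    cleaned = {}
    for event_type, args in events.items():
        roles = schema.get(event_type)
        if roles is None or not isinstance(args, dict):
            continue  # event type not requested, or malformed arguments
        # Keep only declared roles; roles the model left out become None.
        cleaned[event_type] = {role: args.get(role) for role in roles}
    return cleaned


if __name__ == "__main__":
    schema = {
        "Life:Divorce": ["Person", "Time", "Place"],
        "Life:Injure": ["Agent", "Victim", "Instrument", "Time", "Place"],
    }
    # Hypothetical reply, not real GPT-3.5 output.
    reply = ('{"Life:Divorce": {"Person": "Bob", "Place": "Guangzhou", "Mood": "sad"},'
             ' "Life:Marry": {"Person": "Bob"}}')
    print(normalize_events(reply, schema))
    # {'Life:Divorce': {'Person': 'Bob', 'Time': None, 'Place': 'Guangzhou'}}
```

Requesting JSON and validating it against the schema turns schema violations (an unlisted event type or an invented role) into silent drops rather than malformed results.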

## 3 Tool Usage Examples

## 3.1 RE Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

rtl: ['location-located_in', 'administrative_division-country', 'person-place_lived', 'person-company', 'person-nationality', 'company-founders', 'country-administrative_divisions', 'person-children', 'country-capital', 'deceased_person-place_of_death', 'neighborhood-neighborhood_of', 'person-place_of_birth']

stl: ['organization', 'person', 'location', 'country']

otl: ['person', 'location', 'country', 'organization', 'city']

Output:

## 3.2 RE Example 2

Input:

Input Sentence: "Ruyi's Royal Love in the Palace" is a period palace drama TV series directed by Wang Jun, starring Zhou Xun, Huo Jianhua, Zhang Junning, Dong Jie, Xin Zhilei, Tong Yao, Li Chun, Wu Junmei and others.

rtl: ['Album', 'Date of establishment', 'Altitude', 'Official language', 'Area', 'Father', 'Singer', 'Producer', 'Director', 'Capital', 'Starring', 'Chairman', 'Ancestral home', 'Wife', 'Mother', 'Climate', 'Area', 'Protagonist', 'Postal code', 'Abbreviation', 'Production company', 'Registered capital', 'Screenwriter', 'Founder', 'Graduation school', 'Nationality', 'Professional code', 'Dynasty', 'Author', 'Lyrics', 'City', 'Guest', 'Headquarters location', 'Population', 'Spokesperson', 'Adapted from', 'Principal', 'Husband', 'Host', 'Theme song', 'Years of study', 'Composition', 'Number', 'Release time', 'Box office', 'Acting', 'Dubbing', 'Award-winning']

stl: ['Country', 'Administrative Region', 'Literary Works', 'Characters', 'Film and Television Works', 'School', 'Book Works', 'Place', 'Historical Figures', 'Attractions', 'Song', 'Subject Major', 'Enterprise', 'TV Variety Show', 'Institution', 'Enterprise/Brand', 'Entertainment Figure']

otl: ['Country', 'Person', 'Text', 'Date', 'Place', 'Climate', 'City', 'Song', 'Enterprise', 'Number', 'Music Album', 'School', 'Work', 'Language']

Output:

## 3.3 NER Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

etl: ['LOC', 'MISC', 'ORG', 'PER']

Output:

## 3.4 NER Example 2

Input:

Input Sentence: In the past five years, under the guidance of Deng Xiaoping Theory, the Zhigong Party has followed the basic line for the primary stage of socialism and worked hard to carry out the basic tasks set by its 10th National Congress: performing its functions as a participating party and strengthening its own development.

etl: ['Organization', 'Location', 'People']

Output:

## 3.5 EE Example 1

Input:

Input Sentence: Yesterday Bob and his wife got divorced in Guangzhou.

etl: {'Personnel:Elect': ['Person', 'Entity', 'Position', 'Time', 'Place'], 'Business:Declare-Bankruptcy': ['Org', 'Time', 'Place'], 'Justice:Arrest-Jail': ['Person', 'Agent', 'Crime', 'Time', 'Place'], 'Life:Divorce': ['Person', 'Time', 'Place'], 'Life:Injure': ['Agent', 'Victim', 'Instrument', 'Time', 'Place']}

Output:

## 3.6 EE Example 2

Input:

Input Sentence: In the 2022 Qatar World Cup final, Argentina narrowly defeated France in a penalty shootout.

etl: {'Organizational Behavior-Strike': ['Time', 'Affiliation', 'Number of Strikers', 'Strike Personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limit Up': ['Time', 'Limit-Up Stock'], 'Organizational Relations-Dismissal': ['Time', 'Dismissing Party', 'Dismissed Person']}

Output:

## 3.7 EE Example 3 (an interesting failure case)

Input:

Input Sentence: I divorced him today

etl: {'Organizational Behavior-Strike': ['Time', 'Affiliation', 'Number of Strikers', 'Strike Personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limit Up': ['Time', 'Limit-Up Stock'], 'Organizational Relations-Dismissal': ['Time', 'Dismissing Party', 'Dismissed Person']}

Output:

The above is the detailed content of Zero-sample information extraction by talking to GPT. For more information, please follow other related articles on the PHP Chinese website!

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AM

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AMRevolutionizing the Checkout Experience Sam's Club's innovative "Just Go" system builds on its existing AI-powered "Scan & Go" technology, allowing members to scan purchases via the Sam's Club app during their shopping trip.

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AM

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AMNvidia's Enhanced Predictability and New Product Lineup at GTC 2025 Nvidia, a key player in AI infrastructure, is focusing on increased predictability for its clients. This involves consistent product delivery, meeting performance expectations, and

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

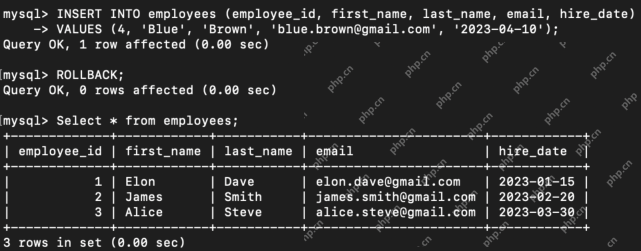

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor