
The winning rate against humans is 84%: DeepMind AI reaches human expert level in Stratego for the first time

DeepMind has made new progress in game AI, this time in the board game Stratego.

In game AI, the progress of artificial intelligence is often demonstrated through board games. Board games make it possible to measure and evaluate how humans and machines develop and execute strategies in a controlled environment. For decades, the ability to plan ahead has been key to AI's success in perfect-information games such as chess, checkers, shogi, and Go, as well as in imperfect-information games such as poker and Scotland Yard.

Stratego has become one of the next frontiers of AI research. The stages and mechanics of the game are visualized in Figure 1a. The game poses two challenges.

First, Stratego's game tree has 10^535 possible states, more than both no-limit Texas Hold'em, a well-studied imperfect-information game (10^164 possible states), and Go (10^360 possible states).

Second, acting in a given situation in Stratego requires reasoning over 10^66 possible deployments for each player at the start of the game, whereas poker has only 10^3 possible pairs of hands. Perfect-information games such as Go and chess have no private deployment phase, and so avoid the complexity this challenge brings to Stratego.
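The orders of magnitude above can be checked with a little counting. The sketch below is only a back-of-the-envelope illustration: it assumes the standard 40-piece Stratego Classic army (the piece counts are not given in this article) and assumes the poker figure refers to two hole cards drawn from a 52-card deck. Under those assumptions, one side's hidden setup has on the order of 10^33 arrangements, and the two setups taken together on the order of 10^66, which is one way to arrive at the figure quoted above.

```python
import math

# Assumed piece counts for one army in Stratego Classic (40 pieces in total).
PIECE_COUNTS = {
    "Flag": 1, "Bomb": 6, "Spy": 1, "Scout": 8, "Miner": 5,
    "Sergeant": 4, "Lieutenant": 4, "Captain": 4, "Major": 3,
    "Colonel": 2, "General": 1, "Marshal": 1,
}


def deployments_per_player(counts):
    """Distinct ways to place the pieces on the 40 starting squares.

    This is a multinomial coefficient: identical pieces are interchangeable.
    """
    ways = math.factorial(sum(counts.values()))
    for n in counts.values():
        ways //= math.factorial(n)
    return ways


def order_of_magnitude(n):
    """Largest k such that 10**k <= n, for a positive integer n."""
    return len(str(n)) - 1


one_side = deployments_per_player(PIECE_COUNTS)
both_sides = one_side ** 2          # both players deploy independently
poker_hands = math.comb(52, 2)      # two hole cards from a 52-card deck

print("one side:   ~10^%d" % order_of_magnitude(one_side))
print("both sides: ~10^%d" % order_of_magnitude(both_sides))
print("poker hands: %d (~10^%d)" % (poker_hands, order_of_magnitude(poker_hands)))
```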

Currently, neither state-of-the-art (SOTA) model-based perfect-information planning techniques nor imperfect-information search techniques that decompose the game into independent situations can be applied to Stratego.

For these reasons, Stratego provides a challenging benchmark for studying policy interactions at large scale. Like most board games, Stratego tests our ability to make relatively slow, thoughtful and logical decisions in sequence. Because the structure of the game is so complex, the AI research community has made little progress on it, and AI agents have so far only reached the level of human amateur players. Developing an agent that learns end-to-end to make optimal decisions under Stratego's imperfect information, starting from scratch and without human demonstration data, therefore remains one of the major challenges in AI research.

In a recent paper from DeepMind, researchers proposed DeepNash, an agent that learns to play Stratego through self-play in a model-free way, without human demonstrations. DeepNash defeated previous SOTA AI agents and reached the level of expert human players in the game's most complex variant, Stratego Classic.

Paper address: https://arxiv.org/pdf/2206.15378.pdf.


The core of DeepNash is a principled, model-free reinforcement learning algorithm that the researchers call Regularized Nash Dynamics (R-NaD). DeepNash combines R-NaD with a deep neural network architecture and converges to an approximate Nash equilibrium, meaning that it learns to play at a highly competitive level and is robust to opponents trying to exploit it.

Figure 1b is a high-level overview of the DeepNash method. The researchers systematically compared its performance with various SOTA Stratego bots and with human players on the Gravon gaming platform. The results show that DeepNash defeated all current SOTA bots with a winning rate of more than 97% and was highly competitive against human players: it ranked in the top 3 on both the 2022 leaderboard and the all-time leaderboard, with a winning rate of 84%.

The researchers said this is the first time an AI algorithm has reached the level of human experts in a complex board game without deploying any search method in the learning algorithm, and also the first time AI has reached human expert level in Stratego.

Method Overview

DeepNash uses a policy learned end-to-end to play Stratego, including strategically placing its pieces on the board at the beginning of the game (see Figure 1a). For the game-play phase, the researchers integrated deep RL with game-theoretic methods. The agent aims to learn an approximate Nash equilibrium through self-play.

The research takes an orthogonal path that does not rely on search, and proposes a new method that combines model-free reinforcement learning in self-play with a game-theoretic algorithmic idea, Regularized Nash Dynamics (R-NaD).

The model-free part means that the research does not build an explicit opponent model to track the possible states of the opponent. The game-theoretic part is based on the idea of steering the agent's learning behavior, on top of the reinforcement learning method, toward a Nash equilibrium. The main advantage of this combined approach is that there is no need to explicitly model the private state from the public state. An additional, difficult challenge is to scale this combination of model-free reinforcement learning and R-NaD so that an agent trained by self-play in Stratego can compete with human expert players, something that had not been achieved before. The combined DeepNash method is shown in Figure 1b.

Regularized Nash Dynamics Algorithm

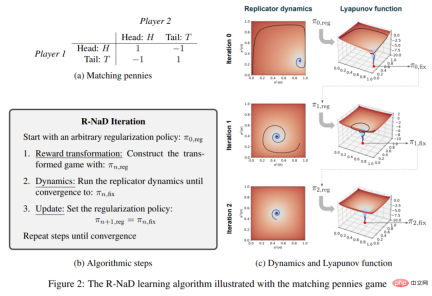

The R-NaD learning algorithm used in DeepNash is based on the idea of regularization to achieve convergence. R-NaD relies on three key steps, as shown in Figure 2b.
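As a rough illustration of the regularization idea, the following sketch applies it to a tiny two-player zero-sum matrix game instead of Stratego. It assumes the three steps are a reward transformation (a penalty for drifting away from a reference policy), a dynamics step that runs until it settles at a fixed point, and an update that makes that fixed point the new reference policy. The payoff matrix, the hyperparameters `eta` and `lr`, and the exponential-weights discretization of the dynamics are all choices made for this example, not details taken from the paper, and DeepNash itself uses neural networks rather than tabular policies.

```python
import math


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def regularized_values(payoff, opponent, policy, reg_policy, eta):
    """Per-action expected payoff minus the penalty eta * log(pi / pi_reg).

    The opponent's own regularization term is omitted: it is the same for
    every action of this player and cancels when the policy is normalized.
    """
    return [
        sum(r * o for r, o in zip(row, opponent))
        - eta * math.log(policy[a] / reg_policy[a])
        for a, row in enumerate(payoff)
    ]


def rnad_matrix_game(A, eta=0.2, lr=0.05, outer_steps=20, inner_steps=2000):
    """Tabular sketch of regularized dynamics on a zero-sum matrix game.

    A[a][b] is the row player's payoff; the column player receives -A[a][b].
    """
    n_rows, n_cols = len(A), len(A[0])
    # Column player's payoffs, indexed [own action][opponent action].
    B = [[-A[a][b] for a in range(n_rows)] for b in range(n_cols)]
    x = [1.0 / n_rows] * n_rows   # row player's policy
    y = [1.0 / n_cols] * n_cols   # column player's policy
    for _ in range(outer_steps):
        # Update step: the last fixed point becomes the reference policy.
        x_reg, y_reg = x[:], y[:]
        for _ in range(inner_steps):
            # Reward transformation: payoffs penalized by distance to reference.
            qx = regularized_values(A, y, x, x_reg, eta)
            qy = regularized_values(B, x, y, y_reg, eta)
            # Dynamics: simultaneous exponential-weights step for both players.
            x = normalize([p * math.exp(lr * q) for p, q in zip(x, qx)])
            y = normalize([p * math.exp(lr * q) for p, q in zip(y, qy)])
    return x, y


# Example game whose unique equilibrium mixes 40% / 60% for both players.
A = [[2.0, -1.0], [-1.0, 1.0]]
x, y = rnad_matrix_game(A)
print([round(p, 3) for p in x], [round(p, 3) for p in y])
```

In this example the equilibrium can be verified by hand: each player is indifferent between its two actions exactly when the other plays its first action with probability 2/5. Without the penalty term, dynamics of this kind tend to circle around such a mixed equilibrium instead of settling on it, which is the motivation for regularizing.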

DeepNash consists of three components: (1) the core training component, R-NaD; (2) fine-tuning of the learned policy to reduce the residual probability of the model taking highly unlikely actions; and (3) post-processing at test time to filter out low-probability actions and correct errors.
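The test-time filtering in component (3) can be pictured as thresholding a probability distribution and renormalizing what is left. The snippet below is a minimal sketch of that idea only: the function name, the threshold value, and the fallback to the single most likely action are assumptions made for illustration, not the procedure used in the paper.

```python
def filter_low_probability(policy, threshold=0.01):
    """Zero out actions with probability below `threshold`, then renormalize.

    `threshold` is a hypothetical value chosen for this example.
    """
    kept = [p if p >= threshold else 0.0 for p in policy]
    total = sum(kept)
    if total == 0.0:
        # Assumed fallback: if nothing survives, play the most likely action.
        best = max(range(len(policy)), key=policy.__getitem__)
        return [1.0 if i == best else 0.0 for i in range(len(policy))]
    return [p / total for p in kept]


policy = [0.60, 0.395, 0.004, 0.001]
filtered = filter_low_probability(policy)
print(filtered)
```

The two unlikely actions can no longer be sampled, and their probability mass is redistributed proportionally over the remaining actions.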

DeepNash's network consists of a U-Net backbone with residual blocks and skip connections, followed by four heads. The first head outputs the value function as a scalar, while the remaining three heads encode the agent's policy by outputting probability distributions over its actions during deployment and game-play. The structure of the observation tensor is shown in Figure 3.
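To make the "one value head plus three policy heads" layout concrete, here is a toy sketch in plain Python. It is not the DeepNash network: the U-Net backbone is replaced by a fixed feature vector, each head is a single random linear layer, and the head names, feature size, and action counts are hypothetical placeholders chosen for this example.

```python
import math
import random


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, features):
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def make_head(n_out, n_in, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]


rng = random.Random(0)
N_FEATURES = 8  # hypothetical size of the backbone output

# Hypothetical head names and output sizes, for illustration only.
value_head = make_head(1, N_FEATURES, rng)
policy_heads = {
    "deployment": make_head(5, N_FEATURES, rng),
    "piece_selection": make_head(6, N_FEATURES, rng),
    "piece_movement": make_head(4, N_FEATURES, rng),
}


def forward(features):
    """Return a scalar value and one probability distribution per policy head."""
    value = linear(value_head, features)[0]
    policies = {
        name: softmax(linear(weights, features))
        for name, weights in policy_heads.items()
    }
    return value, policies


features = [rng.uniform(-1.0, 1.0) for _ in range(N_FEATURES)]  # stand-in for backbone output
value, policies = forward(features)
print(value, {name: len(dist) for name, dist in policies.items()})
```

All heads read the same shared features, so the value estimate and the policies are computed in one pass over the observation.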

Experimental results

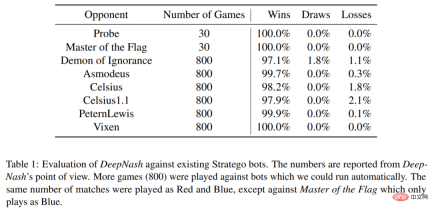

DeepNash was also evaluated against several existing Stratego computer programs: Probe won the Computer Stratego World Championship in three years (2007, 2008, 2010); Master of the Flag won the championship in 2009; Demon of Ignorance is an open-source implementation of Stratego; Asmodeus, Celsius, Celsius1.1, PeternLewis and Vixen were programs submitted to an Australian university programming competition in 2012, which PeternLewis won.

As shown in Table 1, DeepNash won the vast majority of games against all of these agents, even though it was never trained against them and used only self-play.

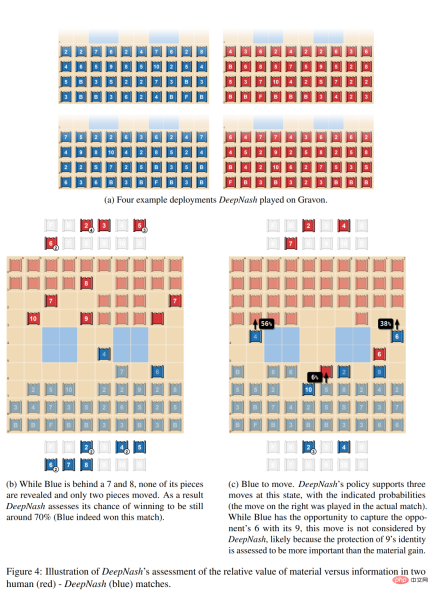

Figure 4a illustrates some of the deployments that DeepNash repeats frequently. Figure 4b shows a situation in which DeepNash (blue) is behind in material (it has lost a 7 and an 8) but ahead in information, because the red opponent's 10, 9, 8 and two 7s have been revealed. The second example, in Figure 4c, shows DeepNash with an opportunity to capture the opponent's 6 with its 9; it did not take this move, probably because it judged protecting the identity of the 9 to be more important than the material gain.

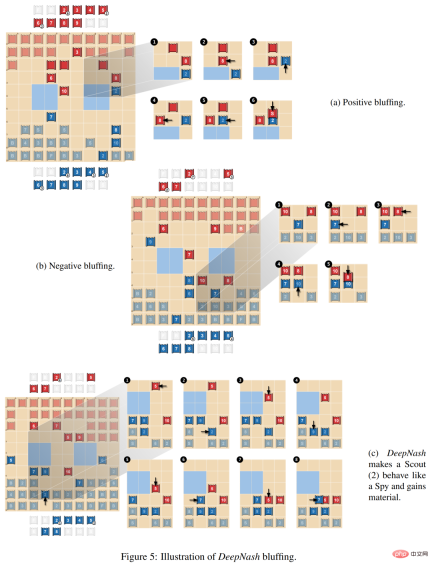

In Figure 5a, the researchers demonstrate positive bluffing, where a player pretends that a piece is worth more than it actually is. DeepNash chases the opponent's 8 with an unrevealed Scout (2), pretending that it is a 10. The opponent thinks the piece might be a 10 and lures it next to its Spy (which can capture a 10). However, when the opponent's Spy tries to capture the piece, it loses to DeepNash's Scout.
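The bluff works because of how attacks are resolved. The sketch below encodes a simplified version of the standard Stratego combat rules as an assumption (they are not spelled out in this article): the higher rank wins, equal ranks remove each other, and a Spy defeats a 10 only when the Spy is the attacker. Bombs, Miners and the Flag are left out to keep the example short.

```python
SPY, SCOUT, MARSHAL = 1, 2, 10  # ranks, with 10 as the strongest piece


def resolve_attack(attacker, defender):
    """Return which side survives: 'attacker', 'defender', or 'neither'.

    Simplified rules, assumed for illustration; Bombs, Miners and the Flag
    are ignored.
    """
    if attacker == SPY and defender == MARSHAL:
        return "attacker"      # the Spy's only winning attack
    if attacker == defender:
        return "neither"       # equal ranks are both removed
    return "attacker" if attacker > defender else "defender"


# What the opponent hoped for: its Spy attacks a 10 and wins.
print(resolve_attack(SPY, MARSHAL))
# What actually happened: the unrevealed piece was a Scout, so the Spy loses.
print(resolve_attack(SPY, SCOUT))
```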

The second type of bluffing is negative bluffing, shown in Figure 5b. It is the opposite of positive bluffing: the player pretends that a piece is worth less than it actually is.

Figure 5c shows a more complex bluff, in which DeepNash brings its unrevealed Scout (2) close to the opponent's 10, a move the opponent could interpret as coming from a Spy. This strategy allows Blue to capture Red's 5 with its 7 a few moves later, gaining material and preventing the 5 from capturing the Scout (2), which would have revealed that it is not actually a Spy.
