As artificial intelligence (AI) matures, adoption continues to increase. According to recent research, 35% of organizations are using AI and 42% are exploring its potential. While AI is well understood and widely deployed in the cloud, it is still nascent at the edge, where it faces some unique challenges.

Many people use artificial intelligence throughout the day, from navigating in their cars to tracking their steps to talking to digital assistants. Even though users often access these services on mobile devices, the computation behind them usually happens in the cloud. More specifically, a person requests information, the request is processed by a centrally hosted model in the cloud, and the results are sent back to the person's local device.

Edge AI is less well understood and less widely deployed than cloud AI. From the beginning, AI algorithms and innovations have relied on a fundamental assumption: that all data can be sent to a central location, where algorithms have full access to it. This lets the algorithm build its intelligence like a brain or central nervous system, with full access to computation and data.

But AI at the edge is different. It distributes intelligence across all the cells and nerves. By pushing intelligence to the edge, we give edge devices agency, which is critical in many fields, such as healthcare and industrial manufacturing.

Reasons to deploy AI at the edge

There are three main reasons for deploying AI at the edge.

Protect personally identifiable information (PII)

First, some organizations that handle PII or sensitive intellectual property (IP) prefer to keep data at its source: in imaging machines in hospitals or manufacturing machines on factory floors. This reduces the risk of the "drift" or "leakage" that can occur when data is transmitted over a network.

Minimize bandwidth usage

The second reason is bandwidth. Sending large amounts of data from the edge to the cloud can clog the network and is impractical in some cases. It is not uncommon for imaging machines in healthcare settings to generate files so large that transferring them to the cloud is impossible or takes days.
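To see how a transfer can stretch into days, the upload time is simply the data size in bits divided by the usable uplink rate. The Python sketch below does that arithmetic; the 2 TB file size and 20 Mbit/s uplink are hypothetical figures chosen for illustration, not measurements from any particular imaging system or network, and the `efficiency` parameter is an assumed way to account for protocol overhead and contention.

```python
# Back-of-the-envelope estimate of how long a bulk upload takes.
# The file size and uplink speed used below are hypothetical examples,
# not figures from any specific imaging system or network.


def transfer_time_hours(size_bytes: float, uplink_bits_per_sec: float,
                        efficiency: float = 1.0) -> float:
    """Return the hours needed to move size_bytes over the given uplink.

    efficiency < 1.0 models protocol overhead and link contention.
    """
    if uplink_bits_per_sec <= 0 or not 0 < efficiency <= 1.0:
        raise ValueError("uplink must be positive and efficiency in (0, 1]")
    seconds = (size_bytes * 8) / (uplink_bits_per_sec * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    size = 2e12      # hypothetical: 2 TB of raw imaging data
    uplink = 20e6    # hypothetical: 20 Mbit/s sustained uplink
    hours = transfer_time_hours(size, uplink)
    print(f"{hours:.1f} hours (~{hours / 24:.1f} days)")
```

Under these assumptions the upload takes roughly 222 hours, or a little over nine days, even before any overhead, which is the kind of gap that makes local processing attractive.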

Processing data at the edge is often simply more effective, especially when the insights are aimed at improving proprietary machines. In the past, compute was much harder to move and maintain than data, so data was moved to wherever the compute lived. That paradigm is now being challenged: data is often more valuable and harder to manage than compute, which leads to use cases that warrant moving computation to where the data is.

Avoid latency

The third reason for deploying AI at the edge is latency. The internet is fast, but it is not real time. In situations where milliseconds matter, such as robotic arms assisting in surgery or time-sensitive production lines, organizations may decide to run AI at the edge.
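The latency argument comes down to a simple budget: the network round trip plus the model's inference time must fit inside the process deadline. The Python sketch below compares a cloud and an edge deployment against a hypothetical 20 ms control-loop budget; every latency figure in it is an illustrative placeholder, and the `Deployment` class and `meets_deadline` helper are names invented for this example.

```python
# Sketch: does a control decision fit within its deadline?
# All latency figures are hypothetical placeholders; measure your own
# network round-trip and inference times before drawing conclusions.

from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    network_rtt_ms: float   # round trip to wherever the model runs
    inference_ms: float     # time to run the model itself

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.inference_ms


def meets_deadline(d: Deployment, deadline_ms: float) -> bool:
    """True if the full request/response path finishes within the deadline."""
    return d.total_ms() <= deadline_ms


if __name__ == "__main__":
    deadline = 20.0  # hypothetical control-loop budget in milliseconds
    options = [
        Deployment("cloud", network_rtt_ms=60.0, inference_ms=8.0),
        Deployment("edge", network_rtt_ms=0.5, inference_ms=15.0),
    ]
    for d in options:
        status = "OK" if meets_deadline(d, deadline) else "too slow"
        print(f"{d.name}: {d.total_ms():.1f} ms -> {status}")
```

Because internet latency varies from request to request, a realistic check would plug in a worst-case or high-percentile round-trip time rather than an average; a faster cloud model cannot make up for a round trip that alone exceeds the budget.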

Challenges of AI at the edge and how to solve them

Despite these benefits, there are still some unique challenges associated with deploying AI at the edge. Here are some tips you should consider to help manage these challenges.

Imbalanced good and bad examples in training data

Most AI technologies use large amounts of data to train models. In industrial edge use cases, however, this is often harder, because most manufactured products are defect-free and are therefore labeled or annotated as good. The resulting imbalance between "good" and "bad" examples makes it harder for the model to learn to identify problems.
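A short Python sketch shows why this imbalance is a trap. Assuming a hypothetical inspection set of 990 good parts and 10 defective ones, a "model" that labels every part as good scores 99% accuracy while catching none of the defects. The helper functions are written out by hand for illustration; defect recall, the share of truly bad parts the model flags, exposes the problem that accuracy hides.

```python
# Why a heavily imbalanced "good vs. bad" dataset is misleading.
# The 990/10 split is a hypothetical illustration, not measured data.


def accuracy(y_true, y_pred):
    """Fraction of all predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive="bad"):
    """Fraction of truly defective items that the model flagged."""
    hits = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    total = sum(t == positive for t in y_true)
    return hits / total if total else 0.0


if __name__ == "__main__":
    y_true = ["good"] * 990 + ["bad"] * 10
    # A trivial "model" that always predicts the majority class.
    y_pred = ["good"] * len(y_true)
    print(f"accuracy:      {accuracy(y_true, y_pred):.2%}")
    print(f"defect recall: {recall(y_true, y_pred):.2%}")
```

Run as written, this prints an accuracy of 99.00% and a defect recall of 0.00%, which is exactly the failure mode a model trained on mostly good examples drifts toward.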

Pure AI solutions that rely on data classification without contextual information are often hard to build and deploy, because labeled data is scarce and the events of interest are rare. Adding context to AI, an approach often described as data-centric, tends to improve both the accuracy and the scalability of the final solution. While AI can often take over mundane tasks that humans perform manually, it benefits greatly from human insight when models are being built, especially when there isn't much data to work with.

Secure a commitment from experienced subject matter experts to work closely with the data scientists who build the algorithms; their knowledge gives model training a head start.

AI cannot magically solve or provide an answer to every problem

A given output usually depends on many upstream steps. For example, a factory floor may have many workstations that depend on each other. Humidity in one area of the factory during one process may affect the results of another process later in a different area of the production line.

People often think that artificial intelligence can magically piece together all these relationships. While this is possible in many cases, it can also require large amounts of data that take a long time to collect, and it can produce very complex algorithms that are hard to interpret and update.

Artificial intelligence cannot operate in a vacuum. Explicitly capturing these interdependencies turns a simple solution into one that can scale over time and across different deployments.
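One way to capture the humidity example above explicitly is to join each part's downstream inspection result with the upstream reading taken while that part was processed, so the dependency becomes a feature instead of something a model must discover on its own. The Python sketch below assumes both stations log a shared part ID; the station data, field names, and the 60% relative-humidity threshold are hypothetical and serve only to illustrate the join.

```python
# Sketch: attach an upstream process reading to each downstream
# inspection record, so the dependency is an explicit feature rather
# than something a model must discover on its own.
# Station data, field names, and the humidity threshold are hypothetical.

from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Inspection:
    part_id: str
    passed: bool


@dataclass
class EnrichedInspection:
    part_id: str
    passed: bool
    upstream_humidity: Optional[float]  # None if no upstream reading exists


def enrich(inspections: List[Inspection],
           humidity_by_part: Dict[str, float]) -> List[EnrichedInspection]:
    """Join downstream inspections to upstream humidity readings by part_id."""
    return [
        EnrichedInspection(i.part_id, i.passed, humidity_by_part.get(i.part_id))
        for i in inspections
    ]


def failure_rate(records, predicate) -> float:
    """Share of failed parts among the records that satisfy predicate."""
    subset = [r for r in records if predicate(r)]
    if not subset:
        return 0.0
    return sum(not r.passed for r in subset) / len(subset)


def is_humid(r: EnrichedInspection) -> bool:
    """Hypothetical rule: upstream relative humidity above 60%."""
    return r.upstream_humidity is not None and r.upstream_humidity > 60.0


if __name__ == "__main__":
    humidity = {"p1": 42.0, "p2": 71.0, "p3": 68.0, "p4": 40.0}  # % RH, station A
    inspections = [Inspection("p1", True), Inspection("p2", False),
                   Inspection("p3", False), Inspection("p4", True)]
    records = enrich(inspections, humidity)
    print("failure rate when humid:", failure_rate(records, is_humid))
```

With the joined records, a subject matter expert's hunch ("humid runs fail more often") can be checked directly, and the upstream reading can be fed to a model as an input feature.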

Lack of stakeholder support limits the scale of AI

It’s difficult to scale AI throughout an organization if some people in the organization are skeptical of its benefits. The best (perhaps only) way to gain widespread support is to start with a high-value, difficult problem and then use AI to solve it.

At Audi, we considered tackling how often welding gun electrodes need to be replaced. But electrodes are cheap, and solving that problem would not have eliminated any mundane tasks that humans were doing. Instead, Audi chose the welding process itself, which is universally recognized across the industry as a difficult problem, and significantly improved the quality of that process through artificial intelligence. This sparked the imagination of engineers across the company, who looked at how AI could be used in other processes to improve efficiency and quality.

Balancing the benefits and challenges of edge AI

Deploying AI at the edge can help organizations and their teams. It has the potential to transform facilities into intelligent edges, improve quality, optimize manufacturing processes, and inspire developers and engineers across the organization to explore how they can integrate AI or advance AI use cases such as predictive analytics, efficiency recommendations, and anomaly detection. But it also brings new challenges. As an industry, we must be able to deploy it while reducing latency, increasing privacy, protecting IP, and keeping the network running smoothly.

The above is the detailed content of AI at the edge: Three tips to consider before deploying. For more information, please follow other related articles on the PHP Chinese website!

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AM

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AMRevolutionizing the Checkout Experience Sam's Club's innovative "Just Go" system builds on its existing AI-powered "Scan & Go" technology, allowing members to scan purchases via the Sam's Club app during their shopping trip.

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AM

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AMNvidia's Enhanced Predictability and New Product Lineup at GTC 2025 Nvidia, a key player in AI infrastructure, is focusing on increased predictability for its clients. This involves consistent product delivery, meeting performance expectations, and

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

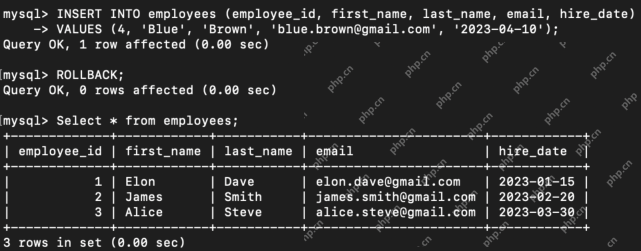

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor