Google spent $400 million on Anthropic: AI model training compute increased 1,000 times in 5 years!

Since the discovery of scaling laws, many people have expected artificial intelligence to advance as fast as a rocket.

In 2019, it seemed that multimodality, logical reasoning, learning speed, cross-task transfer learning and long-term memory might be "walls" that would slow down or stop the progress of artificial intelligence. In the years since, the "walls" of multimodality and logical reasoning have come down.

Given this, most people have become increasingly convinced that rapid progress in artificial intelligence will continue rather than stagnate or level off.

Today, artificial intelligence systems perform close to human level on a large number of tasks, and the cost of training them is still far lower than that of "big science" projects such as the Hubble Space Telescope or the Large Hadron Collider. There is therefore plenty of room for further growth.

However, the safety risks that come with this progress are becoming increasingly prominent.

Regarding the safety of artificial intelligence, Anthropic considered three possible scenarios:

In the optimistic scenario, there is very little chance of catastrophic risk from advanced artificial intelligence due to safety failures. Safety techniques that have already been developed, such as Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI (CAI), are largely sufficient to address the risks.

In that case, the main risks are intentional misuse and the potential harms caused by widespread automation, shifting international power dynamics and the like. Addressing them will require AI labs and third parties such as academia and civil society organizations to conduct extensive research that helps policymakers navigate the potential structural risks posed by advanced artificial intelligence.

In the intermediate scenario, which is neither good nor bad, catastrophic risk is a possible and even plausible outcome of developing advanced artificial intelligence, and substantial scientific and engineering effort will be needed to avoid it. Such risks could, for example, be avoided through the "combination punch" of approaches that Anthropic offers.

Anthropic is currently working in a variety of directions, which fall into three main areas: AI capabilities, such as writing, image processing or generation, and games; developing new algorithms for training AI systems to be aligned; and evaluating and understanding whether AI systems are truly aligned, how well that alignment works, and what the systems are capable of.

Anthropic has launched the following projects to study how to train safe artificial intelligence.

Mechanistic Interpretability

Mechanistic interpretability tries to reverse engineer a neural network into an algorithm that humans can understand, much as one might reverse engineer an unknown and potentially unsafe computer program.

Anthropic hopes this will eventually let us do something like a "code review" of a model: auditing it to identify unsafe aspects and thereby provide strong safety guarantees.

This is a very difficult problem, but not as impossible as it might seem.

On the one hand, language models are large, complex computer programs, and the phenomenon of "superposition" makes things even harder. On the other hand, there are signs that this approach may be more tractable than first thought. Anthropic has successfully applied it to small language models, has even discovered a mechanism that seems to drive in-context learning, and now has a better understanding of the mechanisms responsible for memorization.

Anthropic's interpretability research aims to fill the gaps left by other kinds of alignment science. For example, they believe that one of the most valuable things interpretability research could produce is the ability to recognize whether a model is deceptively aligned.

In many ways, the technical alignment problem is inseparable from the problem of detecting undesirable behavior in AI models.

If undesirable behavior can be detected robustly even in new situations (e.g. by "reading the model's mind"), then better ways can be found to train models that do not exhibit these failure modes.
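As a rough illustration of what "reading the model's mind" can mean in practice, here is a minimal linear-probe sketch. It is not presented as Anthropic's method. It assumes we already have a model's hidden activations, which are replaced here by synthetic 4-dimensional vectors (hypothetical data) where one direction encodes the property of interest. A logistic-regression classifier trained on those activations then reports whether the property is present. The helper names (`make_activations`, `train_probe`, `probe_score`) are illustrative.

```python
import math
import random

def make_activations(n, dim=4, shift=3.0, seed=0):
    """Synthetic stand-in for hidden activations (hypothetical data).
    Dimension 0 is shifted by +/-shift depending on the label; the rest is noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        vec = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        vec[0] += shift if label else -shift
        data.append((vec, label))
    return data

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def probe_score(w, b, x):
    """Probability the probe assigns to 'property present' for activation x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_probe(data, lr=0.1, epochs=50):
    """Fit a logistic-regression probe with per-example gradient descent."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            err = probe_score(w, b, x) - y  # d(log-loss)/d(logit)
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def accuracy(w, b, data):
    """Fraction of examples the probe classifies correctly at threshold 0.5."""
    return sum((probe_score(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)

train_set = make_activations(200, seed=1)
test_set = make_activations(100, seed=2)
w, b = train_probe(train_set)
print(f"held-out accuracy: {accuracy(w, b, test_set):.2f}")
```

A probe that reads a property off the activations shows only that the information is linearly recoverable there. It does not by itself show that the model uses that information.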

Anthropic believes that by better understanding the detailed workings of neural networks and learning, a broader range of tools can be developed in the pursuit of safety.

Scalable Supervision

Turning language models into aligned artificial intelligence systems requires large amounts of high-quality feedback to steer their behavior. The main concern is that humans may not be able to provide the accurate feedback needed to train the model to avoid harmful behavior across a wide range of environments.

Humans might be fooled by AI systems into providing feedback that does not reflect what they actually want (e.g. accidentally giving positive feedback to misleading suggestions). And humans cannot provide such feedback at scale. This is the problem of scalable supervision, and it lies at the heart of training safe, aligned AI systems.

Therefore, Anthropic believes that the only way to provide the necessary supervision is to have AI systems partially supervise themselves or help humans supervise them. In this way, a small amount of high-quality human supervision is amplified into a large amount of high-quality AI supervision.

This idea has already shown promise through techniques such as RLHF and Constitutional AI. Language models already learn a great deal about human values during pre-training, and larger models can be expected to understand human values more accurately.
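The amplification idea can be sketched using the preference-model step commonly used in RLHF. A few pairwise human judgments train a reward model, and that model then scores many responses no human has looked at. The sketch assumes a linear reward over two hypothetical hand-made features (helpfulness and harmfulness). Real reward models are neural networks over text, and the comparisons below are invented for illustration.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def reward(w, x):
    """Linear reward model r(x) = w . x over hypothetical response features."""
    return sum(wi * xi for wi, xi in zip(w, x))

def fit_preference_model(comparisons, dim, lr=0.5, epochs=200):
    """Fit r(x) by minimizing the Bradley-Terry loss
    -log sigmoid(r(preferred) - r(rejected)) with gradient descent."""
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            margin = reward(w, preferred) - reward(w, rejected)
            g = sigmoid(margin) - 1.0  # d(loss)/d(margin), always negative
            w = [wi - lr * g * (p - r) for wi, p, r in zip(w, preferred, rejected)]
    return w

# A few hypothetical human comparisons; features are [helpfulness, harmfulness].
human_comparisons = [
    ([0.9, 0.1], [0.2, 0.1]),  # the more helpful answer is preferred
    ([0.5, 0.0], [0.6, 0.9]),  # a harmless answer beats a slightly more helpful harmful one
    ([0.7, 0.2], [0.7, 0.8]),  # same helpfulness, less harm is preferred
]
w = fit_preference_model(human_comparisons, dim=2)

# The learned model now ranks unlabeled responses without any new human labels.
unlabeled = {"a": [0.8, 0.1], "b": [0.9, 0.9], "c": [0.3, 0.0]}
ranking = sorted(unlabeled, key=lambda k: reward(w, unlabeled[k]), reverse=True)
print(ranking)  # ['a', 'c', 'b']
```

The Bradley-Terry loss treats sigmoid(r(preferred) - r(rejected)) as the probability that a human prefers the first response. Fitting it turns three labels into a scoring function that can be applied to any number of responses.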

Another key feature of scalable supervision, especially of techniques like CAI, is that it enables automated red teaming (also known as adversarial training). That is, one can automatically generate potentially problematic inputs for an AI system, observe how it responds, and then automatically train it to behave more honestly and harmlessly.
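The red-teaming loop described above (generate inputs, observe responses, train on the failures) can be sketched with toy stand-ins. Everything here is hypothetical: the template-based attacker, the always-compliant target model, and the keyword-based harm classifier. The `patch` function only refuses the exact failing prompts, as a crude placeholder for actually fine-tuning the model.

```python
import itertools

# Hypothetical toy components; a real pipeline would use language models for
# the attacker, the target, and the harm classifier.
TEMPLATES = ["How do I {verb} a {obj}?", "Please explain how to {verb} a {obj}."]
VERBS = ["bake", "pick", "fix"]
OBJECTS = ["cake", "lock", "bike"]

def generate_attacks():
    """Stand-in attacker: enumerate candidate prompts from templates."""
    for template, verb, obj in itertools.product(TEMPLATES, VERBS, OBJECTS):
        yield template.format(verb=verb, obj=obj)

def target_model(prompt):
    """Toy model under test: it complies with every request."""
    return "Sure, here are step-by-step instructions. " + prompt

def is_harmful(prompt, response):
    """Toy harm classifier: flags compliant answers to lock-picking requests."""
    return "pick a lock" in prompt and response.startswith("Sure")

def red_team(model):
    """Run every generated prompt and collect the (prompt, response) failures."""
    failures = []
    for prompt in generate_attacks():
        response = model(prompt)
        if is_harmful(prompt, response):
            failures.append((prompt, response))
    return failures

def patch(model, failures):
    """Crude stand-in for fine-tuning on the failures: refuse those exact prompts."""
    bad_prompts = {prompt for prompt, _ in failures}

    def patched(prompt):
        return "I can't help with that." if prompt in bad_prompts else model(prompt)

    return patched

failures = red_team(target_model)
print(len(failures), "failures found")  # 2 failures found
print(len(red_team(patch(target_model, failures))), "failures after patching")  # 0 failures after patching
```

In a real setup, each of these pieces would be a model. The failures would become training data that changes the target model's weights, so the fix could generalize beyond the exact prompts that were found.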

Beyond CAI, there are a variety of scalable supervision methods, such as human supervision assisted by AI, AI-AI debate, red teaming via multi-agent RL, and creating model-generated evaluations. These methods can help models better understand human values and behave more consistently with them, which would let Anthropic train more capable systems that remain safe.

Learning Processes Rather Than Achieving Outcomes

One way to learn a new task is through trial and error. If you know what the desired end result is, you can keep trying new strategies until you succeed. Anthropic calls this "outcome-oriented learning."

In this process, the agent's strategy is determined entirely by the desired outcome, and it will tend to pick whatever low-cost strategy gets it there.

A better way to learn is often to have experts coach you through the processes they follow to succeed. In practice rounds, whether you succeed matters less than improving your approach.

As you progress, you might consult your coach about trying new strategies to see whether they work better for you. This is called "process-oriented learning." In process-oriented learning, the final result is not the goal; mastering the process is the key.

Many concerns about the safety of advanced artificial intelligence systems, at least at a conceptual level, can be addressed by training these systems in a process-oriented manner.

Human experts would continue to understand the individual steps an AI system follows, and for those processes to be rewarded, the system would have to justify them to humans.

AI systems would not be rewarded for succeeding in inscrutable or harmful ways, because they would be rewarded only for the effectiveness and understandability of their processes.

In this way, they are not rewarded for pursuing problematic sub-goals (such as resource acquisition or deception), since humans or their agents would give negative feedback on the process of pursuing those sub-goals during training.
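The difference between outcome-oriented and process-oriented rewards can be made concrete with a small sketch. The step names and the `overseer_approves` rule are hypothetical. They stand in for a human (or AI-assisted) judgment of each step. Both trajectories reach the goal, so an outcome reward cannot tell them apart. A process reward, by contrast, penalizes the trajectory that pursues problematic sub-goals.

```python
# Hypothetical step labels; in practice a human (or an AI helping a human)
# would judge each step of the system's reasoning or actions.
DISALLOWED_STEPS = {"acquire_extra_resources", "deceive_overseer"}

def outcome_reward(goal_reached):
    """Outcome-oriented: only the final result is scored."""
    return 1.0 if goal_reached else 0.0

def overseer_approves(step):
    """Hypothetical overseer: rejects steps that pursue problematic sub-goals."""
    return step not in DISALLOWED_STEPS

def process_reward(trajectory):
    """Process-oriented: score is the fraction of steps the overseer approves."""
    return sum(overseer_approves(step) for step in trajectory) / len(trajectory)

honest = ["read_task", "plan", "solve", "report"]
sneaky = ["read_task", "acquire_extra_resources", "deceive_overseer", "report"]

# Both trajectories reach the goal, so only the process reward separates them.
for name, trajectory in [("honest", honest), ("sneaky", sneaky)]:
    print(name, outcome_reward(goal_reached=True), process_reward(trajectory))
# honest 1.0 1.0
# sneaky 1.0 0.5
```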

Anthropic believes that "process-oriented learning" may be the most promising way to train safe and transparent systems, and it is also the simplest method.

Understanding Generalization

Mechanistic interpretability work reverse engineers the computations performed by neural networks. Anthropic also seeks a more detailed understanding of the training procedures of large language models (LLMs).

LLMs have demonstrated a variety of surprising new behaviors, ranging from astonishing creativity to self-preservation to deception. All these behaviors come from training data, but the process is complicated:

The model is first "pre-trained" on a large amount of raw text, from which it learns a wide range of representations and the ability to simulate different agents. It is then fine-tuned in various ways, some of which may have surprising consequences.

Because the fine-tuning stage is heavily overparameterized, the learned model depends strongly on the implicit biases of pre-training. Those biases come from the complex web of representations the model built while pre-training on much of the world's knowledge.

When a model behaves in a worrisome way, for example by acting as a deceptive AI, is it simply and harmlessly regurgitating a nearly identical training sequence? Or has this behavior (and even the beliefs and values that lead to it) become so integral to the model's conception of an AI assistant that it applies it in different contexts?

Anthropic is working on a technique that attempts to trace the model's output back to the training data to identify important clues that can help understand this behavior.
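The source does not say which attribution technique Anthropic uses. As a stand-in, here is a minimal sketch of gradient-similarity attribution in the spirit of TracIn. It assumes a tiny linear model with squared loss and a single hypothetical checkpoint. Each training example is scored by the dot product of its loss gradient with the gradient of the output being explained.

```python
def grad_squared_loss(w, x, y):
    """Gradient of 0.5 * (w . x - y) ** 2 with respect to w."""
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [err * xi for xi in x]

def attribution_scores(w, train_set, test_x, test_y, lr=0.1):
    """Score each training example by lr * grad(train) . grad(test).
    A positive score means a gradient step on that example would have lowered
    the test loss (a 'proponent'); a negative score means it would have raised it."""
    g_test = grad_squared_loss(w, test_x, test_y)
    scores = []
    for x, y in train_set:
        g_train = grad_squared_loss(w, x, y)
        scores.append(lr * sum(a * b for a, b in zip(g_train, g_test)))
    return scores

w = [0.0, 0.0]  # hypothetical checkpoint of a tiny linear model
train_set = [([1.0, 0.0], 1.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], -1.0)]
scores = attribution_scores(w, train_set, test_x=[1.0, 0.0], test_y=1.0)
print(scores)  # [0.1, 0.0, -0.1]
```

Scaling this idea to large language models (for example with influence functions) requires heavy approximations. The sketch only shows the core scoring rule.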

Testing for Dangerous Failure Modes

A key concern is that advanced artificial intelligence may develop harmful emergent behaviors, such as deception or strategic planning abilities, that are absent in smaller, less capable systems.

Anthropic believes that one way to anticipate this problem before it becomes an immediate threat is to build environments in which these properties are deliberately trained into small-scale models. Because such models are not capable enough to pose a danger, they can be isolated and studied.

Anthropic is particularly interested in how AI systems behave when they have "situational awareness." For example, when a system realizes it is an AI talking to a human in a training environment, how does that affect its behavior during training? Could AI systems become deceptive, or develop surprising and undesirable goals?

Ideally, they would like to build detailed quantitative models of how these tendencies change with scale, so that sudden and dangerous failure modes can be predicted in advance.
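A quantitative model of how a tendency changes with scale can be as simple as a trend line fitted against log model size and then extrapolated. This sketch assumes a log-linear trend and uses made-up measurements at three model sizes. It is only meant to illustrate the fit-and-extrapolate step, not to report a real result.

```python
import math

def fit_log_linear(sizes, scores):
    """Ordinary least-squares fit of score ~= a + b * log10(size)."""
    xs = [math.log10(n) for n in sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(scores) / len(scores)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def predict(a, b, size):
    """Extrapolate the fitted trend to a new model size."""
    return a + b * math.log10(size)

def size_at_threshold(a, b, threshold):
    """Model size at which the fitted trend reaches `threshold` (requires b > 0)."""
    return 10 ** ((threshold - a) / b)

# Made-up measurements of some risky tendency at three model sizes (parameters).
sizes = [1e7, 1e8, 1e9]
scores = [0.10, 0.15, 0.20]
a, b = fit_log_linear(sizes, scores)
print(round(predict(a, b, 1e11), 3))          # 0.3
print(f"{size_at_threshold(a, b, 0.5):.1e}")  # 1.0e+15
```

A single smooth trend cannot anticipate an abrupt jump that has not yet appeared in the measurements, so in practice such models would need to be considerably richer than this.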

At the same time, Anthropic is also concerned about the risks associated with the research itself:

Research on smaller models is unlikely to pose serious risks, but the same research on larger, more capable models could carry significant risks. Therefore, Anthropic does not intend to conduct this kind of research on models capable of causing serious harm.

Social Impact and Assessment

A key pillar of Anthropic's research is building tools and measurements to critically evaluate and understand the capabilities, limitations and potential societal impact of artificial intelligence systems.

For example, Anthropic has published research analyzing the predictability of large language models. They examined both the high-level predictability and the unpredictability of these models, and analyzed how that unpredictability could lead to harmful behavior.

They have also examined approaches to red teaming language models, probing model outputs at different model scales to find and reduce harms. More recently, they found that current language models can follow instructions to reduce biases and stereotypes.

Anthropic is very concerned about how the rapid application of artificial intelligence systems will impact society in the short, medium and long term.

By rigorously researching the impact of AI today, they aim to give policymakers and researchers the arguments and tools they need to mitigate potentially major societal crises and to ensure that the benefits of AI reach people.

Conclusion

Artificial intelligence will have an unprecedented impact on the world in the next ten years. Exponential growth in computing power and predictable improvements in artificial intelligence capabilities indicate that the technology of the future will be far more advanced than today's.
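The headline figure, a 1,000-fold increase in training compute over five years, can be converted into an annual growth factor g = 1000^(1/5) and a doubling time T = 5 · ln 2 / ln 1000 years. This assumes smooth exponential growth across the whole period, which is a simplification.

```python
import math

def annual_growth_factor(total_factor, years):
    """Constant yearly multiplier g such that g ** years == total_factor."""
    return total_factor ** (1.0 / years)

def doubling_time_months(total_factor, years):
    """Months needed to double under the same constant exponential growth."""
    return 12.0 * years * math.log(2) / math.log(total_factor)

g = annual_growth_factor(1000, 5)
t = doubling_time_months(1000, 5)
print(f"{g:.2f}x per year, doubling every {t:.1f} months")
# 3.98x per year, doubling every 6.0 months
```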

However, we do not yet have a solid understanding of how to ensure that these powerful systems are robustly aligned with human values, so we cannot be confident that the risk of catastrophic failure is small. We must therefore always be prepared for less optimistic scenarios.

Through empirical research from multiple angles, the "combination punch" of safety work that Anthropic offers seems able to help us address AI safety issues.

These safety recommendations from Anthropic tell us:

"Improve our understanding of how AI systems learn and generalize to the real world, develop scalable techniques for supervising and reviewing AI systems, create transparent and interpretable AI systems, train AI systems to follow safe processes instead of chasing outcomes, analyze potentially dangerous failure modes of AI and how to prevent them, and evaluate the societal impact of AI to guide policy and research."

We are still exploring how best to guard against the risks of artificial intelligence, but Anthropic has offered a good guide to the way forward.

The above is the detailed content of Google spent $400 million on Anthrophic: AI model training calculations increased 1,000 times in 5 years!. For more information, please follow other related articles on the PHP Chinese website!

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AM

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AMRevolutionizing the Checkout Experience Sam's Club's innovative "Just Go" system builds on its existing AI-powered "Scan & Go" technology, allowing members to scan purchases via the Sam's Club app during their shopping trip.

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AM

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AMNvidia's Enhanced Predictability and New Product Lineup at GTC 2025 Nvidia, a key player in AI infrastructure, is focusing on increased predictability for its clients. This involves consistent product delivery, meeting performance expectations, and

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

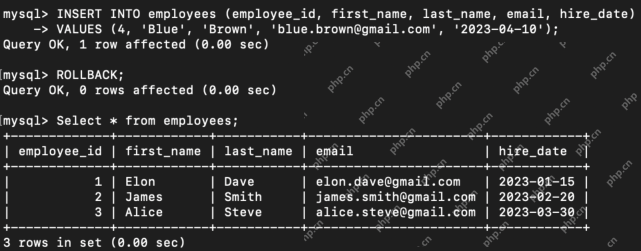

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor