Trajectory-guided control prediction for end-to-end autonomous driving: TCP, a simple yet strong baseline

arXiv paper "Trajectory-guided Control Prediction for End-to-end Autonomous Driving: A Simple yet Strong Baseline" (June 2022), from Shanghai AI Laboratory and Shanghai Jiao Tong University.

Current end-to-end autonomous driving methods either run a controller on a planned trajectory or predict control directly, and these two approaches have developed as separate lines of research. Given their potential mutual benefits, this paper explores combining them in an approach called TCP (Trajectory-guided Control Prediction). The integrated approach has two branches: one for trajectory planning and one for direct control. The trajectory branch predicts the future trajectory. The control branch uses a new multi-step prediction scheme that reasons about the relationship between current actions and future states. The two branches are connected so that, at each time step, the control branch receives guidance from the trajectory branch. The outputs of the two branches are then fused to exploit their complementary strengths.

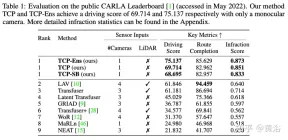

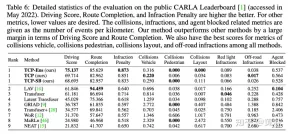

The method is evaluated in the CARLA simulator on closed-loop urban driving with challenging scenarios. Using only monocular camera input, it ranks first on the official CARLA leaderboard. The source code and data are to be open-sourced at https://github.com/OpenPerceptionX/TCP

Roach ("End-to-end urban driving by imitating a reinforcement learning coach", ICCV 2021) is chosen as the expert. Roach is a simple model trained with RL on privileged information, all rendered as 2D BEV images. This information includes roads, lanes, routes, vehicles, pedestrians, traffic lights and stop signs. Compared with hand-crafted experts, such a learning-based expert can convey more information beyond the direct supervision signals. Specifically, a feature loss forces the latent features before the final output head of the student model to be similar to those of the expert. A value loss is also added as an auxiliary task in which the student model predicts the expected return.
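The two auxiliary distillation terms can be sketched in plain Python. This is a minimal illustration, not the paper's implementation. It assumes a squared-L2 distance for the feature loss and a squared error for the value loss. The weights `w_feat` and `w_value` are hypothetical.

```python
from typing import Sequence


def feature_loss(student_feat: Sequence[float], expert_feat: Sequence[float]) -> float:
    """Squared L2 distance between student and expert latent features (assumed form)."""
    if len(student_feat) != len(expert_feat):
        raise ValueError("feature vectors must have the same length")
    return sum((s - e) ** 2 for s, e in zip(student_feat, expert_feat))


def value_loss(pred_value: float, expert_value: float) -> float:
    """Squared error between the student's predicted return and the expert's value (assumed form)."""
    return (pred_value - expert_value) ** 2


def distillation_loss(student_feat, expert_feat, pred_value, expert_value,
                      w_feat: float = 1.0, w_value: float = 1.0) -> float:
    """Weighted sum of both auxiliary terms; the weights are hypothetical."""
    return (w_feat * feature_loss(student_feat, expert_feat)
            + w_value * value_loss(pred_value, expert_value))


print(distillation_loss([1.0, 2.0], [1.0, 0.0], 3.0, 1.0, w_feat=0.5, w_value=0.25))  # 3.0
```

In a real model these terms would be added to the main imitation losses on the predicted trajectory and control.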

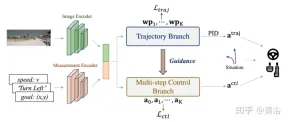

As shown in the figure, the architecture consists of an input-encoding stage followed by two branches. The input image i is passed through a CNN-based image encoder (e.g. ResNet) to produce a feature map F. In parallel, the navigation information g is concatenated with the current speed v to form the measurement input m. An MLP-based measurement encoder then maps m to the measurement feature j_m. Both branches share these encoded features for the subsequent trajectory and control predictions. The control branch uses a new multi-step prediction design guided by the trajectory branch. Finally, a scenario-based fusion scheme combines the strengths of the two output paradigms.
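The measurement path m = [g; v] → j_m can be traced in plain Python. This is a minimal sketch under stated assumptions:

- g is a short numeric vector (for example, a 2D target point).
- The MLP is a stack of fully connected layers with ReLU.
- The layer sizes and weights below are hypothetical and only show the data flow.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weights as out_dim rows of in_dim values, bias)


def build_measurement(nav_g: Sequence[float], speed_v: float) -> List[float]:
    """Concatenate navigation info g with the current speed v to form m."""
    return list(nav_g) + [speed_v]


def dense_relu(x: Sequence[float], layer: Layer) -> List[float]:
    """One fully connected layer followed by ReLU."""
    weights, bias = layer
    out = []
    for row, b in zip(weights, bias):
        if len(row) != len(x):
            raise ValueError("weight row length must match input size")
        out.append(max(0.0, sum(w * xi for w, xi in zip(row, x)) + b))
    return out


def measurement_encoder(m: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Map the measurement input m to the measurement feature j_m (hypothetical MLP)."""
    h = list(m)
    for layer in layers:
        h = dense_relu(h, layer)
    return h


# Hypothetical example: g = (target_x, target_y), v = 3.0, one tiny layer.
m = build_measurement([1.0, 2.0], 3.0)
layers = [([[1.0, 0.0, 0.0], [0.0, -1.0, 0.0]], [0.0, 0.0])]
j_m = measurement_encoder(m, layers)
print(j_m)  # [1.0, 0.0]
```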

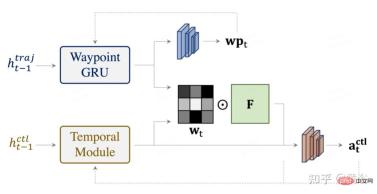

As shown in the figure, TCP relies on the trajectory planning branch to help learn an attention map that extracts the important information from the encoded feature map. This interaction between the two closely related branches (trajectory and control) improves the consistency of their outputs and follows the spirit of multi-task learning (MTL). At each time step t, the hidden states of the control branch and the trajectory branch are used together to compute an attention map over the 2D feature map F produced by the image encoder.
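Trajectory-guided attention can be sketched with plain Python lists. This minimal sketch makes three assumptions:

- The concatenated hidden states [h_ctrl; h_traj] are linearly projected to one logit per spatial location of F.
- A softmax over locations turns the logits into the attention map.
- The attended feature is the attention-weighted sum of the location features.

The projection parameters `proj_w` and `proj_b` are hypothetical stand-ins for learned weights.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over spatial locations."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(h_ctrl: Sequence[float], h_traj: Sequence[float],
                  proj_w: Sequence[Sequence[float]], proj_b: Sequence[float]) -> List[float]:
    """One logit per location of F, computed from [h_ctrl; h_traj], then softmax."""
    h = list(h_ctrl) + list(h_traj)
    logits = [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(proj_w, proj_b)]
    return softmax(logits)


def attend(feature_map: Sequence[Sequence[float]], attn: Sequence[float]) -> List[float]:
    """Attention-weighted sum of F over spatial locations, giving one C-dim vector."""
    channels = len(feature_map[0])
    return [sum(a * f[c] for a, f in zip(attn, feature_map)) for c in range(channels)]


# Hypothetical 2-location, 2-channel feature map; a zero projection gives uniform attention.
F = [[2.0, 0.0], [0.0, 4.0]]
attn = attention_map([1.0], [2.0], [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
print(attn)             # [0.5, 0.5]
print(attend(F, attn))  # [1.0, 2.0]
```

The softmax keeps the map a distribution over locations, so the attended feature stays on the same scale as a single location's feature.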

The resulting feature representation is fed into a policy head, shared across all time steps t, which predicts the corresponding control action. For the initial step, only the measurement feature is used to compute the initial attention map. The attended image feature is then combined with the measurement feature to form the initial feature vector. A feature loss is applied at every step so that these feature vectors also stay close to the expert's features. This ensures that each step's feature describes the state at that step and carries the information needed for control prediction.
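The multi-step control branch can be sketched as a loop in plain Python. The learned modules are passed in as hypothetical callables: `init_att`, `join`, `step_att`, `update` and `policy_head`. The real model uses neural networks and a recurrent update whose exact form is not described in this article. The sketch only shows three points from the text:

- The initial attention uses the measurement feature alone.
- Later steps use attention guided by the trajectory branch's hidden states.
- One policy head is shared by all steps, and a per-step feature loss is taken against expert features.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vec = List[float]


def attend(feature_map: Sequence[Sequence[float]], attn: Sequence[float]) -> Vec:
    """Attention-weighted sum of the feature map over spatial locations."""
    channels = len(feature_map[0])
    return [sum(a * f[c] for a, f in zip(attn, feature_map)) for c in range(channels)]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def multi_step_control(
    feature_map: Sequence[Sequence[float]],
    j_m: Vec,
    traj_hiddens: Sequence[Vec],            # one trajectory-branch hidden state per future step
    init_att: Callable[[Vec], Vec],         # hypothetical: j_m -> attention map
    join: Callable[[Vec, Vec], Vec],        # hypothetical: (attended, j_m) -> initial feature
    step_att: Callable[[Vec, Vec], Vec],    # hypothetical: (x, h_traj) -> attention map
    update: Callable[[Vec, Vec], Vec],      # hypothetical: (x, attended) -> next feature
    policy_head: Callable[[Vec], Vec],      # shared by every step
    expert_feats: Optional[Sequence[Vec]] = None,
) -> Tuple[List[Vec], float]:
    # Initial step: the attention map comes from the measurement feature only.
    x = join(attend(feature_map, init_att(j_m)), j_m)
    feats, actions = [x], [policy_head(x)]
    # Later steps: attention guided by the trajectory branch's hidden state.
    for h_traj in traj_hiddens:
        x = update(x, attend(feature_map, step_att(x, h_traj)))
        feats.append(x)
        actions.append(policy_head(x))
    # Per-step feature loss against the expert's features, if provided.
    loss = 0.0
    if expert_feats is not None:
        loss = sum(sq_dist(f, e) for f, e in zip(feats, expert_feats))
    return actions, loss


# Toy usage with trivial stand-in modules.
actions, loss = multi_step_control(
    feature_map=[[1.0, 0.0], [0.0, 1.0]],
    j_m=[1.0],
    traj_hiddens=[[0.0], [0.0]],
    init_att=lambda jm: [1.0, 0.0],
    join=lambda feat, jm: feat,
    step_att=lambda x, h: [0.0, 1.0],
    update=lambda x, a: [xi + ai for xi, ai in zip(x, a)],
    policy_head=lambda x: [sum(x)],
    expert_feats=[[1.0, 0.0], [1.0, 1.0], [1.0, 1.0]],
)
print(actions, loss)  # [[1.0], [2.0], [3.0]] 1.0
```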

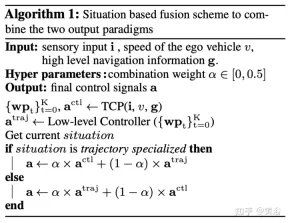

The TCP framework therefore produces two output representations: a planned trajectory and a predicted control. To combine them, a scenario-based fusion strategy is designed, as shown in the pseudocode of Algorithm 1.

Specifically, α is a combination weight in the range [0, 0.5]. Prior belief suggests that one representation is better suited to certain situations. The trajectory-based and control-based predictions are therefore combined as a weighted average: the more suitable one receives the larger weight (1 − α) and the other receives α. The combination weight α need not be constant or symmetric; it can be set to different values in different situations, or for specific control signals. In the experiments, the scenario is determined by whether the ego vehicle is turning. A turning vehicle is treated as a control-specialized scenario; otherwise the scenario is treated as trajectory-specialized.
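The scenario-based fusion can be sketched in plain Python. This minimal sketch makes four assumptions:

- The trajectory branch's waypoints have already been converted into a control command by a downstream controller (not shown).
- Both controls are dictionaries with `steer`, `throttle` and `brake` entries.
- `is_turning` is computed elsewhere.
- The value of α in the example is hypothetical.

```python
from typing import Dict

Control = Dict[str, float]  # assumed keys: "steer", "throttle", "brake"


def fuse_controls(ctrl_from_traj: Control, ctrl_direct: Control,
                  is_turning: bool, alpha: float) -> Control:
    """Weighted average in which the scenario's preferred output gets weight 1 - alpha."""
    if not 0.0 <= alpha <= 0.5:
        raise ValueError("alpha must lie in [0, 0.5]")
    if is_turning:
        # Control-specialized scenario: the direct control prediction dominates.
        w_traj, w_ctrl = alpha, 1.0 - alpha
    else:
        # Trajectory-specialized scenario: the trajectory-derived control dominates.
        w_traj, w_ctrl = 1.0 - alpha, alpha
    return {k: w_traj * ctrl_from_traj[k] + w_ctrl * ctrl_direct[k] for k in ctrl_from_traj}


traj = {"steer": 1.0, "throttle": 0.5, "brake": 0.0}
ctrl = {"steer": 0.0, "throttle": 0.5, "brake": 0.0}
print(fuse_controls(traj, ctrl, is_turning=True, alpha=0.25)["steer"])   # 0.25
print(fuse_controls(traj, ctrl, is_turning=False, alpha=0.25)["steer"])  # 0.75
```

Because α is an argument, it can differ per scenario or per control signal, which matches the note above that α need not be constant or symmetric.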

The experimental results are as follows:
