In-depth report: Large model-driven AI speeds up across the board! The golden decade begins

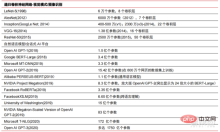

After "three ups and two downs" over the past 70 years, and with continued improvement in underlying infrastructure such as chips, computing power, and data, the global AI industry is gradually moving from computational intelligence to perceptual and cognitive intelligence. Along the way it is forming a corresponding system of industrial division of labor and collaboration spanning "chips, computing facilities, AI frameworks & algorithm models, and application scenarios". Since 2019, large AI models have significantly improved general problem-solving ability, and the "large model + small model" approach has gradually become the mainstream technical route in the industry. This is accelerating the development of the global AI industry as a whole and forming a stable industrial value chain of "chips, computing infrastructure, AI frameworks & algorithm libraries, and application scenarios".

For this issue's internal reference, we recommend the CITIC Securities report "Large Models Drive AI to Accelerate Comprehensively, and the Industry's Golden Ten-Year Investment Cycle Begins", which examines the current state of the artificial intelligence industry and the core issues in its development. Source: CITIC Securities

1. The "Three Ups and Two Downs" of Artificial Intelligence

Since the concept and theory of "artificial intelligence" were first proposed in 1956, the development of the AI industry and its technology has gone through three major stages.

1) 1950s to 1970s: Constrained by computing performance, data volume, and other factors, AI remained largely at the theoretical level. The Dartmouth Conference in 1956 set off the world's first wave of artificial intelligence. At that time, optimism permeated the academic world, and many landmark algorithmic inventions appeared, including an early prototype of reinforcement learning, which is the core idea behind Google's AlphaGo algorithm. In the early 1970s, AI hit a bottleneck: people found that logic provers, perceptrons, reinforcement learning, and similar methods could only handle very simple, narrow tasks and failed on anything slightly beyond their scope. The limited memory and processing speed of computers at the time were not enough to solve any practical AI problem, and the complexity of the required computation grew exponentially, making these tasks infeasible.

2) 1980s to 1990s: Expert systems were the first attempt to commercialize artificial intelligence, but high hardware costs and limited applicable scenarios restricted further market development. In the 1980s, expert system AI programs began to be adopted by companies around the world, and "knowledge processing" became the focus of mainstream AI research. The capabilities of expert systems come from the professional knowledge they store, and knowledge base systems and knowledge engineering became the main directions of AI research in the 1980s. However, expert systems proved practical only in certain situations, and enthusiasm for them soon turned into great disappointment. Meanwhile, the modern PCs that appeared between 1987 and 1993 cost far less than the Lisp machines, such as those from Symbolics, that expert systems ran on. Compared with modern PCs, expert systems came to be seen as archaic and very difficult to maintain. As a result, government funding began to decline, and winter came again.

3) 2015 to present: A complete industrial chain with division of labor and collaboration is gradually forming. The third landmark event in artificial intelligence occurred in March 2016, when AlphaGo, developed by Google DeepMind, defeated South Korean professional nine-dan Go player Lee Sedol in a human-machine match. After that, the public became familiar with artificial intelligence, and enthusiasm was mobilized in many fields. The event established the standing of statistical-classification deep learning models based on deep neural networks (DNN). This type of model is more general than earlier ones and can be applied to different scenarios by extracting different feature values. At the same time, the spread of the mobile Internet from 2010 to 2015 fed deep learning algorithms with unprecedented amounts of data. Thanks to larger data volumes, greater computing power, and the emergence of new machine learning algorithms, artificial intelligence began to change substantially. The research field of artificial intelligence is also expanding, and now includes expert systems, machine learning, evolutionary computing, fuzzy logic, computer vision, natural language processing, recommendation systems, and more. The development of deep learning has brought artificial intelligence to a new peak.

▲ The third wave of artificial intelligence development

The third wave of artificial intelligence has brought a number of scenarios that can be commercialized. For example, the outstanding performance of DNN algorithms enabled speech recognition and image recognition to deliver the first batch of successful business cases in security and education. In recent years, neural-network-based algorithms such as the Transformer have put the commercialization of NLP (natural language processing) on the agenda, and mature commercial scenarios are expected within the next 3-5 years.

▲ The number of years required for the industrialization of artificial intelligence technology

2. The division of labor is gradually complete, and the implementation scenarios are constantly expanding

After five to six years of development, the global AI industry is gradually forming a complete industrial chain with a clear division of labor and collaboration, and typical application scenarios are beginning to take shape in some fields.

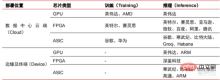

1. AI chip: From GPU to FPGA, ASIC, etc., the performance is constantly improving

Chips are the commanding heights of the AI industry. The current boom in the artificial intelligence industry stems from greatly improved AI computing power, which made deep learning and multi-layer neural network algorithms feasible. Artificial intelligence is rapidly penetrating various industries, and data is growing massively. This results in extremely complex algorithm models, heterogeneous processing objects, and high computing performance requirements, so deep learning demands extremely powerful parallel processing capabilities. Compared with CPUs, AI chips devote more arithmetic logic units (ALUs) to data processing and are suited to parallel processing of dense data. The main types include graphics processing units (GPU), field-programmable gate arrays (FPGA), and application-specific integrated circuits (ASIC).

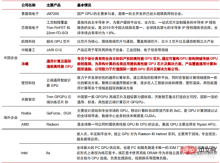

From the perspective of usage scenarios, relevant hardware includes cloud-side inference chips, cloud-side test chips, terminal processing chips, IP cores, etc. In the cloud-side "training" or "learning" stage, NVIDIA GPUs have a strong competitive advantage, and Google's TPU is also actively expanding its market and applications. FPGAs and ASICs may have an advantage in terminal-side "inference" applications. The United States has strong advantages in GPUs and FPGAs, with dominant companies such as NVIDIA, Xilinx, and AMD. Google and Amazon are also actively developing AI chips.

▲ Application of chips in different AI links

▲Complexity of artificial intelligence neural network algorithm model

▲ Chip manufacturer layout

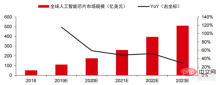

In the high-performance computing market, using the parallel computing capabilities of AI chips to solve complex problems is currently the mainstream approach. According to Tractica data, the global AI HPC market was approximately US$1.36 billion in 2019 and is expected to reach US$11.19 billion by 2025, a seven-year CAGR of 35.1%. AI's share of the HPC market will increase from 13.2% in 2019 to 35.5% in 2025. Tractica data also shows that the global AI chip market was US$6.4 billion in 2019 and is expected to reach US$51 billion by 2023, roughly an eightfold increase.
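
As a quick arithmetic check on the Tractica figures quoted above, the compound annual growth rate can be recomputed from the start and end values. This is a minimal sketch: the function name `cagr` is illustrative, and the choice of seven compounding periods is an assumption taken from the "seven-year CAGR" wording.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Figures quoted in the text, in US$ billions.
ai_hpc_cagr = cagr(1.36, 11.19, 7)   # AI HPC market, seven compounding periods
chip_multiple = 51 / 6.4             # AI chip market, 2023 forecast vs. 2019

print(f"AI HPC CAGR: {ai_hpc_cagr:.1%}")
print(f"AI chip market multiple: {chip_multiple:.1f}x")
```

With these inputs the growth rate comes out close to the quoted 35.1%, and the AI chip market multiple is just under 8x.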

▲ Edge computing chip shipments (millions, by terminal device)

▲ Global artificial intelligence chip market size (billion US dollars)

In the past two years, a large number of companies developing their own chips have emerged in China, represented by Moore Threads, which develops its own GPUs, and Cambricon, which develops its own autonomous driving chips. Moore Threads released the MUSA unified system architecture and its first-generation chip "Sudi" in March 2022. Moore Threads' new architecture supports NVIDIA's CUDA architecture. According to IDC data, GPUs were the first choice in China's artificial intelligence chip market in the first half of 2021, accounting for more than 90% of the market. However, with the steady development of other chips, the GPU share is expected to gradually decrease to 80% by 2025.

▲ GPU chip major players and technical roadmap

2. Computing power facilities: With the help of cloud computing, self-construction and other methods, indicators such as computing power scale and unit cost have been continuously improved

The development of computing power has effectively alleviated the bottleneck in artificial intelligence development. Artificial intelligence is a long-standing concept whose progress was long limited by insufficient computing power. Its computing power requirements come mainly from two sources: 1) One of the biggest challenges of artificial intelligence is that recognition accuracy is not high. Improving accuracy requires increasing the scale and precision of the model, which in turn requires stronger computing power. 2) As application scenarios for artificial intelligence are gradually implemented, data in fields such as image, voice, machine vision, and games has grown explosively, which also places higher demands on computing power and has pushed computing technology into a new period of rapid innovation. The growth of computing power over the past decade or so has effectively eased this bottleneck. In the future, intelligent computing will be characterized by greater demand, higher performance requirements, and diverse needs anytime and anywhere.

As chips approach physical limits, Moore's Law is gradually losing force as a driver of computing power growth, and the computing power industry has entered a stage of comprehensive, multi-factor innovation. In the past, computing power supply was improved mainly through process shrinkage, that is, packing more transistors into the same chip to raise computing performance. However, as processes continue to approach physical limits and costs continue to rise, Moore's Law is becoming less effective. The computing power industry has entered the post-Moore era, and supply must now be improved through comprehensive innovation across multiple factors. There are currently four levels of computing power supply: single-chip computing power, whole-machine computing power, data center computing power, and networked computing power. Each is evolving through different technologies to meet the diversified computing needs of the intelligent era. In addition, improving the overall performance of computing systems through deep integration of software and hardware and through algorithm optimization is another important direction for the industry.

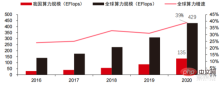

Computing power scale: According to the "China Computing Power Development Index White Paper" released by the China Academy of Information and Communications Technology in 2021, total global computing power continued to grow in 2020, reaching 429 EFlops, a year-on-year increase of 39%. Of this, basic computing power was 313 EFlops, intelligent computing power was 107 EFlops, and supercomputing power was 9 EFlops, with the share of intelligent computing power rising. China's computing power has developed at a pace similar to the world's. In 2020, China's total computing power reached 135 EFlops, about 31% of the global total, growing by 55% and maintaining a growth rate above 40% for three consecutive years.
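
The breakdown quoted above can be cross-checked with a few lines of arithmetic. The sketch below simply sums the three quoted global components and derives each share, along with the share implied by China's quoted 135 EFlops. The variable names are illustrative.

```python
# Global computing power in 2020 by category (EFlops), as quoted in the text.
global_eflops = {"basic": 313, "intelligent": 107, "supercomputing": 9}
china_eflops = 135

total = sum(global_eflops.values())
shares = {name: value / total for name, value in global_eflops.items()}
china_share = china_eflops / total

print(f"Global total: {total} EFlops")
for name, share in shares.items():
    print(f"{name:>15}: {share:.1%}")
print(f"China's implied share of the global total: {china_share:.1%}")
```

The three components sum to the quoted 429 EFlops, and 135 EFlops works out to a little under one third of that total.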

▲ Changes in global computing power scale

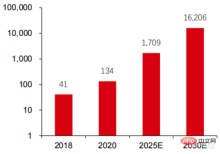

Computing power structure: China's situation is similar to the global one, with intelligent computing power growing rapidly. Its share rose from 3% in 2016 to 41% in 2020, while the share of basic computing power fell from 95% in 2016 to 57% in 2020. Driven by downstream demand, artificial intelligence computing infrastructure, represented by intelligent computing centers, has developed rapidly. As for future demand, according to the report "Ubiquitous Computing Power: Cornerstone of an Intelligent Society" released by Huawei in 2020, as artificial intelligence becomes widespread, demand for AI computing power by 2030 is expected to be equivalent to the built-in AI chips of 160 billion Qualcomm Snapdragon 855 processors, about 390 times the 2018 level and about 120 times the 2020 level.

▲ Estimated artificial intelligence computing power demand (EFlops) in 2030

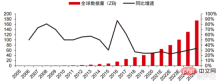

Data storage: Non-relational databases, and data lakes used to store and manage unstructured data, are seeing explosive demand. The amount of global data has grown explosively in recent years. According to IDC statistics, 41 ZB of data was generated globally in 2019, with a CAGR of close to 50% over the past ten years. The global data volume is expected to reach as much as 175 ZB by 2025, which means a compound annual growth rate of nearly 30% from 2019 to 2025. More than 80% of this data will be unstructured data such as text, images, audio, and video, which is difficult to process. The surge in data volume (especially unstructured data) has made the weaknesses of relational databases increasingly prominent. Faced with exponential data growth, the vertical (scale-up) expansion model of relational databases, which were traditionally designed for structured data, struggles to keep up.

Non-relational databases and data lakes used to store and manage unstructured data are taking a growing share of the market thanks to their flexibility and easy scalability. According to IDC, the global NoSQL database market was US$5.6 billion in 2020 and is expected to grow to US$19 billion in 2025, a compound growth rate of 27.6% from 2020 to 2025. Also according to IDC, the global data lake market was US$6.2 billion in 2020, having grown 34.4% that year.

▲ Global data volume and year-on-year growth rate (ZB, %)

3. AI framework: relatively mature, dominated by a few giants

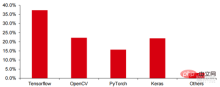

TensorFlow (industry) and PyTorch (academia) have gradually achieved dominance. Google's TensorFlow is the mainstream choice. Together with other open-source modules such as Keras (TensorFlow 2 integrates the Keras module) and Facebook's open-source PyTorch, it makes up the current mainstream set of frameworks for AI learning. Since its founding in 2011, Google Brain has carried out large-scale deep learning research for scientific work and Google product development. Its early work was DistBelief, the predecessor of TensorFlow. DistBelief was refined and widely used in product development at Google and other Alphabet-owned companies. In November 2015, Google Brain completed TensorFlow, its "second-generation machine learning system" built on DistBelief, and open-sourced the code. Compared with its predecessor, TensorFlow brings significant improvements in performance, architectural flexibility, and portability.

Although TensorFlow and PyTorch are open-source modules, deep learning frameworks are so large and complex that modifications and updates are essentially carried out by the companies behind them. As a result, the direction in which Google and Facebook update TensorFlow and PyTorch directly shapes how the industry develops artificial intelligence.

▲ Global Commercial Artificial Intelligence Framework Market Share Structure (2021)

Microsoft invested US$1 billion in OpenAI in 2020 and obtained an exclusive license to the GPT-3 language model. GPT-3 is currently the most successful application in natural language generation: it can be used not only to write "papers" but also to "automatically generate code". Since its release in July this year, the industry has regarded it as the most powerful artificial intelligence language model. Facebook founded its AI research institute, FAIR, as early as 2013. FAIR does not have models and applications as famous as AlphaGo or GPT-3, but its team has published academic papers in areas of interest to Facebook, including computer vision, natural language processing, and conversational AI. In 2021, Google had 177 papers accepted and published at NeurIPS (currently the top venue for artificial intelligence algorithms), Microsoft had 116, DeepMind had 81, Facebook had 78, IBM had 36, and Amazon had only 35.

4. Algorithm model: Neural network algorithm is the main theoretical basis

Deep learning is converging on deep neural networks. Machine learning refers to computer algorithms that make predictions about images, sound, and other data through multi-layer nonlinear feature learning and hierarchical feature extraction. Deep learning is an advanced form of machine learning, also known as deep neural networks (DNN). Different neural networks and training methods are built for training and inference in different scenarios (types of information), and training is the process of optimizing the weights and connections of each neuron by iterating over massive amounts of data. A convolutional neural network considers each pixel together with its surrounding neighborhood and reduces the amount of data that must be extracted, further improving the efficiency of neural network algorithms.
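
To make the idea of "a pixel plus its surroundings" concrete, the following is a minimal pure-Python sketch of a single 2D convolution pass. The function name `conv2d_valid`, the toy image, and the hand-written edge kernel are all illustrative assumptions. A real CNN learns its kernel weights during training and would use an optimized framework rather than nested loops.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    As in most deep learning frameworks, this is cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Each output value depends on a pixel and its local neighborhood.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Toy 4x5 image with a vertical edge between columns 2 and 3.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Hypothetical 3x3 vertical-edge kernel; the same 9 weights are reused everywhere.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

Because the same small kernel is reused at every position, the layer needs only nine weights regardless of image size, which is one way to read the efficiency gain described above.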

Neural network algorithms have become the core of big data processing. AI performs deep learning on massive amounts of labeled data, optimizes neural networks and models, and then moves into the application stage of inference and decision-making. The 1990s saw the rapid rise of machine learning and neural network algorithms, which were put to commercial use with the support of growing computing power. Since the 1990s, practical applications of AI technology have included data mining, industrial robots, logistics, speech recognition, banking software, medical diagnosis, and search engines. The frameworks for these algorithms have become a focus of the technology giants' strategic positioning.

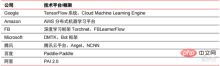

▲ Algorithm platform framework of major technology giants

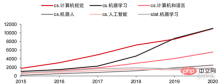

In terms of technical direction, computer vision and machine learning are the main areas of research and development. According to arXiv data, from a theoretical research perspective, computer vision and machine learning developed rapidly from 2015 to 2020, followed by robotics. In 2020, publications in computer vision exceeded 11,000, ranking first among AI-related publications on arXiv.

▲ Number of AI-related publications on arXiv from 2015 to 2020

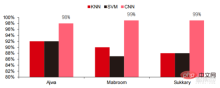

Over the past five years, we have observed that neural network algorithms, mainly CNN and DNN, have been the fastest-growing machine learning algorithms. Their excellent performance in computer vision, natural language processing, and other fields has significantly accelerated the implementation of artificial intelligence applications and is a key factor in the rapid maturing of computer vision and decision-making intelligence. As the comparison shows, the standard DNN method has clear advantages over traditional KNN, SVM, and random forest methods in speech recognition tasks.

▲ The convolution algorithm breaks through the accuracy bottleneck of traditional image processing and is available for industrialization for the first time.

In terms of training cost, the cost of training artificial intelligence with neural network algorithms has fallen significantly. ImageNet is a dataset of more than 14 million images used to train artificial intelligence algorithms. According to tests by the Stanford DAWNBench team, training a modern image recognition system cost only about US$7.5 in 2020, a drop of more than 99% from US$1,100 in 2017. This is mainly due to better algorithm design, lower computing power costs, and advances in large-scale AI training infrastructure. The faster a system can be trained, the faster it can be evaluated and updated with new data, which further speeds up the training of ImageNet systems and raises the productivity of developing and deploying artificial intelligence systems.

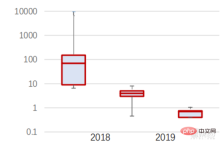

Looking at the training time distribution, the time required for neural network algorithm training has been reduced across the board. By analyzing the distribution of training time in each period, it is found that in the past few years, the training time has been greatly shortened and the distribution of training time has become more concentrated, which mainly benefits from the widespread use of accelerator chips.

▲ ImageNet training time distribution (minutes)

Driven by convolutional neural networks, computer vision accuracy scores have improved significantly, and the technology is now in the industrialization stage. Computer vision accuracy has made tremendous progress over the past decade, mainly due to the application of machine learning. The Top-1 accuracy test measures how well an AI system assigns the correct label to an image: a prediction counts as correct only when the system's single top prediction (among all possible labels) matches the target label. With additional training data (such as photos from social media), systems made 1 error per 10 attempts on the Top-1 test in January 2021, compared with 4 errors per 10 attempts in December 2012. Another accuracy test, Top-5, asks whether the target label is among the classifier's top five predictions. Top-5 accuracy increased from 85% in 2013 to 99% in 2021, exceeding the human-level score of 94.9%.
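
The two metrics described above differ only in how many of the classifier's highest-ranked labels are allowed to match the target. Below is a minimal sketch: the function name `top_k_accuracy` and the score table are made up for illustration and are not real ImageNet results.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], targets: Sequence[int], k: int
) -> float:
    """Fraction of samples whose target label is among the k highest-scoring labels."""
    hits = 0
    for sample_scores, target in zip(scores, targets):
        ranked = sorted(
            range(len(sample_scores)),
            key=lambda label: sample_scores[label],
            reverse=True,
        )
        if target in ranked[:k]:
            hits += 1
    return hits / len(targets)


# Hypothetical classifier scores for 4 images over 6 labels.
scores = [
    [0.10, 0.60, 0.05, 0.05, 0.10, 0.10],  # target 1 is ranked first
    [0.30, 0.25, 0.20, 0.15, 0.07, 0.03],  # target 2 is ranked third
    [0.05, 0.05, 0.10, 0.20, 0.50, 0.10],  # target 4 is ranked first
    [0.40, 0.30, 0.15, 0.10, 0.04, 0.01],  # target 5 is ranked last
]
targets = [1, 2, 4, 5]

top1 = top_k_accuracy(scores, targets, k=1)
top5 = top_k_accuracy(scores, targets, k=5)
print(f"Top-1: {top1:.0%}, Top-5: {top5:.0%}")
```

On this toy data the second image is a Top-1 miss but a Top-5 hit, which is why Top-5 scores are always at least as high as Top-1 scores for the same classifier.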

▲ TOP-1 accuracy change

▲TOP-5 accuracy change

In the course of neural network algorithm development, Transformer models have become mainstream over the past five years, consolidating the many scattered small models of the past. The Transformer is a classic NLP model introduced by Google in 2017 (BERT is built on the Transformer). The core of the model usually consists of two parts, the encoder and the decoder. Each is composed mainly of two modules: a feedforward neural network (the blue part in the picture) and an attention mechanism (the rose-red part in the picture). The decoder usually has an additional (cross-)attention mechanism. The encoder and decoder use these modules to weigh and refocus on different parts of the data. The model outperforms RNNs and CNNs in machine translation tasks, achieves good results with only the encoder/decoder structure, and can be parallelized efficiently.
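
The attention mechanism mentioned above can be sketched in a few lines. The example below is a simplified, pure-Python version of scaled dot-product attention for a single head. It omits the learned query/key/value projections, multiple heads, masking, and the feedforward layers of a real Transformer, and the token vectors are made-up values.

```python
import math
from typing import List

Vector = List[float]


def softmax(values: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """For each query, return a weighted average of `values`.

    The weights are softmax(q . k / sqrt(d_k)) taken over all keys.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:  # each query is independent, so this loop parallelizes
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[dim] for w, v in zip(weights, values))
            for dim in range(len(values[0]))
        ])
    return outputs


# Three hypothetical 2-dimensional token vectors; self-attention uses them as Q, K and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = scaled_dot_product_attention(tokens, tokens, tokens)
for row in attended:
    print([round(x, 3) for x in row])
```

Each output row is a mixture of all token vectors, weighted by similarity to the query. Since no step depends on the previous token's output, all rows can be computed at once, which is the property behind the efficient parallelization noted above.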

The move toward large AI models is a new trend that has emerged in the past two years. The approach of self-supervised learning, pre-trained models, and fine-tuning and adaptation is gradually becoming mainstream, and with the support of big data it has become possible for AI models to generalize. Traditional small models are trained on labeled data from a specific field and have poor versatility: they often do not carry over to another application scenario and must be retrained. Large AI models are usually trained on large-scale unlabeled data and can then be fine-tuned to meet the needs of a variety of application tasks. Led by organizations such as OpenAI, Google, Microsoft, Facebook, and NVIDIA, the deployment of large-scale intelligent models has become a leading global trend and has produced foundation models with very large parameter counts, such as GPT-3 and Switch Transformer.

The Megatron-LM jointly developed by NVIDIA and Microsoft at the end of 2021 has 8.3 billion parameters, while the Megatron developed by Facebook has 11 billion parameters. These parameters are trained mostly on data from Reddit, Wikipedia, news websites, and similar sources. Tools such as data lakes, which are needed to store and analyze large amounts of data, will be one of the focuses of the next stage of research and development.

5. Application scenarios: gradually implemented in security, Internet, retail and other fields

Currently, the most mature technologies on the application side are speech recognition, image recognition, and the like. Around these fields, a large number of companies have gone public in both China and the United States, and industrial clusters have formed. In speech recognition, relatively mature listed companies include iFlytek and Nuance, which was previously acquired by Microsoft for US$29 billion.

Smart medical care: AI in medical care is mostly used in assistive scenarios. AI products in the medical and health field cover multiple application scenarios such as intelligent consultation, medical history collection, voice electronic medical records, medical voice entry, medical imaging diagnosis, intelligent follow-up, and medical cloud platforms. From the perspective of the hospital treatment process, pre-diagnosis products are mostly voice assistant products, such as patient guidance and medical history collection. In-diagnosis products are mostly voice electronic medical records and image-assisted diagnosis, and post-diagnosis products are mainly follow-up tracking products. Looking across the products used in the entire treatment process, the main applications of AI in medical care are still assistive scenarios that take over doctors' physical and repetitive work. The leading overseas company in AI medical care is Nuance. 50% of the company's business comes from intelligent medical solutions, and clinical documentation transcription solutions, such as those for medical records, are the main source of revenue for its medical business.

Smart city: "Big city diseases" and new urbanization bring new challenges to urban governance and stimulate demand for AI-based urban governance. As the population and the number of motor vehicles grow in large and medium-sized cities, problems such as urban congestion are becoming more prominent. With the advance of new urbanization, smart cities will become the main development model for Chinese cities. The AI security and AI traffic management involved in smart cities will become the main solutions implemented on the government (G) side. In 2016, Hangzhou carried out its first "city data brain" transformation, and the peak congestion index dropped to below 1.7. At present, investment in city data brains, represented by Alibaba's, has exceeded 1.5 billion yuan, mainly in areas such as intelligent security and intelligent transportation. The scale of China's smart city industry continues to expand. The Forward-looking Industry Research Institute predicts that it will reach 25 trillion yuan in 2022, with an average annual compound growth rate of 55.27% from 2014 to 2022.

▲ 2014-2022 Smart City Market Scale and Forecast (Unit: Trillion Yuan)

Smart logistics: The market reached 571 billion yuan in 2020, and smart warehousing is set to become a market worth more than one hundred billion yuan. Against a backdrop of high costs and digital transformation in the logistics industry, warehousing logistics and product manufacturing urgently need automation, digitalization, and intelligent transformation to improve manufacturing and circulation efficiency. According to data from the China Federation of Logistics and Purchasing, China's smart logistics market reached 571 billion yuan in 2020, with an average annual compound growth rate of 21.61% from 2013 to 2020. New-generation information technologies such as the Internet of Things, big data, cloud computing, and artificial intelligence have not only promoted the development of the smart logistics industry but also raised the service requirements placed on it. The smart logistics market is expected to keep expanding. According to GGII estimates, China's smart warehousing market was nearly 90 billion yuan in 2019, and the Forward-looking Industry Research Institute predicts that it will exceed 150 billion yuan in 2025.

▲ China’s smart logistics market size and growth rate from 2013 to 2020

New retail: Artificial intelligence will reduce labor costs and improve operational efficiency. Amazon Go is the unmanned store concept proposed by Amazon. The store officially opened in Seattle, USA on January 22, 2018. Amazon Go combines cloud computing and machine learning, applying Just Walk Out Technology and Amazon Rekognition. In-store cameras, sensors, and the machine learning algorithms behind them identify the items consumers take, and checkout happens automatically when customers leave the store. This is a brand-new revolution in retail.

Cloud-based artificial intelligence components are currently the main direction the major Internet giants are taking to commercialize artificial intelligence: they integrate AI technology into public cloud services and sell it that way. Google Cloud Platform's AI technology has long been at the forefront of the industry, and Google is committed to integrating advanced AI technology into its cloud computing services. In recent years, Google has acquired a number of AI companies and launched products such as its AI-specific TPU chips and the Cloud AutoML cloud service to round out its portfolio. At present, Google's AI capabilities cover cognitive services, machine learning, robotics, data analysis & collaboration, and other fields. Unlike some cloud vendors whose AI products are relatively scattered, Google runs its AI products in a more complete and systematic way. It builds vertical applications into basic AI components and integrates TensorFlow and TPU computing into its infrastructure, forming a complete AI platform service.

Baidu is the public cloud vendor with the strongest AI capabilities in China, and the core strategy of Baidu AI is open empowerment. Baidu has built AI platforms represented by DuerOS and Apollo to open up its ecosystem and create a positive cycle of iteration between data and scenarios. Building on the data foundation of Baidu's Internet search, its natural language processing, knowledge graph, and user profiling technologies have gradually matured. At the platform and ecosystem level, Baidu Cloud is a large computing platform open to all partners, serving as a basic support platform that exposes the capabilities of Baidu Brain. There are also some vertical solutions, such as a new-generation operating system based on natural-language human-computer interaction, and Apollo for intelligent driving. Vehicle manufacturers and automotive electronics manufacturers can each call on the capabilities they need and jointly build the platform and ecosystem.

3. Industrial changes: AI large models have gradually become mainstream, and industrial development is expected to accelerate across the board

In recent years, the technological evolution of the AI industry has shown the following main characteristics: the performance of the underlying modules keeps improving, and attention is focused on the generalization ability of models, which helps make AI algorithms more versatile and in turn feeds back into data collection. The sustainable development of AI technology relies on breakthroughs in underlying algorithms, which in turn require basic capabilities built around computing power and a big-data environment for learning knowledge and experience. The rapid adoption of large models in the industry, the operating mode of large models plus small models, the continuous improvement of underlying links such as chips and computing infrastructure, and the resulting growth in the range and depth of application scenarios will ultimately bring continuous mutual reinforcement between basic industrial capabilities and application scenarios, driving the global AI industry to keep accelerating in a positive cycle.

Large models bring strong general problem-solving capabilities. Most AI development today is still at the "manual workshop" stage: faced with downstream applications across many industries, AI has become increasingly fragmented and diversified, and models are not very general. Large models offer a feasible way to improve general problem-solving capability, namely "pre-training a large model, then fine-tuning it for downstream tasks". This approach captures knowledge from large amounts of labeled and unlabeled data, stores that knowledge in a large number of parameters, and then fine-tunes for specific tasks, thereby improving the model's generalization ability.

Large models are expected to break through the accuracy limits of existing model structures and, combined with the training of nested small models, to further improve model efficiency in specific scenarios. Over the past ten years, improvements in model accuracy relied mainly on changes in network structure. As neural network architecture design has matured and converged, however, accuracy gains have hit a bottleneck, which large models are expected to break through. Take Google's visual transfer model Big Transfer (BiT) as an example: training ResNet50 on two data sets, ILSVRC-2012 (1.28 million images, 1,000 categories) and JFT-300M (300 million images, 18,291 categories), yields accuracies of 77% and 79% respectively. Using a larger model raises the bottleneck accuracy further: training ResNet152x4 on JFT-300M increases accuracy to 87.5%, which is 10.5 percentage points higher than ResNet50 trained on ILSVRC-2012.
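As a small worked check of the BiT figures quoted above, the following sketch separates the gain that comes from the larger data set from the gain that comes from the larger model. The accuracy values are the ones given in the text; the dictionary layout and variable names are only illustrative.

```python
# Accuracies (%) as quoted in the text for the BiT example.
accuracy = {
    ("ResNet50", "ILSVRC-2012"): 77.0,
    ("ResNet50", "JFT-300M"): 79.0,
    ("ResNet152x4", "JFT-300M"): 87.5,
}

baseline = accuracy[("ResNet50", "ILSVRC-2012")]

# Gain from switching to the larger data set, same network.
data_gain = accuracy[("ResNet50", "JFT-300M")] - baseline

# Gain from switching to the larger network, same (large) data set.
model_gain = accuracy[("ResNet152x4", "JFT-300M")] - accuracy[("ResNet50", "JFT-300M")]

# Total gain in percentage points, and the same gain relative to the baseline.
total_gain = data_gain + model_gain
relative_gain = total_gain / baseline

print(f"data: +{data_gain} points, model: +{model_gain} points, total: +{total_gain} points")
print(f"relative improvement over baseline: {relative_gain:.1%}")
```

Read this way, the 10.5-point improvement is an absolute difference in accuracy; relative to the 77% baseline it is a somewhat larger proportional gain, and most of it comes from the larger model rather than the larger data set alone.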

Large models plus small models: promoting generalized large-model AI, combined with data optimization for specific scenarios, will be the key to commercializing the AI industry in the medium term. The earlier approach of collecting data afresh for each specific scenario has proven hard to make profitable: retraining a model costs too much, and the resulting model has low generality and is hard to reuse. As chip computing power keeps improving, combining a large model with nested small models gives vendors another option: they obtain a general-purpose model by analyzing massive data, then nest specific small models on top of it to optimize for different scenarios, saving substantial cost. Public cloud vendors such as Alibaba Cloud, Huawei Cloud, and Tencent Cloud are actively building their own large-model platforms to improve model generality.

AI chip giants, represented by Nvidia, have designed new engines in their latest generation of chips specifically for the AI models commonly used in the industry, greatly improving computing power. NVIDIA's Hopper architecture introduces the Transformer engine, which greatly accelerates AI training. The Transformer engine combines software with custom NVIDIA Hopper Tensor Core technology and is designed to accelerate the training of models built on the common AI building block known as the Transformer. These Tensor Cores can apply mixed FP8 and FP16 precision to significantly accelerate AI calculations for Transformer models, and Tensor Core operations in FP8 have twice the throughput of 16-bit operations. To preserve accuracy while using the smaller format, the Transformer engine relies on a custom, NVIDIA-tuned heuristic that dynamically selects between FP8 and FP16 computation and automatically handles re-casting and scaling between these precisions in each layer. According to data provided by NVIDIA, the Hopper architecture can be up to 9 times faster than the Ampere architecture when training Transformer models.

Under the large-model trend, cloud vendors are gradually becoming core players in the computing power market. As large models push the AI technology framework toward generalization, cloud vendors can combine their underlying IaaS capabilities with PaaS to provide general-purpose solutions for the market. With the emergence of large models, the amount of data that AI needs to process and analyze is growing day by day, and this data has shifted from the specialized data sets of the past to general-purpose big data. Cloud computing giants can combine their powerful PaaS capabilities with their IaaS foundation to provide one-stop data processing for AI vendors. This has also helped cloud computing giants become one of the main beneficiaries of this wave of artificial intelligence.
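To make the idea of choosing between FP8 and FP16 per tensor, with scaling, more concrete, here is a deliberately simplified sketch. It is not NVIDIA's heuristic, which the text does not describe in detail. The function name `choose_precision`, the range-based rule, and the use of the E4M3 variant of FP8 (largest finite value 448, smallest positive value 2^-9) are all assumptions made for illustration.

```python
# Hypothetical illustration only: NOT the actual Transformer engine heuristic.
FP8_E4M3_MAX = 448.0       # largest finite magnitude representable in FP8 E4M3
FP8_E4M3_MIN = 2.0 ** -9   # smallest positive (subnormal) magnitude in FP8 E4M3


def choose_precision(values):
    """Return (precision, scale) for one tensor, given as a flat list of floats.

    The tensor is scaled so that its largest magnitude maps to the top of the
    FP8 range. If the smallest nonzero magnitude would still be representable
    after scaling, FP8 is chosen; otherwise the sketch falls back to FP16
    with no scaling.
    """
    magnitudes = [abs(v) for v in values if v != 0.0]
    if not magnitudes:
        return "fp8", 1.0  # an all-zero tensor fits in any format

    amax, amin = max(magnitudes), min(magnitudes)
    scale = FP8_E4M3_MAX / amax
    if amin * scale >= FP8_E4M3_MIN:
        return "fp8", scale
    return "fp16", 1.0


# A tensor with a narrow dynamic range fits FP8 after scaling.
narrow = choose_precision([0.5, -2.0, 1.0])
# A tensor mixing tiny and huge values does not, so FP16 is kept.
wide = choose_precision([1e-9, 1000.0])
print(narrow, wide)
```

The point of the sketch is only the shape of the decision: a per-tensor scale factor is computed from the largest magnitude, and the lower-precision format is used when the values survive that scaling. A production heuristic would need to consider more than the extreme values of a single tensor.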

▲ Domestic cloud computing market size

Currently, international mainstream cloud vendors such as AWS and Azure, together with leading domestic cloud vendors such as Alibaba Cloud, Tencent Cloud, and Huawei Cloud, have begun to focus on PaaS capabilities such as data storage and data processing. In terms of storage, NoSQL databases will have more opportunities in the future as data types become increasingly complex. For example, Google Cloud has spread its offerings across object storage, traditional relational databases, and NoSQL databases. In terms of data processing, data lakes and data warehouses are becoming increasingly important. By filling out this part of their product lines, the cloud computing giants have built a complete data cycle and combined it with their underlying IaaS capabilities. A complete product line and a closed data cycle will be the biggest advantages for cloud computing giants competing in the AI middle layer in the future.

As the structure of the AI industry chain becomes clearer and large models substantially improve industrial operating efficiency and technical depth, we judge that in the medium term, assuming AI technology does not make a disruptive leap, value in the AI industry chain will gradually shift toward the two ends while the value of the intermediate links continues to weaken, gradually forming a typical industry chain structure of "chips, computing infrastructure, AI frameworks & algorithm libraries, and application scenarios". Under such an industrial structure, we expect upstream chip companies, cloud infrastructure vendors, and downstream application vendors to gradually become the core beneficiaries of the rapid development of the AI industry.

Large models bring a unified underlying technical architecture for AI and a huge demand for computing power, which naturally helps cloud computing companies play a fundamental role in this process: cloud providers have the most widely distributed and most powerful hardware computing facilities in the world, while AI frameworks and general algorithms are typical PaaS capabilities that tend to be integrated into cloud vendors' platform offerings. Therefore, in terms of both technical generality and actual business needs, cloud computing giants driven by large models are expected to gradually become the main providers of computing facilities and basic algorithm framework capabilities, and to keep eroding the business space of existing AI algorithm platform providers. The historical prices of cloud vendors' products show this. Taking AWS and Google products as examples, the on-demand price of Linux instances in the US East region has fallen step by step.

As the figure shows, the price of an m1.large instance with 2 vCPUs, 2 ECUs, and 7.5 GiB of memory has fallen steadily from about $0.4/hour in 2008 to about $0.18/hour in 2022. The on-demand price of Google Cloud's n1-standard-8 instance, with 8 vCPUs and 30 GB of memory, has likewise dropped from $0.5/hour in 2014 to $0.38/hour in 2022. Cloud computing prices are thus on an overall downward trend. Over the next 3-5 years, we will see more AI-as-a-service (AIaaS) offerings. The large-model trend discussed earlier, especially the birth of GPT-3, set off this wave. Because GPT-3 has such a huge number of parameters, it must run on very large public cloud computing facilities at the scale of Azure, so Microsoft has made it a service accessible through a web API, which will also encourage more large models to emerge.

▲ AWS EC2 historical standardized price (USD/hour)
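The price points quoted above can be turned into a total decline and an average annual rate of change. The sketch below uses only the start and end prices given in the text and assumes a smooth compound change between them, which is a simplification, since real list prices fall in discrete steps. The helper names are illustrative.

```python
def total_change(start_price, end_price):
    """Fractional change from start to end (negative means a price drop)."""
    return end_price / start_price - 1


def annual_change(start_price, end_price, years):
    """Compound annual rate of change implied by the two end points."""
    return (end_price / start_price) ** (1 / years) - 1


# AWS m1.large: about $0.40/hour in 2008 to about $0.18/hour in 2022.
aws_total = total_change(0.40, 0.18)
aws_annual = annual_change(0.40, 0.18, 2022 - 2008)

# Google Cloud n1-standard-8: $0.50/hour in 2014 to $0.38/hour in 2022.
gcp_total = total_change(0.50, 0.38)
gcp_annual = annual_change(0.50, 0.38, 2022 - 2014)

print(f"AWS m1.large:       {aws_total:.0%} overall, {aws_annual:.1%} per year")
print(f"GCP n1-standard-8:  {gcp_total:.0%} overall, {gcp_annual:.1%} per year")
```

On these figures the AWS instance lost a little over half of its hourly price across 14 years and the Google Cloud instance about a quarter across 8 years, which corresponds to average declines of a few percent per year in both cases.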

Supported by current computing power and foreseeable technical capabilities, the application side will continue to iterate and optimize algorithms through data acquisition, address the shortcomings that remain in today's cognitive intelligence (in the direction of image recognition), and try to move toward decision-making intelligence. Given current technical capabilities and hardware computing power, achieving full decision-making intelligence will still take a long time; building local intelligence by continuing to deepen existing scenarios will be the main direction over the next 3-5 years. Current AI applications are still too isolated at single points, and connecting them locally will be the first step toward decision-making intelligence. AI software applications will span everything from low-level drivers to upper-level applications and algorithm frameworks, and will extend from business-oriented fields (manufacturing, finance, logistics, retail, real estate, etc.) to people-oriented fields (metaverse, medical, humanoid robots, etc.), autonomous driving, and other areas.

Zhidongxi believes that with the continuous improvement of basic elements such as AI chips, computing facilities, and data, together with the substantial improvement in general problem-solving ability brought by large models, the AI industry is forming a stable industrial value chain structure of "chips, computing infrastructure, AI frameworks & algorithm libraries, and application scenarios". AI chip manufacturers, cloud computing vendors (computing facilities plus algorithm frameworks), AI application scenario vendors, platform algorithm framework vendors, and others are expected to continue to be core beneficiaries of the industry.
