It's really so smooth: NeuralHDHair, a new 3D hair modeling method, jointly produced by Zhejiang University, ETH Zurich, and CityU

In recent years the virtual digital human industry has boomed, and companies in many fields are launching their own digital human avatars. High-fidelity 3D hair models can significantly enhance the realism of these virtual humans. Unlike other parts of the body, hair is hard to describe and extract because its strands are intricately intertwined, which makes reconstructing a high-fidelity 3D hair model from a single view extremely difficult. Existing methods generally solve the problem in two steps: they first estimate a 3D orientation field from the 2D orientation map extracted from the input image, and then synthesize hair strands by following that 3D orientation field. In practice, however, this mechanism still has some problems.

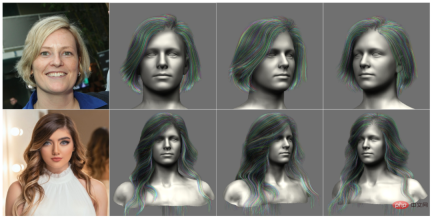

Based on these practical observations, the researchers set out to build a fully automatic and efficient hair modeling method that reconstructs a 3D hair model with fine-grained features from a single image (Figure 1) while remaining highly flexible; for example, reconstructing a hair model requires only one forward pass of the network.

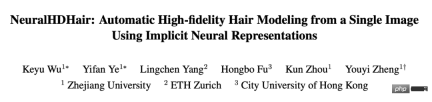

To solve these problems, researchers from Zhejiang University, ETH Zurich (Switzerland), and City University of Hong Kong proposed IRHairNet, which uses a coarse-to-fine strategy to generate high-fidelity 3D orientation fields. Specifically, they introduced a novel voxel-aligned implicit function (VIFu) to extract information from the 2D orientation map in the coarse module. In addition, to compensate for the local details lost in the 2D orientation map, the researchers extract local features from a high-resolution brightness map and combine them with the global features in the fine module for high-fidelity hair modeling.
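To make the voxel-aligned idea concrete, here is a minimal NumPy sketch. It is not IRHairNet's implementation: the feature volume, the tiny two-layer MLP, and the output layout (one occupancy logit plus three orientation components) are assumptions chosen for illustration. The point it shows is that a query point reads its feature from a 3D grid at its own location, via trilinear interpolation, and an MLP decodes that feature together with the point's coordinates.

```python
import numpy as np

def trilinear_sample(grid, p):
    """Sample a feature volume of shape (D, H, W, C) at continuous voxel coordinates p = (z, y, x)."""
    hi = np.array(grid.shape[:3]) - 1
    p = np.clip(np.asarray(p, dtype=float), 0, hi)
    p0 = np.floor(p).astype(int)          # lower corner of the enclosing cell
    p1 = np.minimum(p0 + 1, hi)           # upper corner, clamped at the border
    t = p - p0                            # fractional position inside the cell
    out = np.zeros(grid.shape[-1])
    for dz in (0, 1):
        for dy in (0, 1):
            for dx in (0, 1):
                corner = (p1[0] if dz else p0[0],
                          p1[1] if dy else p0[1],
                          p1[2] if dx else p0[2])
                w = ((t[0] if dz else 1 - t[0])
                     * (t[1] if dy else 1 - t[1])
                     * (t[2] if dx else 1 - t[2]))
                out += w * grid[corner]
    return out

def vifu_query(volume, p, W1, b1, W2, b2):
    """Hypothetical voxel-aligned query: volume feature at p, concatenated with p, fed to a tiny MLP."""
    x = np.concatenate([trilinear_sample(volume, p), np.asarray(p, dtype=float)])
    h = np.maximum(0.0, W1 @ x + b1)      # ReLU hidden layer
    out = W2 @ h + b2                     # [occupancy logit, 3 orientation components]
    occupancy = 1.0 / (1.0 + np.exp(-out[0]))
    orientation = out[1:4] / (np.linalg.norm(out[1:4]) + 1e-8)
    return occupancy, orientation

# Usage with random (untrained) weights, only to show the shapes involved.
rng = np.random.default_rng(0)
C, hidden = 8, 16
volume = rng.standard_normal((4, 4, 4, C))
W1, b1 = rng.standard_normal((hidden, C + 3)), np.zeros(hidden)
W2, b2 = rng.standard_normal((4, hidden)), np.zeros(4)
occ, ori = vifu_query(volume, (1.5, 2.25, 0.75), W1, b1, W2, b2)
```

Trilinear interpolation keeps the sampled feature continuous as the query point moves between voxels, which is what allows an implicit function to be queried at arbitrary positions rather than only at grid cells.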

To synthesize hair models efficiently from 3D orientation fields, the researchers introduced GrowingNet, a deep-learning-based hair growth method that uses a local implicit grid representation. It rests on a key observation: although the geometry and growth direction of hair strands differ globally, they share similar characteristics at a sufficiently local scale. A high-level latent code can therefore be extracted for each local 3D orientation patch, and a neural implicit function (a decoder) is trained to grow hair strands inside the patch based on that latent code. After each growth step, a new local patch centered on the end of the strand is used to continue growing. Once trained, the network can be applied to 3D orientation fields of any resolution.
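The patch-by-patch growth loop can be sketched as follows. This is only a structural illustration: GrowingNet's encoder and decoder are trained networks, whereas here the hypothetical `encode_patch` simply averages the directions in the patch and the hypothetical `decode_steps` walks a few fixed-size steps along that average. Coordinates are (z, y, x) voxel indices, and the patch radius, step size, and steps per patch are arbitrary choices.

```python
import numpy as np

def extract_patch(field, center, r):
    """Return the (2r+1)^3 block of an orientation field (D, H, W, 3) around the voxel
    nearest to `center` (z, y, x); voxels outside the field are zero-padded."""
    hi = np.array(field.shape[:3]) - 1
    c = np.clip(np.round(np.asarray(center)).astype(int), 0, hi) + r  # index in padded array
    padded = np.pad(field, ((r, r), (r, r), (r, r), (0, 0)))
    return padded[c[0] - r:c[0] + r + 1, c[1] - r:c[1] + r + 1, c[2] - r:c[2] + r + 1]

def encode_patch(patch):
    """Stand-in for a learned encoder: the 'latent code' is just the mean direction."""
    v = patch.reshape(-1, 3).mean(axis=0)
    n = np.linalg.norm(v)
    return v / n if n > 1e-8 else None

def decode_steps(latent, n_steps, step):
    """Stand-in for a learned decoder: offsets of the next points relative to the patch center."""
    return np.outer(np.arange(1, n_steps + 1), latent) * step

def grow_strand(field, root, r=2, n_steps=3, step=0.5, max_patches=50):
    hi = np.array(field.shape[:3]) - 1
    points = [np.asarray(root, dtype=float)]
    for _ in range(max_patches):
        end = points[-1]
        if np.any(end < 0) or np.any(end > hi):   # strand has left the volume
            break
        latent = encode_patch(extract_patch(field, end, r))
        if latent is None:                        # empty patch: nothing to follow
            break
        # Local offsets are shifted back to global coordinates by adding the patch center.
        points.extend(end + offset for offset in decode_steps(latent, n_steps, step))
    return np.array(points)

# Usage: a uniform field pointing along +x grows a straight strand.
field = np.zeros((4, 4, 8, 3))
field[..., 2] = 1.0
strand = grow_strand(field, (2, 2, 0))
```

Because the encoder and decoder only ever see a fixed-size patch expressed relative to the strand end, they never depend on the size of the whole field, which is consistent with the article's claim that the trained network works on orientation fields of any resolution.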

Paper: https://arxiv.org/pdf/2205.04175.pdf

IRHairNet and GrowingNet form the core of NeuralHDHair. Specifically, the main contributions of this research include:

- A novel, fully automatic monocular hair modeling framework whose performance is significantly better than existing SOTA methods;

- A coarse-to-fine hair modeling neural network (IRHairNet) that uses a novel voxel-aligned implicit function and a brightness map to enrich local details for high-quality hair modeling;

- A new hair growth network (GrowingNet) based on local implicit functions, which efficiently generates hair models of any resolution and achieves an order-of-magnitude speedup over previous methods.

Method

Figure 2 shows the NeuralHDHair pipeline. Given a portrait image, its 2D orientation map is first computed and its brightness map is extracted. The image is also automatically aligned to a shared reference bust model to obtain a bust depth map. These three maps are then fed into IRHairNet.
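The article does not say how the 2D orientation map is computed. A common approach in single-view hair modeling is to filter the image with a bank of oriented filters and keep, for each pixel, the orientation with the strongest response. The sketch below assumes that approach using complex Gabor kernels; the filter parameters (sigma, wavelength, kernel size, number of orientations) are illustrative guesses, not values from the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gabor_kernel(theta, sigma=2.0, lam=4.0, size=9):
    """Complex Gabor kernel whose stripes run along direction theta (radians from the x-axis)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    u = x * np.cos(theta) + y * np.sin(theta)     # coordinate along the strand direction
    v = -x * np.sin(theta) + y * np.cos(theta)    # coordinate across the strand direction
    k = np.exp(-(u**2 + v**2) / (2 * sigma**2)) * np.exp(1j * 2 * np.pi * v / lam)
    return k - k.mean()                           # zero mean: ignore flat brightness

def orientation_map(img, n_orient=16, size=9):
    """Per-pixel dominant orientation in [0, pi) and its filter response (a confidence)."""
    half = size // 2
    padded = np.pad(np.asarray(img, dtype=float), half, mode="reflect")
    windows = sliding_window_view(padded, (size, size))      # shape (H, W, size, size)
    thetas = np.pi * np.arange(n_orient) / n_orient
    # Magnitude of the complex response does not depend on the stripe phase.
    responses = np.stack([np.abs(np.einsum("hwij,ij->hw", windows, gabor_kernel(t, size=size)))
                          for t in thetas])                   # shape (n_orient, H, W)
    return thetas[responses.argmax(axis=0)], responses.max(axis=0)

# Usage: synthetic "hair" made of horizontal stripes (strands running along x).
yy, xx = np.mgrid[0:32, 0:32]
theta_h, conf_h = orientation_map(np.cos(2 * np.pi * yy / 4))
```

Orientation is only defined modulo pi here because a filter cannot tell the root end of a strand from the tip; resolving the actual growth direction is part of what the later 3D stages have to handle.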

- IRHairNet is designed to generate high-resolution 3D hair geometry features from a single image. Its inputs are the 2D orientation map, the brightness map, and the fitted bust depth map, all obtained from the input portrait image. It outputs a 3D orientation field, in which each voxel stores a local growth direction, and a 3D occupancy field, in which each voxel indicates whether a hair strand passes through it (1) or not (0).

- GrowingNet is designed to efficiently generate a complete hair model from the 3D orientation field and 3D occupancy field estimated by IRHairNet, where the 3D occupancy field is used to limit the hair growth area.
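For reference, a conventional (non-learned) hair growth procedure, like the traditional algorithm used as a baseline in the ablation, can be pictured as step-by-step integration through the orientation field. The sketch below assumes nearest-voxel lookup and a fixed step size, both of which are illustrative choices. It shows how the occupancy field confines growth: a strand stops as soon as its next point would fall in an unoccupied voxel or outside the volume.

```python
import numpy as np

def _voxel(p, occ):
    """Index of the voxel nearest to p = (z, y, x), or None if outside the volume or unoccupied."""
    v = np.round(p).astype(int)
    if np.any(v < 0) or np.any(v >= occ.shape) or occ[tuple(v)] == 0:
        return None
    return tuple(v)

def trace_strand(orient, occ, root, step=0.5, max_steps=200):
    """Euler-integrate a strand through orient (D, H, W, 3), limited to voxels where occ == 1."""
    p = np.asarray(root, dtype=float)
    if _voxel(p, occ) is None:
        return np.empty((0, 3))               # root is not inside the hair volume
    pts = [p]
    for _ in range(max_steps):
        nxt = p + step * orient[_voxel(p, occ)]
        if _voxel(nxt, occ) is None:          # next point would leave the occupied region
            break
        p = nxt
        pts.append(p)
    return np.array(pts)

# Usage: growth direction +x everywhere, but hair only occupies x < 6.
orient = np.zeros((4, 4, 10, 3))
orient[..., 2] = 1.0
occ = np.zeros((4, 4, 10))
occ[:, :, :6] = 1
strand = trace_strand(orient, occ, (1, 1, 0))
```

Every step requires a field lookup and a boundary check per point, which is the per-point cost that GrowingNet's patch-level decoding is designed to reduce.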

For more method details, please refer to the original paper.

Experiment

In this section, the researchers first evaluate the effectiveness and necessity of each algorithmic component through ablation studies (Section 4.1), and then compare their method with current SOTA methods (Section 4.2). Implementation details and more experimental results are given in the supplementary material.

Ablation Experiment

The researchers evaluated the fidelity and efficiency of GrowingNet both qualitatively and quantitatively. First, three sets of experiments were conducted on synthetic data: 1) the traditional hair growth algorithm, 2) GrowingNet without the overlapping latent patch scheme, and 3) the full model.

As shown in Figure 4 and Table 1, compared with the traditional hair growth algorithm, GrowingNet is much faster while matching its growth quality visually. In addition, comparing the third and fourth columns of Figure 4 shows that without the overlapping latent patch scheme, hair strands may be discontinuous at patch boundaries, and the problem becomes more severe where the growth direction of the strands changes sharply. Note, however, that this approach greatly improves efficiency at the cost of a slight loss of accuracy. The efficiency gain matters for convenient, practical use in human digitization.
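Why overlap removes boundary seams can be shown with a one-dimensional toy. The article does not describe how the overlapping latent patch scheme works; this sketch borrows the linear (in 3D, trilinear) blending of neighboring patch outputs used in local implicit grid representations, which is an assumption. Each hypothetical patch decoder is simply a linear function of the local coordinate, standing in for a local prediction such as a growth angle.

```python
import numpy as np

# Hypothetical per-patch decoders: patch c predicts A[c] + B[c] * u at local offset u.
A = np.array([0.0, 1.0, 0.5, 2.0])
B = np.array([0.3, -0.2, 0.8, 0.1])

def decode(c, u):
    return A[c] + B[c] * u

def query_non_overlapping(x):
    """Each point belongs to exactly one patch [c, c + 1); valid for 0 <= x < 4."""
    c = int(np.floor(x))
    return decode(c, x - c)

def query_overlapping(x):
    """Patches centered at i and i + 1 both cover x; blend them linearly. Valid for 0 <= x < 3."""
    i = int(np.floor(x))
    t = x - i                              # weight of the right-hand patch
    return (1 - t) * decode(i, x - i) + t * decode(i + 1, x - (i + 1))

# Evaluate just left and right of the patch boundary at x = 1.
eps = 1e-9
jump_plain = abs(query_non_overlapping(1 + eps) - query_non_overlapping(1 - eps))
jump_blend = abs(query_overlapping(1 + eps) - query_overlapping(1 - eps))
```

With blending, the value at a boundary is fully determined by the patch centered there, so it agrees from both sides; without overlap, two independently decoded patches meet with no such guarantee, which is the kind of discontinuity the ablation reports at patch boundaries.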

Comparison with SOTA methods

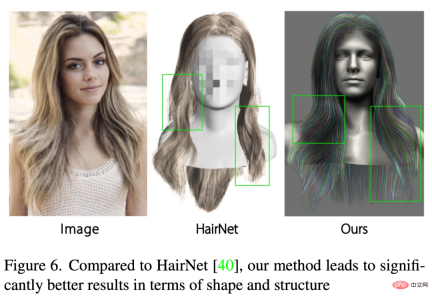

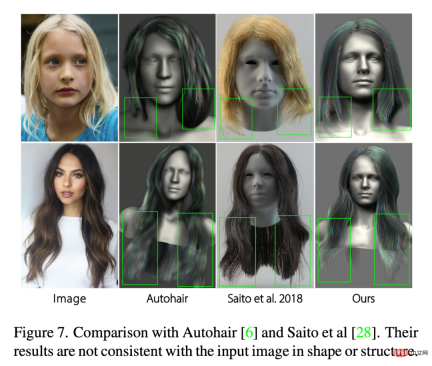

To evaluate the performance of NeuralHDHair, the researchers compared it with several SOTA methods [6, 28, 30, 36, 40]. Among them, Autohair [6] synthesizes hair with a data-driven approach, while HairNet [40] skips the hair growth process to achieve end-to-end hair modeling. In contrast, [28, 36] follow a two-step strategy, first estimating a 3D orientation field and then synthesizing hair strands from it. PIFuHD [30] is a monocular high-resolution 3D modeling method based on a coarse-to-fine strategy, and it can also be applied to 3D hair modeling.

As shown in Figure 6, HairNet's results look passable at first glance, but their local details and even their overall shape are inconsistent with the hair in the input image. This is because the method synthesizes hair in a simple, coarse way, recovering unordered hair strands directly from a single image.

The reconstruction results were also compared with Autohair [6] and Saito et al. [28]. As shown in Figure 7, although Autohair can synthesize realistic results, they do not match the structure of the input image well because its database contains a limited set of hairstyles. Saito's results, on the other hand, lack local detail and have shapes inconsistent with the input image. In contrast, the results of this method better preserve both the global structure and the local details of the hair while keeping the hair shape consistent with the input.

PIFuHD [30] and Dynamic Hair [36] both aim to estimate high-fidelity 3D hair geometry features in order to generate realistic strand-level hair models. Figure 8 shows two representative comparisons. The pixel-aligned implicit function used in PIFuHD cannot fully represent complex hair, so its results are overly smooth, lack local detail, and sometimes lack even a plausible global structure. Dynamic Hair produces more plausible results, though with less detail: the overall growth trend of its hair matches the input image well, but many local structural details (such as layering) are not captured, especially for complex hairstyles. In contrast, the proposed method adapts to different hairstyles, even extremely complex ones, and makes full use of global features and local details to generate high-fidelity, high-resolution 3D hair models with richer detail.
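One intuition for the PIFuHD result, offered as an interpretation rather than the paper's own analysis: a pixel-aligned implicit function reads its image feature at the 2D projection of the query point, so every point along the same camera ray shares that feature and differs only in an appended depth value. A voxel-aligned lookup reads a separate feature for each 3D location. The toy below assumes orthographic projection, nearest-neighbor lookup, and (x, y, z) coordinates; the function names are hypothetical.

```python
import numpy as np

def pixel_aligned_feature(feat2d, p):
    """Project (x, y, z) orthographically to (x, y), read a 2D feature map (H, W, C),
    and append the depth z as a separate scalar."""
    x, y, z = p
    return np.append(feat2d[int(round(y)), int(round(x))], z)

def voxel_aligned_feature(feat3d, p):
    """Read a 3D feature volume (D, H, W, C) at the voxel containing (x, y, z)."""
    x, y, z = p
    return feat3d[int(round(z)), int(round(y)), int(round(x))]

rng = np.random.default_rng(1)
feat2d = rng.standard_normal((8, 8, 4))
feat3d = rng.standard_normal((8, 8, 8, 4))
front, back = (2, 3, 1), (2, 3, 5)          # same pixel, different depths
pix_front = pixel_aligned_feature(feat2d, front)
pix_back = pixel_aligned_feature(feat2d, back)
```

In the pixel-aligned case the two points differ only in their last entry (the depth), so the decoder must separate front and back hair layers from a single scalar; that limitation is one plausible reason layered hairstyles come out over-smoothed.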
