
Systematic review of deep reinforcement learning pre-training: online and offline research in one survey


In recent years, reinforcement learning (RL) has developed rapidly, driven by deep learning. Breakthroughs in fields ranging from games to robotics have stimulated interest in designing complex, large-scale RL algorithms and systems. However, in existing RL research agents typically learn from scratch when faced with a new task. This makes it difficult to use previously acquired prior knowledge to assist decision-making and leads to high computational cost.

In supervised learning, the pre-training paradigm has proven to be an effective way to obtain transferable prior knowledge. After pre-training on large-scale datasets, a network can quickly adapt to different downstream tasks. Similar ideas have been tried in RL, notably in recent research on "generalist" agents [1, 2], which raises the question of whether a universal pre-trained model like GPT-3 [3] could also emerge in the RL field.

However, applying pre-training in RL faces many challenges: upstream and downstream tasks can differ significantly, pre-training data must be obtained and used efficiently, and prior knowledge must be transferred effectively. These issues hinder the successful application of the pre-training paradigm in RL. At the same time, previous studies differ greatly in their experimental settings and methods, which makes it difficult for researchers to design appropriate pre-training models for real-world scenarios.

To map out the development of pre-training in RL and its possible future directions, researchers from Shanghai Jiao Tong University and Tencent wrote a survey that discusses existing RL pre-training methods under different settings, along with the problems that remain to be solved.

Paper address: https://arxiv.org/pdf/2211.03959.pdf

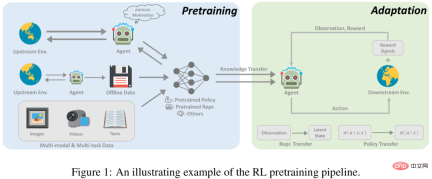

Introduction to RL pre-training

Reinforcement learning (RL) provides a general mathematical framework for sequential decision-making. By combining RL algorithms with deep neural networks, agents that learn in a data-driven manner to optimize a specified reward function have surpassed human performance in a variety of applications across different fields. However, although RL has proven effective at solving specified tasks, sample efficiency and generalization remain two major obstacles to applying RL in the real world. In RL research, the standard paradigm is for an agent to learn from experience collected by itself or by others, optimizing a randomly initialized neural network for a single task. For humans, by contrast, prior knowledge of the world greatly aids decision-making: when a task is related to ones seen before, humans tend to reuse what they have already learned to adapt quickly, without learning from scratch. Compared with humans, therefore, RL agents are data-inefficient and prone to overfitting.
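For reference, the "general mathematical framework" can be written in its standard textbook form. The notation below is ours, not taken from the survey: states $s_t$, actions $a_t \sim \pi(\cdot \mid s_t)$, reward function $r$, and discount factor $\gamma \in [0, 1)$. An RL agent seeks the policy that maximizes the expected discounted return:

$$
J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t}\, r(s_t, a_t)\right], \qquad \pi^{*} = \arg\max_{\pi} J(\pi)
$$

In the standard paradigm described above, this optimization starts from a randomly initialized network for each new task; pre-training aims to provide a better starting point.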

Meanwhile, recent advances in other areas of machine learning actively advocate leveraging prior knowledge built through large-scale pre-training. By training at scale on broad data, large foundation models can be quickly adapted to a variety of downstream tasks. This pretraining-finetuning paradigm has proven effective in fields such as computer vision and natural language processing. Pre-training, however, has not yet had a significant impact on RL. Although the approach is promising, establishing design principles for large-scale RL pre-training faces many challenges: 1) the diversity of domains and tasks; 2) limited data sources; 3) the difficulty of rapidly adapting to solve downstream tasks. These factors stem from the inherent characteristics of RL and require special consideration by researchers.

Pre-training has great potential for RL, and this survey can serve as a starting point for anyone interested in this direction. In it, the researchers attempt a systematic review of existing work on pre-training for deep reinforcement learning.

In recent years, pre-training for deep RL has seen several breakthroughs. First, pre-training on expert demonstrations, which uses supervised learning to predict the actions taken by experts, was used in AlphaGo. Second, in pursuit of large-scale pre-training with less supervision, the field of unsupervised RL has grown rapidly; it allows agents to learn from interaction with the environment without reward signals. In addition, the rapid development of offline reinforcement learning (offline RL) has prompted researchers to consider how to use unlabeled and sub-optimal offline data for pre-training. Finally, offline training methods based on multi-task and multi-modal data further pave the way toward a general pre-training paradigm.
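Predicting expert actions with supervised learning is usually called behavior cloning. A standard way to write it (our notation, not the survey's) is maximum-likelihood training of a policy $\pi_\theta$ on a demonstration dataset $\mathcal{D}_E$ of expert state-action pairs:

$$
\mathcal{L}_{\mathrm{BC}}(\theta) = -\,\mathbb{E}_{(s,a)\sim\mathcal{D}_{E}}\left[\log \pi_{\theta}(a \mid s)\right]
$$

AlphaGo's supervised policy network was trained in this spirit, maximizing the likelihood of moves chosen by human experts in recorded Go games before any reinforcement learning took place.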

Online pre-training

Past successes of RL have relied on dense, well-designed reward functions. The traditional RL paradigm, which has made great progress in many fields, faces two key challenges when scaled up to large-scale pre-training. First, RL agents overfit easily: an agent pre-trained with complex task rewards struggles to perform well on tasks it has never seen. Second, designing reward functions is usually expensive and requires substantial expert knowledge, which is a major challenge in practice.

Online pre-training without reward signals may offer a way to learn general prior knowledge and supervisory signals without human involvement. Online pre-training aims to acquire prior knowledge through interaction with the environment, without human supervision: during the pre-training phase, the agent may interact with the environment for a long time but receives no extrinsic reward. This setting, also known as unsupervised RL, has been actively studied in recent years.

To motivate agents to acquire prior knowledge from the environment without any supervisory signal, a well-established approach is to design intrinsic rewards that encourage the agent to collect diverse experiences or master transferable skills. Previous research has shown that, after online pre-training with intrinsic rewards and standard RL algorithms, agents can adapt quickly to downstream tasks.
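To make the idea of an intrinsic reward concrete, here is a minimal sketch using one classic choice, a count-based exploration bonus $\beta / \sqrt{N(s)}$. This is our illustrative example, not a method taken from the survey; the names `CountBonus` and `pretrain_reward_free`, the ring-shaped toy environment, and all hyperparameters are hypothetical. The agent runs ordinary tabular Q-learning, but the only reward it ever sees is the novelty bonus.

```python
import math
import random
from collections import defaultdict


class CountBonus:
    """Count-based intrinsic reward r_int(s) = beta / sqrt(N(s)).

    Rarely visited states earn a larger bonus, nudging a reward-free
    agent toward diverse experience.
    """

    def __init__(self, beta=1.0):
        self.beta = beta
        self.counts = defaultdict(int)  # N(s): number of visits per state

    def __call__(self, state):
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])


def pretrain_reward_free(n_states=10, steps=2000, eps=0.1,
                         alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning on a ring of states, driven ONLY by the intrinsic
    bonus: no extrinsic (task) reward is ever observed."""
    rng = random.Random(seed)
    bonus = CountBonus(beta=1.0)
    q = defaultdict(float)   # q[(state, action)], initialized to 0
    actions = (-1, 1)        # step left / step right on the ring
    s = 0
    for _ in range(steps):
        # Epsilon-greedy action selection on the current Q estimates.
        if rng.random() < eps:
            a = rng.choice(actions)
        else:
            a = max(actions, key=lambda act: q[(s, act)])
        s_next = (s + a) % n_states
        r_int = bonus(s_next)
        # Q-learning update with the intrinsic reward as the only signal.
        target = r_int + gamma * max(q[(s_next, b)] for b in actions)
        q[(s, a)] += alpha * (target - q[(s, a)])
        s = s_next
    return q, dict(bonus.counts)
```

After pre-training, the learned Q-table (or, in deep RL, the network weights) would serve as the initialization for fine-tuning on a downstream task reward. Practical unsupervised RL methods replace raw counts with learned novelty or skill-discovery objectives, since exact counts do not scale to large state spaces.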

Offline pre-training

Although online pre-training can achieve good results without human supervision, it remains limited for large-scale applications: online interaction is somewhat at odds with the need to train on large, diverse datasets. To address this, researchers often aim to decouple data collection from pre-training and to pre-train directly on historical data collected by other agents or by humans.

One feasible solution is offline reinforcement learning, which aims to obtain a reward-maximizing RL policy from offline data. A fundamental challenge is distribution shift, that is, the difference between the distribution of the training data and that of the data encountered at test time. Existing offline RL methods focus on addressing this challenge when using function approximation. For example, policy constraint methods explicitly require the learned policy to avoid actions not seen in the dataset, while value regularization methods alleviate overestimation of the value function by fitting it to some form of lower bound. However, whether policies trained offline can generalize to new environments not covered by the offline dataset remains underexplored.
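The policy-constraint idea can be illustrated with a minimal tabular sketch, written in the spirit of batch-constrained methods: the Bellman backup maximizes only over actions that actually appear in the dataset, so no value estimate depends on an out-of-distribution action. This is a simplification under stated assumptions: the function name `batch_constrained_q`, the `(s, a, r, s_next, done)` transition format, and the toy data are hypothetical, and real offline RL methods apply the constraint with function approximation rather than a lookup table.

```python
from collections import defaultdict


def batch_constrained_q(dataset, gamma=0.9, iters=100):
    """Tabular fitted Q-iteration with a policy (batch) constraint.

    dataset: list of (s, a, r, s_next, done) transitions.
    The Bellman backup maximizes only over actions observed in the dataset
    at s_next, so no value estimate depends on an out-of-distribution action.
    """
    seen = defaultdict(set)  # state -> actions that appear in the data
    for s, a, _, _, _ in dataset:
        seen[s].add(a)

    q = defaultdict(float)
    for _ in range(iters):
        sums, counts = defaultdict(float), defaultdict(int)
        for s, a, r, s_next, done in dataset:
            in_data = seen.get(s_next)  # .get avoids adding empty entries
            if done or not in_data:
                future = 0.0
            else:
                future = max(q[(s_next, b)] for b in in_data)
            sums[(s, a)] += r + gamma * future
            counts[(s, a)] += 1
        # Average the backed-up targets for each (s, a) pair in the data.
        q = defaultdict(float, {k: sums[k] / counts[k] for k in sums})

    # Greedy policy restricted to actions present in the dataset.
    policy = {s: max(acts, key=lambda b: q[(s, b)]) for s, acts in seen.items()}
    return q, policy
```

For example, with the transitions `(0, "a", 0.0, 1, False)`, `(1, "b", 1.0, 2, True)` and `(0, "c", 0.5, 2, True)`, the sketch converges to `Q(0, "a") = 0.9` and picks `"a"` in state 0 and `"b"` in state 1, never considering actions absent from the data.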

Alternatively, we can skip learning an RL policy altogether and instead use offline data to learn prior knowledge that improves convergence speed or final performance on downstream tasks. More interestingly, if the model can leverage offline data without human supervision, it could benefit from massive amounts of data. The survey refers to this setting as offline pre-training, in which the agent extracts important information (such as good representations and behavioral priors) from offline data.
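As one illustration of a behavioral prior, the sketch below estimates $p(a \mid s)$ from reward-free offline trajectories and then combines it with a downstream task's action values. The combination uses the closed-form maximizer of $\mathbb{E}_{\pi}[Q] - \tau\,\mathrm{KL}(\pi \,\|\, p)$, namely $\pi(a \mid s) \propto p(a \mid s)\exp(Q(s,a)/\tau)$. This is our example of one way a prior can be used, not the survey's method; the function names, Laplace smoothing, temperature `tau`, and toy data are all hypothetical.

```python
import math
from collections import Counter, defaultdict


def fit_behavior_prior(trajectories, actions, smoothing=1.0):
    """Estimate p(a | s) from reward-free (state, action) data with
    Laplace smoothing; unseen states fall back to a uniform prior."""
    counts = defaultdict(Counter)
    for traj in trajectories:
        for s, a in traj:
            counts[s][a] += 1

    def prior(s):
        c = counts.get(s, Counter())
        total = sum(c.values()) + smoothing * len(actions)
        return {a: (c[a] + smoothing) / total for a in actions}

    return prior


def prior_regularized_policy(q_values, prior_probs, tau=1.0):
    """Closed-form maximizer of E_pi[Q] - tau * KL(pi || prior):
    pi(a) is proportional to prior(a) * exp(Q(a) / tau)."""
    m = max(q_values.values())  # subtract the max for numerical stability
    weights = {a: prior_probs[a] * math.exp((q - m) / tau)
               for a, q in q_values.items()}
    z = sum(weights.values())
    return {a: w / z for a, w in weights.items()}
```

When the downstream Q-values are uninformative (for instance, all equal early in fine-tuning), the policy simply follows the prior extracted from offline data; as Q-values become more distinct, or as `tau` shrinks, the task signal dominates.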

Towards a general agent

Pre-training methods for a single environment and a single modality mainly fall under the online and offline pre-training settings described above. Recently, however, researchers have become increasingly interested in building a single general decision-making model (e.g., Gato [1] and Multi-game DT [2]) so that the same model can handle tasks of different modalities across different environments. To enable agents to learn from and adapt to a wide variety of open-ended tasks, this line of research seeks to leverage large amounts of prior knowledge in different forms, such as visual perception and language understanding. More importantly, if researchers can bridge RL and other areas of machine learning and build on their past successes, they may be able to create a general agent model capable of completing a wide range of tasks.
