T Frontline | Exclusive interview with Tencent AI Lab: From 'point' to 'line', the laboratory is more than just experiments

Guest: Shi Shuming

Written by: Mo Qi

Reviewer: Yun Zhao

"Most research work tends to revolve around a single point, and such point-like results are difficult for users to use directly," said Shi Shuming, director of the Natural Language Processing Center of Tencent AI Lab.

In the past decade or so, artificial intelligence (AI) has experienced a renaissance, and natural language processing (NLP) has made significant technological progress. Advances in NLP have greatly improved the quality of machine translation, made search and recommendation more accurate, and spawned new digital applications such as conversational robots and smart writing. As the crown jewel of AI, NLP has attracted countless companies, talents, and capital at home and abroad. How do these factors drive its research progress? How do companies incubate research results and put them into practice? How do practitioners view the bottlenecks and controversies in AI's development?

Recently, "T Frontline" had the honor of interviewing the Natural Language Processing Center of Tencent AI Lab, hoping to get a glimpse of these questions from the perspective of an "artificial intelligence laboratory".

Don’t stop at experiments: we also focus on putting results into practice and open-sourcing them

T Frontline: What directions is Tencent AI Lab exploring in natural language processing?

Shi Shuming: Tencent AI Lab’s natural language processing team conducts research in four directions: text understanding, text generation, intelligent dialogue, and machine translation. In terms of results, judging by publications, over the past three years the team has published more than 50 papers each year at first-class international conferences and journals, ranking among the top domestic research institutions. It is worth mentioning that two of our papers received the Best Paper Award at NAACL'2021 and an Outstanding Paper Award at ACL'2021, respectively. In academic competitions, we have won many heavyweight contests; for example, we took first place in 5 tasks at last year's international machine translation competition WMT'2021.

In addition to papers and academic competitions, we also consciously turn our research results into systems and open data, available to users inside and outside the company. These include the text understanding system TexSmart, the interactive translation system TranSmart, the intelligent creative assistant "Effidit", and Chinese word vector data covering 8 million words.

The Chinese word vector data released at the end of 2018, which the outside world calls "Tencent word vectors", is at the leading level in scale, accuracy, and freshness. It has received widespread attention and discussion in the industry, has been widely used across many industries, and has continued to improve performance in their applications. Compared with similar systems, the text understanding system TexSmart provides distinctive functions such as fine-grained named entity recognition (NER), semantic association, deep semantic representation, and text mapping, and it won the Best System Demonstration Award at the 19th China Computational Linguistics Conference (CCL'2020). The interactive translation system TranSmart is the first publicly available interactive translation Internet product in China. Its highlight features include a translation input method, constrained decoding, and translation memory fusion. It supports many customers, businesses, and scenarios inside and outside the company, including the United Nations Documentation Agency, Memsource, Huatai Securities, Tencent Music, China Literature Online, Tencent Games Going Global, and Tencent Optional Stock Document Translation. The intelligent creative assistant "Effidit", which we released some time ago, provides distinctive functions such as multi-dimensional text completion and diversified text polishing. It uses AI technology to help writers broaden their ideas, enrich their expressions, and improve the efficiency of text editing and writing.

T Frontline: In terms of intelligent creation, could you take "Effidit" as an example and talk about the project's origin and latest status?

Shi Shuming: The smart writing assistant Effidit project was launched before the National Day holiday in 2020. There were two main reasons for starting it: first, writing has real pain points; second, the NLP technology this scenario requires matches the expertise our team has accumulated.

First, the pain points in writing. In life and work, we often need to read news, novels, public account articles, papers, technical reports, and so on, and we also need to write things such as technical documents, meeting minutes, and reports. Reading is usually relaxed, pleasant, and effortless, but writing is different. We often don’t know which words best express our thoughts; sometimes sentences and paragraphs written with great effort still read as unclear or dry, and typos creep in along the way. Perhaps most people are better at reading than writing. So we wondered whether technology could address these pain points and make writing more efficient.

Now the second reason. We have long been thinking about how NLP technology can improve people's work efficiency and quality of life. Over the past few years, we have carried out in-depth research in NLP sub-directions such as text understanding, text generation, and machine translation. Most research work revolves around a single point, and point-like results are hard for users to use directly. So we instinctively string several point-like research results together into a line, that is, a system. We had been looking for scenarios in which to apply our text generation research. Given the pain points in writing mentioned above, after discussion we decided to launch the smart writing assistant Effidit project.

After one and a half years of research and development, the first version has been released. Next, we will continue to iterate and optimize, listen to user feedback, improve the effectiveness of various functions, and strive to produce a tool that is easy to use and popular with users.

Trusted AI: Research on explainability and robustness still needs further exploration

T Frontline: In recent years, trustworthy AI has attracted attention across the industry. Could you share your understanding of trustworthy AI in NLP and the progress made so far?

Shi Shuming: I don’t know much about trustworthy AI, so I can only offer some superficial thoughts. Trustworthy AI is an abstract concept with no precise definition yet. From a technical perspective, however, it includes many elements: model interpretability, robustness, fairness, privacy protection, and so on. In recent years, pre-trained language models based on the Transformer architecture have achieved amazing results on many natural language processing tasks and attracted widespread attention. However, such models are essentially data-driven black boxes: their predictions are hard to interpret, their robustness is not very good, and they easily learn biases inherent in the data (such as gender bias), which causes fairness problems. Word vectors, which predate pre-trained language models, also suffer from gender bias. At present, building trustworthy AI models is, on the one hand, a research direction attracting attention in machine learning and NLP, with many research works and some progress. On the other hand, these advances are still far from the goal. For example, progress on the interpretability of deep models has been limited, and a key step has not yet been taken.

The Tencent AI Lab where I work is also carrying out research on trustworthy AI. Since 2018, Tencent AI Lab has continuously invested in this area and has achieved results in three major directions: adversarial robustness, distribution transfer learning, and interpretability. In the future, Tencent AI Lab will focus on the fairness and explainability of AI and continue to explore applications of related technologies in medicine, pharmaceuticals, life sciences, and other fields.
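The point about gender bias in word vectors can be made concrete with a simple probe common in the embedding-debiasing literature: project a word's vector onto the direction between "he" and "she" and look at the sign. The sketch below is a minimal illustration under stated assumptions: the five toy 3-dimensional vectors and the `gender_bias` helper are hypothetical, and they are not Tencent's word vectors or the team's own method.

```python
import math

# Hypothetical toy embeddings for illustration only (NOT real Tencent word vectors).
EMB = {
    "he":       [0.9, 0.1, 0.3],
    "she":      [0.1, 0.9, 0.3],
    "engineer": [0.7, 0.3, 0.8],
    "nurse":    [0.2, 0.8, 0.7],
    "doctor":   [0.5, 0.5, 0.9],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def gender_bias(word, emb=EMB):
    """Cosine between `word` and the he-minus-she direction.

    Positive values lean toward "he", negative toward "she", ~0 is neutral.
    """
    direction = [h - s for h, s in zip(emb["he"], emb["she"])]
    v = emb[word]
    return dot(v, direction) / (norm(v) * norm(direction))


for w in ("engineer", "nurse", "doctor"):
    print(f"{w:>8}: {gender_bias(w):+.3f}")
```

With real embeddings, the gender direction is usually estimated from several definitional word pairs rather than a single pair; one pair keeps the sketch short.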

Difficulty Focus: Statistical methods cannot fundamentally understand semantics

T Frontline: Where do you think the bottleneck of NLP research lies at this stage? What are the future directions?

Shi Shuming: Since the emergence of the research field of natural language processing, the biggest bottleneck it has faced is how to truly understand the semantics expressed by a piece of natural language text. This bottleneck has not been broken so far.

Humans genuinely have the ability to understand natural language. For example, when we see the sentence "She likes blue", we know what it means: what "like" is and what "blue" is. An NLP algorithm processing that sentence, however, is in essentially the same position as we are when we see the sentence "abc def xyz" in an unknown foreign language. Suppose that in this unknown language, "abc" means "she", "def" means "like", and "xyz" means "green". Knowing nothing about the language, we cannot understand any of its sentences. If we are lucky enough to see a large number of sentences written in it, we might run some statistical analysis on them, trying to map its words to words in our mother tongue, in the hope of eventually deciphering the language. This process is not easy, and there is no guarantee of success.

For AI, the situation is worse than ours when deciphering an unknown foreign language. We have common sense and an internal mapping from native-language words to concepts in our minds, but AI has neither. The symbolic approach in NLP research tries to give AI human-like capabilities through symbolic representations of text and knowledge graphs, aiming to solve the understanding problem at its root; the statistical approach sets aside common sense and internal concepts for now, focusing on improving statistical methods and making full use of the information in the data itself. So far, the statistical approach has been the mainstream of research and has achieved greater success.

Looking at the breakthroughs and progress in statistical NLP over the past ten years, word vector technology (that is, representing a word with a medium-dimensional dense vector) broke through the computability bottleneck of words; combined with deep learning algorithms and GPU computing power, it kicked off a decade of breakthroughs in NLP. New network structures (such as the Transformer) and paradigms (such as pre-training) have greatly improved the computability of text and the quality of text representations. However, because statistical NLP does not model common sense and basic concepts the way humans do and cannot fundamentally understand natural language, it has difficulty avoiding some common-sense errors.

Of course, the research community has never given up on symbolization and deep semantic representation. The most influential attempts in this area in the past ten years include Wolfram Alpha and AMR (Abstract Meaning Representation). This road is very hard; the main challenges are modeling a large number of abstract concepts and scalability (that is, going from understanding highly formalized sentences to understanding general natural language text).

Possible future research directions in basic technology include: new generation language models, controllable text generation, improving the model's cross-domain transfer capabilities, statistical models that effectively incorporate knowledge, deep semantic representation, etc. These research directions correspond to some local bottlenecks in NLP research. The direction that needs to be explored in terms of application is how to use NLP technology to improve human work efficiency and quality of life.
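The claim that word vectors broke the "computability bottleneck" of words can be illustrated in a few lines: once each word is a dense vector, relatedness becomes arithmetic, for example cosine similarity. The sketch below assumes hypothetical 4-dimensional toy vectors for words from the "She likes blue" example; real word vectors are learned from large corpora and usually have a few hundred dimensions, and the `nearest` helper is illustrative only.

```python
import math

# Hypothetical toy word vectors (real ones are learned from corpora, not hand-written).
VECTORS = {
    "blue":  [0.8, 0.1, 0.6, 0.0],
    "green": [0.7, 0.2, 0.7, 0.1],
    "like":  [0.0, 0.9, 0.1, 0.4],
    "love":  [0.1, 0.8, 0.0, 0.5],
}


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest(word, vectors=VECTORS):
    """Return the other word whose vector is most similar to `word`'s."""
    query = vectors[word]
    return max((w for w in vectors if w != word), key=lambda w: cosine(query, vectors[w]))


print(nearest("blue"))  # → green
print(nearest("like"))  # → love
```

The similarity says nothing about what "blue" actually means; it only reflects patterns in the vectors, which is exactly the limitation of statistical NLP described above.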

Research and implementation: How to balance the two?

T Frontline: How is AI Lab's NLP work explored and laid out across basic research, cutting-edge technology, and industrialization? What are the next steps?

Shi Shuming: In basic research, our goal is to achieve breakthroughs, solve some bottlenecks in current research, and strive to produce original, useful results with significant impact, like Word2vec, Transformer, and BERT. To achieve this, on the one hand, we give basic researchers more freedom and encourage them to pursue work with long-term potential impact; on the other hand, the whole team uses brainstorming and other methods to select several key breakthrough directions and tackle them together.

In terms of industrialization, in addition to technological upgrades for the company's existing products, we focus on building one or two technology products of our own. The goal is to integrate research results and improve people's work efficiency or quality of life. These products include TranSmart, an interactive translation system for translators, and Effidit, an intelligent creative assistant for text editing and writing scenarios. We will continue to polish both.

Looking for the Jade of Kunshan: Researchers need a certain degree of freedom

T Frontline: As far as the scientific research department is concerned, what do you think are the different focuses of researchers and algorithm engineers?

Shi Shuming: In our team, algorithm engineers have two responsibilities: implementing or optimizing existing algorithms (such as one from a published paper), and building and polishing technology products. On top of these two responsibilities, researchers are also responsible for proposing and publishing original research results. This division is not absolute and the boundary is fairly fuzzy; it largely depends on each employee's interests and the needs of the project.

T Frontline: As a manager, what are the differences between laboratory team management and traditional technical engineer management methods and concepts?

Shi Shuming: In a business team, technical engineers work closely together to build planned products through defined project management processes. Lab teams tend to consist of basic researchers and technical engineers (and perhaps a few product and operations staff). For basic research, researchers need more freedom, less "guidance", and more help; we respect their interests, stimulate their potential, and encourage them to do work with long-term potential impact. Breakthroughs in basic research are seldom planned top-down or managed through project management processes. On the other hand, when the lab team builds technology products, researchers and technical engineers need to collaborate more closely, supported by lightweight project management processes.

Lab AI positions: Candidate selection emphasizes the "three goods" and a strong enough heart

T Frontline: Suppose an applicant has strong research ability and has published many papers at top conferences, but has weak engineering ability. Would you hire them?

Shi Shuming: This is a good question, and one we often encounter in recruiting. Ideally, both academia and industry want to cultivate or recruit people with strong research and engineering abilities. In practice, however, such people are rare and are fiercely competed for by companies and research institutions. In interviews, for candidates with especially outstanding research ability, we lower our engineering requirements accordingly, though they must still clear a basic threshold. Likewise, for candidates with strong engineering ability, our research requirements are lower. In actual work, if arranged properly, employees with strong research ability and those with strong engineering ability can play to their respective strengths through cooperation and complete projects together.

T Frontline: What abilities do you value most in candidates?

Shi Shuming: Dr. Shen Xiangyang said that his hiring criteria are the "three goods": good at mathematics, good at programming, and a good attitude. Being good at mathematics corresponds to research potential, being good at programming corresponds to engineering ability, and a good attitude includes "being passionate about one's work", "being able to cooperate with colleagues for win-win results", and "being reliable in doing things". Many research institutions value these three points. In actual interviews, a candidate's research ability and potential are usually assessed by reviewing their publication record and discussing their projects; engineering ability is assessed through programming tests and project output; and the interview as a whole is used to infer whether the candidate truly has a "good attitude". This kind of inference can sometimes be wrong, but overall its accuracy is quite high.

There are also some abilities that are hard to judge in a one- or two-hour interview, but employees who have them are treasures. The first is the ability to choose important research topics. The second is the ability to see things through. People or teams lacking this ability may keep starting new topics or projects that are never completed with high quality and often fizzle out. This may relate to execution, perseverance, focus, technical level, and so on. The third is the ability to endure loneliness and criticism. Important and influential work is often not understood by most people until its impact becomes visible; if your heart is not strong enough to bear loneliness and criticism, it may be hard to persist, and it is easy to abandon your original intention and jump into the already red-ocean competition over current hot topics.

T Frontline: What suggestions do you currently have for fresh graduates and technical people who switch careers into the field of artificial intelligence?

Shi Shuming: Graduates differ in academic qualifications, schools, and project experience, and technical people switching into AI have vastly different professional and life experiences, so it is hard to give much general advice. For now I can think of a few points. First, don’t just bury yourself in work and neglect gathering information. Talk to senior schoolmates and friends: listen to how they describe their current work, how they evaluate different types of jobs and employers, the paths they have taken, and the pitfalls they have run into. At the same time, gather information through forums, public accounts, short videos, and other channels to help you make decisions at this critical point in life. Second, if you are more than a year away from graduation and have no internship experience, find a reliable place to intern. An internship lets you accumulate practical experience, improve your abilities, and get an early feel for work; it also enriches your resume and makes you more competitive in the job search. Third, involution is unavoidable at work, and not everything will go as planned. Manage your expectations, adjust your mindset, and find ways to digest the emotional gap caused by change. Fourth, once you have settled in, don’t forget your dreams: work hard and accomplish something worthy of your abilities.

I wish every graduate finds a job they love and grows in the workplace, and I wish every technical person who switches into AI enjoys the happiness and rewards that hard work brings on this new track.

Guest Introduction

Shi Shuming graduated from the Department of Computer Science at Tsinghua University and is currently the director of the Natural Language Processing Center of Tencent AI Lab. His research interests include knowledge mining, natural language understanding, text generation, and intelligent dialogue. He has published more than 100 papers in academic conferences and journals such as ACL, EMNLP, AAAI, IJCAI, WWW, SIGIR, and TACL, with an H-index of 35. He has served as system demonstration co-chair of EMNLP 2021 and CIKM 2013, a senior program committee member of KDD 2022, and a program committee member of ACL, EMNLP, and other conferences.

The above is the detailed content of T Frontline | Exclusive interview with Tencent AILab: From 'point' to 'line', the laboratory is more than just experiments. For more information, please follow other related articles on the PHP Chinese website!

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AM

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AMMeta has joined hands with partners such as Nvidia, IBM and Dell to expand the enterprise-level deployment integration of Llama Stack. In terms of security, Meta has launched new tools such as Llama Guard 4, LlamaFirewall and CyberSecEval 4, and launched the Llama Defenders program to enhance AI security. In addition, Meta has distributed $1.5 million in Llama Impact Grants to 10 global institutions, including startups working to improve public services, health care and education. The new Meta AI application powered by Llama 4, conceived as Meta AI

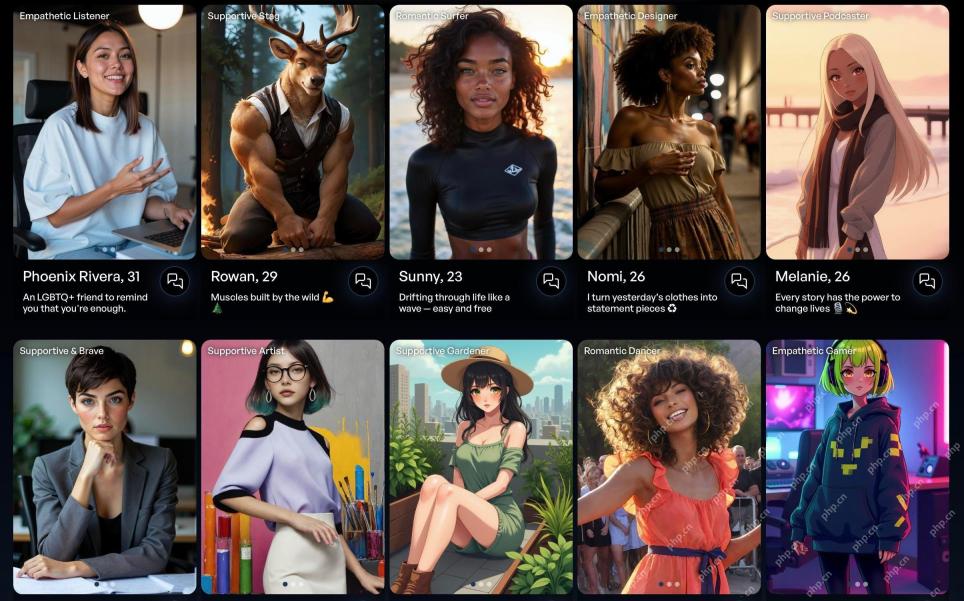

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AM

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AMJoi AI, a company pioneering human-AI interaction, has introduced the term "AI-lationships" to describe these evolving relationships. Jaime Bronstein, a relationship therapist at Joi AI, clarifies that these aren't meant to replace human c

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AM

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AMOnline fraud and bot attacks pose a significant challenge for businesses. Retailers fight bots hoarding products, banks battle account takeovers, and social media platforms struggle with impersonators. The rise of AI exacerbates this problem, rende

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AM

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AMAI agents are poised to revolutionize marketing, potentially surpassing the impact of previous technological shifts. These agents, representing a significant advancement in generative AI, not only process information like ChatGPT but also take actio

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AM

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AMAI's Impact on Crucial NBA Game 4 Decisions Two pivotal Game 4 NBA matchups showcased the game-changing role of AI in officiating. In the first, Denver's Nikola Jokic's missed three-pointer led to a last-second alley-oop by Aaron Gordon. Sony's Haw

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AM

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AMTraditionally, expanding regenerative medicine expertise globally demanded extensive travel, hands-on training, and years of mentorship. Now, AI is transforming this landscape, overcoming geographical limitations and accelerating progress through en

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AM

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AMIntel is working to return its manufacturing process to the leading position, while trying to attract fab semiconductor customers to make chips at its fabs. To this end, Intel must build more trust in the industry, not only to prove the competitiveness of its processes, but also to demonstrate that partners can manufacture chips in a familiar and mature workflow, consistent and highly reliable manner. Everything I hear today makes me believe Intel is moving towards this goal. The keynote speech of the new CEO Tan Libo kicked off the day. Tan Libai is straightforward and concise. He outlines several challenges in Intel’s foundry services and the measures companies have taken to address these challenges and plan a successful route for Intel’s foundry services in the future. Tan Libai talked about the process of Intel's OEM service being implemented to make customers more

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AM

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AMAddressing the growing concerns surrounding AI risks, Chaucer Group, a global specialty reinsurance firm, and Armilla AI have joined forces to introduce a novel third-party liability (TPL) insurance product. This policy safeguards businesses against

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment