StarCraft II cooperative confrontation benchmark surpasses SOTA, new Transformer architecture solves multi-agent reinforcement learning problem

Multi-agent reinforcement learning (MARL) is a challenging problem: it requires not only identifying the policy improvement direction of each agent, but also combining the individual agents' policy updates so that overall performance improves. Recently, this problem has been partially addressed by the centralized training, decentralized execution (CTDE) approach, which allows agents to access global information during the training phase. However, these methods cannot cover the full complexity of multi-agent interactions.

In fact, some of these methods have been shown to fail. To address this, the multi-agent advantage decomposition theorem was proposed, and the HATRPO and HAPPO algorithms were derived from it. These approaches still have limitations, however, because they rely on carefully designed maximization objectives.

In recent years, sequence models (SM) have made substantial progress in the field of natural language processing (NLP). For example, the GPT series and BERT perform well on a wide range of downstream tasks and achieve strong performance on few-shot generalization tasks.

Sequence models are a natural fit for language tasks because language is inherently sequential. However, sequence methods are not limited to NLP; they are broadly applicable, general-purpose foundation models. For example, in computer vision (CV), an image can be split into sub-images that are arranged in a sequence as if they were tokens in an NLP task. Well-known recent models such as Flamingo, DALL-E, and GATO all build on sequence modeling.

With the emergence of network architectures such as the Transformer, sequence modeling has also attracted great attention from the RL community and has spurred a series of offline RL methods based on the Transformer architecture. These methods show great potential in solving some of the most fundamental RL training problems.

Despite the notable success of these methods, none was designed to model the most difficult aspect of multi-agent systems, one that is unique to MARL: the interaction between agents. In fact, simply giving every agent a Transformer policy and training each one individually is still not guaranteed to improve joint MARL performance. Therefore, although many powerful sequence models are available, MARL has not yet really taken advantage of them.

How can sequence models be used to solve MARL problems? Researchers from Shanghai Jiao Tong University, Digital Brain Lab, Oxford University, and other institutions proposed a new Multi-Agent Transformer (MAT) architecture, which effectively casts cooperative MARL problems as sequence modeling problems: its task is to map the agents' observation sequence to the agents' optimal action sequence.

The goal of this paper is to build a bridge between MARL and SM in order to unlock the modeling capabilities of modern sequence models for MARL. The core of MAT is an encoder-decoder architecture that uses the multi-agent advantage decomposition theorem to transform the joint policy search problem into a sequential decision-making process, so that the multi-agent problem exhibits linear time complexity. Most importantly, doing so ensures monotonic performance improvement of MAT. Unlike previous techniques such as Decision Transformer, which require pre-collected offline data, MAT is trained on-policy through online trial and error in the environment.

- Paper address: https://arxiv.org/pdf/2205.14953.pdf

- Project homepage: https://sites.google.com/view/multi-agent-transformer

To verify MAT, the researchers conducted extensive experiments on the StarCraft II, Multi-Agent MuJoCo, Dexterous Hands Manipulation, and Google Research Football benchmarks. The results show that MAT has better performance and data efficiency than strong baselines such as MAPPO and HAPPO. In addition, the study shows that regardless of how the number of agents changes, MAT performs better on unseen tasks and can be regarded as an excellent few-shot learner.

Background knowledge

In this section, the researchers first introduce the cooperative MARL problem formulation and the multi-agent advantage decomposition theorem, which are the cornerstones of the paper. They then review existing MARL methods related to MAT, which finally leads to the Transformer.

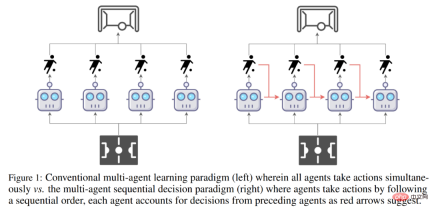

Comparison of the traditional multi-agent learning paradigm (left) and the multi-agent sequence decision-making paradigm (right).

Problem formulation

Cooperative MARL problems are usually modeled as decentralized partially observable Markov decision processes (Dec-POMDPs).

Multi-agent advantage decomposition theorem

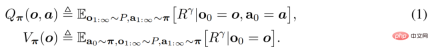

The agent evaluates the value of actions and observations through Q_π(o, a) and V_π(o), which are defined as follows.

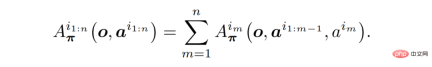

Theorem 1 (Multi-agent Advantage Decomposition): Let i_{1:n} be a permutation of the agents. The following formula always holds without further assumptions.
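For reference, the identity can be written as follows in this section's notation. This is a reconstruction based on the theorem as stated in the MAT paper, so the exact typesetting should be treated as an assumption. Here o is the joint observation, a^{i_{1:m}} denotes the actions of the first m agents in the permutation, and A_π^{i_m} is the advantage of agent i_m's action given the actions already chosen by agents i_{1:m−1}.

$$
A_\pi^{i_{1:n}}\left(o, a^{i_{1:n}}\right) = \sum_{m=1}^{n} A_\pi^{i_m}\left(o, a^{i_{1:m-1}}, a^{i_m}\right)
$$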

Importantly, Theorem 1 provides an intuition for how to choose actions that improve performance incrementally.
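The telescoping idea behind the decomposition can be checked numerically on a toy case. The sketch below is illustrative only: it assumes a single fixed observation, two agents with two actions each, a made-up joint value table `Q`, and made-up independent policies `pi1` and `pi2`. Agent 1's advantage compares its action against the average outcome, and agent 2's advantage compares its action against what is expected once agent 1's action is known.

```python
from itertools import product

# Toy joint action-value table Q(a1, a2); all numbers are made up.
Q = {(0, 0): 1.0, (0, 1): 3.0, (1, 0): 0.0, (1, 1): 4.0}

# Independent per-agent policies pi_1(a1) and pi_2(a2); also made up.
pi1 = [0.5, 0.5]
pi2 = [0.25, 0.75]

# State value: expectation of Q under the joint policy.
V = sum(pi1[a1] * pi2[a2] * Q[(a1, a2)]
        for a1, a2 in product(range(2), repeat=2))


def q_agent1(a1):
    """Value of agent 1's action, averaging over agent 2's policy."""
    return sum(pi2[a2] * Q[(a1, a2)] for a2 in range(2))


def adv_agent1(a1):
    """Advantage of agent 1's action over the average outcome."""
    return q_agent1(a1) - V


def adv_agent2(a1, a2):
    """Advantage of agent 2's action, given agent 1 has already chosen a1."""
    return Q[(a1, a2)] - q_agent1(a1)


def joint_adv(a1, a2):
    """Advantage of the joint action over the average outcome."""
    return Q[(a1, a2)] - V


# The joint advantage equals the sum of the sequential per-agent advantages.
for a1, a2 in product(range(2), repeat=2):
    total = adv_agent1(a1) + adv_agent2(a1, a2)
    print((a1, a2), joint_adv(a1, a2), total)
```

In this toy case, if agent 1 picks an action with positive advantage and agent 2 then picks an action with positive advantage given agent 1's choice, the joint advantage is positive as well, which is the incremental-improvement intuition.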

Existing MARL methods

The researchers summarize two current SOTA MARL algorithms, both of which are built on Proximal Policy Optimization (PPO), an RL method known for its simplicity and stable performance.

Multi-Agent Proximal Policy Optimization (MAPPO) is the first and most straightforward method to apply PPO to MARL.

Heterogeneous-Agent Proximal Policy Optimization (HAPPO) is one of the current SOTA algorithms. It makes full use of Theorem 1 to achieve multi-agent trust-region learning with a monotonic improvement guarantee.

Transformer model

Based on the sequential property described in Theorem 1 and the principles behind HAPPO, it is natural to consider using the Transformer model to implement multi-agent trust-region learning. By treating an agent team as a sequence, the Transformer architecture allows modeling of agent teams with variable numbers and types of agents while avoiding the shortcomings of MAPPO and HAPPO.

Multi-agent Transformer

To realize the sequence modeling paradigm for MARL, the researchers propose the Multi-Agent Transformer (MAT). The idea of applying the Transformer architecture stems from the fact that the mapping from the agents' input observation sequence (o^{i_1}, ..., o^{i_n}) to the output action sequence (a^{i_1}, ..., a^{i_n}) is a sequence modeling task similar to machine translation. As Theorem 1 implies, the action a^{i_m} depends on the previous decisions of all preceding agents, a^{i_{1:m−1}}.

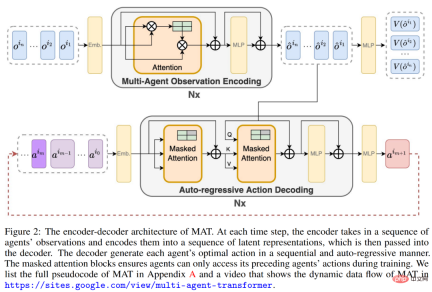

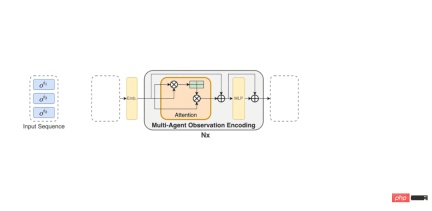

Therefore, as shown in Figure 2, MAT contains an encoder that learns representations of the joint observation and a decoder that outputs actions for each agent in an autoregressive manner.
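The autoregressive order of decoding can be sketched as a simple loop. This is a minimal illustration, not MAT's implementation: `toy_decoder_logits` is a hypothetical stand-in for the decoder network, the integer "observation representations" are made up, and greedy selection is used instead of sampling so that the result is deterministic. The only property shown is that agent m's action is chosen from its observation representation together with the actions already chosen by the agents before it, with one decoder call per agent.

```python
def toy_decoder_logits(obs_repr, prev_actions, agent_idx, n_actions=3):
    """Hypothetical stand-in for a decoder: one score per candidate action.

    The scores depend on the agent's observation representation and on
    every action chosen so far, which is all this sketch illustrates.
    """
    context = obs_repr[agent_idx] + sum(prev_actions)
    target = context % n_actions
    return [-abs(target - a) for a in range(n_actions)]


def decode_actions(obs_repr):
    """Choose actions one agent at a time, conditioning on earlier choices."""
    actions = []
    for m in range(len(obs_repr)):
        logits = toy_decoder_logits(obs_repr, actions, m)
        # Greedy choice for determinism; a real policy would usually sample.
        best = max(range(len(logits)), key=logits.__getitem__)
        actions.append(best)
    return actions


print(decode_actions([1, 0, 1]))  # prints [1, 1, 0]
```

The third agent's choice changes if an earlier agent's action changes, even though its own observation representation stays the same.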

The encoder, whose parameters are denoted by φ, takes the observation sequence (o^{i_1}, ..., o^{i_n}) in any order and passes it through several computational blocks. Each block consists of a self-attention mechanism, a multilayer perceptron (MLP), and residual connections, which prevent vanishing gradients and network degradation as depth increases.
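As a rough illustration of the attention-plus-residual part of such a block, here is a single-head scaled dot-product self-attention in plain Python. It is a sketch under simplifying assumptions, not the encoder used in MAT: the query, key, and value projections are taken to be the identity, the MLP and any normalization are omitted, and the input vectors are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_block(tokens):
    """Unmasked self-attention with a residual connection.

    tokens: list of n vectors (one per agent), each a list of d floats.
    Identity projections are an assumption made to keep the sketch short.
    """
    n, d = len(tokens), len(tokens[0])
    out = []
    for i in range(n):
        # Scaled dot-product score between token i and every token j.
        scores = [
            sum(tokens[i][k] * tokens[j][k] for k in range(d)) / math.sqrt(d)
            for j in range(n)
        ]
        weights = softmax(scores)
        attended = [
            sum(weights[j] * tokens[j][k] for j in range(n)) for k in range(d)
        ]
        # Residual connection: add the block input back to the attention output.
        out.append([tokens[i][k] + attended[k] for k in range(d)])
    return out


obs_embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention_block(obs_embeddings))
```

In this toy version, reordering the input tokens simply reorders the outputs in the same way, because nothing in the computation depends on a token's position.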

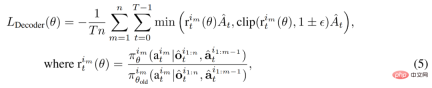

The decoder, whose parameters are denoted by θ, passes the embedded joint action a^{i_{0:m−1}}, m = 1, ..., n (where a^{i_0} is an arbitrary symbol indicating the start of decoding) through a sequence of decoding blocks. Crucially, each decoding block has a masked self-attention mechanism. To train the decoder, the researchers minimize the clipped PPO objective.
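The clipping idea can be illustrated with the generic PPO surrogate, negated so that it is minimized. This is a sketch of the standard clipped objective rather than the exact loss from the paper: the probability ratios and advantage estimates are assumed to be given as plain lists (for example, one entry per agent and time step), and `eps` is an assumed clipping range.

```python
def clipped_ppo_loss(ratios, advantages, eps=0.2):
    """Negated, averaged PPO clipped surrogate.

    ratios: new-policy probability divided by old-policy probability
            for each sampled action (assumed precomputed).
    advantages: advantage estimate paired with each ratio.
    eps: clipping range; 0.2 is an assumed value, not taken from the article.
    """
    terms = []
    for ratio, adv in zip(ratios, advantages):
        clipped_ratio = max(1.0 - eps, min(1.0 + eps, ratio))
        # Taking the minimum removes the incentive to push the ratio
        # outside [1 - eps, 1 + eps] in the direction that helps the objective.
        terms.append(min(ratio * adv, clipped_ratio * adv))
    return -sum(terms) / len(terms)


# A large ratio with a positive advantage is capped at (1 + eps) * advantage.
print(clipped_ppo_loss([1.5], [1.0]))
# A small ratio with a negative advantage is capped at (1 - eps) * advantage.
print(clipped_ppo_loss([0.5], [-1.0]))
```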

The detailed data flow in MAT is shown in the following animation.

Experimental results

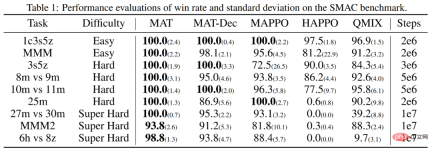

To evaluate whether MAT meets expectations, the researchers tested it on the StarCraft II Multi-Agent Challenge (SMAC) benchmark (on which MAPPO performs strongly) and on the Multi-Agent MuJoCo benchmark (on which HAPPO has SOTA performance).

In addition, the researchers also conducted extended tests of MAT on the Bimanual Dexterous Hand Manipulation (Bi-DexHands) and Google Research Football benchmarks. The former offers a range of challenging two-hand tasks, and the latter offers a range of cooperative scenarios within a football game.

Finally, since Transformer models usually show strong generalization on few-shot tasks, the researchers believe that MAT can generalize similarly well to unseen MARL tasks. They therefore designed zero-shot and few-shot experiments on SMAC and Multi-Agent MuJoCo tasks.

Performance on the cooperative MARL benchmarks

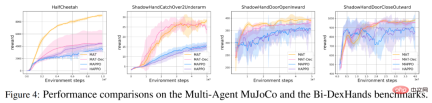

As shown in Table 1 and Figure 4 below, on the SMAC, Multi-Agent MuJoCo, and Bi-DexHands benchmarks, MAT is significantly better than MAPPO and HAPPO on almost all tasks, indicating its strong modeling capabilities on both homogeneous and heterogeneous agent tasks. Furthermore, MAT also achieves better performance than MAT-Dec, indicating the importance of the decoder architecture in MAT's design.

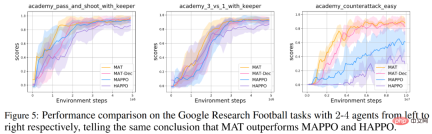

Similarly, the researchers obtained comparable performance results on the Google Research Football benchmark, as shown in Figure 5 below.

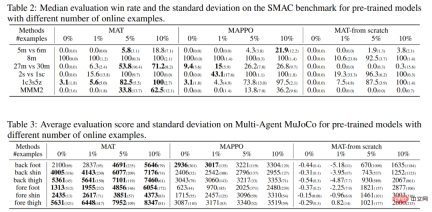

The zero-shot and few-shot results for each algorithm are summarized in Table 2 and Table 3, where bold numbers indicate the best performance.

As a control group, the researchers also report the performance of MAT trained from scratch on the same data. As shown in the tables, MAT achieves most of the best results, which demonstrates the strong generalization performance of MAT's few-shot learning.
