Why is TensorFlow well suited to machine learning development?

Machine learning is a complex discipline. But because machine learning frameworks such as Google's TensorFlow simplify the process of acquiring data, training models, serving predictions, and refining future results, implementing machine learning is far less daunting than it once was.

Created by the Google Brain team and initially released to the public in 2015, TensorFlow is an open source library for numerical computation and large-scale machine learning. TensorFlow bundles together a wide range of machine learning and deep learning (that is, neural network) models and algorithms and makes them useful through common programming metaphors. It provides a convenient front-end API for building applications in Python or JavaScript, while executing those applications in high-performance C++.

TensorFlow, which competes with frameworks such as PyTorch and Apache MXNet, can train and run deep neural networks for handwritten digit classification, image recognition, word embeddings, recurrent neural networks, sequence-to-sequence models for machine translation, natural language processing, and simulations based on partial differential equations (PDEs). Best of all, TensorFlow supports production prediction at scale, using the same models that were used for training.

TensorFlow also has an extensive library of pre-trained models that you can use in your own projects. You can also use the code in the TensorFlow Model Garden as examples of best practices for training your own models.

How TensorFlow works

TensorFlow allows developers to create data flow graphs—structures that describe how data moves through a graph or series of processing nodes. Each node in the graph represents a mathematical operation, and each connection or edge between nodes is a multidimensional data array, or tensor.
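To make the idea of nodes and edges concrete, here is a minimal sketch of a data flow graph in plain Python. It is an illustration only, not how TensorFlow is implemented: the `Node` class, the helper functions, and the nested-list "tensors" are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

Tensor = List[List[float]]  # a rank-2 tensor stored as nested lists


@dataclass
class Node:
    """One processing node: an operation plus the nodes that feed it."""
    name: str
    op: Callable[..., Tensor]
    inputs: Sequence["Node"] = field(default_factory=tuple)

    def evaluate(self) -> Tensor:
        # Each edge carries a tensor: evaluate the upstream nodes first,
        # then apply this node's operation to their outputs.
        return self.op(*(node.evaluate() for node in self.inputs))


def constant(name: str, value: Tensor) -> Node:
    """A source node that simply emits a fixed tensor."""
    return Node(name, lambda: value)


def matmul(a: Tensor, b: Tensor) -> Tensor:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Tensor, b: Tensor) -> Tensor:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Graph for y = x @ w + b
x = constant("x", [[1.0, 2.0]])
w = constant("w", [[3.0], [4.0]])
b = constant("b", [[0.5]])
y = Node("add", add, (Node("matmul", matmul, (x, w)), b))

print(y.evaluate())  # [[11.5]]
```

Nothing is computed until `evaluate()` is called on the final node, which is the essential property of a graph description: the structure is declared first and run afterwards.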

TensorFlow applications can run on almost any convenient target: a local machine, a cluster in the cloud, iOS and Android devices, CPUs, or GPUs. If you use Google's own cloud, you can run TensorFlow on Google's custom Tensor Processing Unit (TPU) silicon for further acceleration. The resulting models, however, can be deployed on almost any device where they will be used to serve predictions.

TensorFlow 2.0, released in October 2019, revamped the framework in many ways based on user feedback, making it easier to work with (for example, by using the relatively simple Keras API for model training) and more performant. Distributed training is easier to run thanks to a new API, and support for TensorFlow Lite makes it possible to deploy models on a greater variety of platforms. However, code written for earlier versions of TensorFlow must be rewritten, sometimes only slightly and sometimes significantly, to take full advantage of the new TensorFlow 2.0 features.

A trained model can be used to serve predictions through a Docker container using REST or gRPC APIs. For more advanced serving scenarios, you can use Kubernetes.
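As a rough sketch of what a REST prediction call can look like, the snippet below builds (but does not send) a request in the style of the TensorFlow Serving REST API. It assumes the container runs TensorFlow Serving with its REST endpoint on port 8501; the host, the model name `my_model`, and the input row are hypothetical.

```python
import json
import urllib.request


def build_predict_request(host: str, model_name: str, instances: list) -> urllib.request.Request:
    """Build (but do not send) a REST predict request for a served model."""
    url = f"http://{host}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host, model name, and input row.
request = build_predict_request("localhost:8501", "my_model", [[1.0, 2.0, 3.0]])
print(request.full_url)
print(request.data.decode("utf-8"))

# With a server actually running, the request could be sent like this:
# with urllib.request.urlopen(request) as response:
#     predictions = json.loads(response.read())["predictions"]
```

Keeping request construction separate from sending makes the payload easy to inspect before a server is available.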

Using TensorFlow with Python

TensorFlow provides all these capabilities to programmers through the Python language. Python is easy to learn and use, and it provides convenient ways to express how to couple high-level abstractions together. TensorFlow is supported on Python versions 3.7 to 3.10, and while it may work on earlier versions of Python, it is not guaranteed to do so.

Nodes and tensors in TensorFlow are Python objects, and TensorFlow applications are themselves Python applications. The actual math operations, however, are not performed in Python. The transformation libraries available through TensorFlow are written as high-performance C++ binaries. Python simply directs traffic between the pieces and provides high-level programming abstractions to hook them together.

High-level work in TensorFlow (creating nodes and layers and linking them together) uses the Keras library. The Keras API is outwardly simple; a basic three-layer model can be defined in fewer than 10 lines of code, and the training code for that model takes only a few more. But if you want to "lift the hood" and do more fine-grained work, such as writing your own training loop, you can do that.
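A minimal sketch of such a three-layer Keras model follows, assuming TensorFlow 2.x and NumPy are installed. The synthetic data, layer sizes, and hyperparameters are arbitrary choices for illustration, not recommendations.

```python
import numpy as np
import tensorflow as tf

# Synthetic data (an assumption for illustration): 256 samples, 8 features, 3 classes.
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 8)).astype("float32")
labels = rng.integers(0, 3, size=256)

# A basic three-layer model.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Training takes only a few more lines.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
history = model.fit(features, labels, epochs=2, batch_size=32, verbose=0)

# One row of class probabilities per input sample.
predictions = model.predict(features[:4], verbose=0)
print(predictions.shape)  # (4, 3)
```

Because the labels here are random, the accuracy is meaningless; the point is only the shape of the workflow: define, compile, fit, predict.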

Using TensorFlow with JavaScript

Python is the most popular language for working with TensorFlow and machine learning in general. But JavaScript is now also a first-class language for TensorFlow, and one of JavaScript's huge advantages is that it runs anywhere there is a web browser.

TensorFlow.js, as the JavaScript TensorFlow library is called, uses the WebGL API to accelerate computations on whatever GPU is available in the system. It can also run on a WebAssembly back end, which is faster than the regular JavaScript back end when you are running only on a CPU, although it is best to use a GPU whenever possible. Pre-built models let you get simple projects up and running quickly and give you an idea of how things work.

TensorFlow Lite

Trained TensorFlow models can also be deployed on edge computing or mobile devices, such as iOS or Android systems. The TensorFlow Lite toolset optimizes TensorFlow models to run well on such devices by letting you trade off model size against accuracy. A smaller model (for example, 12MB versus 25MB, or even 100MB or more) is less accurate, but the loss in accuracy is usually small and is more than offset by the model's speed and energy efficiency.
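One common way to shrink a model is to store its weights at lower precision. The sketch below shows the general idea with 8-bit affine quantization in plain Python. It is an illustration of the size/accuracy trade-off, not the TensorFlow Lite converter's actual implementation; the random weights and the helper names are hypothetical.

```python
import random
import struct


def quantize(weights, num_bits=8):
    """Map floats onto integers in [0, 2**num_bits - 1] using a scale and an offset."""
    low, high = min(weights), max(weights)
    levels = 2 ** num_bits - 1
    scale = (high - low) / levels or 1.0  # fall back to 1.0 if all weights are equal
    return [round((w - low) / scale) for w in weights], scale, low


def dequantize(codes, scale, low):
    """Recover approximate float values from the integer codes."""
    return [code * scale + low for code in codes]


random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]  # stand-in for model weights

codes, scale, low = quantize(weights)
restored = dequantize(codes, scale, low)

float_bytes = len(struct.pack(f"{len(weights)}f", *weights))  # 4 bytes per float32
int8_bytes = len(bytes(codes))                                # 1 byte per weight
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(float_bytes, int8_bytes)         # 4000 1000
print(max_error <= scale / 2 + 1e-12)  # True
```

The stored weights shrink to a quarter of their size, while each restored weight differs from the original by at most half a quantization step.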

Why use TensorFlow

The biggest benefit TensorFlow provides for machine learning development is abstraction. Developers can focus on overall application logic rather than dealing with the details of implementing algorithms or figuring out the correct way to connect the output of one function to the input of another. TensorFlow takes care of the details behind the scenes.

TensorFlow offers additional conveniences for developers who need to debug and inspect TensorFlow applications. Each graph operation can be evaluated and modified separately and transparently, instead of constructing the entire graph as a single opaque object and evaluating it all at once. This so-called "eager execution mode" was available as an option in older versions of TensorFlow and is now standard.
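A small sketch of the difference, assuming TensorFlow 2.x: in eager mode an operation returns a concrete value as soon as it is called, while wrapping the same computation in `tf.function` has TensorFlow trace it into a graph first. The function name `graph_matmul` is just an example.

```python
import tensorflow as tf

a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
b = tf.constant([[1.0], [1.0]])

# Eager mode: the operation runs immediately and its value can be inspected.
product = tf.matmul(a, b)
print(tf.executing_eagerly())    # True
print(product.numpy().tolist())  # [[3.0], [7.0]]


# Wrapping the same computation in tf.function traces it into a graph,
# which TensorFlow then executes as a unit.
@tf.function
def graph_matmul(x, y):
    return tf.matmul(x, y)


print(graph_matmul(a, b).numpy().tolist())  # [[3.0], [7.0]]
```

Both paths give the same result; the eager path is easier to step through in a debugger, while the traced path gives TensorFlow a whole graph to optimize.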

The TensorBoard visualization suite lets you inspect and profile how your graphs run through an interactive, web-based dashboard. The TensorBoard.dev service (hosted by Google) lets you host and share machine learning experiments written in TensorFlow. It is free to use, with storage for up to 100M scalars, 1GB of tensor data, and 1GB of binary object data. (Note that any data hosted on TensorBoard.dev is public, so do not use it for sensitive projects.)

TensorFlow also gains many advantages from the backing of an A-list commercial organization in Google. Google has fueled the project's rapid pace of development and created many significant offerings that make TensorFlow easier to deploy and use. The TPU silicon described above, which accelerates performance in Google's cloud, is just one example.

Using TensorFlow for deterministic model training

A few details of TensorFlow's implementation make it hard to obtain fully deterministic model training results for some training jobs. Sometimes a model trained on one system will differ slightly from a model trained on another, even when both are fed exactly the same data. The reasons for this variance are slippery: one is how and where random numbers are seeded; another is certain non-deterministic behavior when using GPUs. The 2.0 branch of TensorFlow has an option to enable determinism across an entire workflow with a few lines of code. This feature comes at a performance cost, however, and should be used only when debugging a workflow.
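A minimal sketch of what those few lines can look like, assuming a recent TensorFlow 2.x release that provides `tf.keras.utils.set_random_seed` and `tf.config.experimental.enable_op_determinism` (older 2.x releases may not have them). The helper `seeded_draw` and the seed value are hypothetical.

```python
import numpy as np
import tensorflow as tf

# Ask TensorFlow to use deterministic op implementations (this may slow training).
tf.config.experimental.enable_op_determinism()


def seeded_draw(seed: int) -> np.ndarray:
    # Seeds Python's random module, NumPy, and TensorFlow in one call.
    tf.keras.utils.set_random_seed(seed)
    return tf.random.normal((2, 3)).numpy()


first = seeded_draw(42)
second = seeded_draw(42)
print(np.array_equal(first, second))  # True
```

Re-seeding before each draw yields identical values, which is the behavior you want when comparing two runs of the same workflow during debugging.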

TensorFlow competes with PyTorch, CNTK and MXNet

TensorFlow competes with many other machine learning frameworks. PyTorch, CNTK, and MXNet are the three main frameworks that serve many of the same needs. Let’s take a quick look at where they stand out and fall short compared to TensorFlow:

- PyTorch is built with Python and has many other similarities to TensorFlow: hardware-accelerated components under the hood, a highly interactive development model that allows design-as-you-go work, and many useful components already included. PyTorch is often a better choice for rapid development of projects that need to be up and running in a short time, but TensorFlow is better suited to larger projects and more complex workflows.

- CNTK, the Microsoft Cognitive Toolkit, is similar to TensorFlow in using a graph structure to describe data flow, but it focuses mainly on creating deep learning neural networks. CNTK handles many neural network jobs faster and has a broader set of APIs (Python, C++, C#, Java). But it is currently not as easy to learn or deploy as TensorFlow. Both are permissively licensed: CNTK under the MIT license, TensorFlow under the Apache license. CNTK is also less actively developed; its last major release was in 2019.

- Apache MXNet, adopted by Amazon as the premier deep learning framework on AWS, scales almost linearly across multiple GPUs and multiple machines. MXNet also supports a broad range of language APIs (Python, C++, Scala, R, JavaScript, Julia, Perl, Go), although its native APIs are not as pleasant to work with as TensorFlow's. It also has a far smaller community of users and developers.

Original title: What is TensorFlow? The machine learning library explained

The above is the detailed content of Why can TensorFlow do machine learning development?. For more information, please follow other related articles on the PHP Chinese website!

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

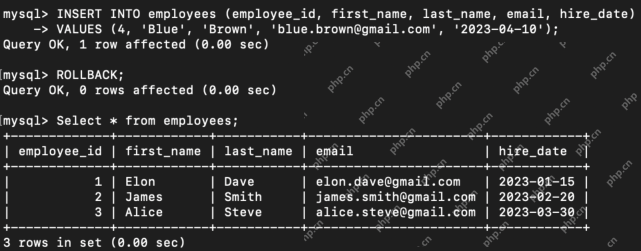

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AM

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AMIntroduction Efficient database management hinges on skillful transaction handling. Structured Query Language (SQL) provides powerful tools for this, offering commands to maintain data integrity and consistency. COMMIT and ROLLBACK are central to t

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AM

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AMPython GUI Development Simplified with PySimpleGUI Developing user-friendly graphical interfaces (GUIs) in Python can be challenging. However, PySimpleGUI offers a streamlined and accessible solution. This article explores PySimpleGUI's core functio

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor