How to split a dataset correctly? Summary of three common methods

Splitting a dataset into training and validation sets helps us understand how well a model generalizes to new, unseen data. An overfitted model may not generalize well to such data, so it cannot make good predictions.

An appropriate validation strategy is the first step toward making good predictions and realizing the business value of AI models. This article summarizes some common data splitting strategies.

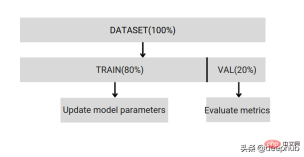

Simple training and test split

Divide the dataset into two parts, training and validation, using 80% of the data for training and 20% for validation. You can do this with scikit-learn's random sampling.

First of all, the random seed needs to be fixed; otherwise runs cannot be compared on the same split and results cannot be reproduced during debugging. If the dataset is small, there is no guarantee that the validation split is uncorrelated with the training split. If the data is unbalanced, a purely random split may not preserve the class ratio in both parts.

A simple split is therefore mainly useful for development and debugging. It is not reliable enough for real training, and the following splitting methods help address these problems.
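As a minimal sketch of the 80/20 split with a fixed seed, assuming scikit-learn's `train_test_split` and a synthetic toy dataset (the data, the variable names, and the seed value 42 are illustrative and not from the original article):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data (illustrative only): 100 samples, 3 features, 20% positive class.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = np.array([1] * 20 + [0] * 80)

# A fixed random_state makes the split reproducible across runs.
# stratify=y asks for the same class ratio in both parts.
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(X_train.shape, X_val.shape)
print(y_train.mean(), y_val.mean())
```

Dropping `stratify=y` gives the purely random split, in which the class ratio of the two parts can drift apart on small or unbalanced data.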

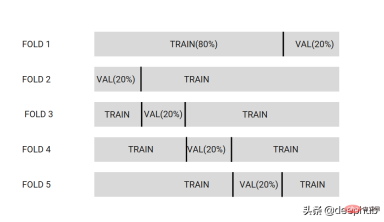

K-fold cross validation

Split the data set into k partitions. In the image below, the dataset is divided into 5 partitions.

Select one partition as the validation set and use the other partitions as the training set. Repeating this for each partition trains the model on a different combination of partitions each time.

In the end, K different models are obtained. At inference time, these models are combined with an ensemble method to make predictions.
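The procedure can be sketched roughly as follows, assuming scikit-learn's `KFold` and a `LogisticRegression` model on synthetic data. The model choice, the data, and averaging predicted probabilities as the ensemble step are assumptions made for illustration:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

# Synthetic data (illustrative only); the last 10 rows stand in for unseen data.
X_all, y_all = make_classification(n_samples=210, n_features=5, random_state=0)
X, y = X_all[:200], y_all[:200]
X_new = X_all[200:]

kf = KFold(n_splits=5, shuffle=True, random_state=42)
models, scores = [], []
for train_idx, val_idx in kf.split(X):
    # Train on k-1 partitions, validate on the remaining one.
    model = LogisticRegression(max_iter=1000)
    model.fit(X[train_idx], y[train_idx])
    scores.append(model.score(X[val_idx], y[val_idx]))
    models.append(model)

# Ensemble step: average the predicted probabilities of the K models.
avg_proba = np.mean([m.predict_proba(X_new) for m in models], axis=0)
print(len(models), np.mean(scores), avg_proba.shape)
```

The mean of `scores` is the cross-validated estimate of performance, while `avg_proba` is the combined prediction of the K models on new data.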

K is usually set to one of 3, 5, 7, 10, or 20.

If you want a performance estimate with low bias, use a higher K (for example 20). If you are building a model for variable selection, use a lower K (3 or 5); the estimate will have lower variance.

Advantages:

- By averaging model predictions, you can improve model performance on unseen data drawn from the same distribution.

- This is a widely used method to obtain good production models.

- Different ensembling techniques can be used to create a prediction for every sample in the dataset, and these predictions can be used to improve the model. These are called OOF (out-of-fold) predictions.

Issues:

- If you have an unbalanced dataset, use Stratified-kFold.

- If you retrain a model on the entire dataset, you cannot compare its performance with any model trained with k-Fold, because those models are trained on k-1 folds, not on the entire dataset.
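As a hedged illustration of out-of-fold predictions, the sketch below uses scikit-learn's `cross_val_predict`, which returns, for every sample, the prediction of the model whose training folds did not contain that sample. The synthetic data and the `LogisticRegression` model are assumptions for illustration only:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict

# Synthetic data (illustrative only).
X, y = make_classification(n_samples=200, n_features=5, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=42)

# Each sample is predicted by the model that did NOT see it during training.
oof_proba = cross_val_predict(
    LogisticRegression(max_iter=1000), X, y, cv=cv, method="predict_proba"
)
print(oof_proba.shape)  # one row of class probabilities per sample
```

Such OOF predictions can serve, for example, as input features for a second-stage model.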

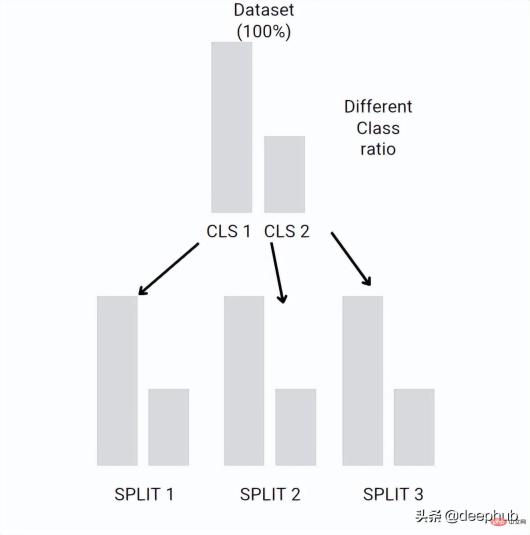

Stratified-kFold

Stratified-kFold retains the ratio between classes in each fold. Suppose the dataset is unbalanced: Class1 has 10 examples and Class2 has 100 examples. Stratified-kFold creates each fold with the same class ratio as the original dataset.

The idea is similar to K-fold cross validation, but with the same ratio for each fold as the original dataset.

Each split preserves the initial ratio between classes. If your dataset is large, plain K-fold cross-validation may also preserve the proportions, but only by chance, whereas Stratified-kFold preserves them by construction and can therefore be used with small datasets.
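The 10-versus-100 example can be checked with a short sketch, assuming scikit-learn's `StratifiedKFold`. The choice of 5 folds and the placeholder feature matrix are illustrative assumptions:

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# 10 examples of class 1 and 100 examples of class 2, as in the text.
y = np.array([1] * 10 + [2] * 100)
X = np.zeros((len(y), 1))  # features are irrelevant for the split itself

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
fold_counts = []
for train_idx, val_idx in skf.split(X, y):
    # Count how many examples of class 1 and class 2 land in this validation fold.
    counts = np.bincount(y[val_idx], minlength=3)[1:]
    fold_counts.append(tuple(int(c) for c in counts))

print(fold_counts)  # each validation fold holds 2 of class 1 and 20 of class 2
```

Every validation fold keeps the original 1:10 ratio, which a plain shuffled K-fold split would not guarantee on a dataset this small.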

Bootstrap and Subsampling

Bootstrap and Subsampling are similar to K-fold cross-validation, but they do not have fixed folds. They randomly select some data from the dataset for training, use the remaining data for validation, and repeat this n times.

Bootstrap means sampling with replacement, which we have introduced in detail in previous articles.

When should you use them? Bootstrap and Subsampling are worth using only when the standard error of the estimated metric is large. This may be due to outliers in the dataset.
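The two resampling schemes can be sketched with the Python standard library alone. The function names `bootstrap_splits` and `subsample_splits`, the 80% training fraction, and the use of the never-drawn samples as the validation set are assumptions made for illustration:

```python
import random

def bootstrap_splits(n_samples, n_rounds, seed=0):
    """Yield (train, val) index lists; train is drawn WITH replacement."""
    rng = random.Random(seed)
    for _ in range(n_rounds):
        train = [rng.randrange(n_samples) for _ in range(n_samples)]
        chosen = set(train)
        # Samples that were never drawn form the validation set.
        val = [i for i in range(n_samples) if i not in chosen]
        yield train, val

def subsample_splits(n_samples, n_rounds, train_fraction=0.8, seed=0):
    """Yield (train, val) index lists; train is drawn WITHOUT replacement."""
    rng = random.Random(seed)
    n_train = int(n_samples * train_fraction)
    for _ in range(n_rounds):
        train = rng.sample(range(n_samples), n_train)
        chosen = set(train)
        val = [i for i in range(n_samples) if i not in chosen]
        yield train, val

for train, val in bootstrap_splits(n_samples=20, n_rounds=3):
    print(len(train), len(val))
```

Evaluating the metric on each round's validation set and taking the spread of those values gives an estimate of the metric's standard error.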

Summary

In machine learning, k-fold cross-validation is usually the starting point. If the dataset is unbalanced, use Stratified-kFold. If there are many outliers, Bootstrap or other methods can be used to improve the data split.

The above is the detailed content of How to split a dataset correctly? Summary of three common methods. For more information, please follow other related articles on the PHP Chinese website!

Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PM

Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PMThe 2025 Artificial Intelligence Index Report released by the Stanford University Institute for Human-Oriented Artificial Intelligence provides a good overview of the ongoing artificial intelligence revolution. Let’s interpret it in four simple concepts: cognition (understand what is happening), appreciation (seeing benefits), acceptance (face challenges), and responsibility (find our responsibilities). Cognition: Artificial intelligence is everywhere and is developing rapidly We need to be keenly aware of how quickly artificial intelligence is developing and spreading. Artificial intelligence systems are constantly improving, achieving excellent results in math and complex thinking tests, and just a year ago they failed miserably in these tests. Imagine AI solving complex coding problems or graduate-level scientific problems – since 2023

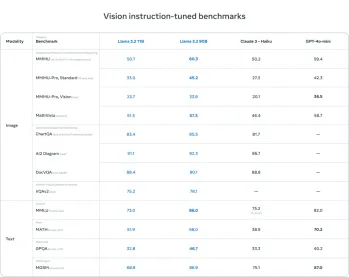

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PM

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PMMeta's Llama 3.2: A Leap Forward in Multimodal and Mobile AI Meta recently unveiled Llama 3.2, a significant advancement in AI featuring powerful vision capabilities and lightweight text models optimized for mobile devices. Building on the success o

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PM

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PMThis week's AI landscape: A whirlwind of advancements, ethical considerations, and regulatory debates. Major players like OpenAI, Google, Meta, and Microsoft have unleashed a torrent of updates, from groundbreaking new models to crucial shifts in le

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PM

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PMThe comforting illusion of connection: Are we truly flourishing in our relationships with AI? This question challenged the optimistic tone of MIT Media Lab's "Advancing Humans with AI (AHA)" symposium. While the event showcased cutting-edg

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AM

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AMIntroduction Imagine you're a scientist or engineer tackling complex problems – differential equations, optimization challenges, or Fourier analysis. Python's ease of use and graphics capabilities are appealing, but these tasks demand powerful tools

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AM

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AMMeta's Llama 3.2: A Multimodal AI Powerhouse Meta's latest multimodal model, Llama 3.2, represents a significant advancement in AI, boasting enhanced language comprehension, improved accuracy, and superior text generation capabilities. Its ability t

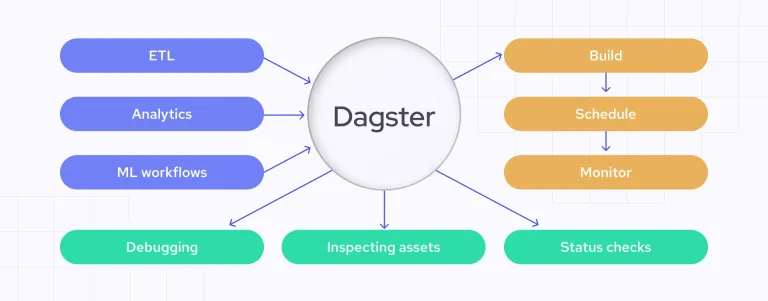

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AM

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AMData Quality Assurance: Automating Checks with Dagster and Great Expectations Maintaining high data quality is critical for data-driven businesses. As data volumes and sources increase, manual quality control becomes inefficient and prone to errors.

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AM

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AMMainframes: The Unsung Heroes of the AI Revolution While servers excel at general-purpose applications and handling multiple clients, mainframes are built for high-volume, mission-critical tasks. These powerful systems are frequently found in heavil

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 Linux new version

SublimeText3 Linux latest version

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Atom editor mac version download

The most popular open source editor