Detailed explanation with pictures and text! What is Java memory model

- WBOY

- 2022-03-22 18:00:41

This article introduces the Java memory model, covering why a memory model exists, the problems of concurrent programming, and the relationship between the JVM's runtime memory areas and hardware memory. I hope it helps everyone.


In interviews, interviewers often like to ask: "Tell me about the Java Memory Model (JMM)."

The candidate was ecstatic, having just memorized the answer to this very question: "Java memory is mainly divided into five major areas: the heap, the method area, the virtual machine stack, the native method stack, and the PC register, blah blah blah..."

The interviewer smiled knowingly, a glint in his eye: "Okay, that's all for today. Go home and wait for our notification."

Generally speaking, when you hear "wait for our notification", the interview has most likely failed. Why? Because the candidate misunderstood the question: the interviewer wanted to test the JMM, but as soon as the candidate heard the keywords "Java memory", he started reciting a memorized stock answer. The Java Memory Model (JMM) and the Java runtime memory areas are very different things. Don't go away; read on, and promise me you'll read to the end.

1. Why do we need a memory model?

To answer this question, we need to first understand the traditional computer hardware memory architecture. Okay, I'm going to start drawing.

1.1. Hardware memory architecture

(1) CPU

Anyone who has been in a server room knows that large servers are usually fitted with multiple CPUs, and each CPU has multiple cores, so several CPUs or cores can work at the same time (in parallel). If you start a multi-threaded task in Java, its threads may well be spread across different CPUs, so at a given moment parts of your task are truly executing in parallel.
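To check how many processors (cores) the JVM can schedule threads on, `Runtime.getRuntime().availableProcessors()` is the standard call. The minimal sketch below (the class `ParallelWorkers` and its method are hypothetical names for illustration) starts one thread per reported processor. Whether those threads really run on separate cores at the same moment is decided by the operating system's scheduler, not by Java.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class: starts one worker thread per processor the JVM reports.
class ParallelWorkers {

    // Each worker sums 0..perWorker-1 and stores the result in its own array slot.
    static long[] runOnAllCores(int perWorker) {
        int cpus = Runtime.getRuntime().availableProcessors();
        long[] results = new long[cpus];
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < cpus; i++) {
            final int id = i;
            Thread t = new Thread(() -> {
                long sum = 0;
                for (int k = 0; k < perWorker; k++) {
                    sum += k;
                }
                results[id] = sum; // no two threads write the same slot
            });
            threads.add(t);
            t.start();
        }
        try {
            for (Thread t : threads) {
                t.join(); // join() also makes the worker's writes visible to this thread
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println("Available processors: " + Runtime.getRuntime().availableProcessors());
        long[] results = runOnAllCores(1_000_000);
        System.out.println("Workers finished: " + results.length);
    }
}
```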

(2) CPU Register

CPU registers are built into the CPU itself, and operating on registers is orders of magnitude faster than operating on main memory.

(3) CPU Cache Memory

CPU Cache Memory is the CPU cache. It sits between the registers and main memory and is usually organized into levels such as L1 and L2. Reading from main memory is far faster than reading from a hard disk, but main memory is still orders of magnitude slower than the CPU. Therefore, a multi-level cache is introduced between the CPU and main memory as a buffer.

(4) Main Memory

Main Memory is the main memory, which is much larger than the L1 and L2 caches.

Note: Some high-end machines also have an L3 (level-three) cache.

1.2. Cache consistency problem

Because main memory is orders of magnitude slower than the CPU, the traditional computer memory architecture introduces a cache as a buffer between the CPU and main memory. The CPU keeps frequently used data in the cache, and after an operation completes, it synchronizes the result back to main memory.

Using a cache solves the speed mismatch between the CPU and main memory, but it also introduces a new problem: the cache consistency problem (usually called cache coherence).

In a multi-CPU system (or a single-CPU multi-core system), each CPU core has its own cache, and all cores share the same main memory (Main Memory). When the computing tasks of several CPUs involve the same area of main memory, each CPU reads the data into its own cache to compute on it, so the copies in the different caches may become inconsistent.

Therefore, each CPU must follow a protocol when accessing its cache, reading and writing data according to that protocol so that together they keep the caches consistent. Such protocols include MSI, MESI, MOSI, and the Dragon protocol.
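As a rough illustration of how such a protocol works, here is a minimal sketch of the MESI states for a single cache line as seen by one cache. The type names (`MesiState`, `MesiEvent`, `MesiLine`) are hypothetical, and the model is deliberately simplified: real processors add bus transactions, write-back timing, and vendor-specific variants.

```java
// Simplified, hypothetical model of MESI for one cache line in one cache.
enum MesiState { MODIFIED, EXCLUSIVE, SHARED, INVALID }

enum MesiEvent {
    LOCAL_READ,   // this core reads the line
    LOCAL_WRITE,  // this core writes the line
    REMOTE_READ,  // another core's read is snooped on the bus
    REMOTE_WRITE  // another core announces a write (invalidation)
}

final class MesiLine {
    private MesiState state = MesiState.INVALID;

    // otherCachesHoldLine only matters on a read miss: it decides EXCLUSIVE vs SHARED.
    MesiState on(MesiEvent event, boolean otherCachesHoldLine) {
        switch (event) {
            case LOCAL_READ:
                if (state == MesiState.INVALID) {
                    state = otherCachesHoldLine ? MesiState.SHARED : MesiState.EXCLUSIVE;
                }
                // M, E, S: read hit, state unchanged
                break;
            case LOCAL_WRITE:
                // From S or I the other copies are invalidated first; from E the upgrade is silent.
                state = MesiState.MODIFIED;
                break;
            case REMOTE_READ:
                // A dirty (M) line is written back first; afterwards both caches share it.
                if (state == MesiState.MODIFIED || state == MesiState.EXCLUSIVE) {
                    state = MesiState.SHARED;
                }
                break;
            case REMOTE_WRITE:
                // Another core takes ownership, so our copy is stale (M is written back first).
                state = MesiState.INVALID;
                break;
        }
        return state;
    }

    public static void main(String[] args) {
        MesiLine line = new MesiLine();
        System.out.println(line.on(MesiEvent.LOCAL_READ, false));  // EXCLUSIVE
        System.out.println(line.on(MesiEvent.LOCAL_WRITE, false)); // MODIFIED
        System.out.println(line.on(MesiEvent.REMOTE_READ, false)); // SHARED
        System.out.println(line.on(MesiEvent.REMOTE_WRITE, false)); // INVALID
    }
}
```

Read top to bottom, `main` walks one line through the typical cycle: a read with no other sharers makes it Exclusive, a local write upgrades it to Modified, a snooped read from another core demotes it to Shared, and another core's write invalidates it.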

1.3. Processor optimization and instruction reordering

Adding a cache between the CPU and main memory improves performance, although it can lead to cache consistency issues in multi-threaded concurrent scenarios. Is there any other way to further improve the CPU's execution efficiency? The answer is processor optimization.

To keep the computing units inside the processor as fully utilized as possible, the processor may execute the input code out of order. This is processor optimization.

Besides the processor, many modern programming language compilers perform similar optimizations. For example, Java's just-in-time (JIT) compiler reorders instructions.

Processor optimization is actually a type of reordering. To summarize, reordering can be divided into three types:

- Compiler optimized reordering. The compiler can rearrange the execution order of statements without changing the semantics of a single-threaded program.

- Instruction-level parallel reordering. Modern processors use instruction-level parallelism to overlap the execution of multiple instructions. If there are no data dependencies, the processor can change the order in which the machine instructions corresponding to statements are executed.

- Reordering of the memory system. Because the processor uses cache and read and write buffers, this can make load and store operations appear to be executed out of order.
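The memory-system reordering in the last item can be probed with the classic "store buffering" litmus test, sketched below. The class `ReorderingLitmus` is a hypothetical name. The fields are deliberately plain (non-volatile), so the outcome `r1 == 0 && r2 == 0`, impossible if both threads' statements ran in program order, is permitted; whether it is actually observed depends on the CPU, the JIT, and timing.

```java
// Hypothetical store-buffering litmus test:
//   Thread 1: x = 1; r1 = y;     Thread 2: y = 1; r2 = x;
// In program order at least one of r1, r2 must be 1. Seeing both 0 means a
// store was effectively reordered after the following load.
class ReorderingLitmus {
    static int x, y;   // deliberately plain (non-volatile) shared fields
    static int r1, r2;

    // Returns how many of `runs` trials ended with r1 == 0 && r2 == 0.
    static int countBothZero(int runs) {
        int bothZero = 0;
        for (int i = 0; i < runs; i++) {
            x = 0; y = 0; r1 = 0; r2 = 0; // visible to the threads: start() happens-before their actions
            Thread t1 = new Thread(() -> { x = 1; r1 = y; });
            Thread t2 = new Thread(() -> { y = 1; r2 = x; });
            t1.start();
            t2.start();
            try {
                t1.join(); // join() makes r1 and r2 visible to this thread
                t2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            if (r1 == 0 && r2 == 0) {
                bothZero++;
            }
        }
        return bothZero;
    }

    public static void main(String[] args) {
        int runs = 20_000;
        System.out.println("r1 == 0 && r2 == 0 seen " + countBothZero(runs) + " / " + runs + " times");
    }
}
```

Because starting a thread is slow, the two threads often do not overlap at all, so the count may well be zero on a given machine. Purpose-built harnesses such as OpenJDK's jcstress are designed to provoke these outcomes far more reliably.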

2. Concurrent programming issues

The sections above covered a lot of hardware. Some readers may be a little confused: after such a long detour, what does any of this have to do with the Java memory model? Don't worry; let's work through it step by step.

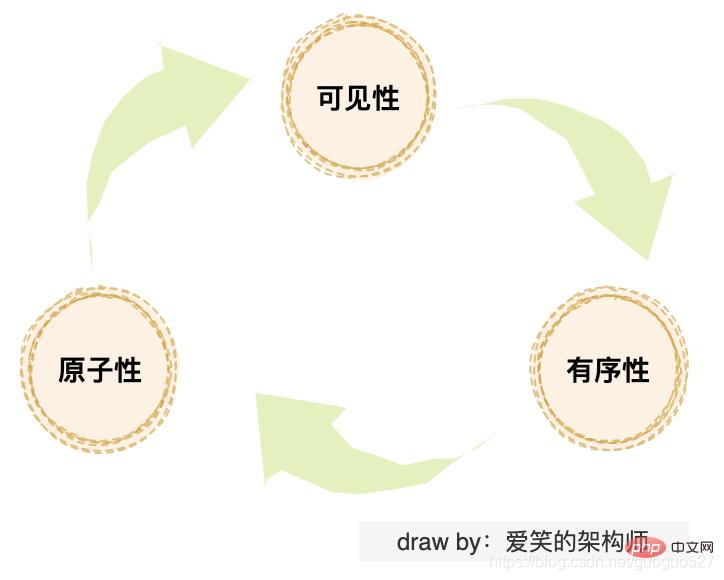

Anyone familiar with Java concurrency will recognize these three issues: the visibility issue, the atomicity issue, and the ordering issue. Looked at more deeply, they are actually caused by the cache consistency, processor optimization, and instruction reordering described above.

The cache consistency problem is really a visibility problem, processor optimization may cause atomicity problems, and instruction reordering may cause ordering problems. See how they are all connected?
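The visibility problem is easiest to see with a stop flag. In the minimal sketch below (hypothetical class `StopFlag`), a worker thread spins until another thread clears `running`. Declaring the field `volatile` guarantees the worker eventually sees the write; without it, the JIT is allowed to read the field once and hoist it out of the loop, so the worker may never stop. Whether the non-volatile version actually hangs depends on the JVM and hardware.

```java
// Hypothetical visibility demo: a worker spins until another thread clears a flag.
class StopFlag {
    // volatile: a write by one thread is guaranteed to become visible to later
    // reads by other threads. Without it the loop's read may be hoisted by the JIT.
    private volatile boolean running = true;

    void work() {
        while (running) {
            Thread.onSpinWait(); // busy-wait hint to the CPU (Java 9+)
        }
    }

    void stop() {
        running = false; // volatile write
    }

    // Starts a worker, lets it spin briefly, stops it, and reports whether it exited.
    static boolean runOnce(long spinMillis) {
        StopFlag flag = new StopFlag();
        Thread worker = new Thread(flag::work);
        worker.setDaemon(true); // don't keep the JVM alive if the worker never stops
        worker.start();
        try {
            Thread.sleep(spinMillis);
            flag.stop();
            worker.join(5_000); // wait at most 5 seconds
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + runOnce(100));
    }
}
```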

Every problem needs a solution, so what is it here? The first idea is a simple, crude one: get rid of the cache and let the CPU talk to main memory directly, which solves the visibility problem, and disable processor optimization and instruction reordering, which solves the atomicity and ordering problems. But that would throw away all of that progress overnight, so it is obviously undesirable.

Instead, engineers defined memory models on top of physical machines to standardize memory read and write operations. A memory model mainly uses two methods to solve concurrency problems: limiting processor optimization and using memory barriers.
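In everyday Java you rarely place memory barriers by hand: `volatile`, `synchronized`, and the `java.util.concurrent` classes insert whatever the hardware needs. Since Java 9, however, `java.lang.invoke.VarHandle` exposes ordering modes directly. The sketch below (hypothetical class `Publication`) publishes a value with a release write and consumes it with an acquire read, the kind of ordering a barrier pair provides. Treat it as an illustration under those assumptions rather than a recommended pattern.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

// Hypothetical "publish one value" example using release/acquire access modes.
class Publication {
    private int payload;   // plain field, written before the flag
    private boolean ready; // accessed only through READY below

    private static final VarHandle READY;

    static {
        try {
            READY = MethodHandles.lookup()
                    .findVarHandle(Publication.class, "ready", boolean.class);
        } catch (ReflectiveOperationException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    void publish(int value) {
        payload = value;              // (1) plain write
        READY.setRelease(this, true); // (2) release write: (1) cannot be reordered after it
    }

    int awaitValue() {
        // acquire read: the payload read below cannot be reordered before it
        while (!(boolean) READY.getAcquire(this)) {
            Thread.onSpinWait();
        }
        return payload; // sees the value written before setRelease
    }

    // Publishes `value` from the calling thread and returns what a reader thread saw.
    static int demo(int value) {
        Publication p = new Publication();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = p.awaitValue());
        reader.start();
        p.publish(value);
        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("reader saw " + demo(42));
    }
}
```

A plain `volatile boolean ready` would give the same guarantee here (with stronger ordering overall); the VarHandle version only makes the acquire/release pairing explicit.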

3. Java memory model

Even under the same memory model specification, different languages may implement it somewhat differently. Next, we focus on the implementation principles of the Java memory model.

3.1. The relationship between the Java runtime memory area and hardware memory

Anyone familiar with the JVM knows that its runtime memory is divided into areas such as the stack and the heap. In fact, these are logical concepts defined by the JVM; the traditional hardware memory architecture has no notion of a stack or a heap.

As the figure shows, data from both the stack and the heap can reside in the CPU caches and in main memory, so there is no direct correspondence between the two.

3.2. The relationship between Java threads and main memory

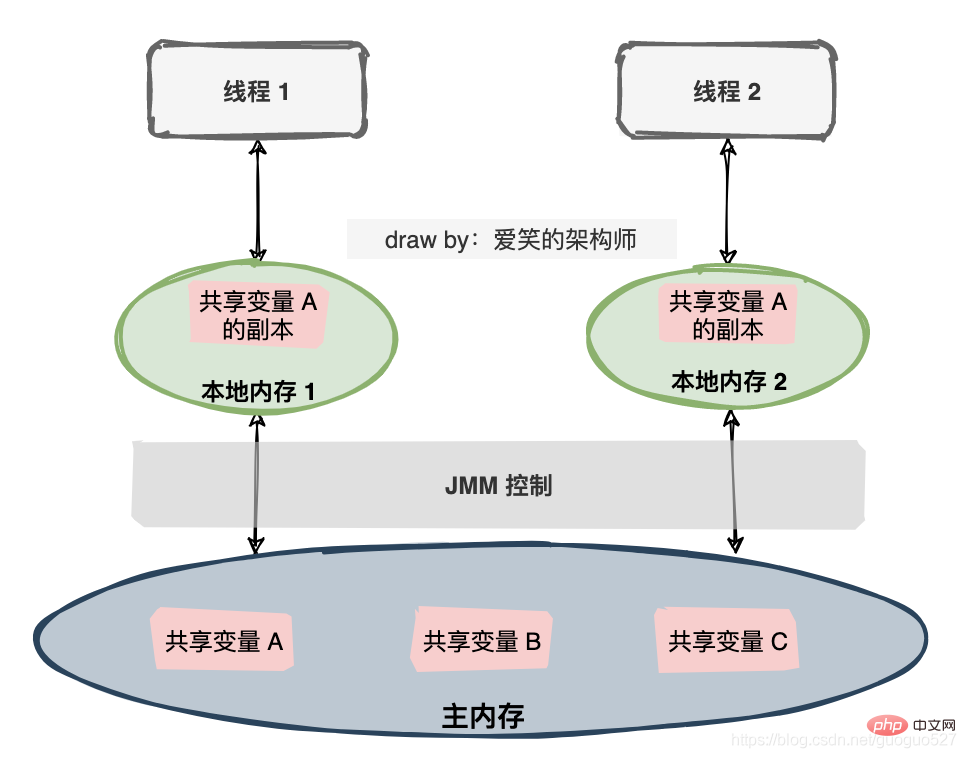

The Java memory model is a specification that defines many things:

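- All shared variables (instance fields, static fields, and array elements) are stored in main memory (Main Memory). Local variables and method parameters are private to a thread and are never shared.

- Each thread has its own private local memory (Local Memory), which stores copies of the shared variables that the thread reads and writes.

- A thread must perform all operations on variables in its local memory; it cannot read or write main memory directly.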

- Different threads cannot directly access variables in each other's local memory.

Reading text is boring, so I drew another picture:

3.3. Communication between threads

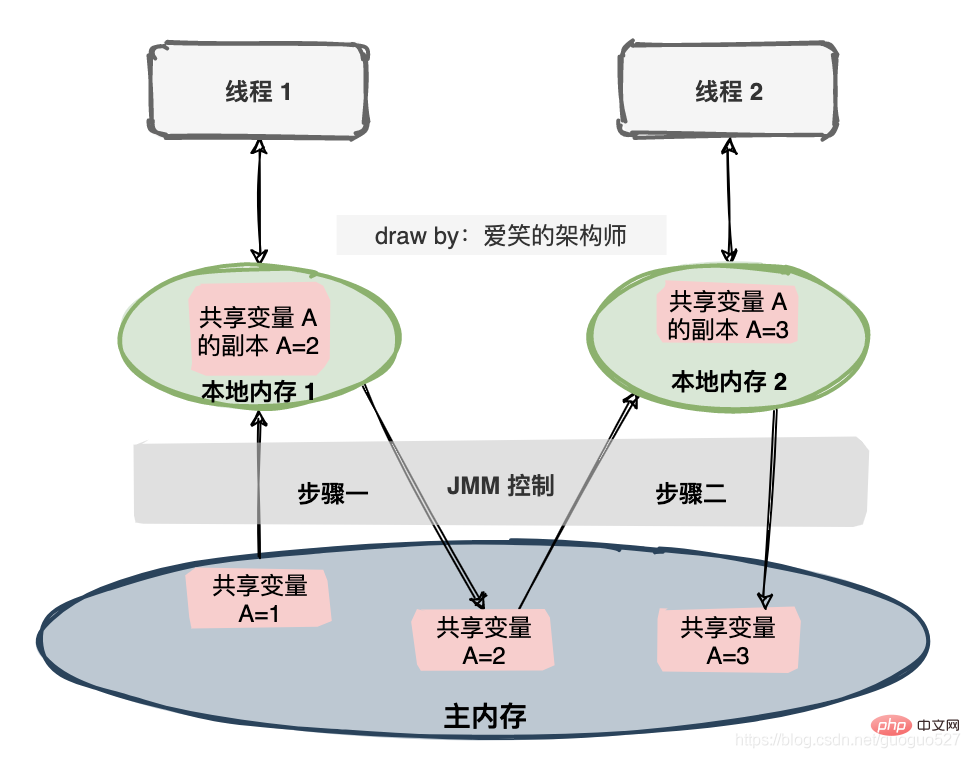

Suppose two threads both operate on a shared variable whose initial value is 1, and each thread increments it by 1, so the expected final value is 3. Under the JMM specification, this involves a series of operations.
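This scenario can be reproduced with real threads. In the minimal sketch below (hypothetical class `SharedCounter`), the counter starts at 1 and each of two threads adds `perThread`. `value++` is a read-modify-write, so without a lock both threads can read the same value and one update is lost; `synchronized` makes each increment atomic and guarantees the final value `1 + 2 * perThread`.

```java
// Hypothetical demo: a shared counter starts at 1 and two threads each add perThread.
class SharedCounter {
    private int value = 1;

    // value++ is read, add, write back: two threads can read the same value
    // and one of the updates is lost.
    void unsafeIncrement() {
        value++;
    }

    // The monitor lock makes the read-modify-write atomic and visible to the next holder.
    synchronized void safeIncrement() {
        value++;
    }

    synchronized int get() {
        return value;
    }

    static int runTwoThreads(int perThread, boolean useLock) {
        SharedCounter counter = new SharedCounter();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                if (useLock) {
                    counter.safeIncrement();
                } else {
                    counter.unsafeIncrement();
                }
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("with lock:    " + runTwoThreads(100_000, true));  // always 200001
        System.out.println("without lock: " + runTwoThreads(100_000, false)); // may be less
    }
}
```

With `perThread = 1`, as in the text, a lost update is rare because the two threads barely overlap; larger counts make it show up more readily. `java.util.concurrent.atomic.AtomicInteger.incrementAndGet()` is a lock-free alternative to the synchronized method.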

To control the interaction between main memory and local memory more precisely, the Java memory model defines eight operations:

- lock: Lock. Acts on variables in main memory, marking a variable as exclusive to a thread.

- unlock: Unlock. Acts on main memory variables to release a locked variable so that the released variable can be locked by other threads.

- read: Read. Acts on a variable in main memory, transferring its value from main memory to the thread's working memory for the subsequent load operation.

- load: Load. Acts on a variable in working memory, putting the value obtained from main memory by the read operation into the working-memory copy of the variable.

- use: Use. Acts on a variable in working memory, passing its value to the execution engine. This operation is performed whenever the virtual machine encounters a bytecode instruction that needs the variable's value.

- assign: Assign. Acts on a variable in working memory, assigning a value received from the execution engine to it. This operation is performed whenever the virtual machine encounters a bytecode instruction that assigns a value to the variable.

- store: Store. Acts on a variable in working memory, transferring its value to main memory for the subsequent write operation.

- write: Write. Acts on a variable in main memory, putting the value that the store operation obtained from working memory into the variable in main memory.
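To make the eight operations concrete, the toy model below (all names hypothetical) replays them by hand for one shared variable that starts at 1. It is a single-threaded teaching aid, not how a JVM works: it shows one interleaving in which both threads `read`/`load` the value 1 before either `write`s back, losing an update, and a locked sequence that ends at the expected 3.

```java
// Toy, single-threaded model of the JMM's eight abstract operations for one
// shared variable. It replays a chosen interleaving; it is not a JVM implementation.
class JmmToyModel {
    int mainMemory = 1;       // the shared variable in main memory
    Integer lockOwner = null; // id of the thread holding the lock, if any

    // A thread's working memory for this variable.
    static final class Worker {
        final int id;
        int fromMain;    // value in transit: set by read, consumed by load
        int workingCopy; // the thread's copy in working memory
        int toMain;      // value in transit: set by store, consumed by write
        Worker(int id) { this.id = id; }
    }

    // Simplification: unlike the real JMM lock, this one is not re-entrant.
    void lock(Worker w) {
        if (lockOwner != null) throw new IllegalStateException("held by thread " + lockOwner);
        lockOwner = w.id;
    }
    void unlock(Worker w) {
        if (lockOwner == null || lockOwner != w.id) throw new IllegalStateException("not held by " + w.id);
        lockOwner = null;
    }
    void read(Worker w)          { w.fromMain = mainMemory; }
    void load(Worker w)          { w.workingCopy = w.fromMain; }
    int use(Worker w)            { return w.workingCopy; } // value handed to the execution engine
    void assign(Worker w, int v) { w.workingCopy = v; }    // result from the execution engine
    void store(Worker w)         { w.toMain = w.workingCopy; }
    void write(Worker w)         { mainMemory = w.toMain; }

    // Both threads read and load 1 before either writes back: one increment is lost.
    static int interleavedWithoutLock() {
        JmmToyModel m = new JmmToyModel();
        Worker a = new Worker(1), b = new Worker(2);
        m.read(a); m.load(a);
        m.read(b); m.load(b);
        m.assign(a, m.use(a) + 1); m.store(a); m.write(a); // main memory = 2
        m.assign(b, m.use(b) + 1); m.store(b); m.write(b); // main memory = 2 again
        return m.mainMemory;
    }

    // Each thread does lock, read, load, use, assign, store, write, unlock in turn.
    static int withLock() {
        JmmToyModel m = new JmmToyModel();
        for (Worker w : new Worker[] {new Worker(1), new Worker(2)}) {
            m.lock(w);
            m.read(w); m.load(w);
            m.assign(w, m.use(w) + 1);
            m.store(w); m.write(w);
            m.unlock(w);
        }
        return m.mainMemory;
    }

    public static void main(String[] args) {
        System.out.println("without lock: " + interleavedWithoutLock()); // 2
        System.out.println("with lock:    " + withLock());               // 3
    }
}
```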

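Note: "Working memory" is another name for local memory. It is an abstraction in the JMM rather than a physical area, standing for the caches, write buffers, registers, and compiler optimizations that can hold a thread's view of a variable.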

4. Summary

Because the CPU and main memory differ in speed by orders of magnitude, the traditional hardware memory architecture introduces multi-level caches. The caches act as a buffer between the CPU and main memory to improve overall performance. This solves the speed mismatch but brings the cache consistency problem.

Data exists in both the caches and main memory, and without rules governing how it is accessed, disaster is inevitable. Therefore, a memory model was abstracted on top of the traditional machine.

Building on this memory model, the Java language defines the JMM specification. Its purpose is to solve the problems that arise when multiple threads communicate through shared memory: inconsistent data in local memory, reordering of code instructions by the compiler, and out-of-order execution by the processor.

To control the interaction between working memory and main memory more precisely, the JMM also defines eight operations: lock, unlock, read, load, use, assign, store, and write.


The above is the detailed content of Detailed explanation with pictures and text! What is Java memory model. For more information, please follow other related articles on the PHP Chinese website!