
Spider of Python

- coldplay.xixi

- 2020-10-12 17:21:51

This article introduces the basics of writing a spider (web crawler) in Python.

1. Web crawler

Web crawlers are also called web spiders. Picture the Internet as a spider's web in which every website is a node; a spider travels the web and grabs the resources we want from each page. As a simple example, searching for 'Python' on Baidu or Google returns a large number of Python-related pages. How do Baidu and Google retrieve the resources you want from such a massive number of pages? They send out large numbers of spiders to crawl web pages, extract keywords, and build an index database; a complex ranking algorithm then orders the results by their relevance to the search keywords.

A journey of a thousand miles begins with a single step. Let's learn how to write a web crawler from the very basics, using Python as the implementation language.
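To make the "crawl, index, search" idea concrete, here is a toy sketch of an index that maps keywords to pages. The page URLs and texts are made up for illustration, and real search engines use far more elaborate indexing and ranking than this.

```python
from collections import defaultdict

# Hypothetical pages a spider might already have fetched (URL -> page text).
pages = {
    "https://example.com/a": "python tutorial for beginners",
    "https://example.com/b": "web spider written in python",
    "https://example.com/c": "cooking recipes",
}


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, keyword):
    """Return the matching URLs in sorted order (no real ranking here)."""
    return sorted(index.get(keyword.lower(), set()))


index = build_index(pages)
print(search(index, "Python"))
```

The lookup is a single dictionary access, which is why an index built ahead of time can answer a query without re-reading every page.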

2. How does Python access the Internet?

If you want to write a web crawler, the first step is to access the Internet. How does Python access the Internet?

In Python, we use the urllib package to access the Internet. (This package was reorganized substantially in Python 3. Python 2 had separate urllib and urllib2 modules; Python 3 merged them into a single urllib package containing four modules: urllib.request, urllib.error, urllib.parse, and urllib.robotparser.) The one used most here is urllib.request.
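Besides urllib.request, the urllib.parse module is useful for taking URLs apart and building form data, and it works without any network access. The sketch below uses the image-viewer URL from section 3.1; the two form fields passed to urlencode are just a small sample.

```python
from urllib.parse import urlsplit, parse_qs, urlencode

page_url = ('http://www.3lian.com/e/ViewImg/index.html'
            '?url=http://img16.3lian.com/gif2016/w1/3/d/61.jpg')

# Split the URL into its components.
parts = urlsplit(page_url)
print(parts.scheme)   # scheme, e.g. http
print(parts.netloc)   # host name
print(parts.path)     # path on the server

# The query string carries another URL in its 'url' parameter.
query = parse_qs(parts.query)
image_url = query['url'][0]
print(image_url)

# urlencode turns a dictionary into form data suitable for a POST body.
form = urlencode({'i': 'i love you', 'doctype': 'json'})
print(form)
```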

We first give the simplest example, how to obtain the source code of a web page:

import urllib.request

response = urllib.request.urlopen('https://docs.python.org/3/')
html = response.read()
print(html.decode('utf-8'))

3. Simple uses of Python networking

To start, we practice with two small demos: one uses Python code to download a picture to the local disk, and the other calls Youdao Translation to build a small translation tool.

3.1 Download an image from its link. The code is as follows:

import urllib.request

response = urllib.request.urlopen('http://www.3lian.com/e/ViewImg/index.html?url=http://img16.3lian.com/gif2016/w1/3/d/61.jpg')
image = response.read()
with open('123.jpg', 'wb') as f:
    f.write(image)

Here response is a response object, which we can inspect:

Input: response.geturl()

->'http://www.3lian.com/e/ViewImg/index.html?url=http://img16.3lian.com/gif2016/w1/3/d/61.jpg'

Input: response.info()

-> an http.client.HTTPMessage object holding the response headers

Input: print(response.info())

->Content-Type: text/html

Last-Modified: Mon, 27 Sep 2004 01:23:20 GMT

Accept-Ranges: bytes

/8.0

Date: Sun, 14 Aug 2016 07:16:01 GMT

Connection: close

Content-Length: 2827
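The headers are worth checking before saving a download. In the output above the Content-Type is text/html, which suggests the server may have returned a viewer page rather than the raw image. The sketch below shows one way to inspect a response; the helper names describe and looks_like_image are illustrative, and a data: URL stands in for a real site so the example runs without a network connection.

```python
import urllib.request


def describe(response):
    """Collect basic facts about a response returned by urlopen()."""
    headers = response.info()
    return {
        'url': response.geturl(),
        'content_type': headers.get_content_type(),
        'charset': headers.get_content_charset(),
    }


def looks_like_image(response):
    """True if the Content-Type header announces image data."""
    return response.info().get_content_maintype() == 'image'


# A data: URL is handled by urllib itself, so nothing is sent over the network.
with urllib.request.urlopen('data:text/html;charset=utf-8,hello') as response:
    facts = describe(response)
    is_image = looks_like_image(response)
    # Decode with the charset the response declares, falling back to UTF-8.
    body = response.read().decode(facts['charset'] or 'utf-8')

print(facts)
print(is_image)
print(body)
```

With a real HTTP response the same calls apply; checking looks_like_image before writing '123.jpg' would catch the case where an HTML page comes back instead of a picture.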

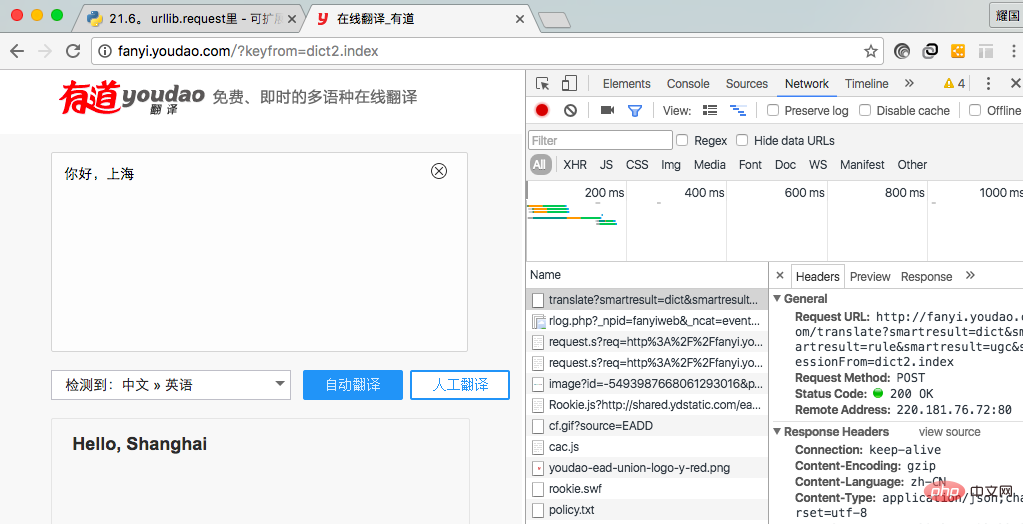

3.2 Use Youdao Dictionary to implement translation

To implement the translation function, we need the request link. Open the Youdao homepage, click Translate, enter the text to translate, and click the Translate button; the browser then sends a request to the server. All we need to do is capture the request address and the request parameters.

I use Google Chrome here to obtain the request address and parameters. Right-click the page and choose Inspect (the menu item may differ between browsers, and between versions of the same browser) to open the view shown in Figure 1. From there we can read the request address, and the request parameters appear under Form Data in the Headers tab.

(Figure 1)

The code is as follows:

import urllib.request
import urllib.parse

url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule&smartresult=ugc&sessionFrom=dict2.index'
data = {}
data['type'] = 'AUTO'
data['i'] = 'i love you'
data['doctype'] = 'json'
data['xmlVersion'] = '1.8'
data['keyfrom'] = 'fanyi.web'
data['ue'] = 'UTF-8'
data['action'] = 'FY_BY_CLICKBUTTON'
data['typoResult'] = 'true'
# Form data must be URL-encoded and converted to bytes before sending.
data = urllib.parse.urlencode(data).encode('utf-8')

# Passing data makes urlopen send a POST request.
response = urllib.request.urlopen(url, data)
html = response.read().decode('utf-8')
print(html)

Running the code above produces:

{"type":"EN2ZH_CN","errorCode":0,"elapsedTime":0,"translateResult":[[{"src":"i love you","tgt":"我爱你"}]],"smartResult":{"type":1,"entries":["","我爱你。"]}}
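Since the reply is JSON, the translated text can be extracted with the json module, and this part can be tried offline on the sample reply shown above. The sketch below is defensive about missing fields; the function name extract_translation is illustrative, and treating errorCode 0 as success is an assumption based only on this one sample.

```python
import json

# The sample reply printed above, copied verbatim.
raw = ('{"type":"EN2ZH_CN","errorCode":0,"elapsedTime":0,'
       '"translateResult":[[{"src":"i love you","tgt":"我爱你"}]],'
       '"smartResult":{"type":1,"entries":["","我爱你。"]}}')


def extract_translation(raw_json):
    """Return the first translated string, or None if the reply is unusable."""
    reply = json.loads(raw_json)
    # Assumption: errorCode 0 signals success, as in the sample reply.
    if reply.get('errorCode') != 0:
        return None
    try:
        return reply['translateResult'][0][0]['tgt']
    except (KeyError, IndexError, TypeError):
        return None


print(extract_translation(raw))
```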

The server's reply is a JSON string, so we can parse it and refine the code:

import urllib.request
import urllib.parse
import json

url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule&smartresult=ugc&sessionFrom=dict2.index'
data = {}
data['type'] = 'AUTO'
data['i'] = 'i love you'
data['doctype'] = 'json'
data['xmlVersion'] = '1.8'
data['keyfrom'] = 'fanyi.web'
data['ue'] = 'UTF-8'
data['action'] = 'FY_BY_CLICKBUTTON'
data['typoResult'] = 'true'
data = urllib.parse.urlencode(data).encode('utf-8')

response = urllib.request.urlopen(url, data)
html = response.read().decode('utf-8')

# Parse the JSON reply and print only the translated text.
target = json.loads(html)
print(target['translateResult'][0][0]['tgt'])

4. Reducing the risk of being blocked

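If the server detects that a request does not come from a browser, it may block it. The server makes this judgment from the 'User-Agent' header, so we can change the value of that field to disguise ourselves. The code is as follows: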

import urllib.request
import urllib.parse
import json

url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule&smartresult=ugc&sessionFrom=dict2.index'
data = {}
data['type'] = 'AUTO'
data['i'] = 'i love you'
data['doctype'] = 'json'
data['xmlVersion'] = '1.8'
data['keyfrom'] = 'fanyi.web'
data['ue'] = 'UTF-8'
data['action'] = 'FY_BY_CLICKBUTTON'
data['typoResult'] = 'true'
data = urllib.parse.urlencode(data).encode('utf-8')

req = urllib.request.Request(url, data)
req.add_header('User-Agent','Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')

# Open the Request object, not the bare URL, so the custom header is actually sent.
response = urllib.request.urlopen(req)
html = response.read().decode('utf-8')

target = json.loads(html)
print(target['translateResult'][0][0]['tgt'])
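A Request object can be inspected before anything is sent, which is a convenient way to confirm that the header and form data are what we expect. The sketch below is a minimal example under that idea; build_request is an illustrative helper name, and the shortened form and User-Agent string are sample values.

```python
import urllib.parse
import urllib.request


def build_request(url, form, user_agent):
    """Build a POST request that carries a custom User-Agent header."""
    data = urllib.parse.urlencode(form).encode('utf-8')
    return urllib.request.Request(url, data=data,
                                  headers={'User-Agent': user_agent})


req = build_request(
    'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'
    '&smartresult=ugc&sessionFrom=dict2.index',
    {'i': 'i love you', 'doctype': 'json'},
    'Mozilla/5.0',
)

print(req.get_method())                # POST, because data was supplied
print(req.get_header('User-agent'))    # Request stores header names capitalized
print(req.data)                        # the encoded form body
```

The header only takes effect when the Request object itself is passed to urlopen; opening the plain URL sends urllib's default User-Agent instead.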

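The approach above disguises the client, but a significant problem remains. An image-downloading crawler, for example, fetches a large number of images in a short time, and the server can judge from the number of requests per IP address whether the traffic is normal. There are two ways around this: add a delay, for example one request every 5 seconds, or use a proxy.

Delayed access (sleep for 5 seconds; the drawback is low throughput):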

import urllib.request
import urllib.parse
import json
import time

while True:
    content = input('please input content(input q exit program):')
    if content == 'q':
        break

    url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule&smartresult=ugc&sessionFrom=dict2.index'
    data = {}
    data['type'] = 'AUTO'
    data['i'] = content
    data['doctype'] = 'json'
    data['xmlVersion'] = '1.8'
    data['keyfrom'] = 'fanyi.web'
    data['ue'] = 'UTF-8'
    data['action'] = 'FY_BY_CLICKBUTTON'
    data['typoResult'] = 'true'
    data = urllib.parse.urlencode(data).encode('utf-8')

    req = urllib.request.Request(url, data)
    req.add_header('User-Agent','Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')

    # Open the Request object so the custom header is actually sent.
    response = urllib.request.urlopen(req)
    html = response.read().decode('utf-8')

    target = json.loads(html)
    print(target['translateResult'][0][0]['tgt'])

    # Wait 5 seconds before the next request.
    time.sleep(5)
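A fixed sleep always waits the full 5 seconds, even when the user took longer than that to type the next input. One possible refinement, sketched below, is a small throttle that sleeps only for the time still remaining since the previous request. The Throttle class is an illustrative helper, not part of urllib; the clock and sleep parameters exist only so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum interval between successive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, None before the first

    def wait(self):
        """Sleep only as long as needed, then record the time of this call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Usage: call wait() right before each request.
throttle = Throttle(0.05)  # short interval so the demo finishes quickly
for _ in range(3):
    throttle.wait()
    print('request would be sent here')
```

In the translation loop above, creating `Throttle(5)` once before the loop and calling `throttle.wait()` before `urlopen` would replace the unconditional `time.sleep(5)`.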

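Proxy access: let a proxy fetch the resource and return it to you. The server sees the proxy's IP address rather than your own, so it cannot restrict you by your address.

Steps:

1. Create a proxy handler. The argument is a dictionary {'type': 'proxy IP:port'}, where the type is http, https, and so on.

proxy_support = urllib.request.ProxyHandler({})

2. Customize and create an opener.

opener = urllib.request.build_opener(proxy_support)

3. Install the opener (a permanent, global install: every later urlopen call uses it).

urllib.request.install_opener(opener)

4. Alternatively, call the opener directly whenever it is needed.

opener.open(url)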
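The steps above can be combined into a small helper. This is a minimal sketch: the proxy address 192.0.2.10:8080 is a placeholder from a documentation-only address range, not a working proxy, and make_proxy_opener is an illustrative name. Nothing is sent over the network until opener.open is called.

```python
import urllib.request

# Hypothetical proxy address; replace it with a proxy you are allowed to use.
PROXY = '192.0.2.10:8080'


def make_proxy_opener(proxy, user_agent='Mozilla/5.0'):
    """Return an opener that routes http requests through the given proxy."""
    proxy_support = urllib.request.ProxyHandler({'http': 'http://' + proxy})
    opener = urllib.request.build_opener(proxy_support)
    # Headers listed here are added to every request made through the opener.
    opener.addheaders = [('User-Agent', user_agent)]
    return opener


opener = make_proxy_opener(PROXY)

# Confirm which proxy settings the opener carries, without opening any URL.
proxy_handlers = [h for h in opener.handlers
                  if isinstance(h, urllib.request.ProxyHandler)]
print(proxy_handlers[0].proxies)

# opener.open(url) would now go through the proxy;
# urllib.request.install_opener(opener) would make urlopen use it as well.
```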

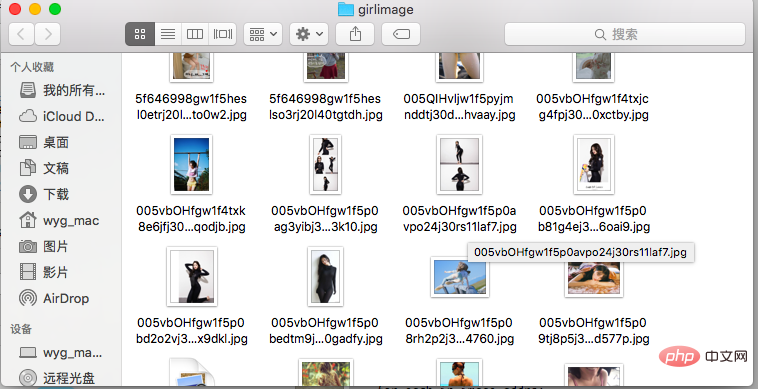

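5. Batch-downloading images from the web

The images are downloaded from Jandan (http://jandan.net).

The key to downloading images is finding the pattern: locate the current page number and the image links on each page, then download the images in a loop. Here is the program (still to be optimized: regular-expression matching, IP proxies):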

import urllib.request
import os

def url_open(url):
    # Fetch a URL with a browser-like User-Agent and return the raw bytes.
    req = urllib.request.Request(url)
    req.add_header('User-Agent','Mozilla/5.0')
    response = urllib.request.urlopen(req)
    html = response.read()
    return html

def get_page(url):
    # Read the current page number that follows the 'current-comment-page' marker.
    html = url_open(url).decode('utf-8')
    # Skip the 20-character marker plus the 3 characters before the number.
    a = html.find('current-comment-page') + 23
    b = html.find(']',a)
    return html[a:b]

def find_image(url):
    # Collect the .jpg addresses that appear in 'img src=' attributes.
    html = url_open(url).decode('utf-8')
    image_addrs = []
    a = html.find('img src=')
    while a != -1:
        # Look for '.jpg' within 150 characters of the attribute.
        b = html.find('.jpg',a,a + 150)
        if b != -1:
            image_addrs.append(html[a+9:b+4])
        else:
            b = a + 9
        a = html.find('img src=',b)
    for each in image_addrs:
        print(each)
    return image_addrs

def save_image(folder,image_addrs):
    # Save each image into the current directory under its original file name.
    for each in image_addrs:
        filename = each.split('/')[-1]
        with open(filename,'wb') as f:
            img = url_open(each)
            f.write(img)

def download_girls(folder = 'girlimage',pages = 20):
    os.mkdir(folder)
    os.chdir(folder)
    url = 'http://jandan.net/ooxx/'
    page_num = int(get_page(url))
    for i in range(pages):
        # Use page_num - i so consecutive pages are visited; the original
        # 'page_num -= i' subtracted cumulatively and skipped pages.
        page_url = url + 'page-' + str(page_num - i) + '#comments'
        image_addrs = find_image(page_url)
        save_image(folder,image_addrs)

if __name__ == '__main__':
    download_girls()
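The program notes regular-expression matching as a possible optimization. The sketch below shows one way find_image's string searching could be replaced with a regular expression; it runs on a made-up HTML snippet rather than the live site, and both the pattern and the name find_image_urls are illustrative. A regular expression is still a rough tool for HTML, so the pattern assumes quoted src attributes that end in .jpg.

```python
import re

# Matches <img ... src="....jpg"> with either single or double quotes.
IMG_SRC = re.compile(r'<img[^>]+src=["\']([^"\']+\.jpg)["\']', re.IGNORECASE)


def find_image_urls(html):
    """Return the .jpg URLs from <img> tags, in page order, without duplicates."""
    seen = set()
    urls = []
    for url in IMG_SRC.findall(html):
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


# Made-up sample markup for demonstration only.
sample = '''
<p><img src="http://example.com/pics/a.jpg" alt="a"></p>
<p><img class="x" src='http://example.com/pics/b.jpg'></p>
<p><img src="http://example.com/pics/a.jpg"></p>
<p><img src="http://example.com/pics/icon.png"></p>
'''
print(find_image_urls(sample))
```

Compared with the find-based loop, the pattern does not depend on a fixed 150-character window or on the exact text 'img src=', and the duplicate check avoids downloading the same file twice.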
