
Java HashMap Analysis

- (*-*)浩 (reposted)

- 2019-10-28 15:35:45, 2677 views

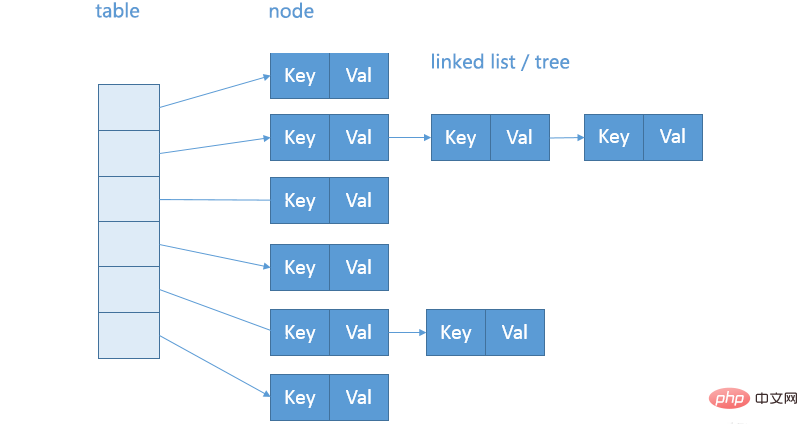

HashMap is a composite structure built from an array and linked lists. A key's hash value determines its position (bucket) in the array; keys whose hash values map to the same bucket are stored together as a linked list. When a list's length reaches a set threshold, the list is converted into a red-black tree (treeified). This protects both security (resistance to deliberately colliding keys) and the efficiency of data operations.

HashMap's performance depends on the quality of the keys' hash codes, so the contract between hashCode() and equals() is especially important: if two objects are equal according to equals(), their hashCode() values must be equal; if you override hashCode(), you must also override equals(); hashCode() must be consistent, returning the same value as long as the state used by equals() does not change; and equals() must be reflexive, symmetric, and transitive, among other properties.
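As an illustration of these rules, here is a minimal sketch of a key class that honors the contract. `GridPoint` and `GridPointDemo` are hypothetical names introduced only for this example; the fields are final so the hash code cannot change while the object is used as a key.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical key class used only to illustrate the equals/hashCode contract.
final class GridPoint {
    // Immutable fields: the hash code cannot change while the object is a map key.
    private final int x;
    private final int y;

    GridPoint(int x, int y) {
        this.x = x;
        this.y = y;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;                  // reflexive
        if (!(o instanceof GridPoint)) return false; // also handles null
        GridPoint other = (GridPoint) o;
        return x == other.x && y == other.y;         // same fields as hashCode()
    }

    @Override
    public int hashCode() {
        return Objects.hash(x, y);                   // equal points give equal hash codes
    }
}

class GridPointDemo {
    static String lookupWithEqualKey() {
        Map<GridPoint, String> map = new HashMap<>();
        map.put(new GridPoint(1, 2), "A");
        // A distinct but equal instance finds the same entry.
        return map.get(new GridPoint(1, 2));
    }

    public static void main(String[] args) {
        System.out.println(lookupWithEqualKey()); // prints A
    }
}
```

If hashCode() were not overridden, the second GridPoint would almost certainly hash to a different bucket and the lookup would return null.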

Differences between HashMap, Hashtable, and TreeMap

HashMap: an array-based, unsynchronized hash table that allows a null key and null values. It is the first choice for storing and looking up key-value pairs.

Hashtable: an array-based, synchronized hash table that allows neither null keys nor null values. Because synchronization costs performance, it is rarely used.

TreeMap: a Map based on a red-black tree that provides ordered (sorted) access. It can use less space than HashMap, but its lookup, insertion, and deletion operations take O(log n) time, unlike HashMap's expected constant time. It supports null values. A null key causes a NullPointerException when no Comparator is supplied (natural ordering); supplying a Comparator that handles null makes it possible to store a null key.
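These null-handling differences can be checked directly. This is a small sketch; `NullKeyDemo`, `acceptsNullKey`, and `acceptsNullValue` are hypothetical helpers, and `Comparator.nullsFirst` is just one example of a comparator that handles null.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;
import java.util.TreeMap;

class NullKeyDemo {
    // True if the map accepted a null key, false if it threw NullPointerException.
    static boolean acceptsNullKey(Map<String, String> map) {
        try {
            map.put(null, "v");
            return true;
        } catch (NullPointerException e) {
            return false;
        }
    }

    // True if the map accepted a null value, false if it threw NullPointerException.
    static boolean acceptsNullValue(Map<String, String> map) {
        try {
            map.put("k", null);
            return true;
        } catch (NullPointerException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(acceptsNullKey(new HashMap<>()));   // true
        System.out.println(acceptsNullKey(new Hashtable<>())); // false
        System.out.println(acceptsNullKey(new TreeMap<>()));   // false: natural ordering
        // A comparator that orders null first lets TreeMap store a null key.
        System.out.println(acceptsNullKey(
                new TreeMap<>(Comparator.nullsFirst(Comparator.<String>naturalOrder())))); // true
        System.out.println(acceptsNullValue(new Hashtable<>())); // false
    }
}
```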

HashMap, Hashtable, and TreeMap all store and manipulate data as key-value pairs. HashMap and TreeMap extend the AbstractMap class, while Hashtable extends the Dictionary class. All three implement the Map interface.

HashMap source code analysis

HashMap()

```java
public HashMap(int initialCapacity, float loadFactor) {
    // ...
    this.loadFactor = loadFactor;
    this.threshold = tableSizeFor(initialCapacity);
}
```

The constructor only sets a few initial values; the table itself is not allocated yet. Things become considerably more involved once data is inserted through the .put() method.
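The constructor stores `tableSizeFor(initialCapacity)` in `threshold` until the table is allocated (the `oldThr > 0` branch of `resize()` later uses it as the capacity). The following is a standalone re-implementation of the bit-smearing approach used by JDK 8's `tableSizeFor`, which rounds a requested capacity up to a power of two. `TableSize` is a hypothetical wrapper class, and later JDK versions may compute the same result differently.

```java
class TableSize {
    // Same value as HashMap.MAXIMUM_CAPACITY (1 << 30).
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Rounds cap up to the next power of two by copying the highest set bit
    // of (cap - 1) into every lower bit, then adding one.
    static int tableSizeFor(int cap) {
        int n = cap - 1;       // -1 so exact powers of two map to themselves
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;         // n is now 2^k - 1 (or -1 when cap == 0)
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 10, 16, 17}) {
            System.out.println(cap + " -> " + tableSizeFor(cap)); // 1, 1, 16, 16, 32
        }
    }
}
```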

HashMap.put()
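Before the source, it helps to see what `putVal()`'s `onlyIfAbsent` flag and returned `oldValue` mean from the caller's side: `put()` passes `onlyIfAbsent = false`, and in JDK 8 `putIfAbsent()` calls `putVal()` with `onlyIfAbsent = true`. A small sketch (`PutSemanticsDemo` is a hypothetical name):

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class PutSemanticsDemo {
    // Records the values returned by a sequence of put/putIfAbsent/get calls.
    static List<String> run() {
        Map<String, String> map = new HashMap<>();
        List<String> results = new ArrayList<>();
        results.add(map.put("k", "v1"));         // null: no previous mapping
        results.add(map.put("k", "v2"));         // "v1": overwritten, old value returned
        results.add(map.putIfAbsent("k", "v3")); // "v2": existing non-null value is kept
        results.add(map.get("k"));               // "v2"
        map.put("n", null);
        results.add(map.putIfAbsent("n", "x"));  // null: a null old value counts as absent
        results.add(map.get("n"));               // "x"
        return results;
    }

    public static void main(String[] args) {
        System.out.println(run()); // [null, v1, v2, v2, null, x]
    }
}
```

The last two calls correspond to the `!onlyIfAbsent || oldValue == null` check in `putVal()`.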

```java
public V put(K key, V value) {
    return putVal(hash(key), key, value, false, true);
}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,
               boolean evict) {
    // Local references to the table and the current node
    Node<K,V>[] tab; Node<K,V> p; int n, i;
    // If the table has not been allocated yet (or is empty), initialize it first
    if ((tab = table) == null || (n = tab.length) == 0)
        n = (tab = resize()).length;
    // If the target bucket is empty, place the new node there directly
    if ((p = tab[i = (n - 1) & hash]) == null)
        tab[i] = newNode(hash, key, value, null);
    // Otherwise the bucket is occupied: handle the hash collision
    else {
        Node<K,V> e; K k;
        // 1 - Plain node: the head node has the same key, so its value will be updated
        if (p.hash == hash &&
            ((k = p.key) == key || (key != null && key.equals(k))))
            e = p;
        // 2 - Red-black tree: p is a tree node, so insert into the tree
        else if (p instanceof TreeNode)
            e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);
        // 3 - Linked list: walk the list and insert
        else {
            for (int binCount = 0; ; ++binCount) {
                // Reached the tail: append the new node
                if ((e = p.next) == null) {
                    p.next = newNode(hash, key, value, null);
                    // If the list has reached the treeify threshold, convert it to a tree
                    if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st
                        treeifyBin(tab, hash);
                    break;
                }
                // Found an existing node with the same key: stop and update it below
                if (e.hash == hash &&
                    ((k = e.key) == key || (key != null && key.equals(k))))
                    break;
                p = e;
            }
        }
        // A mapping for this key already exists: overwrite it
        if (e != null) { // existing mapping for key
            V oldValue = e.value;
            // Overwrite unless onlyIfAbsent is set and the old value is non-null
            if (!onlyIfAbsent || oldValue == null)
                e.value = value;
            // Callback for LinkedHashMap post-processing
            afterNodeAccess(e);
            return oldValue;
        }
    }
    // Record the structural modification
    ++modCount;
    // Check whether the table needs to be expanded
    if (++size > threshold)
        resize();
    // Callback for LinkedHashMap post-processing
    afterNodeInsertion(evict);
    return null;
}
```

When the table is null, it is initialized by resize(). resize() has two jobs: creating and initializing the table, and expanding it when the current capacity is no longer sufficient:

```java
if (++size > threshold)
    resize();
```

The storage position of a key-value pair is determined as follows:

```java
if ((p = tab[i = (n - 1) & hash]) == null)
    // Empty bucket: store the new node in the array
    tab[i] = newNode(hash, key, value, null);
else {
    Node<K,V> e; K k;
    // The new node has the same hash and key as node p already in the table
    if (p.hash == hash &&
        ((k = p.key) == key || (key != null && key.equals(k))))
        e = p;
    // p is a red-black tree node: insert into the tree
    else if (p instanceof TreeNode)
        e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);
    else {
        for (int binCount = 0; ; ++binCount) {
            // p is the tail of the list: append a new node after it
            if ((e = p.next) == null) {
                p.next = newNode(hash, key, value, null);
                // Once the list holds more than 8 nodes, convert it to a red-black tree
                // (treeifyBin() resizes instead while the table has fewer than 64 buckets)
                if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st
                    treeifyBin(tab, hash);
                break;
            }
            if (e.hash == hash &&
                ((k = e.key) == key || (key != null && key.equals(k))))
                break;
            // Advance p to the next node
            p = e;
        }
    }
    // A mapping for this key already exists: overwrite it
    if (e != null) { // existing mapping for key
        V oldValue = e.value;
        // Overwrite unless onlyIfAbsent is set and the old value is non-null
        if (!onlyIfAbsent || oldValue == null)
            e.value = value;
        afterNodeAccess(e); // callback for LinkedHashMap post-processing
        return oldValue;
    }
}
```

Note the hash computation in .put(): it does not use the key's hashCode() directly. Instead, it XORs the high 16 bits of hashCode() into the low 16 bits. Because the bucket index is (n - 1) & hash, only the low bits are used while the table is small; without this step, keys whose hash codes differ mainly in the high bits would all land in the same bucket. Folding the high bits down effectively reduces collisions in such cases.
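A small standalone check of this effect, assuming a 16-bucket table: `spread()` repeats the expression used by `HashMap.hash()`, and `indexFor()` is a hypothetical helper for the `(n - 1) & hash` addressing step. `Integer.hashCode()` returns the value itself, so the three keys differ only above bit 15.

```java
class HashSpreadDemo {
    // Same expression as HashMap.hash(): fold the high 16 bits into the low 16 bits.
    static int spread(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    // Bucket index for a power-of-two table length n (equals Math.floorMod(hash, n)).
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        for (Integer key : new Integer[] {0x10000, 0x20000, 0x30000}) {
            System.out.println(key + ": raw index " + indexFor(key.hashCode(), n)
                    + ", spread index " + indexFor(spread(key), n));
        }
        // 65536: raw index 0, spread index 1
        // 131072: raw index 0, spread index 2
        // 196608: raw index 0, spread index 3
    }
}
```

Without spreading, all three keys would share bucket 0; with it they land in buckets 1, 2, and 3.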

```java
static final int hash(Object key) {
    int h;
    return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
}
```

HashMap.resize()

```java
final Node<K,V>[] resize() {
    // Keep a reference to the current table so its data can be migrated
    Node<K,V>[] oldTab = table;
    // Current capacity: 0 means the table must be initialized, > 0 means it must be expanded
    int oldCap = (oldTab == null) ? 0 : oldTab.length;
    // Current resize threshold (capacity * load factor)
    int oldThr = threshold;
    // New capacity and new threshold
    int newCap, newThr = 0;
    // oldCap > 0: the table already exists, so this is an expansion, not an initialization
    if (oldCap > 0) {
        // Already at the maximum length? MAXIMUM_CAPACITY = 2^30
        if (oldCap >= MAXIMUM_CAPACITY) {
            // Set the threshold to its maximum and stop growing
            threshold = Integer.MAX_VALUE;
            return oldTab;
        }
        else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&
                 oldCap >= DEFAULT_INITIAL_CAPACITY)
            // Double both the capacity and the threshold
            newThr = oldThr << 1; // double threshold
    }
    // Initialization rather than expansion, with an initial capacity stored in threshold
    else if (oldThr > 0)
        // A constructor taking an initial capacity was used; the no-arg
        // constructor does not initialize threshold
        newCap = oldThr;
    // Initialization with a zero threshold: use the defaults
    else {
        // The no-arg HashMap constructor was used
        newCap = DEFAULT_INITIAL_CAPACITY;
        // Default threshold: 0.75 * 16 = 12
        newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);
    }
    // Threshold not computed yet: newThr = newCap * loadFactor
    if (newThr == 0) {
        float ft = (float)newCap * loadFactor;
        newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?
                  (int)ft : Integer.MAX_VALUE);
    }
    // Store the new threshold
    threshold = newThr;
    // Allocate the new array and install it as the table
    @SuppressWarnings({"rawtypes","unchecked"})
    Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];
    table = newTab;
    // ---------------------------------------------------------------------------
    // The table is now initialized or expanded, but the old entries have not been
    // moved yet; the code below migrates them from the old array to the new one
    // ---------------------------------------------------------------------------
    // If there was an old table, migrate its data
    if (oldTab != null) {
        // Walk every bucket of the old table
        for (int j = 0; j < oldCap; ++j) {
            Node<K,V> e;
            // Take the head node of bucket j; skip empty buckets
            if ((e = oldTab[j]) != null) {
                oldTab[j] = null;
                // 1 - Single node: move it straight to its index in the new table
                if (e.next == null)
                    newTab[e.hash & (newCap - 1)] = e;
                // 2 - Red-black tree: split the tree between the new buckets
                else if (e instanceof TreeNode)
                    ((TreeNode<K,V>)e).split(this, newTab, j, oldCap);
                // 3 - Neither a single node nor a tree, so it is a linked list: split it
                //     into a "low" list (stays at j) and a "high" list (moves to j + oldCap)
                else { // preserve order
                    Node<K,V> loHead = null, loTail = null;
                    Node<K,V> hiHead = null, hiTail = null;
                    Node<K,V> next;
                    do {
                        next = e.next;
                        // The oldCap bit of the hash decides which list the node joins
                        if ((e.hash & oldCap) == 0) {
                            if (loTail == null)
                                loHead = e;
                            else
                                loTail.next = e;
                            loTail = e;
                        }
                        else {
                            if (hiTail == null)
                                hiHead = e;
                            else
                                hiTail.next = e;
                            hiTail = e;
                        }
                    } while ((e = next) != null);
                    if (loTail != null) {
                        loTail.next = null;
                        newTab[j] = loHead;
                    }
                    if (hiTail != null) {
                        hiTail.next = null;
                        newTab[j + oldCap] = hiHead;
                    }
                }
            }
        }
    }
    // Return the newly initialized or expanded table
    return newTab;
}
```

Capacity and load factor together determine the size of the array. Too much spare capacity wastes space, while filling the table too full hurts operation performance.

If you know roughly how many key-value pairs the HashMap will hold, consider setting an appropriate capacity in advance. A simple estimate follows from the condition that triggers expansion: from the code above, a resize happens when the number of elements exceeds the threshold, so to avoid one we need load factor * capacity >= number of elements.

Therefore, the preset capacity should be at least the expected number of elements / load factor, and a power of 2 (HashMap rounds a requested capacity up to a power of 2 anyway).
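A minimal sketch of that estimate, assuming the default load factor of 0.75. `PresizeDemo` and `capacityFor()` are hypothetical names, and `nextPowerOfTwo()` re-implements the power-of-two rounding that HashMap applies to a requested capacity.

```java
import java.util.HashMap;
import java.util.Map;

class PresizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smallest power of two >= cap (same bit-smearing idea as HashMap.tableSizeFor).
    static int nextPowerOfTwo(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Hypothetical helper: a capacity such that capacity * loadFactor >= expectedSize,
    // so inserting expectedSize entries never triggers a resize.
    static int capacityFor(int expectedSize, float loadFactor) {
        return nextPowerOfTwo((int) Math.ceil(expectedSize / (double) loadFactor));
    }

    public static void main(String[] args) {
        int expected = 100;
        int cap = capacityFor(expected, 0.75f);  // 100 / 0.75 = 133.3 -> 134 -> 256
        Map<String, Integer> map = new HashMap<>(cap, 0.75f);
        for (int i = 0; i < expected; i++) {
            map.put("key" + i, i);
        }
        System.out.println(cap + " " + map.size()); // 256 100
    }
}
```

For 100 expected entries this gives a capacity of 256 (threshold 192). On JDK 19 and later, `HashMap.newHashMap(int numMappings)` performs this kind of calculation for you.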

Note, however:

Unless there is a specific need, do not change the load factor casually, because the JDK's default fits common scenarios well. If you do need to adjust it, avoid values above 0.75, since they noticeably increase collisions and reduce HashMap's performance. If you use a smaller load factor, adjust the preset capacity according to the formula above as well; otherwise resizes may become more frequent, adding unnecessary overhead and hurting access performance.
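Returning to the data migration at the end of `resize()`: because capacities are powers of two, doubling the table adds exactly one bit (the `oldCap` bit) to the index mask, so each node in old bucket `j` either stays at `j` or moves to `j + oldCap`. This sketch checks that rule against a direct recomputation; `ResizeSplitDemo` and `newIndex()` are hypothetical names.

```java
class ResizeSplitDemo {
    // New index of an entry when the table grows from oldCap to 2 * oldCap, using the
    // same test as resize(): (hash & oldCap) == 0 keeps index j, otherwise j + oldCap.
    static int newIndex(int hash, int oldCap) {
        int j = hash & (oldCap - 1);                 // index in the old table
        return (hash & oldCap) == 0 ? j : j + oldCap;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        int[] hashes = {5, 21, 37, 53};              // all share old bucket 5
        for (int h : hashes) {
            int direct = h & (2 * oldCap - 1);       // index recomputed from scratch
            System.out.println(h + ": lo/hi rule -> " + newIndex(h, oldCap)
                    + ", recomputed -> " + direct);
        }
        // 5 -> 5, 21 -> 21, 37 -> 5, 53 -> 21 under both methods
    }
}
```

This is why resize() can split each list in one pass, without recomputing full indices, and still preserve the relative order of nodes.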

HashMap.get()
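First, note what `getNode()` returning null means for callers: `get()` returns null both for a missing key and for a key explicitly mapped to null, so `containsKey()` is needed to tell the two apart. A short sketch (`GetNullDemo` is a hypothetical name):

```java
import java.util.HashMap;
import java.util.Map;

class GetNullDemo {
    // A map in which one key is explicitly mapped to null.
    static Map<String, String> sample() {
        Map<String, String> map = new HashMap<>();
        map.put("present", null);
        return map;
    }

    public static void main(String[] args) {
        Map<String, String> map = sample();
        System.out.println(map.get("present"));         // null (key exists, value is null)
        System.out.println(map.get("absent"));          // null (no such key)
        System.out.println(map.containsKey("present")); // true
        System.out.println(map.containsKey("absent"));  // false
    }
}
```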

```java
public V get(Object key) {
    Node<K,V> e;
    return (e = getNode(hash(key), key)) == null ? null : e.value;
}

final Node<K,V> getNode(int hash, Object key) {
    Node<K,V>[] tab; Node<K,V> first, e; int n; K k;
    // The table must be non-null with length > 0, and the bucket at (n - 1) & hash
    // (a bitwise modulo) must have a non-null head node
    if ((tab = table) != null && (n = tab.length) > 0 &&
        (first = tab[(n - 1) & hash]) != null) {
        // If the head node's hash matches and its key is the same reference or equals()
        // the lookup key, the head node is the target: return it
        if (first.hash == hash && // always check first node
            ((k = first.key) == key || (key != null && key.equals(k))))
            return first;
        // Otherwise, if the bucket has more nodes, keep searching
        if ((e = first.next) != null) {
            // 1 - Tree: search the red-black tree (the result may be null)
            if (first instanceof TreeNode)
                return ((TreeNode<K,V>)first).getTreeNode(hash, key);
            // 2 - Linked list: walk the list (the result may be null)
            do {
                if (e.hash == hash &&
                    ((k = e.key) == key || (key != null && key.equals(k))))
                    return e;
            } while ((e = e.next) != null);
        }
    }
    return null;
}
```

Why does HashMap treeify its buckets?

To protect both security and the efficiency of data operations.

During insertion, objects whose hashes collide are placed in the same bucket, where they form a linked list. Lookup in a linked list is linear, which can seriously hurt access performance.

In practice, constructing colliding data is not very difficult. Malicious clients can send large volumes of such data to a server, driving up server-side CPU usage; this is known as a hash-collision denial-of-service attack. Similar attacks have occurred at leading Chinese internet companies.
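To show how little effort such data takes, here is a sketch built on a well-known property of `String.hashCode()`: "Aa" and "BB" both hash to 2112, and because the hash is a polynomial over the characters, any concatenation of such two-character blocks collides as well. `CollidingKeysDemo` and `collidingKeys()` are hypothetical names; the sketch only demonstrates the collision and does not measure performance.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CollidingKeysDemo {
    // Builds 2^blocks distinct strings that all share one String.hashCode() value.
    static List<String> collidingKeys(int blocks) {
        List<String> keys = new ArrayList<>();
        keys.add("");
        for (int i = 0; i < blocks; i++) {
            List<String> next = new ArrayList<>();
            for (String prefix : keys) {
                next.add(prefix + "Aa");   // "Aa".hashCode() == 2112
                next.add(prefix + "BB");   // "BB".hashCode() == 2112
            }
            keys = next;
        }
        return keys;
    }

    public static void main(String[] args) {
        List<String> keys = collidingKeys(4);   // 16 keys
        Map<String, Integer> map = new HashMap<>();
        for (String k : keys) {
            map.put(k, k.hashCode());
        }
        int h = keys.get(0).hashCode();
        boolean allSame = keys.stream().allMatch(k -> k.hashCode() == h);
        // All keys share one bucket. Once a bin holds more than 8 nodes, JDK 8+ treeifies
        // it, or resizes first while the table has fewer than 64 buckets.
        System.out.println(keys.size() + " keys, same hash: " + allSame
                + ", map size: " + map.size()); // 16 keys, same hash: true, map size: 16
    }
}
```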
