
How to Implement Motion Detection with Web Technology

- 小云云 (original)

- 2018-02-09 13:27:11

This article introduces how to implement motion detection with Web technology. Motion detection, also commonly called movement detection, is often used for unattended surveillance video and automatic alarms. Images captured by the camera at various frame rates are computed and compared by the CPU according to some algorithm. When the picture changes, for example when someone walks by or the lens is moved, the value obtained from the comparison exceeds a threshold, and the system can automatically respond accordingly.

Introduction to motion detection with Web technology

From the quotation above, "motion detection" requires the following elements:

- A computer with a camera
- An algorithm for deciding whether something has moved
- Processing to carry out after movement is detected

Note: All cases in this article are based on recent versions of Chrome/Firefox on PC/Mac. Some cases require a camera, and all screenshots are saved locally.

The other party doesn't want to talk to you and throws you a link:

- Comprehensive case
- Pixel difference

Video source

Without relying on Flash or Silverlight, we use the navigator.mediaDevices.getUserMedia() API from WebRTC (Web Real-Time Communications), which lets Web applications obtain the user's camera and microphone streams. The sample code is as follows:

<!-- Without autoplay, the video stays on the first frame -->
<video id="video" autoplay></video>

// See the relevant documentation for the meaning of each parameter.
const constraints = {
  audio: false,
  video: {
    width: 640,
    height: 480
  }
}

navigator.mediaDevices.getUserMedia(constraints)
  .then(stream => {
    // Show the video source in the <video> element
    video.srcObject = stream
  })
  .catch(err => {
    console.log(err)
  })

As for compatibility, Safari supports WebRTC starting from version 11. See caniuse for details.

Pixel processing

After getting the video source, we have the material needed to determine whether an object is moving. No advanced recognition algorithm is used here; we simply use the pixel difference between two consecutive screenshots to decide whether an object has moved (strictly speaking, whether the picture has changed).

Screenshots

Sample code for capturing a screenshot of the video source:

const video = document.getElementById('video')
const canvas = document.createElement('canvas')
const ctx = canvas.getContext('2d')

canvas.width = 640
canvas.height = 480

// Capture one frame of the video
function capture () {
  ctx.drawImage(video, 0, 0, canvas.width, canvas.height)
  // ...other operations
}

Get the difference between screenshots

As for the pixel difference between two images, one solution is the "pixel detection" collision algorithm described in the Alkaline Lab blog post "Wait a minute, let me touch!" - Common 2D Collision Detection. That algorithm decides whether there is a collision by traversing two off-screen canvases and checking whether the pixels at the same position both have an alpha value greater than 0. Here, of course, we would change the check to "whether the pixels at the same position are the same (or differ by less than a threshold)" to decide whether movement has occurred.
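A minimal sketch of that direct comparison, assuming both screenshots have already been read into RGBA arrays of equal length (for example via getImageData). The function name `countChangedPixels` and the `tolerance` parameter are illustrative and do not come from the original post.

```javascript
// Count pixels whose color differs by more than `tolerance` between two
// RGBA arrays of equal length (4 bytes per pixel: R, G, B, A).
function countChangedPixels (prev, curr, tolerance = 0) {
  if (prev.length !== curr.length) {
    throw new Error('Both images must have the same dimensions')
  }
  let changed = 0
  for (let i = 0; i < prev.length; i += 4) {
    // Average absolute difference across the three color channels
    const diff = (
      Math.abs(prev[i] - curr[i]) +
      Math.abs(prev[i + 1] - curr[i + 1]) +
      Math.abs(prev[i + 2] - curr[i + 2])
    ) / 3
    if (diff > tolerance) changed++
  }
  return changed
}

// Two 2-pixel "images": only the second pixel differs.
const before = new Uint8ClampedArray([10, 10, 10, 255, 0, 0, 0, 255])
const after = new Uint8ClampedArray([10, 10, 10, 255, 90, 90, 90, 255])
console.log(countChangedPixels(before, after, 30)) // 1
```

The tolerance plays the role of the "difference less than a threshold" condition: small variations such as sensor noise are not counted as change.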

But the above method is somewhat cumbersome and inefficient. Here we instead set ctx.globalCompositeOperation = 'difference' to specify how new elements drawn on the canvas (the second screenshot) are composited with what is already there (the first screenshot), which yields the difference between the two screenshots.

Demo link >>

Sample code:

function diffTwoImage () {
  // Set how newly drawn elements are composited
  ctx.globalCompositeOperation = 'difference'

  // Clear the canvas
  ctx.clearRect(0, 0, canvas.width, canvas.height)

  // Assume the two images have the same dimensions
  ctx.drawImage(firstImg, 0, 0)
  ctx.drawImage(secondImg, 0, 0)
}

The difference between the two pictures

After trying the case above, does it remind you of playing the QQ game "Let's Find Difference" back in the day? This case may also be useful in the following two situations:

- When you can't tell what changed between two versions of a design draft the designer gave you
- When you want to see how two browsers render the same web page differently

When does it count as an "action"

From the "difference between two images" case above we learn that black means the pixel at that position has not changed, and the brighter a pixel is, the greater the "movement" at that point. Therefore, when bright pixels appear after compositing two consecutive screenshots, an "action" has occurred. To make the program less "sensitive", we can set a threshold: an "action" is considered to have occurred only when the number of bright pixels exceeds the threshold. We can also discard pixels that are "not bright enough" to reduce the influence of the external environment (such as lighting) as much as possible.
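To see why black means "no change", it helps to look at what the 'difference' mode computes. For fully opaque images, each color channel of the result is the absolute difference of the two inputs. The sketch below reproduces that on plain RGBA arrays; `differenceBlend` is a hypothetical helper name, and the code assumes every pixel is opaque (alpha 255).

```javascript
// Per-channel absolute difference of two opaque RGBA arrays,
// which is what the 'difference' blend mode yields for opaque images.
function differenceBlend (first, second) {
  const out = new Uint8ClampedArray(first.length)
  for (let i = 0; i < first.length; i += 4) {
    out[i] = Math.abs(first[i] - second[i])             // red
    out[i + 1] = Math.abs(first[i + 1] - second[i + 1]) // green
    out[i + 2] = Math.abs(first[i + 2] - second[i + 2]) // blue
    out[i + 3] = 255                                    // assumed opaque
  }
  return out
}

// First pixel is identical in both frames, second pixel has changed.
const frameA = new Uint8ClampedArray([200, 100, 50, 255, 30, 30, 30, 255])
const frameB = new Uint8ClampedArray([200, 100, 50, 255, 230, 30, 0, 255])
console.log(Array.from(differenceBlend(frameA, frameB)))
// [0, 0, 0, 255, 200, 0, 30, 255]
```

The unchanged pixel comes out as pure black, while the changed pixel keeps a brightness proportional to how much each channel moved.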

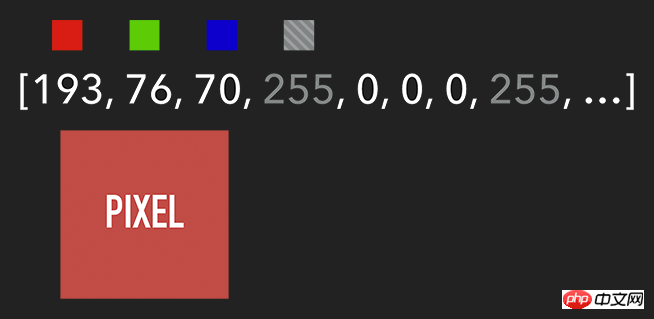

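To read the pixel information of a Canvas, use ctx.getImageData(sx, sy, sw, sh). This API returns a pixel object for the canvas region you specify. The object contains data, width, and height, where data is a one-dimensional array holding the RGBA information of every pixel, as shown in the figure below.

A one-dimensional array containing RGBA information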
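As a small illustration of that layout, the pixel at column `x`, row `y` starts at index `(y * width + x) * 4`. The helper `getPixel` below is hypothetical; it accepts any object shaped like an ImageData (`data`, `width`, `height`), so it also works on a plain object when experimenting outside the browser.

```javascript
// Read the RGBA values of the pixel at (x, y) from an ImageData-like object.
function getPixel (imageData, x, y) {
  const i = (y * imageData.width + x) * 4
  const d = imageData.data
  return { r: d[i], g: d[i + 1], b: d[i + 2], a: d[i + 3] }
}

// A 2x2 image laid out row by row: red, green / blue, white
const sample = {
  width: 2,
  height: 2,
  data: new Uint8ClampedArray([
    255, 0, 0, 255, 0, 255, 0, 255,
    0, 0, 255, 255, 255, 255, 255, 255
  ])
}
console.log(getPixel(sample, 0, 1)) // { r: 0, g: 0, b: 255, a: 255 }
```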

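Once we have the pixels of a given region, we can process each pixel (for example, to apply various filter effects). After processing, ctx.putImageData() renders the result onto the specified Canvas.

Extension: Canvas currently offers no "history" feature. To implement a "go back one step" operation, you can save the previous state with getImageData and restore it with putImageData when needed.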
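A minimal sketch of such an undo stack, assuming `ctx` is a 2D context (or anything exposing getImageData and putImageData). `createHistory`, `save`, and `undo` are illustrative names, not part of the Canvas API.

```javascript
// Keep snapshots of the canvas so the previous state can be restored.
function createHistory (ctx, width, height) {
  const snapshots = []
  return {
    // Call before each drawing step to remember the current pixels
    save () {
      snapshots.push(ctx.getImageData(0, 0, width, height))
    },
    // Restore the most recent snapshot; returns false if there is none
    undo () {
      const last = snapshots.pop()
      if (!last) return false
      ctx.putImageData(last, 0, 0)
      return true
    }
  }
}

// Usage in a browser (assuming `canvas` and `ctx` already exist):
// const undoStack = createHistory(ctx, canvas.width, canvas.height)
// undoStack.save()
// ctx.fillRect(0, 0, 50, 50)
// undoStack.undo() // the rectangle disappears
```

Each snapshot holds a full copy of the pixels, so a long history on a large canvas can use a lot of memory; capping the stack length is a reasonable precaution.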

Sample code:

let imageScore = 0

const rgba = imageData.data

for (let i = 0; i < rgba.length; i += 4) {

const r = rgba[i] / 3

const g = rgba[i + 1] / 3

const b = rgba[i + 2] / 3

const pixelScore = r + g + b

// 如果该像素足够明亮

if (pixelScore >= PIXEL_SCORE_THRESHOLD) {

imageScore++

}

}

// 如果明亮的像素数量满足一定条件

if (imageScore >= IMAGE_SCORE_THRESHOLD) {

// 产生了移动

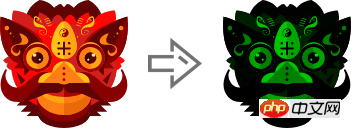

}在上述案例中,你也许会注意到画面是『绿色』的。其实,我们只需将每个像素的红和蓝设置为 0,即将 RGBA 的 r = 0; b = 0 即可。这样就会像电影的某些镜头一样,增加了科技感和神秘感。

Demo link >>

const rgba = imageData.data

for (let i = 0; i < rgba.length; i += 4) {
  rgba[i] = 0 // red
  rgba[i + 2] = 0 // blue
}

ctx.putImageData(imageData, 0, 0)

Setting R and B of RGBA to 0

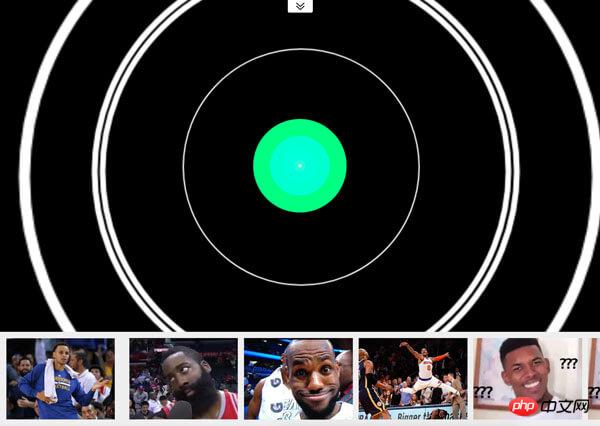

Tracking the "moving object"

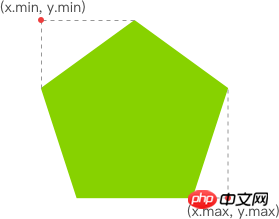

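Once we have the bright pixels, we find the minimum x coordinate and the minimum y coordinate among them, which give the top-left corner of the tracking rectangle. Likewise, the maximum x coordinate and the maximum y coordinate give its bottom-right corner. With these we can draw a rectangle that encloses all the bright pixels, which produces the effect of tracking a moving object.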


Demo link >>

Sample code:

function processDiff (imageData) {
  const rgba = imageData.data
  let score = 0
  let pixelScore = 0
  let motionBox // stays undefined until the first bright pixel is found

  // Traverse every pixel of the canvas to find the bright ones
  for (let i = 0; i < rgba.length; i += 4) {
    pixelScore = (rgba[i] + rgba[i + 1] + rgba[i + 2]) / 3

    // If the pixel is bright enough
    if (pixelScore >= 80) {
      score++
      const coord = calcCoord(i)
      motionBox = calcMotionBox(motionBox, coord.x, coord.y)
    }
  }

  return {
    score,
    motionBox
  }
}

// Compute the top-left and bottom-right coordinates
function calcMotionBox (curMotionBox, x, y) {
  const motionBox = curMotionBox || {
    x: { min: x, max: x },
    y: { min: y, max: y }
  }

  motionBox.x.min = Math.min(motionBox.x.min, x)
  motionBox.x.max = Math.max(motionBox.x.max, x)
  motionBox.y.min = Math.min(motionBox.y.min, y)
  motionBox.y.max = Math.max(motionBox.y.max, y)

  return motionBox
}

// imageData.data is a one-dimensional array holding the rgba values of every pixel.
// This function converts any index of that array into a 2D (x, y) coordinate.
// diffWidth is the width of the canvas being processed.
function calcCoord (i) {
  return {
    x: (i / 4) % diffWidth,
    y: Math.floor((i / 4) / diffWidth)
  }
}

After obtaining the top-left and bottom-right coordinates of the tracking rectangle, draw the rectangle with the ctx.strokeRect(x, y, width, height) API.

ctx.lineWidth = 6
ctx.strokeRect(
  diff.motionBox.x.min + 0.5,
  diff.motionBox.y.min + 0.5,
  diff.motionBox.x.max - diff.motionBox.x.min,
  diff.motionBox.y.max - diff.motionBox.y.min
)

This is the ideal result; for the actual result, open the demo link.

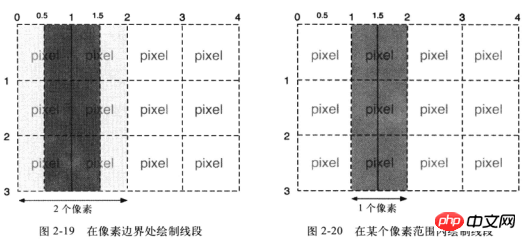

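Extension: why do x and y have 0.5 added in the rectangle-drawing code above? A picture is worth a thousand words. In short, canvas strokes are centered on the path, so a line with an odd width drawn on integer coordinates straddles two pixel rows and renders blurred; a half-pixel offset aligns it with the pixel grid.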

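Performance

Reduce the size

As mentioned in the previous section, we need to process every pixel of the Canvas. If the Canvas is 640 wide and 480 high, we have to traverse 640 * 480 = 307200 pixels. As long as the detection quality remains acceptable, we can shrink the Canvas used for pixel processing, for example by a factor of 10 in each dimension. The number of pixels to traverse then drops by a factor of 100, which improves performance.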

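Demo link >>

Sample code:

// Shown to the user (the element id is assumed here)
const motionCanvas = document.getElementById('motion')
// Offscreen canvas that processes the data behind the scenes
const backgroundCanvas = document.createElement('canvas')

motionCanvas.width = 640
motionCanvas.height = 480

backgroundCanvas.width = 64
backgroundCanvas.height = 48

Size reduced by a factor of 10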
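One consequence of processing a smaller canvas is that the tracking rectangle is computed in the small canvas's coordinates. The following sketch maps it back to the display canvas. It assumes the motionBox shape used earlier (`{ x: { min, max }, y: { min, max } }`) and a uniform scale factor; `scaleMotionBox` is a hypothetical helper, not something from the original demo.

```javascript
// Scale a motion box from processing-canvas coordinates
// up to display-canvas coordinates.
function scaleMotionBox (box, scale) {
  return {
    x: { min: box.x.min * scale, max: box.x.max * scale },
    y: { min: box.y.min * scale, max: box.y.max * scale }
  }
}

const SCALE = 640 / 64 // display width / processing width = 10
const smallBox = { x: { min: 3, max: 20 }, y: { min: 5, max: 12 } }
console.log(scaleMotionBox(smallBox, SCALE))
// { x: { min: 30, max: 200 }, y: { min: 50, max: 120 } }
```

The scaled box can then be passed to strokeRect on the canvas shown to the user.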

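Timer

We all know that a game needs to run at 60 frames per second to guarantee a decent experience. For our case, however, frame rate is not the top priority. It is enough to compare the current frame with the previous one every 100 milliseconds (the exact value depends on the actual situation).

In addition, because our movements are generally continuous, we can process just the frame with the largest movement (that is, the highest "score") in that continuous action, or its last frame (for example, saving it locally or sharing it to WeChat Moments).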
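One way to pick the frame with the largest movement from a continuous action is sketched below, under stated assumptions: a score is produced on every tick (for example every 100 ms), a score at or above `threshold` counts as motion, and the action ends at the first tick that falls below it. `createMotionTracker` and its fields are hypothetical names.

```javascript
// Track one continuous action and report its highest-scoring frame.
function createMotionTracker (threshold) {
  let best = null // highest-scoring frame of the action in progress
  return {
    // Feed one tick. Returns the best frame when an action has just ended,
    // otherwise null.
    update (score, frame) {
      if (score >= threshold) {
        if (best === null || score > best.score) best = { score, frame }
        return null
      }
      const finished = best
      best = null
      return finished
    }
  }
}

const tracker = createMotionTracker(10)
const scores = [2, 15, 40, 22, 3, 1]
scores.forEach((score, tick) => {
  const result = tracker.update(score, `frame-${tick}`)
  if (result) console.log(result) // { score: 40, frame: 'frame-2' }
})
```

In a browser, `update` would typically be called from a setInterval callback that captures a frame and computes its score, with the frame argument being the captured image.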

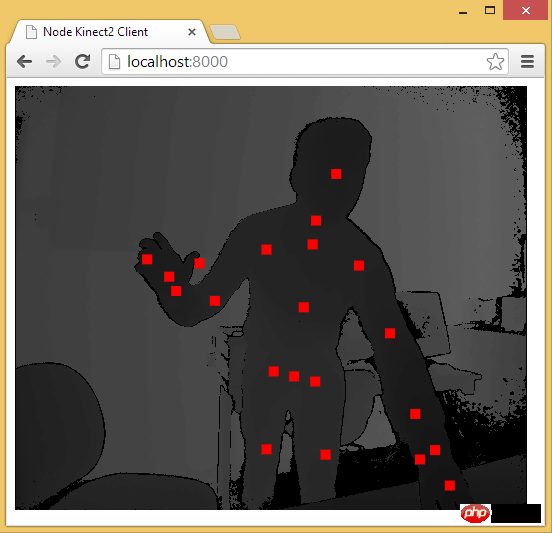

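Further reading

At this point, the simple "motion detection" effect implemented with Web technology has been basically covered. Because of limitations in algorithms, devices, and other factors, this effect can only judge whether an object has "moved" on the basis of a 2D picture. Motion-sensing games on devices such as Microsoft's Xbox, Sony's PS, and Nintendo's Wii, by contrast, rely on hardware. Take Microsoft's Kinect as an example: it gives developers powerful capabilities such as tracking up to six full skeletons and 25 joints per person. With these detailed body parameters, we can implement all kinds of touch-free "gesture operations", such as drawing circles to curse someone.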

Below are several libraries for using Kinect from the Web:

- DepthJS: provides data access in the form of a browser plugin.
- Node-Kinect2: builds the server side on Node.js, provides fairly complete data, and has many examples.
- ZigFu: supports H5, U3D, and Flash, and has a relatively complete API.
- Kinect-HTML5: uses C# to build the server and provides color data, depth data, and skeleton data.

Get skeleton data through Node-Kinect2
