Home >Java >javaTutorial >Algorithm explanation from array to HashMap

Algorithm explanation from array to HashMap

- 巴扎黑Original

- 2017-04-30 10:11:052225browse

1. What is an array?

I forgot which book I have seen a sentence like "All data structures are the evolution of arrays". It actually makes sense when you think about it, because the computer's memory is actually a linear storage space.

Java sample code: int[] array = new int[5]

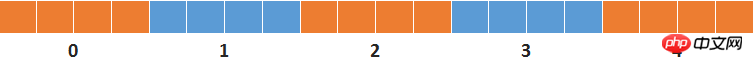

Ignoring the object header information and array length information, the JVM will allocate 20 bytes of memory space in the heap during execution, which looks like this:

Such a data structure can easily access data through array subscripts, but it requires traversing the array when searching, and the average time complexity is O(n/2).

When the amount of data is large or search operations are frequent, such traversal operations are almost unacceptable. So, how can we find data quickly in less time?

2. Binary search

If the elements in the array are sorted, then the natural way is to use binary search.

For example, there is an integer array with a length of 1000. The integers in the array are arranged from small to large. If I want to know whether 6000 is in this array. Then I can first check whether the number in array[500] is 6000. If the number in array[500] is smaller than 6000, then check the number in array[750]...and so on. Then after up to 10 times, the result can be determined. .

The time complexity of this algorithm is O(logN).

However, in most cases, array elements are unordered, and the time complexity O(NlogN) required for sorting usually far exceeds the time required for traversal.

So, the problem is back to its original point. How to quickly find data in disordered data?

3. Ignorant thinking

I still remember watching "Programming Pearls" when I first learned programming. There was a description in it: In the 1970s, Mike Lesk built a phone number search function for the telephone company. For example, enter the number key 5375*6* corresponding to LESK*M*. The correct phone number or optional list can be returned with a false match rate of only 0.2%.

How can it be done?

At that time, I didn’t understand data structures and algorithms at all. It’s still very interesting to restore the process of wild thinking at that time.

㈠ Addition

Adding all the numbers (the * key is 11), the sum of 5375*6* is 48. The sum of most inputs does not exceed 100, which means that tens of thousands of phone numbers will be clustered in the first 100 positions of the array, so it is not feasible.

(ii) Multiplication

When all the numbers are multiplied together, the product is 381150. This seems feasible, but there is a problem: the products of lesk, lsek, slke... are the same, and each number key also corresponds to 3 letters, which means that the probability of repetition is very high.

(iii) Improved multiplication

① Strings with the same letters but different alphabetical orders can avoid conflicts in this way: each number is first multiplied by the serial number, and then multiplied by other values.

②Each numeric key corresponds to 3 English letters. If the user does not enter the sequence number of the letter in the numeric key, there is no way to further calculate accurately. The only direction that can be considered is to make probability statistics based on the given word list, so it will not be considered.

(IV) Location conflict

Even if improved multiplication is used, the products calculated by different name letters may still be repeated. So what should be done when a conflict occurs?

Save the sequence to the next empty location? Come to think of it this doesn't work. If the next blank position happens to be the product of another set of letters, a secondary conflict occurs.

Fortunately, there are prime numbers.

Prime numbers can only be divisible by 1 and itself, so any product obtained by the above multiplication cannot be a prime number, so the phone number is not stored in the prime number position.

Therefore, starting from the current product, search for the next prime number to the right. If the prime number position is still used, then continue to search for the next nearest prime number...

At this point, the problem seems to be solved.

The user inputs a string of numbers, the product is calculated according to the formula, and the phone number at the subscript position is returned. If it is incorrect, the subsequent prime numbers are searched sequentially until the array element subscripted by a certain prime number is empty, and finally all found phone numbers are returned. In most cases, the phone number can be found in O(1) time complexity.

(5) The array is too large

The only problem is that the array created according to the above idea is too large. A name has 10 letters. If the number corresponding to each letter is 9, the final product obtained is approximately 9 raised to the 17th power. This means building an array of size 9^17, which is completely unfeasible.

㈥Later

Even if the factor of the array being too large is not taken into account, with my level of beginner programming at that time, I was unable to write such a program.

The reason why I restored this thinking process is that I think the process of independent thinking is very interesting and valuable. Think about it, in fact, the existing algorithms were finally derived by the experts who sought solutions step by step in actual projects.

Therefore, when you encounter some difficult problems in engineering, as long as you are willing to think about decomposing the problem and seek solutions to every small problem, then many so-called problems can be solved.

Later, after reading "Data Structure and Algorithm Analysis. Java Language Description", I realized that my idea was actually Open Addressing.

JDK's HashMap uses separate chaining. Difference: The concept of buckets is added to save conflicting data; remainder operation is performed to reduce the array size.

So, let’s talk about HashMap in Java.

4. Detailed explanation of HashMap structure and algorithm

The essence of HashMap is still an array, and it is a multi-dimensional array of variable length, similar to the structure below:

㈠ Brief introduction

The array in HashMap stores the head node of the linked list.

save data:

Calculate the hashCode of the key, and then perform a remainder operation with the array length to obtain the array subscript (linked list head node).

If the position is empty, generate a new linked list node and save it to the array.

If the position is not empty, each node in the linked list is compared cyclically: there is already a node with the same key, and the old node is overwritten; if there is no node with the same key, the new node is used as the tail node of the linked list (note: see JDK1.8 Source code, new nodes are always added to the end of the linked list, instead of being the head node of the linked list like JDK1.6).

Find data:

Calculate the hashCode of the key, and then perform a remainder operation with the array length to obtain the array subscript (linked list head node). If the linked list is not empty, compare the first node first. If the first node key is the same (hashCode is the same and equals is true), the value of the first node is directly returned; if the first node key is different, the other nodes in the linked list are traversed sequentially and compared, and the value with the same key is returned. The value of the node (null is returned if no node with the same key is found).

The purpose of HashMap introducing linked lists is to solve the duplicate conflict problem discussed in the previous section.

Precautions:

If the hashcode of all keys is the same, assuming they are all 0, then 0%4 = 0, all elements will be saved to linked list 0, and saving and searching for each data requires traversing linked list 0. Then, the HashMap at this time has essentially degenerated into a linked list, and the time complexity has also increased from the designed O(1) to O(n/2).

In order to keep the time complexity of HashMap search and saving at O(1) as much as possible, the elements need to be evenly distributed in each linked list, that is, each linked list only saves one element.

So what are the influencing factors?

First, the hashcode of the key cannot be repeated. If it is repeated, there will definitely be a linked list to save at least 2 elements;

The second is the hash function design. If it is just a simple remainder, then the remainder will be repeated a lot;

The third is the capacity of the array. If 100 elements are to be distributed in an array with a length of 10, no matter how you calculate it, there will be a linked list to save multiple elements. The best case is that each linked list saves 10;

The algorithm design of these three factors is introduced in detail below.

(ii) Hashcode generation

This is the hashCode generation code of the String class.

public int hashCode() {

int h = hash;

if (h == 0 && value.length > 0) {

char val[] = value;

for (int i = 0; i < value.length; i++) {

h = 31 * h + val[i];

}

hash = h;

}

return h;

}The value of the String class is char[], and char can be converted into UTF-8 encoding. For example, the UTF-8 encodings of 'a', 'b', and 'c' are 97, 98, and 99 respectively; "abc" converted into the formula according to the above code is:

h = 31 * 0 + 97 = 97;

h = 31 * 97 + 98 = 3105;

h = 31 * 3105 + 99 = 96354;

31 is used as the multiplier factor because it is a relatively suitable size of prime number: if the value is too small, the product will be too small when the number of items involved in calculating the hashcode is small; if it is an even number, it is equivalent to a left shift. When multiplication Overflow results in regular bit information loss. Both of these will lead to increased repetition rates.

If 32 is used as the multiplier factor (equivalent to << 5), taking the string "abcabcabcabcabc" as an example, the calculation results of each step of its hashcode are as follows:

As shown in the figure above, every 3 letters at the end of the string will generate a repeated hashcode. This is not a coincidence. Even if you change to other English letters, it is easy to repeat, and using prime numbers will greatly reduce the possibility of repetition. Those who are interested can refer to the picture above to perform the left shift operation, and you will find that this is not accidental.

《Effective Java》一书中详细描述了hashcode的生成规则和注意事项,有兴趣的可以去看看。

从源代码的hashCode()方法可知,String类对象保存了已经计算好的hashCode,如果已经调用过hashCode()方法,那么第二次调用时不会再重新生成,而是直接返回已经计算好的hashCode。

String对象总是会存放到String类私有维护的常量池中,不显式使用new关键字时,如果常量池中已经有value相同的对象,那么总是会返回已有对象的引用。因此,很多情况下生成hashCode这种比较昂贵的操作实际上并不需要执行。

㈢ 哈希函数设计

现在,已经得到了重复率很低的hashCode,还有什么美中不足的地方吗?

⑴ 扰动函数

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}

下图还是以字符串“abcabcabcabcabc”为例,使用上面方法得到的运算过程和结果。

为什么要先无符号右位移16位,然后再执行异或运算?看看下图的二进制的与运算,你就会明白。

你会发现只要二进制数后4位不变,前面的二进制位无论如何变化都会出现相同的结果。为了防止出现这种高位变化而低位不变导致的运算结果相同的情况,因此需要将高位的变化也加入进来,而将整数的二进制位上半部与下半部进行异或操作就可以得到这个效果。

为什么要使用与运算?

因为哈希函数的计算公式就是hashCode % tableSize,当tableSize是2的n次方(n≥1)的时候,等价于hashCode & (tableSize - 1)。

扰动函数优化前:1954974080 % 16 = 1954974080 & (16 - 1) = 0

扰动函数优化后:1955003654 % 16 = 1955003654 & (16 - 1) = 6

这就是为什么需要增加扰动函数的原因。

⑵ 源代码详解

代码解释之前需要补充说明一下,jdk1.8引入了红黑树来解决大量冲突时的查找效率,所以当一个链表中的数据大到一定规模的时候,链表会转换成红黑树。因此在jdk1.8中,HashMap的查找和保存数据的最大时间复杂度实际上就是红黑树的时间复杂度O(logN)。

以下是HashMap中的保存数据的方法源代码,相信经过以上的描述,已经非常容易看懂这一段代码。

final V putVal(int hash, K key, V value, boolean onlyIfAbsent, boolean evict) {

Node<K,V>[] tab; //HashMap数组

Node<K,V> p; //初始化需要保存的数据

int n; //数组容量

int i; //数组下标

/* 如果数组为空或0,初始化容量为16 */

if ((tab = table) == null || (n = tab.length) == 0){

n = (tab = resize()).length;

}

/* 使用哈希函数获取数组位置(如果为空,保存新节点到数组) */

if ((p = tab[i = (n - 1) & hash]) == null){

tab[i] = newNode(hash, key, value, null);

}

/* 如果数组位置已经有值,则使用下列方式保存数据 */

else {

Node<K,V> e; //临时节点保存新值

K k; //临时变量用于比较key

//如果头节点与新节点的key的hash值相同 且 key的值相等,e赋值为旧节点

if (p.hash == hash && ((k = p.key) == key || (key != null && key.equals(k)))){

e = p;

}

//如果头节点是一个红黑树节点,那么e将保存为树节点

else if (p instanceof TreeNode){

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

}

//如果头节点与新节点不同,且头节点不是红黑树节点,循环比对链表的所有节点

else {

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

//如果直到链表尾未找到相同key的节点,将新结点作为最后一个节点加入到链表

p.next = newNode(hash, key, value, null);

//如果链表节点数大于等于8-1,转换成红黑树;转换成红黑树的最小节点数是8

if (binCount >= TREEIFY_THRESHOLD - 1){

treeifyBin(tab, hash);

}

break;

}

//如果循环过程中发现新旧key的值相同,跳转:是否覆盖旧值

if (e.hash == hash && ((k = e.key) == key || (key != null && key.equals(k)))){

break;

}

p = e;

}

}

//是否覆盖旧值

if (e != null) {

V oldValue = e.value;

//如果新值不为空且(允许修改旧值 或 旧值为空),覆盖旧节点的值

if (!onlyIfAbsent || oldValue == null){

e.value = value;

}

afterNodeAccess(e); //回调函数,这里是空函数,但在linkedHashMap中实现了此方法

return oldValue; //返回旧值

}

}

//用于比较判断是否在遍历集合过程中有其它线程修改集合,详情请网上搜索fail-fast快速失败机制

++modCount;

//当key数量大于允许容量时进行扩容。允许容量在HashMap中是数组长度 * 装填因子(默认0.75)

if (++size > threshold){

resize();

}

//回调函数,这里是空函数,但在linkedHashMap中实现了此方法

afterNodeInsertion(evict);

return null;

}

⑶ 简化后的伪代码

putval(key, value){

index = key.hashcode % tablesize;

if(null == table[index]){

table[index] = new node(key, value);

}else{

firstNode = table[index];

nextNode = firstNode.next;

while(nextNode.hasNextNode()){

//如果找到相同key的旧节点,覆盖旧节点

if(key == nextNode.key){

nextNode = new node(key, value);

break;

}

//如果到队列末尾依然没有找到相同key的旧节点,将新结点加入到最后一个节点的末尾

if(nextNode.next == null){

nextNode.next = new node(key, value);

break;

}

nextNode = nextNode.next;

}

}

}

⑷ 数组大小设计

代码注释中已经稍有提及,这里再分别展开讨论。

①数组容量选择

HashMap的初始容量是 1 << 4,也就是16。以后每次扩容都是size << 1,也就是扩容成原来容量的2倍。如此设计是因为 2^n-1(n≥1)的二进制表示总是为重复的1,方便进行求余运算。

《数据结构与算法分析.Java语言描述》一书中的建议是容量最好是质数,有助于降低冲突,但没有给出证明或实验数据。

质数虽然是神奇的数字,但个人感觉在这里并没有特别的用处。

根据公式index = hashCode % size可知,无论size是质数还是非质数,index的值区间都是0至(size-1)之间,似乎真正起决定作用的是hashCode的随机性要好。

这里先不下结论,待以后再写一个随机函数比较下质数和非质数重复率。

②装填因子

装填因子默认是0.75,也就是说如果数组容量为16,那么当key的数量大于12时,HashMap会进行扩容。

装填因子设计成0.75的目的是为了平衡时间和空间性能。过小会导致数组太过于稀疏,空间利用率不高;过大会导致冲突增大,时间复杂度会上升。

㈣ 关于其它

红黑树是在JDK 1.8中引入的,想用简单的语言来讲清楚红黑树的数据结构、增删改查操作及时间复杂度分析,这是一个复杂且艰难的任务,更适合单独来描述,因此留待以后吧。

五 最小完美哈希函数(Minimal Perfect Hash Function, MPHF)

Jdk中的HashMap解决了任意数据集的时间复杂度问题,所设计的哈希函数在未知数据集的情况下有良好的表现。

但如果有一个已知数据集(如Java关键字集合),如何设计一个哈希函数才能同时满足以下两方面的要求:

⑴ 容器容量与数据集合的大小完全一致;

⑵ There is no conflict.

In other words, when a certain data set is given, if a hash function allows each node of the container to have one and only one data element, such a hash function is called a minimum perfect hash function.

Recently, I was studying the principles of compilation, and mentioned how to solve the O(1) time complexity search problem of keyword sets, and mentioned that the minimum perfect hash function can be used. When I see a term like this, I immediately feel very good and noble.

The above is the detailed content of Algorithm explanation from array to HashMap. For more information, please follow other related articles on the PHP Chinese website!