
Photoshop oil painting effect filter

- 高洛峰Original

- 2017-02-18 13:33:16

I developed this filter with the Photoshop SDK. I do not know who first proposed the algorithm. My main reference was the source code of FilterExplorer (VC 6), specifically Filters.cpp by Jason Waltman (18 April 2001). The oil painting filter in PhotoSprite (Version 3.0, 2006, written by Lian Jun), another Chinese program written in C#, appears to derive from the same code (or from code with a common origin). I studied the algorithm mainly through the C++ code of FilterExplorer, and the conceptual description in this article reflects my own understanding and interpretation. The original implementation is not efficient, and I have improved it substantially: the time complexity with respect to the template size drops from O(n^2) to O(n), and although the complexity with respect to the number of pixels stays linear, its constant factor is much smaller. On the same test sample (a 1920 * 1200 pixel RGB image) with the same parameters, the processing time fell from about 35 seconds to about 3 seconds, a speedup of roughly 10 to 12 times (a rough estimate).

This article presents the Photoshop oil painting effect filter (OilPaint). I did not propose the algorithm; see the references in this article. The same filter can be seen in PhotoSprite, a Chinese program developed in C#. I was asked to help develop this filter back in 2010, and I have now spent a few days developing it and am making it available for free.

(1) Conceptual description of the algorithm of the oil painting filter

This is my understanding after reading the FilterExplorer source code. The filter has two parameters. The first is the template radius (radius). The template size is (radius * 2 + 1) * (radius * 2 + 1): a rectangular area centered on the current pixel and extended outward by radius pixels, which serves as the search range. For now we call it the "template". (Strictly speaking, this algorithm is not a standard template method such as Gaussian blur or a custom filter; the processing merely looks similar, which is what makes the optimizations introduced later possible.)

The second parameter is smoothness, which is actually the number of grayscale buckets. Suppose the grayscale/brightness range of a pixel (0 ~ 255) is divided evenly into smoothness intervals. We call each interval a bucket, and the whole set the bucket array (buckets).

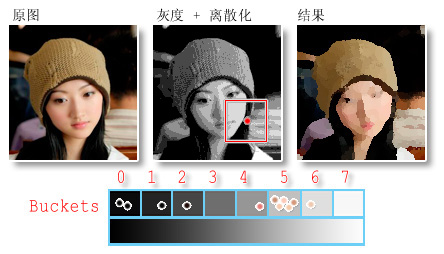

The algorithm traverses every pixel of the image. For the pixel at the current position (x, y), it converts all pixels within the template range to grayscale and then discretizes the gray values: each pixel in the template is placed into the bucket whose interval contains its gray level. It then finds the bucket that received the most pixels and uses the average color of all pixels in that bucket as the result at position (x, y).

The schematic diagram illustrates the description above. The middle image is the result of converting the original image to grayscale and discretizing it (equivalent to tone separation, that is, Posterize, in Photoshop), and the small box represents the template. Below it is the bucket array (8 buckets, that is, gray values from 0 to 255 discretized into 8 intervals).
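The description above can be sketched as a direct, unoptimized implementation. This is a minimal sketch, not the FilterExplorer code: the names `bucketOf` and `oilPaintNaive`, the tightly packed interleaved RGB layout, and the clipping of the template at the image border are assumptions made for illustration. The gray formula is the one quoted for FilterExplorer later in this article.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: map a pixel to its bucket index (0..smoothness).
static int bucketOf(uint8_t r, uint8_t g, uint8_t b, int smoothness) {
    int gray = static_cast<int>(0.3 * r + 0.59 * g + 0.11 * b);
    return static_cast<int>(gray * (smoothness / 255.0));
}

// Naive version: every output pixel rescans its whole template,
// so the cost per pixel grows with (2 * radius + 1)^2.
std::vector<uint8_t> oilPaintNaive(const std::vector<uint8_t>& rgb,
                                   int width, int height,
                                   int radius, int smoothness) {
    struct Bucket { unsigned count, r, g, b; };
    std::vector<uint8_t> out(rgb.size());
    std::vector<Bucket> buckets(smoothness + 1);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            std::fill(buckets.begin(), buckets.end(), Bucket{0, 0, 0, 0});
            // Drop every pixel of the (clipped) template into its bucket.
            for (int j = std::max(0, y - radius); j <= std::min(height - 1, y + radius); ++j) {
                for (int i = std::max(0, x - radius); i <= std::min(width - 1, x + radius); ++i) {
                    const uint8_t* p = &rgb[(j * width + i) * 3];
                    Bucket& bk = buckets[bucketOf(p[0], p[1], p[2], smoothness)];
                    bk.count++;
                    bk.r += p[0];
                    bk.g += p[1];
                    bk.b += p[2];
                }
            }
            // Pick the fullest bucket (the first one wins a tie) and average it.
            const Bucket* best = &buckets[0];
            for (const Bucket& bk : buckets) {
                if (bk.count > best->count) best = &bk;
            }
            uint8_t* q = &out[(y * width + x) * 3];
            q[0] = static_cast<uint8_t>(best->r / best->count);
            q[1] = static_cast<uint8_t>(best->g / best->count);
            q[2] = static_cast<uint8_t>(best->b / best->count);
        }
    }
    return out;
}
```

The template always contains the center pixel, so the winning bucket is never empty and the division is safe.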

(2) Improving the efficiency of the original code

Porting the existing code to a PS filter as is was not difficult. It took me about 1 to 2 days of spare time to get it basically working. But when reading the original source code, I could clearly see that it was not efficient enough. The algorithm completes in a single pass over the image and processes each pixel in constant time (for a fixed template size), so its complexity is O(n) in the number of pixels (image width * image height). The constant factor of the original code, however, is large: every time it computes a result pixel, it recomputes the grayscale of all pixels within the template and puts them into buckets again, which amounts to a great deal of repeated calculation.

2.1 My first improvement is to convert the entire current image patch in PS to grayscale and discretize it (assign each pixel to a bucket) once, up front, so that the grayscale and bucket index need not be recomputed as the template traverses the patch. This roughly doubles the speed of the algorithm (for one sample, the processing time dropped from more than 20 seconds to about 10 seconds).
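The idea of 2.1 can be sketched as a single pass that stores one bucket index per pixel. This is an illustrative sketch only: the name `buildBucketPlane` and the tightly packed interleaved RGB input are assumptions, and the gray formula is the FilterExplorer one quoted later in this article.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: compute the bucket index of every pixel exactly once.
// The template loop can then read plane[] instead of recomputing gray values.
std::vector<uint8_t> buildBucketPlane(const std::vector<uint8_t>& rgb,
                                      int width, int height, int smoothness) {
    std::vector<uint8_t> plane(static_cast<std::size_t>(width) * height);
    const double scale = smoothness / 255.0;  // gray 0..255 -> bucket 0..smoothness
    for (std::size_t p = 0; p < plane.size(); ++p) {
        const uint8_t* px = &rgb[p * 3];
        int gray = static_cast<int>(0.3 * px[0] + 0.59 * px[1] + 0.11 * px[2]);
        plane[p] = static_cast<uint8_t>(gray * scale);
    }
    return plane;
}
```

Because the scale factor is smoothness / 255.0, the largest gray value can land in bucket index smoothness, so smoothness + 1 bucket slots are needed.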

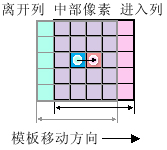

2.2 This speedup was still not enough, so I made a second, more important optimization: reducing the complexity with respect to the template size from quadratic to linear. It rests on the following observation. As the template moves from left to right along the current row, the statistics of the pixels in the middle of the template (the intersection of two adjacent template positions) do not change. Only the leftmost column leaves the template and a new rightmost column enters it. So while traversing the image we can ignore the pixels in the middle of the template and process only its two edges, as shown in the figure (radius is 2, template size is 5 * 5 pixels):

When the template reaches the right edge of the patch, we do not reset it to the start of the next line, as a carriage return and line feed would. Instead we move the template down one row, so that it enters the tail of the next row, and then translate it to the left. The trajectory of the template thus becomes a serpentine path. With this improvement, we only need to process the two edge columns (or rows) of the template as we traverse the pixels. The complexity with respect to the template size (the radius parameter) drops from O(n^2) to O(n), which greatly speeds up the algorithm. Combined with optimization 2.1, the algorithm ends up about 11 times faster (this is only a rough estimate and has not been tested on a large number of samples). The processing time of the optimized algorithm on large images has also become acceptable.

【Note】This optimization is possible because the filter algorithm is not a standard template algorithm. In essence it gathers statistics within the template range, so the result does not depend on where a pixel sits inside the template. It is like collecting figures such as the population and the ratio of men to women in a local area. That is why the optimization above works.
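The column update of 2.2 can be sketched on a plane of precomputed bucket indices. This is a simplified illustration that only tracks bucket counts along one row. The names `histogramBrute` and `histogramsSliding` are hypothetical, and the template is assumed to be clipped at the plane border.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Reference: count the buckets inside the clipped template centred at (x, y).
std::vector<int> histogramBrute(const std::vector<uint8_t>& plane, int w, int h,
                                int x, int y, int r, int bucketCount) {
    std::vector<int> hist(bucketCount, 0);
    for (int j = std::max(0, y - r); j <= std::min(h - 1, y + r); ++j)
        for (int i = std::max(0, x - r); i <= std::min(w - 1, x + r); ++i)
            ++hist[plane[j * w + i]];
    return hist;
}

// Sliding version: one full scan at x = 0, then only the column that
// leaves and the column that enters are touched for each step to the right.
std::vector<std::vector<int>> histogramsSliding(const std::vector<uint8_t>& plane,
                                                int w, int h, int y, int r,
                                                int bucketCount) {
    std::vector<std::vector<int>> result;
    std::vector<int> hist = histogramBrute(plane, w, h, 0, y, r, bucketCount);
    result.push_back(hist);
    const int yMin = std::max(0, y - r);
    const int yMax = std::min(h - 1, y + r);
    for (int x = 1; x < w; ++x) {
        const int colLeave = x - r - 1;  // column dropped on the left
        const int colEnter = x + r;      // column gained on the right
        if (colLeave >= 0)
            for (int j = yMin; j <= yMax; ++j) --hist[plane[j * w + colLeave]];
        if (colEnter < w)
            for (int j = yMin; j <= yMax; ++j) ++hist[plane[j * w + colEnter]];
        result.push_back(hist);
    }
    return result;
}
```

Each step touches at most 2 * (2 * radius + 1) pixels instead of (2 * radius + 1)^2, which is where the linear complexity in the template size comes from.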

→→→

↓

← ← ← ← ← ← ←

↓

→ → ...
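The serpentine path drawn above can be written down as a visiting order. The sketch below is only an illustration (the name `serpentineOrder` is hypothetical): even rows run left to right and odd rows run right to left, so consecutive positions are always neighbors and the template statistics can be carried over from one position to the next.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: list (x, y) positions in serpentine order.
std::vector<std::pair<int, int>> serpentineOrder(int width, int height) {
    std::vector<std::pair<int, int>> order;
    order.reserve(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        const bool rightward = (y & 1) == 0;  // even rows move to the right
        for (int k = 0; k < width; ++k) {
            const int x = rightward ? k : width - 1 - k;
            order.emplace_back(x, y);
        }
    }
    return order;
}
```

For a 3 * 2 patch the order is (0,0), (1,0), (2,0), (2,1), (1,1), (0,1): the move from the end of the first row to the second row is a single step down.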

The code of the filter's core algorithm is given below. All of it is located in algorithm.cpp:


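#include "Algorithm.h"

//=========================================
// Filter algorithm shared by the thumbnail and the actual processing
//=========================================
//
// The data is treated as RGB by default. GrayData is single-channel data whose rectangle matches InRect.
//
// bInitGray: whether the algorithm needs to recompute the grayscale data
// rowBytes: inData/outData, width of a scan line
// colBytes: inData/outData, equals the number of channels for interleaved layout, and 1 for planar layout
// planeBytes: number of bytes per channel (1 for interleaved layout)
// grayData: has only one channel, so grayColumnBytes is always 1
// buckets: gray buckets; each gray level occupies 4 UINTs: 0-count, 1-redSum, 2-greenSum, 3-blueSum
// abortProc: callback used to test for cancellation (during filter processing it tests whether the user pressed Escape;
//            in the thumbnail it tests whether a later Trackbar drag event has occurred)
// retVal: TRUE if not interrupted, otherwise FALSE (cancelled by the user or interrupted by a later UI event)
//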

BOOL FilterData_OilPaint(
    uint8* pDataIn, Rect& inRect, int inRowBytes, int inColumnBytes, int inPlaneBytes,
    uint8* pDataOut, Rect& outRect, int outRowBytes, int outColumnBytes, int outPlaneBytes,
    uint8* pDataGray, int grayRowBytes, BOOL bInitGray,
    int radius,
    int smoothness,
    UINT* buckets,
    TestAbortProc abortProc
    )
{
    int indexIn, indexOut, indexGray, x, y, i, j, i2, j2, k; // pixel indices
    uint8 red, green, blue;

    // set the bounds
    int imaxOut = (outRect.right - outRect.left);
    int jmaxOut = (outRect.bottom - outRect.top);
    int imaxIn = (inRect.right - inRect.left);
    int jmaxIn = (inRect.bottom - inRect.top);

    // offset between the two rectangles (inRect and outRect),
    // i.e. the coordinates of the top-left corner of outRect within inRect
    int x0 = outRect.left - inRect.left;
    int y0 = outRect.top - inRect.top;

    // grayscale discretization should be atomic and must not be split up
    if(bInitGray)
    {
        // convert the In patch to grayscale and discretize it
        double scale = smoothness / 255.0;
        for(j = 0; j < jmaxIn; j++)
        {
            for(i = 0; i < imaxIn; i++)
            {
                indexIn = i * inColumnBytes + j * inRowBytes; // source pixel [x, y]
                red = pDataIn[indexIn];
                green = pDataIn[indexIn + inPlaneBytes];
                blue = pDataIn[indexIn + inPlaneBytes*2];
                pDataGray[grayRowBytes * j + i] = (uint8)(GET_GRAY(red, green, blue) * scale);
            }
        }
    }

    if(abortProc != NULL && abortProc())
        return FALSE;

    // template and statistics
    // gray buckets: count, rSum, gSum, bSum
    //
    memset(buckets, 0, (smoothness + 1) * sizeof(UINT) * 4);

    int colLeave, colEnter, yMin, yMax;
    int rowLeave, rowEnter, xMin, xMax;
    int direction;

    // initialize the data for the first template position
    yMin = max(-y0, -radius);
    yMax = min(-y0 + jmaxIn - 1, radius);
    xMin = max(-x0, -radius);
    xMax = min(-x0 + imaxIn - 1, radius);

    for(j2 = yMin; j2 <= yMax; j2++)
    {
        for(i2 = xMin; i2 <= xMax; i2++)
        {
            indexIn = (j2 + y0) * inRowBytes + (i2 + x0) * inColumnBytes;
            indexGray = (j2 + y0) * grayRowBytes + (i2 + x0);

            buckets[ pDataGray[indexGray] * 4 ]++; //count
            buckets[ pDataGray[indexGray] * 4 + 1 ] += pDataIn[indexIn]; //redSum
            buckets[ pDataGray[indexGray] * 4 + 2 ] += pDataIn[indexIn + inPlaneBytes]; //greenSum
            buckets[ pDataGray[indexGray] * 4 + 3 ] += pDataIn[indexIn + inPlaneBytes*2]; //blueSum
        }
    }

    if(abortProc != NULL && abortProc())
        return FALSE;

    // enter the serpentine loop of the template
    for(j = 0; j < jmaxOut; j++)
    {
        if(abortProc != NULL && abortProc())
            return FALSE;

        // direction: horizontal direction of movement (1 - moving right; 0 - moving left)
        direction = 1 - (j & 1);

        // find the bucket with the most pixels
        GetMostFrequentColor(buckets, smoothness, &red, &green, &blue);
        if(direction)
        {
            indexOut = j * outRowBytes;
        }
        else
        {
            indexOut = j * outRowBytes + (imaxOut - 1) * outColumnBytes;
        }
        pDataOut[ indexOut ] = red;
        pDataOut[ indexOut + outPlaneBytes ] = green;
        pDataOut[ indexOut + outPlaneBytes * 2 ] = blue;

        i = direction ? 1 : (imaxOut - 2);

        for(k = 1; k < imaxOut; k++) // k has no meaning of its own; it only advances along the current row
        {
            // test for user cancellation once every 64 pixels (so there are tests in the middle of each row)
            if((k & 0x3F) == 0x3F && abortProc != NULL && abortProc())
            {
                return FALSE;
            }

            if(direction) // moving right
            {
                colLeave = i - radius - 1;
                colEnter = i + radius;
            }
            else // moving left
            {
                colLeave = i + radius + 1;
                colEnter = i - radius;
            }

            yMin = max(-y0, j - radius);
            yMax = min(-y0 + jmaxIn - 1, j + radius);

            // the column leaving the current template
            if((colLeave + x0) >= 0 && (colLeave + x0) < imaxIn)
            {
                for(j2 = yMin; j2 <= yMax; j2++)
                {
                    indexIn = (j2 + y0) * inRowBytes + (colLeave + x0) * inColumnBytes;
                    indexGray = (j2 + y0) * grayRowBytes + (colLeave + x0);

                    buckets[ pDataGray[indexGray] * 4 ]--; //count
                    buckets[ pDataGray[indexGray] * 4 + 1 ] -= pDataIn[indexIn]; //redSum
                    buckets[ pDataGray[indexGray] * 4 + 2 ] -= pDataIn[indexIn + inPlaneBytes]; //greenSum
                    buckets[ pDataGray[indexGray] * 4 + 3 ] -= pDataIn[indexIn + inPlaneBytes*2]; //blueSum
                }
            }

            // the column entering the current template
            if((colEnter + x0) >= 0 && (colEnter + x0) < imaxIn)
            {
                for(j2 = yMin; j2 <= yMax; j2++)
                {
                    indexIn = (j2 + y0) * inRowBytes + (colEnter + x0) * inColumnBytes;
                    indexGray = (j2 + y0) * grayRowBytes + (colEnter + x0);

                    buckets[ pDataGray[indexGray] * 4 ]++; //count
                    buckets[ pDataGray[indexGray] * 4 + 1 ] += pDataIn[indexIn]; //redSum
                    buckets[ pDataGray[indexGray] * 4 + 2 ] += pDataIn[indexIn + inPlaneBytes]; //greenSum
                    buckets[ pDataGray[indexGray] * 4 + 3 ] += pDataIn[indexIn + inPlaneBytes*2]; //blueSum
                }
            }

            // find the bucket with the most pixels
            GetMostFrequentColor(buckets, smoothness, &red, &green, &blue);

            // destination pixel [i, j]
            indexOut = j * outRowBytes + i * outColumnBytes;
            pDataOut[ indexOut ] = red;
            pDataOut[ indexOut + outPlaneBytes ] = green;
            pDataOut[ indexOut + outPlaneBytes * 2 ] = blue;

            i += direction ? 1 : -1;
        }

        // move the template down one row
        rowLeave = j - radius;
        rowEnter = j + radius + 1;

        if(direction)
        {
            xMin = max(-x0, (imaxOut - 1) - radius);
            xMax = min(-x0 + imaxIn - 1, (imaxOut - 1) + radius);
            indexOut = (j + 1) * outRowBytes + (imaxOut - 1) * outColumnBytes; // destination pixel [i, j]
        }
        else
        {
            xMin = max(-x0, -radius);
            xMax = min(-x0 + imaxIn - 1, radius);
            indexOut = (j + 1) * outRowBytes; // destination pixel [i, j]
        }

        // the row leaving the current template
        if((rowLeave + y0) >= 0 && (rowLeave + y0) < jmaxIn)
        {
            for(i2 = xMin; i2 <= xMax; i2++)
            {
                indexIn = (rowLeave + y0) * inRowBytes + (i2 + x0) * inColumnBytes;
                indexGray = (rowLeave + y0) * grayRowBytes + (i2 + x0);

                buckets[ pDataGray[indexGray] * 4 ]--; //count
                buckets[ pDataGray[indexGray] * 4 + 1 ] -= pDataIn[indexIn]; //redSum
                buckets[ pDataGray[indexGray] * 4 + 2 ] -= pDataIn[indexIn + inPlaneBytes]; //greenSum
                buckets[ pDataGray[indexGray] * 4 + 3 ] -= pDataIn[indexIn + inPlaneBytes*2]; //blueSum
            }
        }

        // the row entering the current template
        if((rowEnter + y0) >= 0 && (rowEnter + y0) < jmaxIn)
        {
            for(i2 = xMin; i2 <= xMax; i2++)
            {
                indexIn = (rowEnter + y0) * inRowBytes + (i2 + x0) * inColumnBytes;
                indexGray = (rowEnter + y0) * grayRowBytes + (i2 + x0);

                buckets[ pDataGray[indexGray] * 4 ]++; //count
                buckets[ pDataGray[indexGray] * 4 + 1 ] += pDataIn[indexIn]; //redSum
                buckets[ pDataGray[indexGray] * 4 + 2 ] += pDataIn[indexIn + inPlaneBytes]; //greenSum
                buckets[ pDataGray[indexGray] * 4 + 3 ] += pDataIn[indexIn + inPlaneBytes*2]; //blueSum
            }
        }
    }
    return TRUE;
}

// From the gray bucket array, pick the bucket holding the most pixels
// and use the average of the pixels in that bucket as the RGB result.
void GetMostFrequentColor(UINT* buckets, int smoothness, uint8* pRed, uint8* pGreen, uint8* pBlue)
{
    UINT maxCount = 0;
    int i, index = 0;
    for(i = 0; i <= smoothness; i++)
    {
        if(buckets[ i * 4 ] > maxCount)
        {
            maxCount = buckets[ i * 4 ];
            index = i;
        }
    }
    if(maxCount > 0)
    {
        *pRed = (uint8)(buckets[ index * 4 + 1 ] / maxCount); //Red
        *pGreen = (uint8)(buckets[ index * 4 + 2 ] / maxCount); //Green
        *pBlue = (uint8)(buckets[ index * 4 + 3 ] / maxCount); //Blue
    }
}

2.3 The original code limits the radius to the range 1 ~ 5. Because I optimized the code, I can allow a much larger range. When I set the radius to 100, however, I found that a very large radius is pointless, because the original image becomes almost unrecognizable.

[Summary] The improved code is more technical and more challenging. It involves many low-level pointer operations and coordinate conversions between different rectangles (input patch, output patch, template), which may slightly reduce readability, but the code remains readable once the principles above are understood. I also considered further changes to the algorithm, such as changing the template from a rectangle to a "circle" and randomly jittering the two parameters (template radius and number of buckets) while traversing the image. These changes, however, would defeat the optimization in 2.2, and the speed of the algorithm would fall back to a lower level.

(3) Use multi-threading technology to improve thumbnail display efficiency and avoid affecting the interactivity of the UI thread

For the technique of displaying a thumbnail in the parameter dialog box, see the fourth article in my earlier tutorial series on writing PS filters; I will not repeat it here. What I discuss here are the improvements to UI interaction when updating the thumbnail, and the zoom and pan techniques.

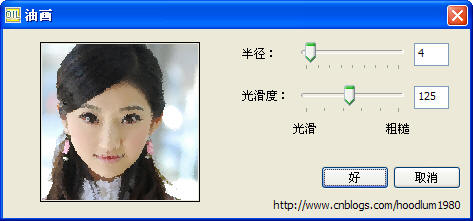

The picture shows the parameter dialog box that pops up when this filter is invoked in Photoshop. The user can drag a slider control (TrackBar, also known as Slider) or type directly into the text box next to it to change a parameter, and the thumbnail is updated in real time to reflect the new parameters. In my original implementation, the thumbnail was updated in the same thread as the dialog UI. This causes a problem: when the user drags a slider quickly, the parameters change quickly, and the UI thread may be busy processing thumbnail data and be "blocked" for a short time. It then cannot respond immediately to subsequent control events, so dragging the slider is not smooth: it jumps and stutters, and the feedback to mouse dragging is sluggish.

To solve this problem without burdening the UI thread, I moved the time-consuming thumbnail processing into a new thread. When the thread finishes processing the thumbnail, it notifies the dialog box to update its view. While a Trackbar is being dragged, the UI thread receives control notifications at a very high frequency, like a "surge", so the arrival of a subsequent UI event must cause the running thread task to terminate and exit quickly.


I extracted the filter algorithm into a shared function, so that the actual filter processing and the thumbnail update can both use it. In both cases, the filter algorithm needs to check for "task cancellation" events regularly. For example, when PS calls the filter and the user presses the ESC key, a time-consuming filter operation is abandoned immediately. When updating the thumbnail, if a surge of UI events arrives, the processing thread must likewise be able to terminate quickly.

The core algorithm function of the filter checks for "task cancellation" events regularly. Because the way to test for cancellation differs between the actual filter call in PS and the thumbnail update, I added a callback function parameter (TestAbortProc) to the filter algorithm function. When PS calls the filter for actual processing, PS's built-in callback is used to detect cancellation. When updating the thumbnail in the dialog box, I supply my own callback (it checks a Boolean variable to learn whether new UI events are waiting to be processed).
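The callback arrangement described above can be sketched in portable C++. This is not the plug-in's code: `AbortTest` is a hypothetical stand-in for TestAbortProc, `processRow` stands in for the per-row work of the filter, and the flag is assumed to be set by the UI thread when a new event arrives. The polling interval of 64 pixels follows the listing above.

```cpp
#include <atomic>
#include <cassert>
#include <functional>

// Hypothetical stand-in for TestAbortProc: returns true when work should stop.
using AbortTest = std::function<bool()>;

// Processes `width` pixels and polls the callback once every 64 pixels.
// Returns true if the row was finished, false if it was cancelled.
// `processed` reports how many pixels were handled before returning.
bool processRow(int width, const AbortTest& shouldAbort, int& processed) {
    processed = 0;
    for (int k = 0; k < width; ++k) {
        if ((k & 0x3F) == 0x3F && shouldAbort && shouldAbort()) {
            return false;  // give up quickly so a newer request can start
        }
        ++processed;  // placeholder for the real per-pixel work
    }
    return true;
}
```

For the thumbnail case, the callback can simply read a flag, for example `std::atomic<bool> pending{false};` together with `[&] { return pending.load(); }`, while the actual filter run would pass a callback that wraps the host's own cancellation test.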

I use a single thread for thumbnail processing. When a new UI event arrives, I check whether the thumbnail processing thread is running. If it is, I set a flag to signal the new UI event and wait for the thread to exit; only after the previous thread has exited do I start a new one. This way there is always just one thumbnail thread, rather than many threads being started as UI events keep arriving. The advantage is that the logic is clear and easy to control, and we avoid the unmaintainable situation of having too many threads. The disadvantage is that, although the thread checks for cancellation regularly, terminating it still takes a little time, so the UI thread still "pauses" slightly. This is trivial, however, and compared with updating the thumbnail in the UI thread it is a fundamental improvement.

After this improvement, you can drag the two sliders in the parameter dialog box very quickly. Although the core algorithm of this filter is computationally expensive, the parameter dialog box still responds smoothly.

(4) Zooming and panning the thumbnail

Actually, updating the thumbnail data is not difficult for either zooming or panning. The difficulty lies mainly in panning the thumbnail, because it involves mouse interaction, which requires solid Windows programming skills and an understanding of the underlying mechanisms of Windows programs.

There are two ways to drag a thumbnail:

4.1 Drag the result image directly. This can be done in two ways. One gives a nearly perfect drag effect, but at the cost of some space and time, and it is also harder to code: the input data of the thumbnail is expanded to 9 times the size and the result image is computed in memory, but only the central part of the result is displayed. When dragging, no blank area appears in the thumbnail. The other way is to take a snapshot (screenshot) of the current result image when dragging starts, and then simply paste the snapshot at the corresponding position on screen while dragging. This is more efficient, but the drawback is that a blank area is visible beside the thumbnail while dragging. This approach is often used when updating the view is expensive, as in vector drawing. The method I implemented in this filter falls into this category.

4.2 Drag the original input image. That is, the image shown while dragging is the original data rather than the result image. This is also a compromise that reduces the cost of updating the data. Photoshop's built-in Gaussian Blur filter, for example, uses this method: while the thumbnail is dragged, it shows the original image, and the preview effect appears only after the mouse button is released. This is more economical and effective, because requesting the original data is cheap, whereas processing the thumbnail once with the filter is expensive.

Here are some additional technical details. Note that because the mouse may move outside the client area (so a coordinate becomes negative), you cannot use LOWORD(lParam) and HIWORD(lParam) directly to obtain client-area coordinates, because WORD is an unsigned type. The values must be converted to signed numbers (short) before use. The correct way is to use the macros GET_X_LPARAM and GET_Y_LPARAM from the windowsx.h header file.

(5) The download link of this filter (the attachment contains the PS plug-in installation tool I wrote, which simplifies installation for the user)

-4-1 update, enhanced UI interaction performance]

// The latest collection of PS plug-ins I developed (including ICO, OILPAINT, DRAWTable, etc.)

http://files.cnblogs.com/hoodlum1980/PsPlugIns_V2013.zip

After installing the plug-in and restarting Photoshop:

Call this filter in the menu: Filter - hoodlum1980 - OilPaint.

You can see the About dialog box in the menu: Help - About Plug-in - OilPaint... (the appearance is almost the same as the About dialog box of the ICO file format plug-in I developed).

In the menu: Help - System Information, you can see whether the "OilPaint" item has been loaded and its version information.

(6) Additional notes

6.1 The output patch size I use is 128 * 128 pixels. During filter processing, each step of the progress bar on the Photoshop status bar indicates the completion of one output patch. The input patch is usually larger than or equal to the output patch, and its size depends on the radius parameter of the filter (the output patch is extended outward in all four directions by the template radius, in pixels).
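The relationship between the two patches in 6.1 can be sketched as follows. The struct `PatchRect`, the function `inputRectFor`, and the clipping against the document bounds are assumptions made for illustration; the article only states that the input patch extends the output patch by the radius.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical rectangle type; right and bottom are exclusive.
struct PatchRect { int left, top, right, bottom; };

// Grow the output patch by `radius` on every side, clipped to the image.
PatchRect inputRectFor(const PatchRect& out, int radius,
                       int imageWidth, int imageHeight) {
    PatchRect in;
    in.left   = std::max(0, out.left - radius);
    in.top    = std::max(0, out.top - radius);
    in.right  = std::min(imageWidth, out.right + radius);
    in.bottom = std::min(imageHeight, out.bottom + radius);
    return in;
}
```

With this layout, the offset of the output patch inside the input patch is out.left - in.left and out.top - in.top, which corresponds to x0 and y0 in the listing above.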

6.2 In the core algorithm of the filter, to respond more quickly to cancellation events, I test for cancellation once every 16 pixels processed in the current row (pixel index in row & 0x0F == 0x0F). Cancellation is also tested once for each row processed (in the outer loop over rows). However, this frequency is slightly too high, and testing too often may increase the cost of function calls. (The listing above tests every 64 pixels instead, using the mask 0x3F.)

6.3 Using the same image and the same parameters, I processed it with my filter, FilterExplorer, and PhotoSprite, and then compared the results in Photoshop. Because my algorithm is based on the source code of FilterExplorer and only improves its efficiency, it is equivalent to FilterExplorer's algorithm, and the results are exactly the same. The overall effect of my filter and FilterExplorer is very close to that of PhotoSprite, but the results differ slightly. I examined the code of PhotoSprite and found that the difference comes from the grayscale conversion (when I changed PhotoSprite's grayscale conversion to match the one in FilterExplorer, the results became identical).

In PhotoSprite, the method used to grayscale pixels is:

Gray = ( byte ) ( ( 19661 * R + 38666 * G + 7209 * B ) >> 16 ) ;

In FilterExplorer / the filter I developed, the method used to grayscale pixels is:

Gray = (byte) (0.3 * R + 0.59 * G + 0.11 * B ) ;

The grayscale method in PhotoSprite converts floating point multiplication into integer multiplication. This may be slightly more efficient, but the gain here is not significant.
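The two formulas can be compared directly. The integer weights are the floating point weights scaled by 65536 and rounded (0.3 * 65536 ≈ 19661, 0.59 * 65536 ≈ 38666, 0.11 * 65536 ≈ 7209), so the two results can differ by one gray level for some colors. The sketch below uses hypothetical function names and simply measures the largest difference over a grid of colors.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Fixed-point form, as quoted for PhotoSprite.
uint8_t grayFixed(int r, int g, int b) {
    return static_cast<uint8_t>((19661 * r + 38666 * g + 7209 * b) >> 16);
}

// Floating point form, as quoted for FilterExplorer.
uint8_t grayFloat(int r, int g, int b) {
    return static_cast<uint8_t>(0.3 * r + 0.59 * g + 0.11 * b);
}

// Largest absolute difference between the two forms, sampling each
// channel from 0 to 255 in increments of `step`.
int maxGrayDifference(int step) {
    int worst = 0;
    for (int r = 0; r <= 255; r += step)
        for (int g = 0; g <= 255; g += step)
            for (int b = 0; b <= 255; b += step) {
                int d = std::abs(static_cast<int>(grayFixed(r, g, b)) -
                                 static_cast<int>(grayFloat(r, g, b)));
                if (d > worst) worst = d;
            }
    return worst;
}
```

A difference of a single gray level is enough to move a pixel into a neighboring bucket, which is consistent with the slightly different output observed for PhotoSprite.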

(7) References

7.1 FilterExplorer source code.

7.2 Photoshop 6.0 SDK Documents.

7.3 FillRed and IcoFormat plug-in source code, by hoodlum1980 (myself).

(8) Revision history

8.01 [H] Fixed a bug in which the filter passed the wrong memory size when allocating the gray bucket memory in the Continue call (it was mistakenly set to the size of the grayscale bitmap). The bug is easily triggered when the document is very small, or when the radius parameter is small and the smoothness parameter is large. Under these conditions a patch may be too small, so the gray bucket space allocated is smaller than actually required, and later code may access memory out of bounds, causing the PS process to terminate unexpectedly. 2011-1-19 19:01.

8.02 [M] Added thumbnail zoom buttons and the zoom function, and optimized the code of the zoom in and zoom out buttons to reduce flicker when zooming. 2011-1-19 19:06.

8.03 [M] Added mouse dragging of the thumbnail, and further adjusted the code to fix the thumbnail rectangle, completely avoiding flicker when zooming. 2011-1-19 23:50.

8.04 [L] New feature: when the mouse moves over the thumbnail or drags it, the cursor changes into an open or grabbing hand. This is achieved by calling functions in the Suite PEA UI Hooks suite in PS, so what you see on the thumbnail is PS's own cursor. 2011-1-20 1:23.

8.05 [M] Fixed a bug in which the radius parameter was converted incorrectly when clicking the zoom in or zoom out button in the parameter dialog box. The bug caused the thumbnail to be displayed incorrectly after clicking the zoom in button. 2011-1-20 1:55.

8.06 [L] Changed the URL link in the About dialog box to a SysLink control, which greatly simplifies the window procedure code of the About dialog box. 2011-1-20 18:12.

8.07 [L] Updated the plug-in installation tool so that it can install multiple plug-ins at once. 2011-1-20 21:15.

8.08 [L] Because of calculation errors when scaling the thumbnail (the cause is unknown, possibly floating point error), the part of the image near the lower right edge might not be reachable by panning in the thumbnail view, so an error margin was added to the pan range. 2011-1-27.

8.09 [L] Beautification: changed the zoom buttons in the filter parameter dialog box to use DirectUI technology (added a new class CImgButton); the interface looks better than with the original button controls. 2011-2-14.

8.10 [M] Performance: improved the interactive performance of the sliders that adjust the radius and smoothness parameters in the parameter dialog box. 2013-4-1.
