Why can't js handle decimal operations correctly?

- WBOY (original)

- 2016-05-16 15:22:59

var sum = 0;
for (var i = 0; i < 10; i++) {
    sum += 0.1;
}
console.log(sum);

Will the above program output 1?
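To check this directly, the loop can be wrapped in a small function and its result compared with 1. This is only a sketch; the names `sumTenths` and `total` are made up for illustration and are not part of the original program.

```javascript
// Add 0.1 to a running total `count` times, as in the loop above.
function sumTenths(count) {
  let sum = 0;
  for (let i = 0; i < count; i++) {
    sum += 0.1;
  }
  return sum;
}

const total = sumTenths(10);
console.log(total);       // 0.9999999999999999
console.log(total === 1); // false
```

The result is very close to 1, but it is not equal to 1.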

In the article "25 JavaScript interview questions you need to know", the 8th question briefly explains why js cannot handle decimal operations correctly. Today I will revisit this old topic and analyze the issue in more depth.

First, note that this is not a design flaw of the JavaScript language itself. Other high-level programming languages, such as C and Java, cannot handle decimal operations exactly either:

#include <stdio.h>

int main(void) {
    float sum = 0;
    int i;

    /* Add 0.1 one hundred times; the exact answer would be 10. */
    for (i = 0; i < 100; i++) {
        sum += 0.1;
    }
    printf("%f\n", sum); // 10.000002
    return 0;
}

Representation of numbers inside the computer

We all know that programs written in high-level programming languages must be converted, through interpretation or compilation, into machine language that the CPU (Central Processing Unit) can recognize before they can run. However, the CPU does not recognize decimal, octal, or hexadecimal numbers. Whatever base we use to write a number in the program, it is converted into a binary number for calculation.

Why not convert it into ternary numbers for calculation?

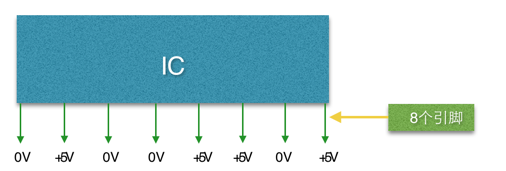

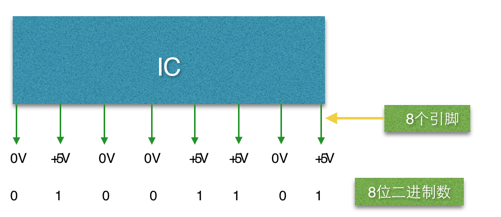

The inside of a computer is made up of many electronic components such as ICs (integrated circuits).

ICs come in many shapes, with many pins arranged side by side along both sides or inside them (only one side is shown in the picture). Every pin of an IC carries a DC voltage of either 0 V or 5 V, so one pin can represent only two states. This characteristic of ICs is why data inside the computer can only be processed as binary numbers.

Since 1 bit (one pin) can represent only two states, counting in binary proceeds as 0, 1, 10, 11, 100, and so on.

So in numeric operations, every operand is converted into a binary number before it takes part in the calculation. For example, 39 is converted into binary 00100111.
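The conversion of an integer such as 39 can be sketched by repeated division by 2, collecting the remainders from right to left. The helper name `toBinary` and its `width` parameter are assumptions made for this example. The built-in `Number.prototype.toString(2)` gives the same digits.

```javascript
// Convert a non-negative integer to a binary string by repeated division by 2.
// `width` is the minimum number of digits; shorter results are left-padded with 0.
function toBinary(n, width) {
  let bits = "";
  do {
    bits = (n % 2) + bits;   // the remainder is the next digit, right to left
    n = Math.floor(n / 2);
  } while (n > 0);
  return bits.padStart(width, "0");
}

console.log(toBinary(39, 8));                   // 00100111
console.log((39).toString(2).padStart(8, "0")); // 00100111 (built-in equivalent)
```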

Binary representation of decimals

As mentioned above, the data in a program is converted into binary numbers, and this applies to decimals as well. For example, decimal 11.1875 is converted into binary 1011.0011.

With 4 binary digits after the point, the representable values run from 0.0000 to 0.1111. Such a number can only express sums of the four place weights after the point, which in decimal are 0.5, 0.25, 0.125, and 0.0625.

Counting up from 0 in this way, the next representable decimal value is 0.0625, so decimals strictly between 0 and 0.0625 cannot be represented with 4 binary digits after the point. Adding more binary digits after the point also increases the number of corresponding decimal digits, but no matter how many digits are added, the result never comes to exactly 0.1. In fact, 0.1 converted to binary is 0.000110011001100110011..., with 0011 repeating infinitely:
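The sixteen values that 4 binary digits after the point can express are easy to enumerate. The following sketch lists each one with its decimal equivalent; the function name `fourBitFractions` is made up for this example.

```javascript
// List every binary fraction with 4 digits after the point
// together with its decimal value (n / 16 for n = 0..15).
function fourBitFractions() {
  const rows = [];
  for (let n = 0; n < 16; n++) {
    const binary = "0." + n.toString(2).padStart(4, "0");
    rows.push({ binary: binary, decimal: n / 16 });
  }
  return rows;
}

for (const row of fourBitFractions()) {
  console.log(row.binary + " = " + row.decimal);
}
```

The list starts at 0.0000 = 0 and 0.0001 = 0.0625 and ends at 0.1111 = 0.9375. The value 0.1 does not appear in it.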

console.log(0.2 + 0.1);
// Binary representation of the operands:
// 0.1 => 0.0001 1001 1001 1001… (repeats infinitely)
// 0.2 => 0.0011 0011 0011 0011… (repeats infinitely)
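The repeating pattern can be reproduced with exact integer arithmetic: double the remainder, emit 1 if it reaches the denominator, and continue with what is left over. This is a minimal sketch; the name `binaryFraction` and its parameters are assumptions for illustration. Each fraction is passed as a numerator and denominator so that no floating-point rounding enters the calculation.

```javascript
// Binary expansion of numerator/denominator (a value in [0, 1)),
// computed digit by digit with integers only.
function binaryFraction(numerator, denominator, digits) {
  let remainder = numerator;
  let out = "0.";
  for (let i = 0; i < digits; i++) {
    remainder *= 2;
    if (remainder >= denominator) {
      out += "1";
      remainder -= denominator;
    } else {
      out += "0";
    }
  }
  return out;
}

console.log(binaryFraction(1, 10, 20)); // 0.1    => 0.00011001100110011001
console.log(binaryFraction(1, 5, 20));  // 0.2    => 0.00110011001100110011
console.log(binaryFraction(3, 16, 4));  // 0.1875 => 0.0011 (terminates)
```

For 1/10 and 1/5 the remainder never reaches 0, so the digits keep cycling. For 3/16 the remainder reaches 0 after four digits.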

The Number type of js is not divided into integer, single precision, double precision, and so on as in C or Java. Every Number is represented as a double-precision floating-point value. Under the IEEE 754 standard, a single-precision floating-point number occupies 32 bits in total and a double-precision floating-point number occupies 64 bits. A floating-point number is made up of a sign, a mantissa, an exponent, and a base, so not all of the bits are available for the fraction digits: the sign and the exponent also occupy bits, while the base (always 2) occupies none.

The fraction part (mantissa) of a double-precision floating-point number holds at most 52 binary digits. Adding the two operands therefore gives a binary number of the form 0.0100110011001100110011001100110011001100..., which is cut off because of the limit on fraction digits. Converted back to decimal, the result becomes 0.30000000000000004.
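The bit layout of a double (1 sign bit, 11 exponent bits, 52 mantissa bits) can be inspected from JavaScript with a `DataView`. The sketch below is one possible way to do it; the helper name `doubleBits` is made up for this example.

```javascript
// Split a Number into the three bit fields of an IEEE 754 double:
// 1 sign bit, 11 exponent bits, 52 mantissa (fraction) bits.
function doubleBits(value) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, value); // DataView writes big-endian by default
  let bits = "";
  for (let i = 0; i < 8; i++) {
    bits += view.getUint8(i).toString(2).padStart(8, "0");
  }
  return {
    sign: bits.slice(0, 1),
    exponent: bits.slice(1, 12),
    mantissa: bits.slice(12),
  };
}

console.log(doubleBits(0.1));
```

For 0.1, the mantissa shows the 1001 pattern repeated until the 52 bits run out, and the last group is rounded up to 1010.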
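The effect of the 52-digit limit can be observed directly. The following lines are only a sketch (the variable name `result` is arbitrary): they print the sum, compare it with 0.3, and show the binary digits that are actually stored.

```javascript
const result = 0.1 + 0.2;

console.log(result);              // 0.30000000000000004
console.log(result === 0.3);      // false
console.log(result.toString(2));  // binary digits of the stored sum
console.log((0.3).toString(2));   // binary digits of the stored literal 0.3
```

The two binary strings differ only in their final digits, which is where the cut-off takes effect.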

Summary

js, like other high-level programming languages, cannot handle decimal operations exactly. This is not a design flaw of the language itself. The computer works in binary, and not every decimal fraction can be represented exactly in binary, so operations on decimals often produce unexpected results.

That is the entire content of this article. I hope it will be helpful to everyone's study.
