PySpark, the Python API for Apache Spark, lets Python developers use Spark's distributed processing engine for big data workloads. It exposes Spark's core strengths, including in-memory computation and the MLlib machine learning library, through a Pythonic interface for data manipulation and analysis, which makes it a sought-after skill in the big data landscape. Preparing for PySpark interviews requires a solid understanding of its core concepts, and this article presents 30 key questions and answers to aid in that preparation.

This guide covers foundational PySpark concepts, including transformations, key features, the differences between RDDs and DataFrames, and advanced topics like Spark Streaming and window functions. Whether you're a recent graduate or a seasoned professional, these questions and answers will help you solidify your knowledge and confidently tackle your next PySpark interview.

Key Areas Covered:

- PySpark fundamentals and core features.

- Understanding and applying RDDs and DataFrames.

- Mastering PySpark transformations (narrow and wide).

- Real-time data processing with Spark Streaming.

- Advanced data manipulation with window functions.

- Optimization and debugging techniques for PySpark applications.

Top 30 PySpark Interview Questions and Answers for 2025:

Here's a curated selection of 30 essential PySpark interview questions and their comprehensive answers:

Fundamentals:

- What is PySpark and its relationship to Apache Spark? PySpark is the Python API for Apache Spark, allowing Python programmers to utilize Spark's distributed computing capabilities for large-scale data processing.

- Key features of PySpark? Ease of Python integration, a pandas-like DataFrame API, real-time processing (Spark Streaming), in-memory computation, and a robust machine learning library (MLlib).

- RDD vs. DataFrame? RDDs (Resilient Distributed Datasets) are Spark's fundamental data structure, offering low-level control but less optimization. DataFrames provide a higher-level abstraction with a schema, offering improved performance and ease of use.

- How does the Spark SQL Catalyst Optimizer improve query performance? Catalyst rewrites a query's logical plan using optimization rules (predicate pushdown, constant folding, etc.) and then chooses a physical execution plan, so the query runs more efficiently than it would as written.

- PySpark cluster managers? Standalone, Hadoop YARN, Kubernetes, and Apache Mesos (deprecated since Spark 3.2).

Transformations and Actions:

- Lazy evaluation in PySpark? Transformations are not executed immediately; Spark records them in an execution plan and runs it only when an action is triggered. This lets Spark optimize the whole plan before doing any work.

- Narrow vs. wide transformations? Narrow transformations involve one-to-one partition mapping, so no data moves between partitions (e.g., `map`, `filter`). Wide transformations require data shuffling across partitions (e.g., `groupByKey`, `reduceByKey`).

- Reading a CSV into a DataFrame? `df = spark.read.csv('path/to/file.csv', header=True, inferSchema=True)`

- Performing SQL queries on DataFrames? Register the DataFrame as a temporary view (`df.createOrReplaceTempView("my_table")`) and then use `spark.sql("SELECT ... FROM my_table")`.

- `cache()` method? Marks an RDD or DataFrame to be kept for faster access in subsequent operations. Caching is lazy: the data is stored the first time an action computes it. By default an RDD is cached in memory only, while a DataFrame is cached in memory and spills to disk.

- Spark's DAG (Directed Acyclic Graph)? Represents the execution plan as a graph of stages and tasks, enabling efficient scheduling and optimization.

- Handling missing data in DataFrames? Use the `dropna()`, `fillna()`, and `replace()` methods.

Advanced Concepts:

- `map()` vs. `flatMap()`? `map()` applies a function to each element, producing one output per input. `flatMap()` applies a function that can produce multiple outputs per input, flattening the result.

- Broadcast variables? Cache read-only variables in memory across all nodes for efficient access.

- Spark accumulators? Shared variables that tasks can only add to, through associative and commutative operations (e.g., counters, sums); only the driver reads the resulting value.

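- Joining DataFrames? Use the `join()` method, specifying the join condition.

- Partitions in PySpark? Fundamental units of parallelism; controlling their number impacts performance. `repartition()` performs a full shuffle and can increase or decrease the partition count, while `coalesce()` reduces it without a full shuffle.

- Writing a DataFrame to CSV? `df.write.csv('path/to/output.csv', header=True)`. Note that the path becomes a directory containing one part file per partition, not a single CSV file.

- Spark SQL Catalyst Optimizer (revisited)? The component of Spark SQL that turns a DataFrame or SQL query into an optimized physical plan; calling `explain()` on a DataFrame shows the plan it produced.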

- PySpark UDFs (User Defined Functions)? Extend PySpark functionality by defining custom functions using `udf()` and specifying the return type.

Data Manipulation and Analysis:

- Aggregations on DataFrames? Use `groupBy()` followed by aggregation functions such as `agg()`, `sum()`, `avg()`, and `count()`.

- `withColumn()` method? Adds new columns or modifies existing ones in a DataFrame.

- `select()` method? Selects specific columns from a DataFrame.

- Filtering rows in a DataFrame? Use the `filter()` or `where()` methods with a condition.

- Spark Streaming? Processes real-time data streams in micro-batches, applying transformations on each batch. The original DStream API is now considered legacy; Structured Streaming, built on DataFrames, is the recommended API for new applications.

Data Handling and Optimization:

- Handling JSON data? `spark.read.json('path/to/file.json')`

- Window functions? Perform calculations across a set of rows related to the current row (e.g., running totals, ranking).

- Debugging PySpark applications? Use logging, the Spark web UI, and platform or third-party tooling (Databricks, EMR, IDE plugins).

Further Considerations:

- Explain the concept of data serialization and deserialization in PySpark and its impact on performance. (This delves into performance optimization.)

- Discuss different approaches to handling data skew in PySpark. (This focuses on a common performance challenge.)

This set of questions and answers provides a preparation guide for your PySpark interviews. Remember to practice coding examples and demonstrate your understanding of the underlying concepts. Good luck!

The above is the detailed content of Top 30 PySpark Interview Questions and Answers (2025). For more information, please follow other related articles on the PHP Chinese website!

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

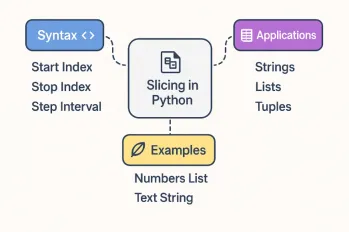

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AM

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AMOpenAI's latest subscription plan, ChatGPT Pro, provides advanced AI problem resolution! In December 2024, OpenAI announced its top-of-the-line plan, the ChatGPT Pro, which costs $200 a month. In this article, we will explain its features, particularly the performance of the "o1 pro mode" and new initiatives from OpenAI. This is a must-read for researchers, engineers, and professionals aiming to utilize advanced AI. ChatGPT Pro: Unleash advanced AI power ChatGPT Pro is the latest and most advanced product from OpenAI.

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AM

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AMIt is well known that the importance of motivation for applying when looking for a job is well known, but I'm sure there are many job seekers who struggle to create it. In this article, we will introduce effective ways to create a motivation statement using the latest AI technology, ChatGPT. We will carefully explain the specific steps to complete your motivation, including the importance of self-analysis and corporate research, points to note when using AI, and how to match your experience and skills with company needs. Through this article, learn the skills to create compelling motivation and aim for successful job hunting! OpenAI's latest AI agent, "Open

What's so amazing about ChatGPT? A thorough explanation of its features and strengths!May 14, 2025 am 01:26 AM

What's so amazing about ChatGPT? A thorough explanation of its features and strengths!May 14, 2025 am 01:26 AMChatGPT: Amazing Natural Language Processing AI and how to use it ChatGPT is an innovative natural language processing AI model developed by OpenAI. It is attracting attention around the world as an advanced tool that enables natural dialogue with humans and can be used in a variety of fields. Its excellent language comprehension, vast knowledge, learning ability and flexible operability have the potential to transform our lives and businesses. In this article, we will explain the main features of ChatGPT and specific examples of use, and explore the possibilities for the future that AI will unlock. Unraveling the possibilities and appeal of ChatGPT, and enjoying life and business

![[Images generated using AI] How to make and print Bikkuriman chocolate-style stickers with ChatGPT](https://img.php.cn/upload/article/001/242/473/174715657146278.jpg?x-oss-process=image/resize,p_40) [Images generated using AI] How to make and print Bikkuriman chocolate-style stickers with ChatGPTMay 14, 2025 am 01:16 AM

[Images generated using AI] How to make and print Bikkuriman chocolate-style stickers with ChatGPTMay 14, 2025 am 01:16 AMRelease childhood memories! Create your exclusive stickers with ChatGPT! Do you remember the fun of collecting stickers from childhood? Nowadays, with the powerful image generation capabilities of ChatGPT, you can easily create unique characters in style without drawing skills! This article will teach you step by step how to transform photos or illustrations into shiny stickers full of nostalgia using ChatGPT. We will explain everything from detailed tip word examples to sticker making and printing steps, creative ideas shared on social media, and even copyright and portrait rights. Table of contents Why can ChatGPT make pictures of the wind? ChatGPT image generation successfully

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Dreamweaver Mac version

Visual web development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools