This article explores the evolution of OpenAI's GPT models, focusing on GPT-2 and GPT-3. These models represent a significant shift in the approach to large language model (LLM) training, moving away from the traditional "pre-training plus fine-tuning" paradigm towards a "pre-training only" approach.

This shift was driven by observations of GPT-1's zero-shot capabilities – its ability to perform tasks it hadn't been specifically trained for. To understand this better, let's delve into the key concepts:

Part 1: The Paradigm Shift and its Enablers

Fine-tuning needs a labeled dataset for every new task, which does not scale to the vast array of NLP tasks a model may encounter. Fine-tuning a large model on a small dataset also risks overfitting and poor generalization outside the training distribution. Humans, by contrast, can usually pick up a language task from a brief instruction or a handful of examples, without a massive supervised dataset. Together, these observations motivated the move towards task-agnostic learning.

Three key elements facilitated this paradigm shift:

- Task-Agnostic Learning (Meta-Learning): This approach equips the model with a broad skillset during training, allowing it to adapt rapidly to new tasks without further fine-tuning. Model-Agnostic Meta-Learning (MAML) exemplifies this concept.

- The Scale Hypothesis: This hypothesis posits that larger models trained on larger datasets exhibit emergent capabilities, that is, abilities that appear unexpectedly as model size and data increase. GPT-2 and GPT-3 served as experiments to test this.

- In-Context Learning: This technique involves providing the model with a natural language instruction and, optionally, a few examples (demonstrations) at inference time, allowing it to infer the task from the prompt alone, without gradient updates. Zero-shot, one-shot, and few-shot learning differ only in how many demonstrations are provided: none, one, or a few.
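The three in-context settings differ only in how the prompt is assembled, which a short sketch can make concrete. The helper `build_prompt`, the `=>` separator, and the translation demonstrations below are illustrative assumptions rather than a fixed format; any consistent layout would serve the same purpose.

```python
def build_prompt(instruction, demonstrations, query):
    """Assemble an in-context prompt: instruction, k demonstrations, then the query.

    No model weights are updated; the task is conveyed entirely through the text.
    """
    lines = [instruction]
    for source, target in demonstrations:
        lines.append(f"{source} => {target}")
    # The final line is left open for the model to complete.
    lines.append(f"{query} =>")
    return "\n".join(lines)


# Hypothetical demonstrations for an English-to-French translation task.
instruction = "Translate English to French:"
demos = [("sea otter", "loutre de mer"), ("cheese", "fromage")]

zero_shot = build_prompt(instruction, [], "peppermint")        # no demonstrations
one_shot = build_prompt(instruction, demos[:1], "peppermint")  # one demonstration
few_shot = build_prompt(instruction, demos, "peppermint")      # several demonstrations

print(few_shot)
```

The resulting string would be passed to the model as-is; the number of demonstrations is bounded in practice by the model's context size.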

Part 2: GPT-2 – A Stepping Stone

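GPT-2 built upon GPT-1's architecture with several modifications: LayerNorm was moved to the input of each sub-block (with an additional LayerNorm after the final block), the weights of residual layers were scaled at initialization by 1/√N (where N is the number of residual layers), the vocabulary was expanded to 50,257 tokens, the context size was increased to 1024 tokens, and the batch size was raised to 512. Four models were trained, with parameter counts ranging from 117M to 1.5B. The training dataset, WebText, was built from the text of approximately 45M links, which after de-duplication and cleaning yielded slightly over 8 million documents. While GPT-2 showed promising zero-shot results, particularly in language modeling, it lagged behind state-of-the-art models on tasks like reading comprehension and translation.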
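Two of these architectural changes are easy to show in isolation. The following is a minimal sketch in plain Python, assuming a toy vector as the hidden state and a LayerNorm without learnable gain and bias; the function names are hypothetical and this is not the original implementation.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalise a vector to zero mean and unit variance (no learnable gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def post_ln_block(x, sublayer):
    """GPT-1 style ordering: apply the sub-block, add the residual, then normalise."""
    return layer_norm([xi + yi for xi, yi in zip(x, sublayer(x))])


def pre_ln_block(x, sublayer):
    """GPT-2 style ordering: normalise the sub-block's input, then add the residual."""
    return [xi + yi for xi, yi in zip(x, sublayer(layer_norm(x)))]


def residual_init_scale(n_residual_layers):
    """Factor applied to residual-layer weights at initialization: 1 / sqrt(N)."""
    return 1.0 / math.sqrt(n_residual_layers)


# Toy sub-block that doubles its input, standing in for attention or an MLP.
double = lambda h: [2.0 * v for v in h]
x = [1.0, 2.0, 3.0]
print(pre_ln_block(x, double))
print(post_ln_block(x, double))
print(residual_init_scale(4))
```

With pre-LN ordering the residual path carries the un-normalised input straight through each block, which is commonly credited with making deep stacks easier to train.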

Part 3: GPT-3 – A Leap Forward

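GPT-3 retained a similar architecture to GPT-2, differing primarily in its use of alternating dense and locally banded sparse attention patterns across layers. Eight models were trained, ranging from 125M to 175B parameters. The training data was significantly larger and more diverse, combining a filtered version of Common Crawl with WebText2, two book corpora, and English Wikipedia. The datasets were carefully curated and sampled with weights based on quality rather than size, so that higher-quality sources were seen more often during training.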
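The parameter counts at the two ends of this range can be approximated with simple arithmetic. The sketch below uses the common rule of thumb of about 12·d² weights per Transformer layer (4·d² for attention, 8·d² for the feed-forward block) plus token and position embeddings, ignoring biases and LayerNorm parameters. The layer counts and widths are not stated in this article; they are assumed here from the published GPT-3 configurations, and `approx_params` is a hypothetical helper.

```python
def approx_params(n_layer, d_model, vocab_size=50257, context_size=2048):
    """Rough parameter count for a decoder-only Transformer.

    Per layer: attention projections (~4 * d^2) + feed-forward block (~8 * d^2).
    Biases and LayerNorm parameters are ignored, so this is an estimate only.
    """
    block_params = 12 * n_layer * d_model ** 2
    embedding_params = (vocab_size + context_size) * d_model
    return block_params + embedding_params


# Assumed configurations (layers, model width) for the smallest and largest models.
small = approx_params(n_layer=12, d_model=768)
large = approx_params(n_layer=96, d_model=12288)

print(f"smallest model: ~{small / 1e6:.0f}M parameters")
print(f"largest model:  ~{large / 1e9:.0f}B parameters")
```

Under these assumptions the estimates land close to the quoted 125M and 175B, which shows that almost all of the growth comes from the d² term in the Transformer blocks rather than from the embeddings.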

Key findings from GPT-3's evaluation demonstrate the effectiveness of the scale hypothesis and in-context learning. Performance scaled smoothly with increased compute, and larger models showed superior performance across zero-shot, one-shot, and few-shot learning settings.
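Smooth scaling of this kind is typically summarised as a power law, loss ≈ a · compute^(−b), which appears as a straight line on a log-log plot. The sketch below fits such a line by ordinary least squares in log space. The data points are synthetic, generated from made-up constants purely to exercise the fit; they are not measurements from GPT-3, and `fit_power_law` is a hypothetical helper.

```python
import math


def fit_power_law(compute, loss):
    """Fit loss ≈ a * compute**(-b) by least squares on (log compute, log loss)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


# Synthetic data from arbitrary constants (assumption, for illustration only).
true_a, true_b = 3.0, 0.05
compute = [10.0 ** k for k in range(-3, 4)]
loss = [true_a * c ** (-true_b) for c in compute]

a, b = fit_power_law(compute, loss)
print(f"fitted a={a:.3f}, b={b:.3f}")
```

On real training runs the points would scatter around the line, and the fitted exponent indicates how much additional compute is needed for a given reduction in loss.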

Part 4: Conclusion

GPT-2 and GPT-3 represent significant advancements in LLM development, paving the way for future research into emergent capabilities, training paradigms, data cleaning, and ethical considerations. Their success highlights the potential of task-agnostic learning and the power of scaling up both model size and training data. This research continues to influence the development of subsequent models, such as GPT-3.5 and InstructGPT.

For related articles in this series, see:

- Part 1: Understanding the Evolution of ChatGPT: Part 1 – An In-Depth Look at GPT-1 and What Inspired It.

- Part 3: Insights from Codex and InstructGPT


What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PM

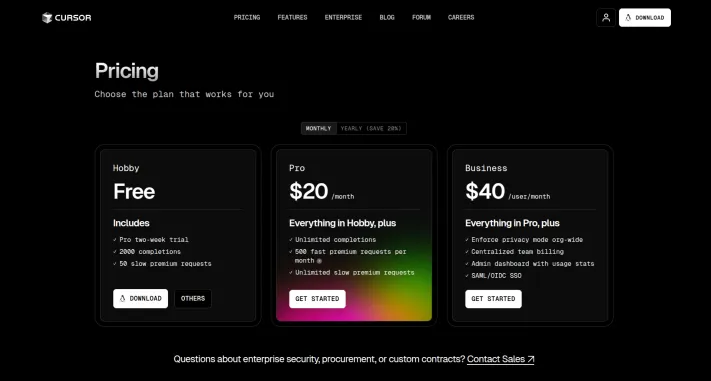

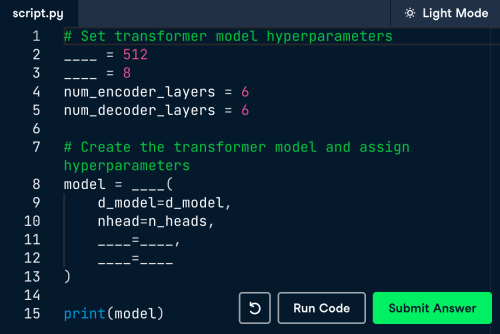

What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PMThe Model Context Protocol (MCP): A Universal Connector for AI and Data We're all familiar with AI's role in daily coding. Replit, GitHub Copilot, Black Box AI, and Cursor IDE are just a few examples of how AI streamlines our workflows. But imagine

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PM

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PMMicrosoft's OmniParser V2 and OmniTool: Revolutionizing GUI Automation with AI Imagine AI that not only understands but also interacts with your Windows 11 interface like a seasoned professional. Microsoft's OmniParser V2 and OmniTool make this a re

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AM

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AMRevolutionizing App Development: A Deep Dive into Replit Agent Tired of wrestling with complex development environments and obscure configuration files? Replit Agent aims to simplify the process of transforming ideas into functional apps. This AI-p

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AM

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AMThis blog post shares my experience testing Runway ML's new Act-One animation tool, covering both its web interface and Python API. While promising, my results were less impressive than expected. Want to explore Generative AI? Learn to use LLMs in P

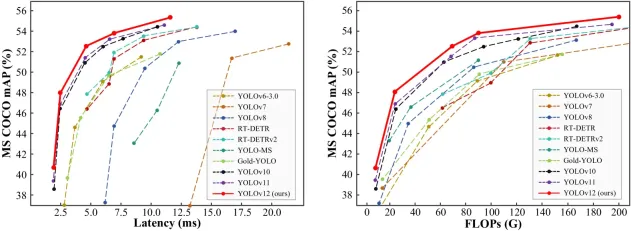

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor

WebStorm Mac version

Useful JavaScript development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment