Why Retrieval-Augmented Generation Is Still Relevant in the Era of Long-Context Language Models

Let's explore the evolution of Retrieval-Augmented Generation (RAG) in the context of increasingly powerful Large Language Models (LLMs). We'll examine how advancements in LLMs are affecting the necessity of RAG.

A Brief History of RAG

RAG isn't a new concept. The idea of supplying an LLM with external context so it can draw on current data goes back to a 2020 paper from Facebook AI (now Meta), "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks," which predates ChatGPT's November 2022 debut. The paper distinguished two types of memory for LLMs:

- Parametric memory: The knowledge inherent to the LLM, acquired during its training on vast text datasets.

- Non-parametric memory: External context provided within the prompt.

The original paper utilized text embeddings for semantic search to retrieve relevant documents, although this isn't the only method for document retrieval in RAG. Their research demonstrated that RAG yielded more precise and factual responses compared to using the LLM alone.
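The retrieval step described above can be sketched in a few lines. This is only an illustration: the `embed` function below is a toy bag-of-words stand-in for a real embedding model, and the names `embed`, `cosine`, and `retrieve` are hypothetical, not taken from the paper.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


documents = [
    "The context window limits how many tokens a model can read",
    "Paris is the capital of France",
    "Retrieval selects documents relevant to the query",
]
top = retrieve("which documents are relevant to my query", documents, k=1)
```

A real system would replace `embed` with a learned embedding model, which matches on meaning and not just on shared words, but the ranking logic stays the same.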

The ChatGPT Impact

ChatGPT's November 2022 launch revealed the potential of LLMs for query answering, but also highlighted limitations:

- Limited knowledge: LLMs lack access to information beyond their training data.

- Hallucinations: LLMs may fabricate information rather than admitting uncertainty.

LLMs rely solely on training data and prompt input. Queries outside this scope often lead to fabricated responses.

The Rise and Refinement of RAG

While RAG predates ChatGPT, it only saw widespread adoption in 2023. The core concept is simple: instead of querying the LLM directly, provide relevant context within the prompt and instruct the LLM to answer based solely on that context.

The prompt serves as the LLM's starting point for answer generation.

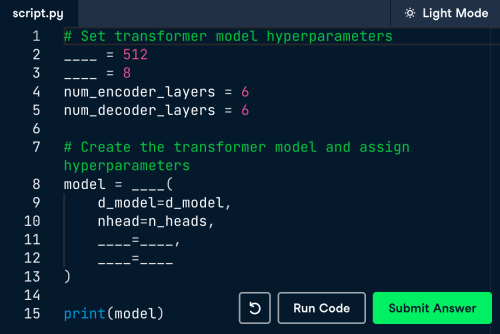

<code>Use the following context to answer the user's question. If you don't know the answer, say "I don't know," and do not fabricate information.

----------------

{context}</code>
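As a rough sketch, filling the `{context}` placeholder might look like this. The system/user message layout is an assumption modeled on common chat-style APIs, and `build_messages` is a hypothetical helper, not part of any library.

```python
SYSTEM_TEMPLATE = (
    "Use the following context to answer the user's question. "
    "If you don't know the answer, say \"I don't know,\" "
    "and do not fabricate information.\n"
    "----------------\n"
    "{context}"
)


def build_messages(question: str, chunks: list[str]) -> list[dict]:
    """Join the retrieved chunks and place them in the system prompt."""
    context = "\n\n".join(chunks)
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(context=context)},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "What is RAG?",
    ["RAG adds retrieved text to the prompt."],
)
```

The resulting list would then be passed to whichever chat model is in use. The retrieved chunks are the only part that changes from one question to the next.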

This approach significantly reduced hallucinations, enabled access to up-to-date data, and facilitated the use of business-specific data.

RAG's Early Limitations

Initial challenges centered on the limited context window size. The GPT-3.5 model behind the original ChatGPT had a 4k token limit (roughly 3000 English words), and that limit covered the prompt and the answer together. The context therefore had to be long enough to include the crucial information, yet short enough to leave room for the answer.

The context window acts like a limited blackboard; more space for instructions leaves less for the answer.
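To make the trade-off concrete, here is a minimal sketch of packing retrieved chunks into a fixed budget. The 0.75 words-per-token ratio comes from the rough "4k tokens is about 3000 words" figure above. The reserved sizes and the names `estimate_tokens` and `pack_context` are illustrative assumptions, and a real system would count tokens with the model's own tokenizer.

```python
import math

WORDS_PER_TOKEN = 0.75  # rough heuristic: 4k tokens ≈ 3000 English words


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def pack_context(chunks, window=4096, reserved_for_answer=1000, overhead=200):
    """Add chunks (assumed sorted most relevant first) until the budget is used.

    `overhead` is an assumed allowance for the instructions and the question.
    """
    budget = window - reserved_for_answer - overhead
    selected, used = [], 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break  # stop at the first chunk that no longer fits
        selected.append(chunk)
        used += cost
    return selected, used


chunks = ["word " * 1500, "word " * 1500, "word " * 300]
selected, used = pack_context(chunks)
```

With these assumed numbers the budget is 2896 tokens, so only the first 1500-word chunk (about 2000 tokens) fits. Reserving more space for the answer shrinks the room for context, and vice versa.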

The Current Landscape

Significant changes have occurred since then, primarily concerning context window size. Models like GPT-4o (released May 2024) boast a 128k token context window, while Google's Gemini 1.5 (available since February 2024) offers a massive 1 million token window.

The Shifting Role of RAG

This increase in context window size has sparked debate. Some argue that with the capacity to include entire books within the prompt, the need for carefully selected context is diminished. One study (July 2024) even suggested that long-context prompts might outperform RAG in certain scenarios.

Retrieval Augmented Generation or Long-Context LLMs? A Comprehensive Study and Hybrid Approach

However, a more recent study (September 2024) countered this, emphasizing the importance of RAG and suggesting that previous limitations stemmed from the order of context elements within the prompt.

In Defense of RAG in the Era of Long-Context Language Models
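One way to read the ordering point is that the most relevant chunks should be placed in the prompt in the order they appear in the source document, not ranked by similarity score. The sketch below illustrates that idea under this assumption. The function name and scores are hypothetical, and it is not presented as the study's exact procedure.

```python
def select_order_preserving(scores: list[float], k: int) -> list[int]:
    """Pick the k highest-scoring chunks, then restore document order.

    scores[i] is the relevance score of the i-th chunk of the document.
    Returns chunk indices sorted by position, not by score.
    """
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)


scores = [0.1, 0.9, 0.3, 0.8, 0.2]  # hypothetical relevance scores
kept = select_order_preserving(scores, k=3)
```

Here chunks 1, 3, and 2 score highest, and they enter the prompt as 1, 2, 3, so the text reads in its original sequence.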

Another relevant study (July 2023) highlighted the positional impact of information within long prompts.

Lost in the Middle: How Language Models Use Long Contexts

Information at the beginning or end of the prompt is more readily utilized by the LLM than information in the middle.

The Future of RAG

Despite advancements in context window size, RAG remains crucial, primarily due to cost considerations. Longer prompts demand more processing power. RAG, by limiting prompt size to essential information, reduces computational costs significantly. The future of RAG may involve filtering irrelevant information from large datasets to optimize cost and answer quality. The use of smaller, specialized models tailored to specific tasks will also likely play a significant role.
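The cost argument is simple arithmetic when input is billed per token. The sketch below compares a long-context prompt with a RAG-sized one. The price and both token counts are hypothetical placeholders, not quotes from any provider.

```python
def prompt_cost(prompt_tokens: int, price_per_million: float) -> float:
    """Input cost of one request, assuming a flat per-token price."""
    return prompt_tokens / 1_000_000 * price_per_million


PRICE_PER_MILLION = 2.50      # hypothetical USD per million input tokens
LONG_CONTEXT_TOKENS = 100_000  # hypothetical: whole document in the prompt
RAG_TOKENS = 4_000             # hypothetical: a few retrieved chunks only

long_cost = prompt_cost(LONG_CONTEXT_TOKENS, PRICE_PER_MILLION)
rag_cost = prompt_cost(RAG_TOKENS, PRICE_PER_MILLION)
ratio = LONG_CONTEXT_TOKENS / RAG_TOKENS

print(f"long context: ${long_cost:.4f} per request")
print(f"RAG:          ${rag_cost:.4f} per request")
print(f"ratio:        {ratio:.0f}x")
```

Under a flat per-token price the ratio depends only on the two prompt sizes, so the saving applies to every request and grows with query volume.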

The above is the detailed content of Why Retrieval-Augmented Generation Is Still Relevant in the Era of Long-Context Language Models. For more information, please follow other related articles on the PHP Chinese website!

What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PM

What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PMThe Model Context Protocol (MCP): A Universal Connector for AI and Data We're all familiar with AI's role in daily coding. Replit, GitHub Copilot, Black Box AI, and Cursor IDE are just a few examples of how AI streamlines our workflows. But imagine

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PM

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PMMicrosoft's OmniParser V2 and OmniTool: Revolutionizing GUI Automation with AI Imagine AI that not only understands but also interacts with your Windows 11 interface like a seasoned professional. Microsoft's OmniParser V2 and OmniTool make this a re

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AM

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AMRevolutionizing App Development: A Deep Dive into Replit Agent Tired of wrestling with complex development environments and obscure configuration files? Replit Agent aims to simplify the process of transforming ideas into functional apps. This AI-p

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AM

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AMThis blog post shares my experience testing Runway ML's new Act-One animation tool, covering both its web interface and Python API. While promising, my results were less impressive than expected. Want to explore Generative AI? Learn to use LLMs in P

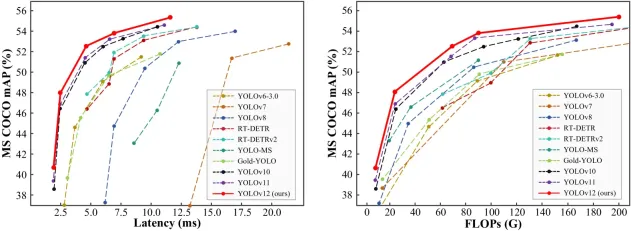

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor

WebStorm Mac version

Useful JavaScript development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

A Brief History of RAG

A Brief History of RAG