Fine-tuning DeepSeek-class models locally runs into two obstacles: insufficient computing resources and insufficient expertise. The following strategies can help. Model quantization: convert model parameters to low-precision integers to reduce the memory footprint. Smaller models: choose a pretrained model with fewer parameters, which is easier to fine-tune locally. Data selection and preprocessing: pick high-quality data and preprocess it properly so that poor data does not degrade the model. Batch training: for large datasets, load the data in batches to avoid running out of memory. GPU acceleration: use a discrete graphics card to speed up training.

Fine-Tuning DeepSeek Locally: Challenges and Strategies

Fine-tuning DeepSeek locally is not easy. It requires substantial computing resources and solid expertise. Put simply, fine-tuning a large language model directly on your own computer is like trying to roast a whole cow in a home oven: feasible in theory, but very hard in practice.

Why is it so difficult? Models like DeepSeek have a huge number of parameters, often billions or even tens of billions. This translates directly into very high demands on system memory (RAM) and video memory (VRAM). Even on a well-equipped machine, you may run out of one or the other. I once tried to fine-tune a relatively small model on a fairly powerful desktop; the job stalled for a long time and eventually failed. Waiting longer does not fix this kind of problem, because the limit is capacity, not time.
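To make the memory pressure concrete, here is a rough back-of-the-envelope sketch. It only counts the bytes needed to hold the weights themselves; the model sizes in the loop are hypothetical examples, not figures from any specific DeepSeek release. Full fine-tuning typically needs several times more than this, since gradients, optimizer state, and activations also have to fit.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Return the size of the weights alone, in GiB, at the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Hypothetical model sizes, in billions of parameters.
for billions in (1.5, 7, 70):
    num_params = int(billions * 1e9)
    row = ", ".join(
        f"{dtype}: {weight_memory_gib(num_params, dtype):.1f} GiB"
        for dtype in BYTES_PER_PARAM
    )
    print(f"{billions}B parameters -> {row}")
```

Comparing the printed numbers with the RAM and VRAM you actually have is a quick way to tell whether a given model is even worth attempting on your machine.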

So, what strategies can be tried?

1. Model quantization: This is a good place to start. Converting model parameters from high-precision floating-point numbers to low-precision integers (such as INT8) can significantly reduce memory usage. Many deep learning frameworks provide quantization tools, but quantization costs some accuracy, so you need to weigh accuracy against efficiency. Think of compressing a high-resolution image to a lower resolution: the file gets smaller, but detail is lost.

2. Use a smaller model: Instead of trying to fine-tune a behemoth, consider a pretrained model with fewer parameters. Such models are less capable than the large ones, but they are easier to fine-tune in a local environment and train faster. It is like driving a nail with a small hammer: each blow does less, but the tool is more flexible and easier to control.

3. Data selection and preprocessing: This is probably one of the most important steps. Select high-quality training data that is relevant to your task and preprocess it sensibly. Feeding the model dirty data is like feeding it poison; it only makes the results worse. Remember to clean the data, handle missing values and outliers, and do any feature engineering the task needs. I once saw a project where the data preprocessing was inadequate, the model performed extremely poorly, and the team finally had to re-collect and re-clean the data.

4. Batch training: If your dataset is large, train in batches and load only part of the data into memory at a time. This is a bit like paying in installments: it takes longer overall, but it keeps your cash flow from breaking (that is, it avoids running out of memory).

5. Use GPU acceleration: If your computer has a discrete graphics card, make full use of the GPU to accelerate training. It is like adding a high-powered burner to your oven, which can greatly reduce cooking time.
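The quantization idea from strategy 1 can be illustrated with a minimal sketch. The code below is plain Python written only to show the principle; it is not the API of any particular framework, and the function names and sample weights are made up for illustration. It assumes the simplest scheme, symmetric per-tensor quantization, where a single scale factor maps every float to an integer in [-127, 127].

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# The gap between original and restored values is the accuracy loss.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("scale:", scale)
print("largest rounding error:", max_error)
```

Each integer takes one byte instead of the four bytes of a 32-bit float, which is where the memory saving comes from. The rounding error printed at the end is the price: small weights such as 0.003 can collapse to zero, which is the "lost detail" in the image-compression analogy.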

Finally, I want to emphasize that local fine-tuning of large models such as DeepSeek often fails, so choose your strategy according to your actual situation and resources. Rather than blindly pursuing local fine-tuning of a large model, evaluate your resources and goals first and pick the more pragmatic approach. Cloud computing may well be the better fit. After all, some things are better left to the professionals.

The above is the detailed content of How to fine-tune deepseek locally. For more information, please follow other related articles on the PHP Chinese website!

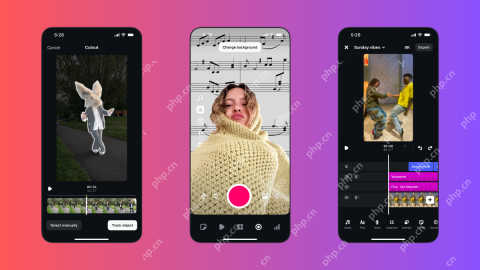

Instagram Just Launched Its Version of CapCutApr 30, 2025 am 10:25 AM

Instagram Just Launched Its Version of CapCutApr 30, 2025 am 10:25 AMInstagram officially launched the Edits video editing app to seize the mobile video editing market. The release has been three months since Instagram first announced the app, and two months after the original release date of Edits in February. Instagram challenges TikTok Instagram’s self-built video editor is of great significance. Instagram is no longer just an app to view photos and videos posted by individuals and companies: Instagram Reels is now its core feature. Short videos are popular all over the world (even LinkedIn has launched short video features), and Instagram is no exception

Chess Lessons Are Coming to DuolingoApr 24, 2025 am 10:41 AM

Chess Lessons Are Coming to DuolingoApr 24, 2025 am 10:41 AMDuolingo, renowned for its language-learning platform, is expanding its offerings! Later this month, iOS users will gain access to new chess lessons integrated seamlessly into the familiar Duolingo interface. The lessons, designed for beginners, wi

Blue Check Verification Is Coming to BlueskyApr 24, 2025 am 10:17 AM

Blue Check Verification Is Coming to BlueskyApr 24, 2025 am 10:17 AMBluesky Echoes Twitter's Past: Introducing Official Verification Bluesky, the decentralized social media platform, is mirroring Twitter's past by introducing an official verification process. This will supplement the existing self-verification optio

Google Photos Now Lets You Convert Standard Photos to Ultra HDRApr 24, 2025 am 10:15 AM

Google Photos Now Lets You Convert Standard Photos to Ultra HDRApr 24, 2025 am 10:15 AMUltra HDR: Google Photos' New Image Enhancement Ultra HDR is a cutting-edge image format offering superior visual quality. Like standard HDR, it packs more data, resulting in brighter highlights, deeper shadows, and richer colors. The key differenc

You Should Try Instagram's New 'Blend' Feature for a Custom Reels FeedApr 23, 2025 am 11:35 AM

You Should Try Instagram's New 'Blend' Feature for a Custom Reels FeedApr 23, 2025 am 11:35 AMInstagram and Spotify now offer personalized "Blend" features to enhance social sharing. Instagram's Blend, accessible only through the mobile app, creates custom daily Reels feeds for individual or group chats. Spotify's Blend mirrors th

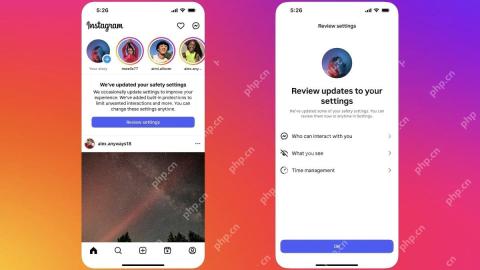

Instagram Is Using AI to Automatically Enroll Minors Into 'Teen Accounts'Apr 23, 2025 am 10:00 AM

Instagram Is Using AI to Automatically Enroll Minors Into 'Teen Accounts'Apr 23, 2025 am 10:00 AMMeta is cracking down on underage Instagram users. Following the introduction of "Teen Accounts" last year, featuring restrictions for users under 18, Meta has expanded these restrictions to Facebook and Messenger, and is now enhancing its

Should I Use an Agent for Taobao?Apr 22, 2025 pm 12:04 PM

Should I Use an Agent for Taobao?Apr 22, 2025 pm 12:04 PMNavigating Taobao: Why a Taobao Agent Like BuckyDrop Is Essential for Global Shoppers The popularity of Taobao, a massive Chinese e-commerce platform, presents a challenge for non-Chinese speakers or those outside China. Language barriers, payment c

How Can I Avoid Buying Fake Products On Taobao?Apr 22, 2025 pm 12:03 PM

How Can I Avoid Buying Fake Products On Taobao?Apr 22, 2025 pm 12:03 PMNavigating the vast marketplace of Taobao requires vigilance against counterfeit goods. This article provides practical tips to help you identify and avoid fake products, ensuring a safe and satisfying shopping experience. Scrutinize Seller Feedbac

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Chinese version

Chinese version, very easy to use

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.