Chainlit: A Scalable Conversational AI Framework

Chainlit is an open-source, asynchronous Python framework designed for building robust and scalable conversational AI applications. It offers a flexible foundation, allowing developers to integrate external APIs, custom logic, and local models seamlessly.

This tutorial demonstrates two Retrieval Augmented Generation (RAG) implementations within Chainlit:

- Leveraging OpenAI Assistants with uploaded documents.

- Utilizing llama_index with a local document folder.

Local Chainlit Setup

Virtual Environment

Create a virtual environment:

mkdir chainlit && cd chainlit
python3 -m venv venv
source venv/bin/activate

Install Dependencies

Install required packages and save dependencies:

pip install chainlit
pip install llama_index  # For implementation #2
pip install openai
pip freeze > requirements.txt

Test Chainlit

Start Chainlit:

chainlit hello

Open the placeholder app in your browser at http://localhost:8000 (Chainlit's default port).

Upsun Deployment

Git Initialization

Initialize a Git repository:

git init .

Create a .gitignore file:

.env
database/**
data/**
storage/**
.chainlit
venv
__pycache__

Upsun Project Creation

Create an Upsun project using the CLI (run upsun project:create and follow the prompts). Upsun will automatically configure the remote repository.

Configuration

Example Upsun configuration for Chainlit:

applications:
  chainlit:
    source:
      root: "/"
    type: "python:3.11"
    mounts:
      "/database":
        source: "storage"
        source_path: "database"
      ".files":
        source: "storage"
        source_path: "files"
      "__pycache__":
        source: "storage"
        source_path: "pycache"
      ".chainlit":
        source: "storage"
        source_path: ".chainlit"
    web:
      commands:
        start: "chainlit run app.py --port $PORT --host 0.0.0.0"
      upstream:
        socket_family: tcp
      locations:
        "/":
          passthru: true
        "/public":
          passthru: true
    build:
      flavor: none
    hooks:
      build: |
        set -eux
        pip install -r requirements.txt
      deploy: |
        set -eux
      # post_deploy: |

routes:
  "https://{default}/":
    type: upstream
    upstream: "chainlit:http"
  "https://www.{default}":
    type: redirect
    to: "https://{default}/"

Set the OPENAI_API_KEY environment variable via Upsun CLI:

upsun variable:create env:OPENAI_API_KEY --value=sk-proj[...]

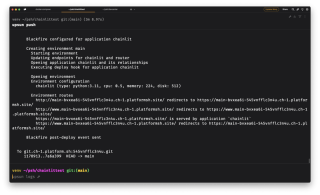

Deployment

Commit and deploy:

git add .
git commit -m "First chainlit example"
upsun push

Review the deployment status. Successful deployment will show Chainlit running on your main environment.

Implementation 1: OpenAI Assistant & Uploaded Files

This implementation uses an OpenAI assistant to process uploaded documents.

Assistant Creation

Create a new OpenAI assistant on the OpenAI Platform. Set system instructions, choose a model (with text response format), and keep the temperature low (e.g., 0.10). Copy the assistant ID (asst_[xxx]) and set it as an environment variable:

upsun variable:create env:OPENAI_ASSISTANT_ID --value=asst_[...]
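Inside the application, both variables can be read from the process environment. The following is a minimal sketch, assuming the two variable names used in this tutorial; the helper name load_settings is hypothetical. It fails early with a readable error when a variable is missing, which is easier to diagnose than a failed API call later.

```python
import os

# Variable names taken from the `upsun variable:create` commands in this tutorial.
REQUIRED_VARS = ("OPENAI_API_KEY", "OPENAI_ASSISTANT_ID")


def load_settings(environ=os.environ):
    """Return the required settings as a dict, or raise if any is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in REQUIRED_VARS}
```

Calling load_settings() once at startup in app.py keeps the configuration check in one place.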

Content Upload

Upload your documents (Markdown preferred) to the assistant. OpenAI will create a vector store.

Assistant Logic (app.py)

Replace the contents of app.py with the assistant logic, which is built around two handlers: @cl.on_chat_start creates a new OpenAI thread, and @cl.on_message sends each user message to that thread and streams the response back.
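The thread-and-message flow can be sketched as two small coroutines. This is not the tutorial's original app.py: it assumes the client is an openai.AsyncOpenAI instance, it waits for the run to finish instead of streaming, and the Chainlit wiring is only described in comments. The names start_thread, answer, and make_stub_client are hypothetical; the stub exists only so the flow can be exercised without an API key.

```python
import asyncio
from types import SimpleNamespace


async def start_thread(client):
    """Create a new Assistants thread and return its id.

    In app.py this would run inside @cl.on_chat_start, storing the id with
    cl.user_session.set("thread_id", thread_id).
    """
    thread = await client.beta.threads.create()
    return thread.id


async def answer(client, assistant_id, thread_id, user_text):
    """Post the user's message, run the assistant, and return its latest reply.

    In app.py this would run inside @cl.on_message, with the result sent back
    via cl.Message(content=reply).send().
    """
    await client.beta.threads.messages.create(
        thread_id=thread_id, role="user", content=user_text
    )
    run = await client.beta.threads.runs.create_and_poll(
        thread_id=thread_id, assistant_id=assistant_id
    )
    if run.status != "completed":
        return f"Run ended with status: {run.status}"
    page = await client.beta.threads.messages.list(
        thread_id=thread_id, order="desc", limit=1
    )
    return page.data[0].content[0].text.value


def make_stub_client(reply):
    """Hypothetical offline stand-in that mimics only the calls used above."""

    async def create_thread():
        return SimpleNamespace(id="thread_stub")

    async def add_message(**kwargs):
        return None

    async def run_and_poll(**kwargs):
        return SimpleNamespace(status="completed")

    async def list_messages(**kwargs):
        text = SimpleNamespace(value=reply)
        message = SimpleNamespace(content=[SimpleNamespace(text=text)])
        return SimpleNamespace(data=[message])

    threads = SimpleNamespace(
        create=create_thread,
        messages=SimpleNamespace(create=add_message, list=list_messages),
        runs=SimpleNamespace(create_and_poll=run_and_poll),
    )
    return SimpleNamespace(beta=SimpleNamespace(threads=threads))
```

A dry run looks like asyncio.run(answer(make_stub_client("Hello"), "asst_[...]", "thread_stub", "Hi")); swapping the stub for a real AsyncOpenAI client is the only change needed to call the API.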

Commit and deploy the changes. Test the assistant.

Implementation 2: OpenAI + llama_index

This implementation uses llama_index for local knowledge management and OpenAI for response generation.

Branch Creation

Create a new branch (the branch name llama-index is illustrative):

git checkout -b llama-index

Folder Creation and Mounts

Create data and storage folders. Add mounts to the Upsun configuration.

app.py Update

Update app.py to use llama_index: the code loads the documents from the data folder, builds a VectorStoreIndex from them, and uses that index to answer queries via OpenAI.
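To make the retrieval step concrete, here is a toy stand-in, not llama_index itself. It ranks documents against a query with bag-of-words cosine similarity and builds the prompt that would then be sent to OpenAI. ToyIndex, build_prompt, and the scoring scheme are assumptions for illustration; a real VectorStoreIndex relies on learned embeddings rather than word counts.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Turn text into a bag-of-words vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Hypothetical in-memory index: stores each document with its vector."""

    def __init__(self, documents):
        self.entries = [(doc, vectorize(doc)) for doc in documents]

    def retrieve(self, query, top_k=1):
        """Return the top_k documents most similar to the query."""
        query_vec = vectorize(query)
        ranked = sorted(
            self.entries, key=lambda entry: cosine(query_vec, entry[1]), reverse=True
        )
        return [doc for doc, _ in ranked[:top_k]]


def build_prompt(query, context_docs):
    """Assemble the augmented prompt that a language model would receive."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The design point carries over to the real library: only the retrieved passages, not the whole data folder, are placed in the prompt for each question.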

Deploy the new environment and upload the data folder. Test the application.

Bonus: Authentication

Add authentication using a SQLite database.

Database Setup

Create a database folder and add a mount to the Upsun configuration. Create an environment variable for the database path (the variable name DB_PATH is illustrative):

upsun variable:create env:DB_PATH --value=[...]
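On the application side, the database file can be created on first use. This is a minimal sketch, assuming the path is exposed in a variable named DB_PATH; that name, the fallback path, and the users table schema are all hypothetical.

```python
import os
import sqlite3

# Hypothetical variable name and fallback path; adjust both to your project.
DB_PATH = os.environ.get("DB_PATH", "database/users.sqlite")

# Hypothetical schema: one row per user, storing only a password hash.
SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    username TEXT NOT NULL UNIQUE,
    password_hash TEXT NOT NULL
)
"""


def init_db(path=DB_PATH):
    """Open (or create) the SQLite file and make sure the users table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    conn.commit()
    return conn
```

Because the file lives under the mounted database folder, it survives redeployments, whereas the rest of the application filesystem is rebuilt on each push.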

Authentication Logic (app.py)

Add authentication logic to app.py using @cl.password_auth_callback. This adds a login form.

Create a script to generate hashed passwords. Add users to the database (using hashed passwords). Deploy the authentication and test login.
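The hashing script and the login check can be sketched with the standard library alone. This assumes a users table with username and password_hash columns (a hypothetical schema) and uses PBKDF2-SHA256, which is one reasonable choice rather than the scheme the original code necessarily used. The function names are hypothetical; in app.py, authenticate would be called from the @cl.password_auth_callback handler, which returns a cl.User on success and None on failure.

```python
import hashlib
import hmac
import secrets
import sqlite3

ITERATIONS = 200_000  # PBKDF2 work factor; an assumed value, tune as needed


def hash_password(password, salt=None):
    """Return 'salt_hex$digest_hex' for storage in the database."""
    salt = salt or secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password, stored):
    """Recompute the digest with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), ITERATIONS
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))


def add_user(conn, username, password):
    """Insert a user, storing only the salted hash and never the plain password."""
    conn.execute(
        "INSERT INTO users (username, password_hash) VALUES (?, ?)",
        (username, hash_password(password)),
    )
    conn.commit()


def authenticate(conn, username, password):
    """Return the username when the credentials match, otherwise None."""
    row = conn.execute(
        "SELECT password_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return None
    return username if verify_password(password, row[0]) else None
```

Storing the salt next to the digest keeps each record self-describing, so verification needs nothing beyond the row itself.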

Conclusion

This tutorial demonstrated deploying a Chainlit application on Upsun with two RAG implementations and authentication. The flexible architecture allows for various adaptations and integrations.
