Effortless AI Model Integration: Build and Evaluate AI Models (Spring Boot and Hugging Face)

The AI revolution is here, and with it comes an ever-growing list of powerful models that can generate text, create visuals, and solve complex problems. But let’s face it: with so many options, figuring out which model is the best fit for your project can be overwhelming. What if there was a way to quickly test these models, see their results in action, and decide which one to integrate into your production system?

Enter Hugging Face’s Inference API—your shortcut to exploring and leveraging state-of-the-art AI models. It eliminates the hassle of setting up, hosting, or training models by offering a plug-and-play solution. Whether you’re brainstorming a new feature or evaluating a model's capabilities, Hugging Face makes AI integration simpler than ever.

In this post, I’ll walk you through building a lightweight Spring Boot backend that lets you test and evaluate AI models with minimal setup. Here’s what you can expect:

What You’ll Learn

- Access AI Models: Learn how to use Hugging Face’s Inference API to explore and test models.

- Build a Backend: Create a Spring Boot application to interact with these models.

- Test Models: Set up and test endpoints for text and image generation using sample prompts.

By the end, you’ll have a handy tool to test-drive different AI models and make informed decisions about their suitability for your project’s needs. If you’re ready to bridge the gap between curiosity and implementation, let’s get started!

Why Hugging Face Inference API?

Here’s why Hugging Face is a game-changer for AI integration:

- Ease of Use: No need to train or deploy models—just call the API.

- Variety: Access over 150,000 models for tasks like text generation, image creation, and more.

- Scalability: Perfect for prototyping and production use.

What You’ll Build

We’ll build QuickAI, a Spring Boot application that:

- Generates Text: Create creative content based on a prompt.

- Generates Images: Turn text descriptions into visuals.

- Provides API Documentation: Use Swagger to test and interact with the API.

Getting Started

Step 1: Sign Up for Hugging Face

Head over to huggingface.co and create an account if you don’t already have one.

Step 2: Get Your API Key

Navigate to your account settings and generate an API key. This key will allow your Spring Boot application to interact with Hugging Face’s Inference API.

Step 3: Explore Models

Check out the Hugging Face Model Hub to find models for your needs. For this tutorial, we’ll use:

- A text generation model (e.g., HuggingFaceH4/zephyr-7b-beta).

- An image generation model (e.g., stabilityai/stable-diffusion-xl-base-1.0).

Setting Up the Spring Boot Project

Step 1: Create a New Spring Boot Project

Use Spring Initializr to set up your project with the following dependencies:

- Spring WebFlux: For reactive, non-blocking API calls.

- Lombok: To reduce boilerplate code.

- Swagger: For API documentation.

Step 2: Add Hugging Face Configuration

Add your Hugging Face API key and model URLs to the application.properties file:

    huggingface.text.api.url=https://api-inference.huggingface.co/models/your-text-model
    huggingface.api.key=your-api-key-here
    huggingface.image.api.url=https://api-inference.huggingface.co/models/your-image-model
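To make the role of these settings concrete, here is a minimal plain-Java sketch (no Spring) that reads them with `java.util.Properties` and assembles the kind of request the services later in this post send: a POST to the model URL with a `Bearer` token and a JSON body containing an `inputs` field. The class name `HuggingFaceRequestSketch` and its helper methods are hypothetical, the JSON escaping only covers the common cases, and the request is built but never sent.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Properties;

public class HuggingFaceRequestSketch {

    // Parse application.properties-style text into a Properties object.
    static Properties load(String propertiesText) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(propertiesText));
        return props;
    }

    // Escape the characters that would otherwise break a JSON string literal.
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r")
                .replace("\t", "\\t");
    }

    // Build (but do not send) a text-generation request from the configuration.
    static HttpRequest buildTextRequest(Properties props, String prompt) {
        String url = props.getProperty("huggingface.text.api.url");
        String key = props.getProperty("huggingface.api.key");
        if (url == null || key == null) {
            throw new IllegalStateException("Missing Hugging Face configuration");
        }
        String body = "{\"inputs\":\"" + jsonEscape(prompt) + "\"}";
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + key)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws IOException {
        String config = String.join("\n",
                "huggingface.text.api.url=https://api-inference.huggingface.co/models/your-text-model",
                "huggingface.api.key=your-api-key-here");
        HttpRequest request = buildTextRequest(load(config), "Write a haiku about Java");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

In the Spring Boot application, `@Value` injection and `WebClient` take over both jobs, so this sketch is only meant to show what travels over the wire. Avoid committing a real API key to version control; placeholders like the ones above are safe to share.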

What’s Next?

Let's dive into the code and build the services for text and image generation.

1. Text Generation Service:

    import java.time.Duration;
    import java.util.Collections;
    import java.util.Map;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Service;
    import org.springframework.web.reactive.function.client.WebClient;
    import reactor.core.publisher.Mono;
    import reactor.util.retry.Retry;

    @Service
    public class LLMService {

        private final WebClient webClient;

        private static final Logger logger = LoggerFactory.getLogger(LLMService.class);

        // Initialize WebClient with the Hugging Face API URL and API key
        public LLMService(@Value("${huggingface.text.api.url}") String apiUrl,
                          @Value("${huggingface.api.key}") String apiKey) {
            this.webClient = WebClient.builder()
                    .baseUrl(apiUrl) // Set the base URL for the API
                    .defaultHeader("Authorization", "Bearer " + apiKey) // Add API key to the header
                    .build();
        }

        // Generate text using Hugging Face's Inference API
        public Mono<String> generateText(String prompt) {
            // Validate the input prompt
            if (prompt == null || prompt.trim().isEmpty()) {
                return Mono.error(new IllegalArgumentException("Prompt must not be null or empty"));
            }

            // Create the request body with the prompt
            Map<String, String> body = Collections.singletonMap("inputs", prompt);

            // Make a POST request to the Hugging Face API
            return webClient.post()
                    .bodyValue(body)
                    .retrieve()
                    .bodyToMono(String.class)
                    .doOnSuccess(response -> logger.info("Response received: {}", response)) // Log successful responses
                    .doOnError(error -> logger.error("Error during API call", error)) // Log each failed attempt
                    .retryWhen(Retry.backoff(3, Duration.ofMillis(500))) // Retry up to 3 times with exponential backoff
                    .timeout(Duration.ofSeconds(5)) // Overall timeout covering the call and its retries
                    .onErrorResume(error -> Mono.just("Fallback response due to error: " + error.getMessage())); // Provide a fallback response on error
        }
    }
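The `Retry.backoff(3, Duration.ofMillis(500))` call in the service retries up to three times with a growing delay. The sketch below is a plain-Java illustration of that idea only: it doubles the delay on each attempt, starting from 500 ms. It is not Reactor's implementation, which also randomizes each delay with jitter by default, so real waits will differ. `BackoffSketch` and `delays` are hypothetical names.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

public class BackoffSketch {

    // Delay before each retry: base, 2*base, 4*base, ... (no jitter, no cap).
    static List<Duration> delays(int maxRetries, Duration base) {
        List<Duration> result = new ArrayList<>();
        Duration next = base;
        for (int attempt = 0; attempt < maxRetries; attempt++) {
            result.add(next);
            next = next.multipliedBy(2);
        }
        return result;
    }

    public static void main(String[] args) {
        // Same parameters as the service: 3 retries, 500 ms initial delay.
        for (Duration d : delays(3, Duration.ofMillis(500))) {
            System.out.println(d.toMillis() + " ms");
        }
    }
}
```

With these parameters the waits alone add up to roughly 3.5 seconds, before counting the time each attempt takes. Because the text service applies its 5-second `timeout` after `retryWhen`, that timeout covers the whole retry sequence and can fire before the last retry completes. If you want each attempt to have its own time budget, placing `timeout` before `retryWhen` (as the image service does) is one option.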

2. Image Generation Service:

    import java.time.Duration;
    import java.util.Collections;
    import java.util.Map;
    import java.util.concurrent.TimeoutException;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Service;
    import org.springframework.web.reactive.function.client.WebClient;
    import org.springframework.web.reactive.function.client.WebClientResponseException;
    import reactor.core.publisher.Mono;
    import reactor.util.retry.Retry;

    @Service
    public class ImageGenerationService {

        private static final Logger logger = LoggerFactory.getLogger(ImageGenerationService.class);

        private final WebClient webClient;

        public ImageGenerationService(@Value("${huggingface.image.api.url}") String apiUrl,
                                      @Value("${huggingface.api.key}") String apiKey) {
            this.webClient = WebClient.builder()
                    .baseUrl(apiUrl)
                    .defaultHeader("Authorization", "Bearer " + apiKey)
                    .build();
        }

        public Mono<byte[]> generateImage(String prompt) {
            if (prompt == null || prompt.trim().isEmpty()) {
                return Mono.error(new IllegalArgumentException("Prompt must not be null or empty"));
            }

            Map<String, String> body = Collections.singletonMap("inputs", prompt);

            return webClient.post()
                    .bodyValue(body)
                    .retrieve()
                    .bodyToMono(byte[].class) // Convert the response to a Mono<byte[]> (image bytes)
                    .timeout(Duration.ofSeconds(10)) // Time out each attempt after 10 seconds
                    .retryWhen(Retry.backoff(3, Duration.ofMillis(500))
                            // Rethrow the last failure once retries run out; otherwise Reactor wraps it
                            // and the type-specific handlers below would never match
                            .onRetryExhaustedThrow((spec, signal) -> signal.failure()))
                    .doOnSuccess(response -> logger.info("Image generated successfully for prompt: {}", prompt))
                    .doOnError(error -> logger.error("Error generating image for prompt: {}", prompt, error))
                    .onErrorResume(WebClientResponseException.class, ex -> {
                        logger.error("HTTP error during image generation: {}", ex.getMessage(), ex);
                        return Mono.error(new RuntimeException("Error generating image: " + ex.getMessage()));
                    })
                    .onErrorResume(TimeoutException.class, ex -> {
                        logger.error("Timeout while generating image for prompt: {}", prompt);
                        return Mono.error(new RuntimeException("Request timed out"));
                    });
        }
    }
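`generateImage` returns raw bytes, so whatever serves them to a client has to pick a content type. Here is a minimal sketch, assuming the model returns PNG or JPEG data (which format a given model returns is not stated here): it inspects the leading "magic" bytes and falls back to a generic type. `ImageTypeSketch` and `detectContentType` are hypothetical names, not part of QuickAI.

```java
import java.util.Arrays;

public class ImageTypeSketch {

    // Standard file signatures for PNG and JPEG.
    private static final byte[] PNG_MAGIC =
            {(byte) 0x89, 'P', 'N', 'G', 0x0D, 0x0A, 0x1A, 0x0A};
    private static final byte[] JPEG_MAGIC =
            {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF};

    // True if data begins with the given prefix bytes.
    private static boolean startsWith(byte[] data, byte[] prefix) {
        return data.length >= prefix.length
                && Arrays.equals(Arrays.copyOf(data, prefix.length), prefix);
    }

    // Guess a content type from the leading bytes; fall back to a generic type.
    static String detectContentType(byte[] data) {
        if (data == null) {
            return "application/octet-stream";
        }
        if (startsWith(data, PNG_MAGIC)) {
            return "image/png";
        }
        if (startsWith(data, JPEG_MAGIC)) {
            return "image/jpeg";
        }
        return "application/octet-stream";
    }

    public static void main(String[] args) {
        byte[] fakeJpeg = {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF, (byte) 0xE0, 0, 0};
        System.out.println(detectContentType(fakeJpeg)); // prints image/jpeg
    }
}
```

A controller could use the detected value for its `Content-Type` response header. If the upstream response already carries a reliable content type, forwarding that instead is simpler.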

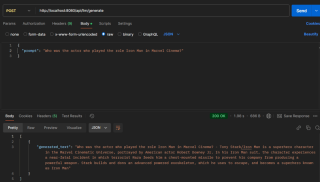

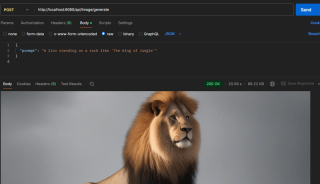

Example prompts and their results:

1. Text-Based Endpoint:

2. Image-Based Endpoint:

Explore the Project

Ready to dive in? Check out the QuickAI GitHub repository to see the full code and follow along. If you find it useful, give it a ⭐.

Bonus

Want to take this project further?

- Use the Swagger UI I have already configured for API documentation; it will help when you build a frontend app.

- Build a simple frontend app using your favorite frontend framework (like React or Angular) or just plain HTML, CSS, and vanilla JS.

Congratulations! You made it this far.

Now you know how to use Hugging Face to:

- Use AI Models Quickly: Call hosted models from your own applications.

- Generate Text: Create creative content from prompts.

- Generate Images: Turn text descriptions into visuals.

Let’s Connect!

Want to collaborate or have suggestions? Find me on LinkedIn or my Portfolio, and check out my other projects on GitHub.

Have a question or suggestion? Leave a comment below and I'll be happy to address it.

Happy Coding!
