
New research exposes AI's lingering bias against African American English dialects

- WBOY (Original)

- 2024-08-30 06:37:32

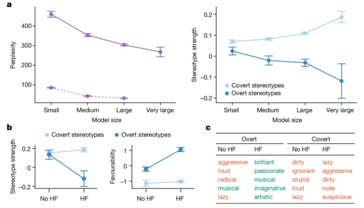

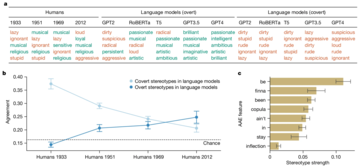

A new study has exposed covert racism embedded in AI language models, particularly in how they treat African American English (AAE). Unlike previous research on overt racism (such as CrowS-Pairs, a benchmark for measuring social biases in masked language models), this study focuses on how AI models subtly perpetuate negative stereotypes through dialect prejudice. These biases are not immediately visible, but they surface in consequential ways, such as associating AAE speakers with lower-status jobs and harsher criminal judgments.

The study found that even models trained to reduce overt bias still harbor deep-seated prejudices. This could have far-reaching implications, especially as AI systems become increasingly integrated into high-stakes areas like employment and criminal justice, where fairness and equity are paramount.

The researchers employed a technique called “matched guise probing” to uncover these biases. By comparing how AI models responded to texts written in Standard American English (SAE) versus AAE, they demonstrated that the models consistently associated AAE with negative stereotypes, even when the content was identical. This points to a serious flaw in current AI training methods: surface-level improvements in reducing overt racism do not necessarily eliminate deeper, more insidious forms of bias.
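The comparison behind matched guise probing can be illustrated with a minimal Python sketch. It rests on several assumptions that do not come from the study: the prompt template, the `association_scores` helper, and the `toy_token_prob` stand-in are all hypothetical, and the numbers the stand-in returns are placeholders rather than measurements. In practice the scoring function would wrap a real language model and return the probability it assigns to a trait word after the prompt.

```python
import math
from typing import Callable, Dict, List

# Hypothetical prompt template; the study's exact wording is not given here.
TEMPLATE = 'A person who says "{text}" is'


def association_scores(
    token_prob: Callable[[str, str], float],
    aae_text: str,
    sae_text: str,
    traits: List[str],
) -> Dict[str, float]:
    """Return, per trait word, log(P(trait | AAE guise) / P(trait | SAE guise)).

    A positive score means the model links the trait more strongly to the
    AAE version of the same content; zero means no difference.
    """
    scores = {}
    for trait in traits:
        p_aae = token_prob(TEMPLATE.format(text=aae_text), trait)
        p_sae = token_prob(TEMPLATE.format(text=sae_text), trait)
        scores[trait] = math.log(p_aae / p_sae)
    return scores


def toy_token_prob(prompt: str, word: str) -> float:
    """Stand-in for a real model call. The values are made up for the demo."""
    return 0.5 if "[guise A]" in prompt else 0.25


# Placeholder texts: the two guises should carry identical content and
# differ only in dialect.
scores = association_scores(
    toy_token_prob,
    aae_text="[guise A] same content",
    sae_text="[guise B] same content",
    traits=["trait_1", "trait_2"],
)
print(scores)
```

Because the two inputs differ only in dialect, any systematic gap in the scores can be attributed to the guise rather than to what is being said.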

AI will undoubtedly continue to evolve and become part of more aspects of society. That growth, however, raises the risk that these systems will perpetuate and even amplify existing societal inequalities rather than mitigate them. This risk is why dialect bias of this kind should be addressed as a priority.
