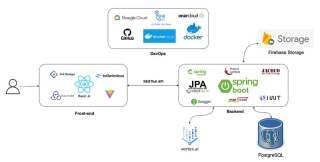

Image-Based Product Search Using Spring Boot, Google Cloud Vertex AI, and Gemini Model

Introduction

Imagine you’re shopping online and come across a product you love but don’t know its name. Wouldn’t it be amazing to upload a picture and have the app find it for you?

In this article, we’ll show you how to build exactly that: an image-based product search feature using Spring Boot and Google Cloud Vertex AI.

Overview of the Feature

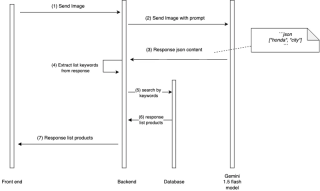

This feature allows users to upload an image and receive a list of products that match it, making the search experience more intuitive and visually driven.

The image-based product search feature leverages Google Cloud Vertex AI to process images and extract relevant keywords. These keywords are then used to search for matching products in the database.
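
To make the flow concrete, here is a minimal, dependency-free sketch of the last step: taking the keywords returned by the model and matching them against product names. The class name KeywordSearchSketch and the in-memory list of names are assumptions for illustration only; the real application queries PostgreSQL through a repository instead.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical in-memory stand-in for the database keyword search. */
final class KeywordSearchSketch {

    private KeywordSearchSketch() {
    }

    /**
     * Returns every product name that contains at least one keyword
     * (case-insensitive), without duplicates, in order of first match.
     */
    static List<String> search(List<String> productNames, List<String> keywords) {
        Set<String> matches = new LinkedHashSet<>();
        for (String keyword : keywords) {
            String needle = keyword.toLowerCase(Locale.ROOT);
            for (String name : productNames) {
                if (name.toLowerCase(Locale.ROOT).contains(needle)) {
                    matches.add(name); // the set ignores names already matched
                }
            }
        }
        return List.copyOf(matches);
    }
}
```

A product that matches several keywords appears only once in the result, which is the same reason the service in this article collects its results in a Set.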

Technology Stack

- Java 21

- Spring Boot 3.2.5

- PostgreSQL

- Vertex AI

- ReactJS

We’ll walk through the process of setting up this functionality step-by-step.

Step-by-Step Implementation

1. Create a new project in the Google Cloud Console

First, we need a Google Cloud project for this feature.

Go to https://console.cloud.google.com and create an account if you don’t already have one. If you have one, sign in.

If you add your billing details (for example, a bank account), Google Cloud will offer you a free trial.

Once you are signed in, you can create a new project.

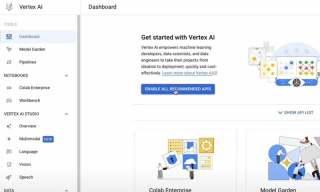

2. Enable Vertex AI Service

In the search bar, look up Vertex AI and enable all recommended APIs.

Vertex AI is Google Cloud’s fully managed machine learning (ML) platform designed to simplify the development, deployment, and management of ML models. It allows you to build, train, and deploy ML models at scale by providing tools and services like AutoML, custom model training, hyperparameter tuning, and model monitoring.

Gemini 1.5 Flash is part of Google’s Gemini family of models and is designed for efficient, high-performance inference. Gemini models are a series of advanced AI models developed by Google, used for natural language processing (NLP), vision tasks, and other AI-powered applications.

Note: For other frameworks, you can use the Gemini API directly at https://aistudio.google.com/app/prompts/new_chat. Use the structured prompt feature: it lets you shape the output to match the input, which gives better results.

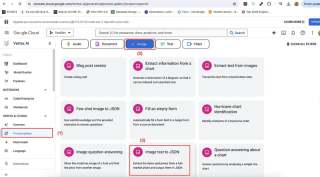

3. Create a new prompt that matches your application

In this step, we write a prompt that matches your application.

Vertex AI Studio provides many sample prompts in its Prompt Gallery. We use the sample “Image text to JSON” to extract keywords related to the product image.

My application is a car shop, so I built the prompt below. I expect the model to respond with a list of keywords relating to the image.

My prompt: Extract the name car to a list keyword and output them in JSON. If you don't find any information about the car, please output the list empty.

Example response: ["rolls", "royce", "wraith"]

Now that we have a prompt that suits the application, let’s see how to integrate it with a Spring Boot application.
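
If your shop sells something other than cars, the same prompt can be parameterized. The sketch below is only an illustration: the class KeywordPromptSketch, its method, and the slightly reworded prompt text are my assumptions, not something Vertex AI provides or requires.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper that builds a keyword-extraction prompt for any product type. */
final class KeywordPromptSketch {

    private KeywordPromptSketch() {
    }

    static String forProduct(String productType, List<String> exampleKeywords) {
        // Render the example keywords as a JSON-style array, e.g. ["rolls", "royce"]
        String example = exampleKeywords.stream()
                .map(k -> "\"" + k + "\"")
                .collect(Collectors.joining(", ", "[", "]"));
        // The trailing backslash joins the first two source lines into one prompt line
        return """
                Extract the name of the %s as a list of keywords and output them in JSON. \
                If you don't find any information about the %s, output an empty list.
                Example response: %s""".formatted(productType, productType, example);
    }
}
```

Calling KeywordPromptSketch.forProduct("car", List.of("rolls", "royce", "wraith")) yields a two-line prompt equivalent in intent to the one above. Whatever wording you choose, test it in Vertex AI Studio before hard-coding it.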

4. Integrate with Spring Boot Application

I have built an e-commerce application for cars, so I want to find cars by image.

First, add the following to your pom.xml file:

    <!-- Versions for the dependencies below -->
    <properties>
        <spring-cloud-gcp.version>5.1.2</spring-cloud-gcp.version>
        <google-cloud-bom.version>26.32.0</google-cloud-bom.version>
    </properties>

    <!-- In your dependencyManagement section, add the two BOM imports below -->
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>com.google.cloud</groupId>
                <artifactId>spring-cloud-gcp-dependencies</artifactId>
                <version>${spring-cloud-gcp.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <dependency>
                <groupId>com.google.cloud</groupId>
                <artifactId>libraries-bom</artifactId>
                <version>${google-cloud-bom.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <!-- In your dependencies section, add the dependency below (its version comes from the BOM) -->
    <dependencies>
        <dependency>
            <groupId>com.google.cloud</groupId>
            <artifactId>google-cloud-vertexai</artifactId>
        </dependency>
    </dependencies>

After configuring pom.xml, create a config class, GeminiConfig.java, with the following constants:

- MODEL_NAME: “gemini-1.5-flash”

- LOCATION: the location you chose when setting up the project

- PROJECT_ID: your project ID

    import com.google.cloud.vertexai.VertexAI;
    import com.google.cloud.vertexai.generativeai.GenerativeModel;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration(proxyBeanMethods = false)
    public class GeminiConfig {

        private static final String MODEL_NAME = "gemini-1.5-flash";
        private static final String LOCATION = "asia-southeast1";
        private static final String PROJECT_ID = "yasmini";

        // Client for the Vertex AI project and region
        @Bean
        public VertexAI vertexAI() {
            return new VertexAI(PROJECT_ID, LOCATION);
        }

        // Gemini model handle that the service layer injects
        @Bean
        public GenerativeModel getModel(VertexAI vertexAI) {
            return new GenerativeModel(MODEL_NAME, vertexAI);
        }
    }
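
The project ID, location, and model name are hard-coded in my config class. If you prefer to keep them out of the source, one option is to read them from the environment. The sketch below assumes a hypothetical record GeminiSettings and hypothetical variable names (GCP_PROJECT_ID, GCP_LOCATION, GEMINI_MODEL_NAME); none of these names are defined by Google Cloud or Spring.

```java
import java.util.Map;

/** Hypothetical holder for the three values the config class needs. */
record GeminiSettings(String projectId, String location, String modelName) {

    /**
     * Reads the settings from a map such as System.getenv().
     * The key names are assumptions made for this sketch.
     */
    static GeminiSettings fromEnv(Map<String, String> env) {
        String projectId = env.get("GCP_PROJECT_ID");
        if (projectId == null || projectId.isBlank()) {
            // Fail fast: there is no sensible default for a project ID
            throw new IllegalStateException("GCP_PROJECT_ID is not set");
        }
        return new GeminiSettings(
                projectId,
                env.getOrDefault("GCP_LOCATION", "asia-southeast1"),
                env.getOrDefault("GEMINI_MODEL_NAME", "gemini-1.5-flash"));
    }
}
```

In the config class you could then call GeminiSettings.fromEnv(System.getenv()) once and pass the values to the VertexAI and GenerativeModel constructors. Spring’s own property injection would work just as well; this is only one way to do it.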

Second, create the service and controller layers to implement the find-car function. Start with the service class.

Because the Gemini API may respond in Markdown format, we need a helper that extracts the JSON from the response, and then converts that JSON into a list of strings in Java.

    import com.fasterxml.jackson.core.JsonProcessingException;
    import com.fasterxml.jackson.core.type.TypeReference;
    import com.fasterxml.jackson.databind.ObjectMapper;
    import com.google.cloud.vertexai.api.Content;
    import com.google.cloud.vertexai.api.GenerateContentResponse;
    import com.google.cloud.vertexai.api.Part;
    import com.google.cloud.vertexai.generativeai.*;
    import com.learning.yasminishop.common.entity.Product;
    import com.learning.yasminishop.common.exception.AppException;
    import com.learning.yasminishop.common.exception.ErrorCode;
    import com.learning.yasminishop.product.ProductRepository;
    import com.learning.yasminishop.product.dto.response.ProductResponse;
    import com.learning.yasminishop.product.mapper.ProductMapper;
    import lombok.RequiredArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.stereotype.Service;
    import org.springframework.transaction.annotation.Transactional;
    import org.springframework.web.multipart.MultipartFile;

    import java.util.HashSet;
    import java.util.List;
    import java.util.Objects;
    import java.util.Set;

    @Service
    @RequiredArgsConstructor
    @Slf4j
    @Transactional(readOnly = true)
    public class YasMiniAIService {

        private final GenerativeModel generativeModel;
        private final ProductRepository productRepository;
        private final ProductMapper productMapper;

        public List<ProductResponse> findCarByImage(MultipartFile file) {
            try {
                var prompt = "Extract the name car to a list keyword and output them in JSON. If you don't find any information about the car, please output the list empty.\nExample response: [\"rolls\", \"royce\", \"wraith\"]";

                // Send the image bytes and the prompt to Gemini in one multimodal request
                var content = this.generativeModel.generateContent(
                        ContentMaker.fromMultiModalData(
                                PartMaker.fromMimeTypeAndData(Objects.requireNonNull(file.getContentType()), file.getBytes()),
                                prompt
                        )
                );

                String jsonContent = ResponseHandler.getText(content);
                log.info("Extracted keywords from image: {}", jsonContent);

                List<String> keywords = convertJsonToList(jsonContent).stream()
                        .map(String::toLowerCase)
                        .toList();

                // A Set avoids duplicates when a product matches several keywords
                // (this relies on Product's equals/hashCode)
                Set<Product> results = new HashSet<>();
                for (String keyword : keywords) {
                    List<Product> products = productRepository.searchByKeyword(keyword);
                    results.addAll(products);
                }

                return results.stream()
                        .map(productMapper::toProductResponse)
                        .toList();
            } catch (Exception e) {
                log.error("Error finding car by image", e);
                return List.of();
            }
        }

        // Parses the model reply into a list, unwrapping a Markdown code fence if present
        private List<String> convertJsonToList(String markdown) throws JsonProcessingException {
            ObjectMapper objectMapper = new ObjectMapper();
            String parseJson = markdown;
            if (markdown.contains("```json")) {
                parseJson = extractJsonFromMarkdown(markdown);
            }
            return objectMapper.readValue(parseJson, new TypeReference<List<String>>() {});
        }

        // Removes the opening and closing fence lines around the JSON payload
        private String extractJsonFromMarkdown(String markdown) {
            return markdown.replace("```json\n", "").replace("\n```", "");
        }
    }
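
The fence handling in the service only works when the reply uses exactly the json-tagged fence and newline layout it expects. As a hedged alternative, here is a small dependency-free sketch that tolerates a missing language tag and extra whitespace. The class FencedJsonSketch is hypothetical, and its tiny parser only understands a flat array of plain strings without escape sequences, so in the real service I would still hand the extracted text to Jackson.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: unwraps a fenced reply and reads a flat array of strings. */
final class FencedJsonSketch {

    // Three backticks, built at runtime so this listing contains no literal fence
    private static final String FENCE = "`".repeat(3);

    // Opening fence, optional language tag, captured body, closing fence
    private static final Pattern FENCED = Pattern.compile(
            FENCE + "[a-zA-Z]*\\s*(.*?)\\s*" + FENCE, Pattern.DOTALL);

    // One double-quoted string with no escape sequences inside
    private static final Pattern QUOTED = Pattern.compile("\"([^\"\\\\]*)\"");

    private FencedJsonSketch() {
    }

    /** Returns the text inside the first fence, or the trimmed reply if there is none. */
    static String stripFence(String reply) {
        Matcher m = FENCED.matcher(reply);
        return m.find() ? m.group(1) : reply.trim();
    }

    /** Collects every quoted string found in the (unwrapped) reply. */
    static List<String> toKeywords(String reply) {
        List<String> keywords = new ArrayList<>();
        Matcher m = QUOTED.matcher(stripFence(reply));
        while (m.find()) {
            keywords.add(m.group(1));
        }
        return keywords;
    }
}
```

An empty array in the reply produces an empty list, which matches the prompt’s instruction to return an empty list when no car is recognized.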

Next, create a controller class that exposes an endpoint for the front end:

    import com.learning.yasminishop.product.dto.response.ProductResponse;
    import lombok.RequiredArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.security.access.prepost.PreAuthorize;
    import org.springframework.web.bind.annotation.*;
    import org.springframework.web.multipart.MultipartFile;

    import java.util.List;

    @RestController
    @RequestMapping("/ai")
    @RequiredArgsConstructor
    @Slf4j
    public class YasMiniAIController {

        private final YasMiniAIService yasMiniAIService;

        // POST /ai with a multipart form field named "file" that holds the image
        @PostMapping
        public List<ProductResponse> findCar(@RequestParam("file") MultipartFile file) {
            return yasMiniAIService.findCarByImage(file);
        }
    }

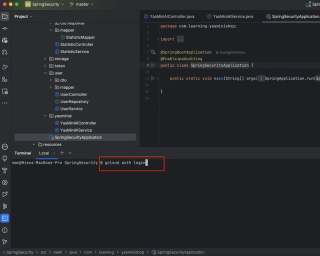
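
The service calls Objects.requireNonNull on the upload’s content type, so a request without one ends up in the catch block and returns an empty list. If you would rather reject such uploads early, a small check like the one below could run in the controller first. The class ImageUploadCheckSketch and its allow-list of MIME types are assumptions for illustration; adjust the list to the formats you actually want to accept.

```java
import java.util.Locale;
import java.util.Set;

/** Hypothetical pre-check for the content type of an uploaded file. */
final class ImageUploadCheckSketch {

    // Assumed allow-list; not taken from the project or from Vertex AI
    private static final Set<String> ALLOWED = Set.of("image/jpeg", "image/png", "image/webp");

    private ImageUploadCheckSketch() {
    }

    static boolean isSupportedImage(String contentType) {
        if (contentType == null) {
            return false;
        }
        // Drop parameters such as "; charset=..." and normalize the case
        String base = contentType.split(";", 2)[0].trim().toLowerCase(Locale.ROOT);
        return ALLOWED.contains(base);
    }
}
```

In the controller, this would be called as ImageUploadCheckSketch.isSupportedImage(file.getContentType()) before passing the file to the service.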

5. IMPORTANT step: Log in to Google Cloud with the Google Cloud CLI

Without credentials, the Spring Boot application cannot prove who you are, so Google Cloud will not let it access your resources.

So we need to log in to Google and grant authorization.

5.1 First, install the gcloud CLI on your machine

Tutorial link: https://cloud.google.com/sdk/docs/install

Follow the link above and install it on your machine.

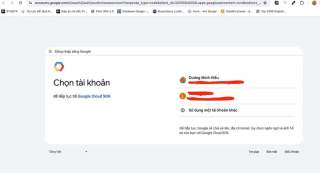

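5.2 Log in

- Open a terminal in the project directory (cd into the project)

- Type the command below and press Enter; a browser window opens where you can log in

gcloud auth login

Note: After you log in, the credentials are stored locally on your machine by the gcloud CLI, so you don’t need to log in again when you restart the Spring Boot application. If the application still cannot find credentials, Google’s documentation describes gcloud auth application-default login as the way to set up Application Default Credentials for client libraries.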
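
When the application fails with a credentials error, it helps to know where the client library looks. To my knowledge, Application Default Credentials are resolved from the GOOGLE_APPLICATION_CREDENTIALS environment variable first, and otherwise from a well-known file that gcloud auth application-default login writes. The sketch below only computes that expected path; the class AdcLocationSketch is hypothetical, it ignores other overrides, and it is not part of any Google library.

```java
import java.nio.file.Path;
import java.util.Map;
import java.util.Optional;

/** Hypothetical diagnostic: where a credentials file is expected to live. */
final class AdcLocationSketch {

    private static final String FILE_NAME = "application_default_credentials.json";

    private AdcLocationSketch() {
    }

    static Optional<Path> credentialsFile(Map<String, String> env, String userHome, boolean windows) {
        // An explicit path in the environment takes precedence
        String explicit = env.get("GOOGLE_APPLICATION_CREDENTIALS");
        if (explicit != null && !explicit.isBlank()) {
            return Optional.of(Path.of(explicit));
        }
        if (windows) {
            // On Windows the gcloud directory sits under %APPDATA%
            String appData = env.get("APPDATA");
            return appData == null
                    ? Optional.empty()
                    : Optional.of(Path.of(appData, "gcloud", FILE_NAME));
        }
        // On Linux and macOS it sits under ~/.config/gcloud
        return Optional.of(Path.of(userHome, ".config", "gcloud", FILE_NAME));
    }
}
```

You could call it with System.getenv() and System.getProperty("user.home"), then check java.nio.file.Files.exists on the result to see whether a credentials file is present before starting the application.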

Conclusion

The implementation above is based on my e-commerce project; you can adapt it to your own project and framework. With frameworks other than Spring Boot (NestJS, ...), you can use https://aistudio.google.com/app/prompts/new_chat and don’t need to create a new Google Cloud account.

You can check the detailed implementation at my repo:

Backend: https://github.com/duongminhhieu/YasMiniShop

Front-end: https://github.com/duongminhhieu/YasMini-Frontend

Happy learning !!!
