How to Evaluate the Output Quality of Large Language Models (LLMs)

Evaluating the output quality of LLMs is crucial to ensure their reliability and effectiveness. Here are some key considerations:

- Accuracy: The output should be factually correct and free from errors or biases.

- Coherence: The output should be logically consistent and easy to understand.

- Fluency: The output should be well-written and grammatically correct.

- Relevance: The output should be relevant to the input prompt and meet the intended purpose.
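One way to make these four criteria concrete is to have raters score each one on a fixed scale and then combine the scores. The Python sketch below assumes a 1 to 5 scale and illustrative weights. The `RubricScore` class, the scale, and the weights are hypothetical choices for illustration, not a standard rubric.

```python
from dataclasses import dataclass

# Hypothetical rubric: the four criteria above, each scored on an assumed 1-5 scale.
CRITERIA = ("accuracy", "coherence", "fluency", "relevance")


@dataclass
class RubricScore:
    accuracy: int
    coherence: int
    fluency: int
    relevance: int

    def __post_init__(self) -> None:
        # Reject any score outside the assumed 1-5 scale.
        for name in CRITERIA:
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def weighted_total(self, weights: dict[str, float]) -> float:
        """Weighted average of the criterion scores (weights need not sum to 1)."""
        total_weight = sum(weights[name] for name in CRITERIA)
        weighted_sum = sum(getattr(self, name) * weights[name] for name in CRITERIA)
        return weighted_sum / total_weight


# Example: weight accuracy twice as heavily as the other criteria.
weights = {"accuracy": 2.0, "coherence": 1.0, "fluency": 1.0, "relevance": 1.0}
score = RubricScore(accuracy=4, coherence=5, fluency=5, relevance=3)
print(round(score.weighted_total(weights), 2))  # prints 4.2
```

The weights encode which criterion matters most for a given application. A factual question-answering system might weight accuracy heavily, while a creative-writing assistant might emphasize fluency and coherence.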

Common Methods for Evaluating LLM Output Quality

Several methods can be used to assess LLM output quality:

- Human Evaluation: Human raters manually evaluate the output based on predefined criteria, providing subjective but often insightful feedback.

- Automatic Evaluation Metrics: Automated tools measure specific aspects of output quality, typically by scoring n-gram overlap with human-written reference texts, such as BLEU (originally designed for machine translation) or ROUGE (for summarization).

- Task-Based Evaluation: Output is evaluated based on its ability to perform a specific task, such as generating code or answering questions.

- Error Analysis: Identifying and analyzing errors in the output helps pinpoint areas for improvement.
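Because human evaluation is subjective, it helps to check how consistently raters apply the criteria. A common check is Cohen's kappa, which measures agreement between two raters beyond what chance alone would produce. The sketch below assumes each rater assigns one categorical label (here, hypothetical "good"/"bad" labels) to the same set of LLM outputs.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, estimated from each rater's own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label for every item; kappa is
        # undefined here, so treat it as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two raters for six model outputs.
rater_a = ["good", "good", "bad", "good", "bad", "good"]
rater_b = ["good", "bad", "bad", "good", "bad", "good"]
print(round(cohens_kappa(rater_a, rater_b), 3))  # prints 0.667
```

Here the raters agree on 5 of 6 items, but half of that agreement would be expected by chance, so kappa is noticeably lower than the raw 83% agreement.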
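BLEU and ROUGE both compare the model's output with a reference text by counting shared n-grams. The following is a simplified, self-contained sketch assuming plain whitespace tokenization and a single reference. Standard BLEU is usually computed at the corpus level with n-grams up to length 4, and production ROUGE implementations add their own tokenization and options, so use an established implementation for reportable numbers.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_f1(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N F1 from clipped n-gram overlap (lowercased, whitespace tokens)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # '&' keeps the minimum count per n-gram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def sentence_bleu(candidate: str, reference: str, max_n: int = 2) -> float:
    """Unsmoothed sentence-level BLEU against a single reference."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(cand_tokens, n), ngrams(ref_tokens, n)
        total = sum(cand.values())
        clipped = sum((cand & ref).values())  # clip counts to the reference
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, any zero precision zeroes the score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(cand_tokens), len(ref_tokens)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat"
candidate = "the cat is on the mat"
print(round(rouge_n_f1(candidate, reference), 3))     # prints 0.833
print(round(sentence_bleu(candidate, reference), 3))  # prints 0.707
```

Changing a single word ("sat" to "is") costs one of six unigrams but two of five bigrams, which is why BLEU with bigrams drops further than ROUGE-1. Both metrics reward surface overlap, so a correct paraphrase can still score poorly.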
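Task-based evaluation and error analysis often go together: score each output on the task, then group the failures to see where the model goes wrong. The sketch below assumes a short-answer question-answering task scored by exact match after a simple normalization (lowercasing, stripping punctuation and extra whitespace). The error categories are hypothetical examples, and the substring check used for "correct but verbose" is a crude heuristic.

```python
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, remove punctuation and collapse whitespace before comparing."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def evaluate_qa(predictions: list[str], answers: list[str]) -> tuple[float, Counter]:
    """Exact-match accuracy plus a tally of hypothetical error categories."""
    if len(predictions) != len(answers) or not answers:
        raise ValueError("need one prediction per answer, and at least one answer")
    errors: Counter = Counter()
    correct = 0
    for pred, gold in zip(predictions, answers):
        if normalize(pred) == normalize(gold):
            correct += 1
        elif not pred.strip():
            errors["empty answer"] += 1
        elif normalize(gold) in normalize(pred):
            # The gold answer appears, but wrapped in extra text.
            errors["correct but verbose"] += 1
        else:
            errors["wrong answer"] += 1
    return correct / len(answers), errors


predictions = ["Paris", "The capital is Rome.", "", "1945"]
answers = ["paris", "Rome", "Madrid", "1944"]
accuracy, errors = evaluate_qa(predictions, answers)
print(accuracy)  # prints 0.25
print(errors.most_common())
```

A single accuracy number hides why the model fails. The error tally shows, for example, that some "failures" contain the right answer and could be fixed by prompting for shorter responses.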

Choosing the Most Appropriate Evaluation Method

The choice of evaluation method depends on several factors:

- Purpose of Evaluation: Determine the specific aspects of output quality that need to be assessed.

- Data Availability: Consider whether reference outputs or labeled test sets (needed for automatic metrics and task-based evaluation) or expert annotators (needed for human evaluation) are available.

- Time and Resources: Assess the time and resources available for evaluation.

- Expertise: Determine the level of expertise required for manual evaluation or the interpretation of automatic metric scores.

By carefully considering these factors, researchers and practitioners can select the evaluation method, or combination of methods, best suited to assessing the output quality of LLMs.

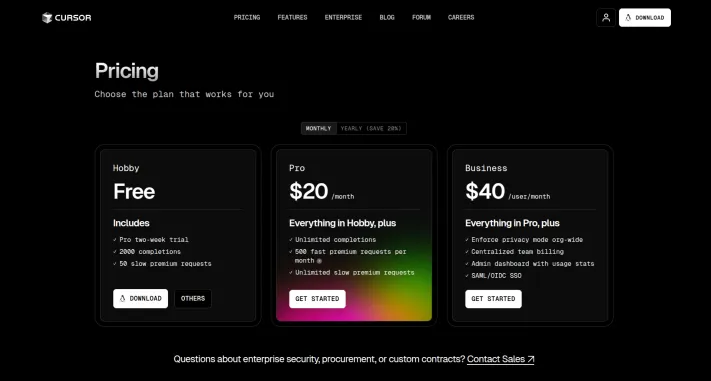

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

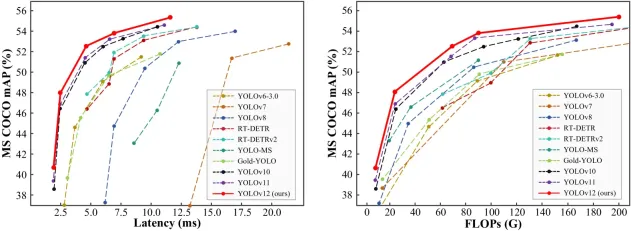

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Is ChatGPT 4 O available?Mar 28, 2025 pm 05:29 PM

Is ChatGPT 4 O available?Mar 28, 2025 pm 05:29 PMChatGPT 4 is currently available and widely used, demonstrating significant improvements in understanding context and generating coherent responses compared to its predecessors like ChatGPT 3.5. Future developments may include more personalized interactions and real-time data processing capabilities, further enhancing its potential for various applications.

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

o1 vs GPT-4o: Is OpenAI's New Model Better Than GPT-4o?Mar 16, 2025 am 11:47 AM

o1 vs GPT-4o: Is OpenAI's New Model Better Than GPT-4o?Mar 16, 2025 am 11:47 AMOpenAI's o1: A 12-Day Gift Spree Begins with Their Most Powerful Model Yet December's arrival brings a global slowdown, snowflakes in some parts of the world, but OpenAI is just getting started. Sam Altman and his team are launching a 12-day gift ex

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Notepad++7.3.1

Easy-to-use and free code editor

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software