ICLR 2024 Spotlight | No need to worry about intermediate steps, MUSTARD can generate high-quality mathematical inference data

The AIxiv column is where this site publishes academic and technical content. Over the past few years, the column has carried more than 2,000 reports covering top laboratories at major universities and companies around the world, effectively promoting academic exchange and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com.

Paper title: MUSTARD: Mastering Uniform Synthesis of Theorem and Proof Data

Paper link: https://openreview.net/forum?id=8xliOUg9EW

Code link: https://github.com/Eleanor-H/MUSTARD

Dataset link: https://drive.google.com/file/d/1yIVAVqpkC2Op7LhisG6BJJ_-MavAMr1B/view

Author homepage: https://eleanor-h.github.io/

MUSTARDSAUCE-valid: 5,866 data points that passed verification by the Lean formal prover; MUSTARDSAUCE-invalid: 5,866 data points that failed verification by the Lean formal prover; MUSTARDSAUCE-random: 5,866 randomly selected data points; MUSTARDSAUCE-tt: all 28,316 data points generated by MUSTARD.

Track 1-1 (automated informalization): https://www.codabench.org/competitions/2436/

Track 1-2 (automated informalization): https://www.codabench.org/competitions/2484/

Track 2 (automated theorem generation and proving): https://www.codabench.org/competitions/2437/

Track 3 (code-assisted automated solving of operations research optimization problems): https://www.codabench.org/competitions/2438/

5 Powerful AI Prompts That Can Boost Any Business IdeaApr 16, 2025 am 11:11 AM

5 Powerful AI Prompts That Can Boost Any Business IdeaApr 16, 2025 am 11:11 AMFortunately, this is a field where generative AI can be extremely helpful. No, it won’t come up with foolproof strategies. But it can help you brainstorm business plans, research your market and fine-tune marketing content and messaging. It’s not a

Graduate Smart: Career Advice For The AI EraApr 16, 2025 am 11:10 AM

Graduate Smart: Career Advice For The AI EraApr 16, 2025 am 11:10 AMOnly this year feels different. Uncertain. It’s not just the fact that a tariff war is well underway. AI is the underlying cause of so much head scratching and soul searching of late. The national youth charity Onside recently conducted a survey of

Effective Accelerationism Or Prosocial AI. What Is The Future Of AI?Apr 16, 2025 am 11:09 AM

Effective Accelerationism Or Prosocial AI. What Is The Future Of AI?Apr 16, 2025 am 11:09 AMAn Accelerationist Vision: Full Speed Ahead Effective Accelerationism, known as e/acc for short, emerged around 2022 as a tech-optimist movement that's gained significant traction in Silicon Valley and beyond, at its core, e/acc advocates for rapid,

What are Relative, Absolute, and Mixed References in Excel?Apr 16, 2025 am 11:03 AM

What are Relative, Absolute, and Mixed References in Excel?Apr 16, 2025 am 11:03 AMIntroduction My initial spreadsheet experiences were frustrating due to the unpredictable behavior of formulas when copied. I didn't understand cell referencing then, but mastering relative, absolute, and mixed references revolutionized my spreadshe

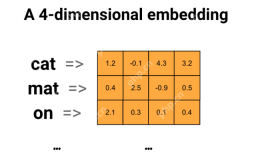

Smart Subject Email Line Generation with Word2VecApr 16, 2025 am 11:01 AM

Smart Subject Email Line Generation with Word2VecApr 16, 2025 am 11:01 AMThis article demonstrates how to generate effective email subject lines using Word2Vec embeddings. It guides you through building a system that leverages semantic similarity to create contextually relevant subject lines, improving email marketing en

Future of Data AnalystApr 16, 2025 am 11:00 AM

Future of Data AnalystApr 16, 2025 am 11:00 AMData Analytics: Navigating the Evolving Landscape Imagine a world where data isn't just numbers, but the cornerstone of every management decision. In this dynamic environment, the data analyst is indispensable, transforming raw data into actionable

What is the SUMPRODUCT Function in Excel? - Analytics VidhyaApr 16, 2025 am 10:55 AM

What is the SUMPRODUCT Function in Excel? - Analytics VidhyaApr 16, 2025 am 10:55 AMExcel's SUMPRODUCT Function: A Data Analysis Powerhouse Unlock the power of Excel's SUMPRODUCT function for streamlined data analysis. This versatile function effortlessly combines summing and multiplying capabilities, extending to addition, subtract

What is Data Scrubbing?Apr 16, 2025 am 10:53 AM

What is Data Scrubbing?Apr 16, 2025 am 10:53 AMData Cleansing: Ensuring Data Accuracy and Reliability for Informed Decisions Imagine planning a large family reunion with an inaccurate guest list—wrong contacts, duplicates, misspelled names. A poorly prepared list could ruin the event. Similarly
