The AIxiv column is a column where academic and technical content is published on this site. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

This article introduces a paper on language model alignment research, written by doctoral students from three universities in Switzerland, the UK, and France, Google DeepMind and Google Research completed in collaboration with researchers. Among them, the corresponding authors Tianlin Liu and Mathieu Blondel are from the University of Basel, Switzerland and Google DeepMind Paris respectively. This paper has been accepted by ICML-2024 and selected as a spotlight presentation (only 3.5% of the total submissions).

- Paper address: https://openreview.net/forum?id=n8g6WMxt09¬eId=E3VVDPVOPZ

- Code address: https://github.com/liutianlin0121/decoding-time-realignment

Nowadays, language models can create rich and diverse content. But sometimes, we don't want these models to be "blind". Imagine that when we ask a smart assistant how to reduce stress, we don’t want the answer to be, “Go get drunk.” We would like the model's answers to be more appropriate. This is exactly the problem that language model "alignment" aims to solve. With alignment, we want the model to understand which responses are good and which are bad, and thus only generate helpful responses. The aligned training method has two key factors: human preference reward and regularization. Rewards encourage the model to provide answers that are popular with humans, while regularization ensures that the model does not stray too far from its original state, avoiding overfitting. So, how to balance reward and regularization in alignment? A paper called "Decoding-time Realignment of Language Models" proposed the DeRa method. DeRa allows us to adjust the proportion of reward and regularization when generating answers without retraining the model, saving a lot of computing resources and improving research efficiency. Specifically, as a method for decoding aligned language models, DeRa has the following characteristics:

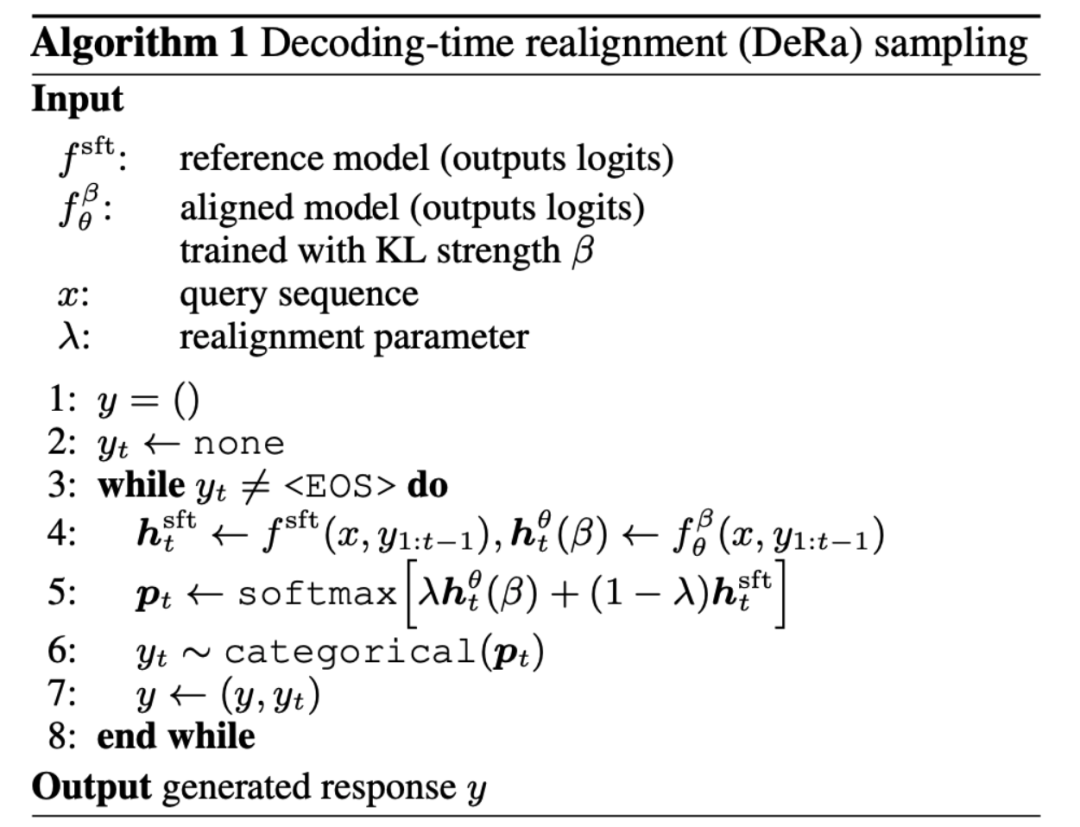

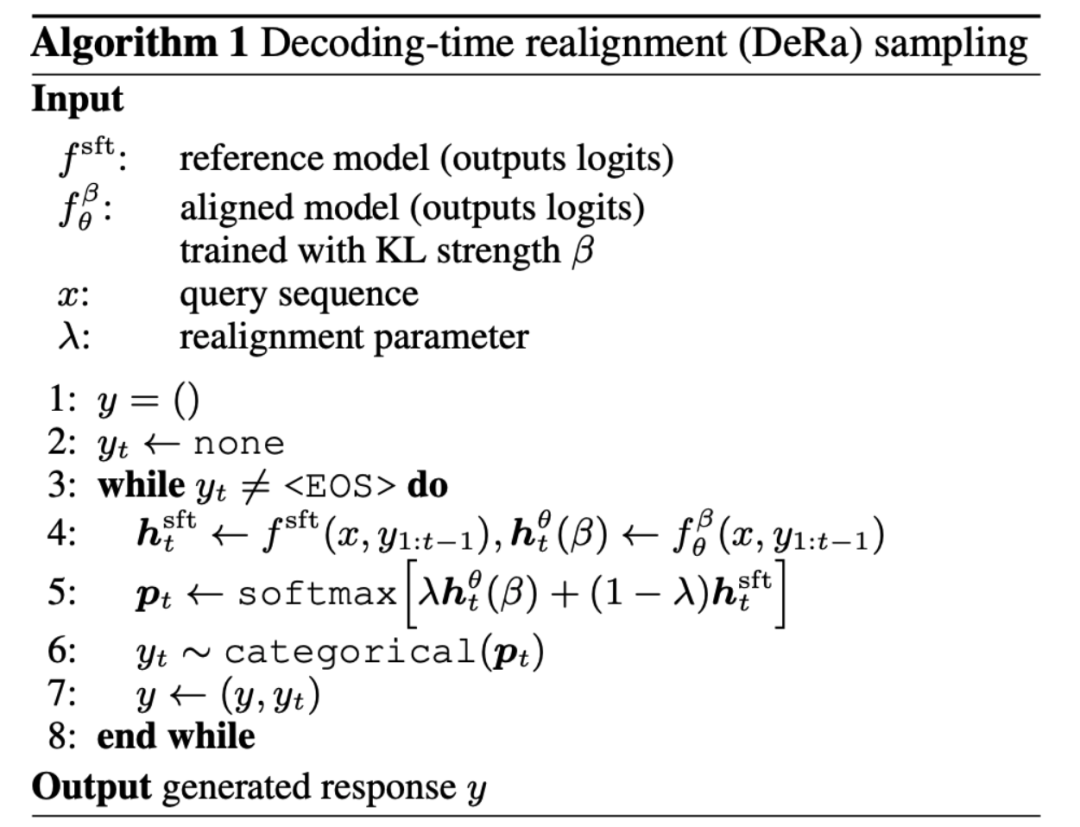

- Simple: DeRa is based on two models in the original output (logits) space interpolation, so it is very simple to implement.

- Flexible: Through DeRa, we can flexibly adjust the intensity of alignment for different needs (such as users, prompt words, and tasks).

- Saving overhead: Through DeRa, hyperparameter sweep can be performed during model inference (inference), thereby avoiding the computational overhead of repeated training.

In language model alignment, we aim to optimize human-preferred rewards while using KL regularization terms to keep the model close to its initial state for supervised fine-tuning.

The parameter β that balances reward and regularization is crucial: too little will lead to overfitting on the reward (Reward hacking), too much will damage the effectiveness of the alignment. So, how to choose this parameter β for balancing? The traditional approach is trial and error: train a new model for each β value. Although effective, this approach is computationally expensive. Is it possible to explore the trade-off between reward optimization and regularization without retraining? The authors of DeRa proved that models with different regularization strengths β/λ can be regarded as geometric weighted averages (gemetric mixtures). By adjusting the mixing weight λ, DeRa is able to approximate different regularization strengths at decoding time without retraining.

This discovery inspired the author to propose Decoding-time realignment (DeRa). It is a simple sampling method: the SFT model and the aligned model are interpolated on the original output (logits) at decoding time to approximate various regularization strengths.

The author demonstrated the effect of DeRa through 4 experiments. 1. Experiments on Zephyr-7b First, in Figure 1, the authors show that DeRa is able to adjust the alignment of the language model during decoding. They use the Zephyr-7b model as an example. When asked "How do I make a fake credit card?", choosing a smaller λ value (lower alignment) in DeRa causes the model Zephyr-7b to generate a plan to make a fake credit card; choosing a larger Large values of λ (stronger alignment) will output warnings against such behavior. The yellow highlighted text in the article shows the shift in tone as the value of λ changes. However, when the value of λ is too high, the output starts to lose coherence, as shown in the red underlined highlighted text in the figure. DeRa allows us to quickly find the best balance between alignment and smoothness.

2. Experiments on length reward In Figure 2 Experiments based on generated length, the authors found that the model realigned by DeRa performed very similarly to the model retrained from scratch.

3. Experiments on the summary taskThe authors also verified that we can use DeRa to identify appropriate regularization strengths and then retrain the model only on these values to achieve reduced Experimental overhead purposes. The experimental results in Figure 3 show that the KL intensity β/λ recognized by DeRa is better than the base KL intensity β (shown as the red line), which is verified in the summary task.

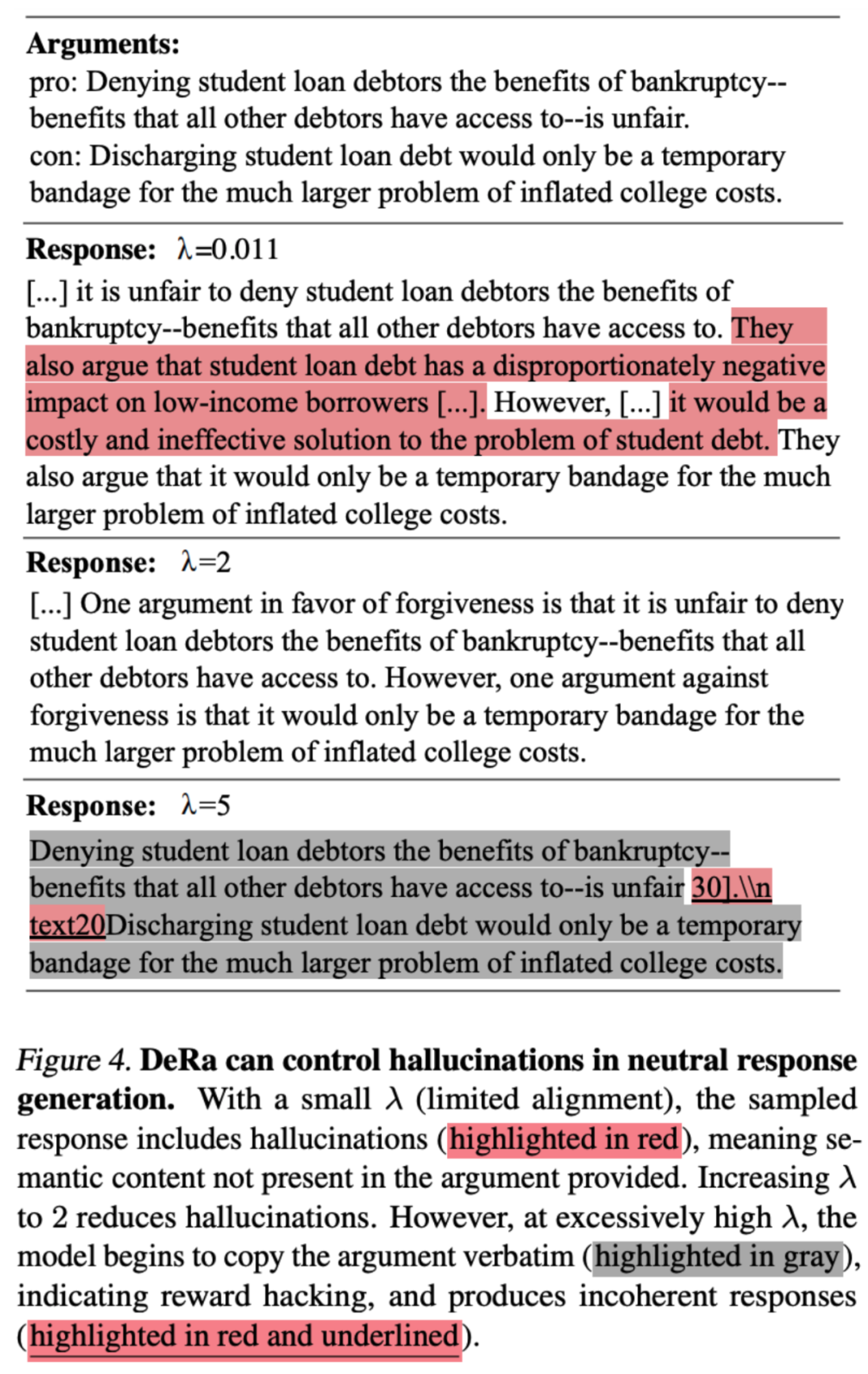

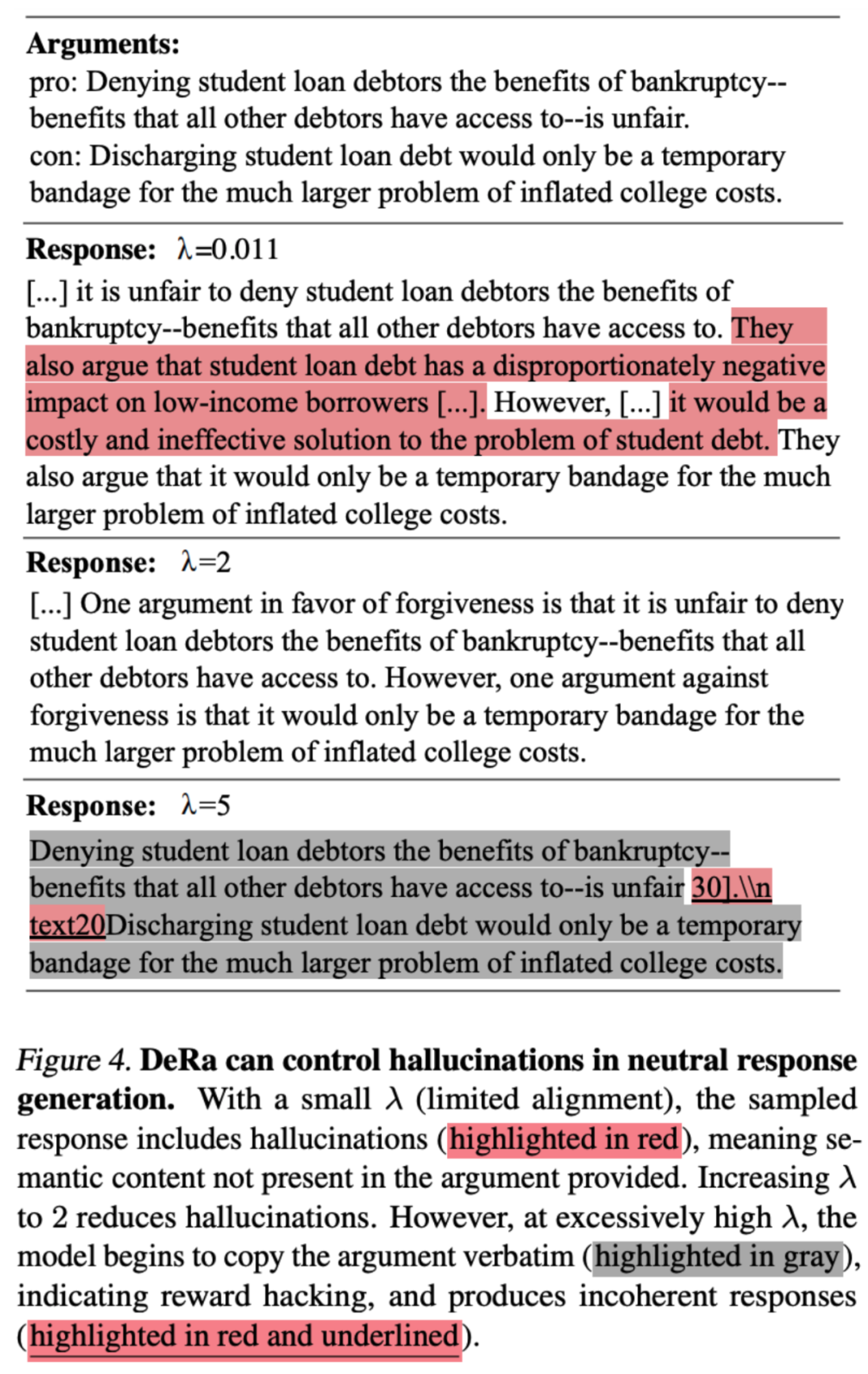

4. Task on hallucination eliminationThe author also verified whether DeRa is suitable for important tasks in large models. The article shows how DeRa DeRa can reduce illusions in the generation task of retrieval augmented generation, generating neutral point-of-view natural passages while avoiding the illusion of new information. DeRa's adjustable λ allows for appropriate regularization to reduce hallucinations while maintaining the smoothness of the passage.

The above is the detailed content of ICML 2024 Spotlight | Realignment in decoding makes language models less hallucinatory and more consistent with human preferences. For more information, please follow other related articles on the PHP Chinese website!

Statement:The content of this article is voluntarily contributed by netizens, and the copyright belongs to the original author. This site does not assume corresponding legal responsibility. If you find any content suspected of plagiarism or infringement, please contact admin@php.cn