ICML 2024 | Large language model helps CLIP-based out-of-distribution detection tasks

When the training and test sets follow the same distribution, machine learning models can perform very well. In open-world environments, however, models often encounter out-of-distribution (OOD) samples. OOD samples may cause a model to behave unpredictably, and errors can be catastrophic in high-risk scenarios such as autonomous driving [1, 2]. OOD detection is therefore crucial for ensuring the reliability of machine learning models in real-world deployment.

Most OOD detection methods [1, 3] detect OOD samples effectively on top of a well-trained in-distribution (ID) classifier. However, they must retrain the classifier for each new ID dataset. Furthermore, these methods rely only on visual patterns and ignore the connection between images and text labels. With the emergence of large-scale vision-language models (VLMs) such as CLIP [4], zero-shot OOD detection has become possible [5]: by building a text classifier from the ID category labels alone, OOD samples can be detected across different ID datasets without retraining the classifier.
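A minimal sketch of this idea, in the spirit of the MCM score [5]: the image embedding is compared with one text embedding per ID label, and the maximum temperature-scaled softmax probability serves as the "ID-ness" score. We assume the embeddings have already been produced by CLIP's image and text encoders (for example from prompts such as "a photo of a <label>"); the toy 3-dimensional vectors, the helper names and the temperature value are illustrative only.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def softmax(xs: Sequence[float], tau: float) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() from overflowing."""
    m = max(xs)
    exps = [math.exp((x - m) / tau) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def id_only_score(image_emb: Sequence[float], id_text_embs: Sequence[Sequence[float]],
                  tau: float = 1.0) -> float:
    """Max softmax probability over the ID labels only; higher means more ID-like."""
    sims = [cosine(image_emb, t) for t in id_text_embs]
    return max(softmax(sims, tau))


# Toy vectors standing in for CLIP text features of two ID labels (hypothetical).
id_text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
id_image = [0.9, 0.1, 0.0]   # close to the first ID label
ood_image = [0.0, 0.0, 1.0]  # equally far from both ID labels

print(id_only_score(id_image, id_text_embs, tau=0.1))
print(id_only_score(ood_image, id_text_embs, tau=0.1))
```

A sample would be flagged as OOD when its score falls below a threshold chosen on ID data. Because this score only ever sees ID labels, an OOD image that happens to match one ID label somewhat better than the others can still receive a high score.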

Although existing CLIP-based OOD detection methods show impressive performance, they often fail on OOD samples that are hard to detect. We argue that building the text classifier solely from ID category labels largely limits CLIP's inherent ability to recognize samples from an open label space. As shown in Figure 1(a), a text classifier built only from ID category labels struggles to separate hard OOD samples (ID dataset: CUB-200-2011; OOD dataset: Places).

Figure 1. Research motivation: (a) a text classifier built only from ID category labels; (b) using the real OOD labels; (c) using an LLM to envision potential outlier exposure.

In this work, we propose an OOD detection method called Envisioning Outlier Exposure (EOE), which leverages the expert knowledge and reasoning capabilities of large language models (LLMs) to envision potential outlier exposure, thereby improving the OOD detection performance of VLMs (Figure 1(c)) without accessing any actual OOD data. We design (1) LLM prompts based on visual similarity that generate potential outlier class labels specifically for OOD detection, and (2) a new scoring function based on a potential-outlier penalty that effectively distinguishes hard OOD samples. Experiments show that EOE achieves superior performance across different OOD tasks and scales effectively to the ImageNet-1K dataset.

Paper link: https://arxiv.org/pdf/2406.00806

Code link: https://github.com/tmlr-group/EOE

Below, we briefly introduce this work on out-of-distribution detection, recently published at ICML 2024.

Preliminary knowledge

Method introduction

EOE aims to improve zero-shot OOD detection by using an LLM to generate potential outlier class labels. However, since the OOD classes encountered at deployment are unknown, how should we guide the LLM to generate the outlier class labels we need? And once we have these labels, how can we better distinguish ID from OOD samples? To address these questions, we propose LLM prompts designed specifically for OOD detection based on the visual similarity principle, and introduce a novel scoring function to better separate ID and OOD samples. The overall framework of our approach is shown in Figure 2.

Figure 2. EOE overall framework diagram
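The scoring idea can be sketched as follows. This is a minimal sketch under our own assumptions, not the paper's exact definition: a single softmax is taken over the similarities to both the ID labels and the envisioned outlier labels, and the score is the largest ID probability minus a penalty weight `beta` times the largest outlier probability. The function name `penalized_score` and the default values of `tau` and `beta` are illustrative.

```python
import math
from typing import List, Sequence


def softmax(xs: Sequence[float], tau: float) -> List[float]:
    """Temperature-scaled softmax over a list of similarity scores."""
    m = max(xs)
    exps = [math.exp((x - m) / tau) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def penalized_score(id_sims: Sequence[float], outlier_sims: Sequence[float],
                    tau: float = 1.0, beta: float = 0.25) -> float:
    """Assumed outlier-penalized score; higher means more ID-like.

    id_sims: image-text similarities for the K ID labels.
    outlier_sims: similarities for the L envisioned outlier labels (may be empty).
    """
    probs = softmax(list(id_sims) + list(outlier_sims), tau)
    k = len(id_sims)
    best_id = max(probs[:k])
    # Penalize samples that match some envisioned outlier label well.
    best_outlier = max(probs[k:]) if len(probs) > k else 0.0
    return best_id - beta * best_outlier


# With no outlier labels, the score reduces to the ID-only max-softmax score.
print(penalized_score([0.3, 0.1], [], tau=0.1))
# The same ID similarities plus a strongly matching outlier label give a lower score.
print(penalized_score([0.3, 0.1], [0.5], tau=0.1))
```

Because the outlier label also enters the softmax denominator, it lowers the ID probabilities of samples it resembles even before the `beta` penalty is applied. The score can turn negative for samples dominated by an outlier label; only the ranking matters when a threshold is chosen.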

Fine-grained OOD detection is also known as open-set recognition. In fine-grained OOD detection, ID and OOD samples belong to the same main category (e.g., the "bird" class), and the subclasses share inherent visual similarities (e.g., "sparrow" and "swallow"). It is therefore more appropriate to instruct the LLM to directly suggest different subcategories within the same main category.
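As an illustration only, a fine-grained prompt in this spirit might be assembled as below. The wording, the `fine_grained_prompt` helper and its parameters are hypothetical and are not the exact prompt shown in Figure 3; the point is that the prompt names the shared main category and the ID subcategories, and asks for other visually similar subcategories of that same main category.

```python
from typing import Sequence


def fine_grained_prompt(main_category: str, id_labels: Sequence[str], num_labels: int) -> str:
    """Build a hypothetical prompt asking an LLM for unseen, visually similar subcategories."""
    listed = ", ".join(id_labels)
    return (
        f"The following are {main_category} categories: {listed}. "
        f"Suggest {num_labels} other {main_category} categories that look visually "
        f"similar to these but are not in the list. "
        "Answer with a comma-separated list of category names only."
    )


print(fine_grained_prompt("bird", ["sparrow", "swallow"], 5))
```

Asking for a comma-separated list is our own choice here; it simply makes the answer easy to parse into labels.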

Figure 3 shows the three types of LLM prompts designed for OOD detection.

Figure 3. Three types of LLM prompts designed based on the principle of visual similarity

Figure 4. EOE pseudocode
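The pseudocode figure is not reproduced here. As a rough sketch of just the label-generation step, assuming each prompt is sent to an LLM that answers with a comma- or newline-separated list, the answers can be parsed, deduplicated, and filtered so that no envisioned label coincides with an ID label. `query_llm`, `fake_llm`, the case-insensitive filtering rule and the `max_labels` cap are our assumptions for illustration, not details taken from the paper.

```python
from typing import Callable, Iterable, List


def parse_labels(response: str) -> List[str]:
    """Split an LLM answer on commas/newlines and trim whitespace and trailing periods."""
    parts = response.replace("\n", ",").split(",")
    cleaned = (p.strip().rstrip(".").strip() for p in parts)
    return [c for c in cleaned if c]


def collect_outlier_labels(prompts: Iterable[str], query_llm: Callable[[str], str],
                           id_labels: Iterable[str], max_labels: int) -> List[str]:
    """Query the (hypothetical) LLM once per prompt; keep unique labels that are not ID labels."""
    seen = {label.lower() for label in id_labels}  # case-insensitive ID filter
    outliers: List[str] = []
    for prompt in prompts:
        for label in parse_labels(query_llm(prompt)):
            key = label.lower()
            if key in seen:
                continue  # duplicate of an ID label or of an earlier outlier label
            seen.add(key)
            outliers.append(label)
            if len(outliers) >= max_labels:
                return outliers
    return outliers


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same canned answer."""
    return "submarine, Airship, cat.\nsubmarine"


labels = collect_outlier_labels(["<prompt>"], fake_llm, id_labels=["cat", "dog"], max_labels=10)
print(labels)
```

Passing the LLM in as a callable keeps the sketch independent of any particular LLM client, and makes the step easy to test with a stub as above.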

The advantages of our method are summarized as follows:

EOE does not rely on prior knowledge of unknown OOD data, so it is particularly suitable for open world scenarios.

Zero-shot: the same pre-trained model can be applied effectively to a variety of task-specific ID datasets without training separately on each one. EOE achieves superior OOD detection performance knowing only the ID class labels.

Scalability and versatility: compared with existing zero-shot OOD detection methods [6] that also generate potential OOD class labels, EOE can easily be applied to large-scale datasets such as ImageNet-1K. Furthermore, EOE is versatile across tasks, including Far, Near and Fine-grained OOD detection.

Experimental results

We conducted experiments on multiple datasets across different OOD tasks. Table 1 shows the results of Far OOD detection on ImageNet-1K, where Ground Truth denotes the performance when using the real OOD labels, which are not available in actual deployment. The results show that EOE is comparable to fine-tuning methods and surpasses MCM [5].

Table 1. Far OOD experimental results

We also report results on the Near OOD and Fine-grained OOD tasks. As shown in Tables 2 and 3, our method achieves the best detection performance on both.

Table 2. Near OOD experimental results

Table 3. Fine-grained OOD experimental results
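The tables are not reproduced here. OOD detection results in this line of work are commonly reported with FPR95 (the false positive rate on OOD data at a 95% true positive rate on ID data; lower is better) and AUROC (higher is better). A minimal sketch of both metrics, assuming higher scores mean more ID-like and treating ID as the positive class; the toy score lists are made up.

```python
from typing import Sequence


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Probability that a random ID sample outscores a random OOD sample (ties count half).
    Quadratic pairwise version, fine for small illustrative inputs."""
    wins = 0.0
    for s_in in id_scores:
        for s_out in ood_scores:
            if s_in > s_out:
                wins += 1.0
            elif s_in == s_out:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))


def fpr_at_95_tpr(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Fraction of OOD samples accepted as ID at the threshold that keeps at least 95% of ID samples."""
    ranked = sorted(id_scores, reverse=True)
    keep = -(-95 * len(ranked) // 100)  # integer ceil(0.95 * n), avoids float rounding
    threshold = ranked[keep - 1]
    return sum(s >= threshold for s in ood_scores) / len(ood_scores)


id_scores = [0.9, 0.8, 0.7, 0.6]
ood_scores = [0.5, 0.65, 0.95]
print(auroc(id_scores, ood_scores), fpr_at_95_tpr(id_scores, ood_scores))
```

For large test sets, a library routine such as scikit-learn's `roc_auc_score` would replace the pairwise loop.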

We conducted ablation experiments on each component of EOE, including different scoring functions, LLM prompts, different LLMs, and different numbers of potential OOD class labels. The results show that our scoring function and the LLM prompts designed on the visual similarity principle achieve the best performance, and that our method performs well across different LLMs and different numbers of potential OOD class labels. We also conducted ablation experiments on the architecture of the vision-language model. Please refer to the original paper for detailed results.

Figure 5. Ablation experiment – different scoring functions, LLM prompts and different LLMs

Figure 5. Ablation experiment – the number of potential OOD class labels generated

We further analyze why EOE is effective. In practice, the generated outlier class labels are unlikely to hit the true OOD classes, because the OOD data encountered when a model is deployed are diverse and unpredictable. However, guided by the visual similarity rules, the potential outlier class labels generated by EOE can still improve OOD detection even when they miss the real OOD classes.
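A toy numeric example makes this concrete. The similarity values below are made up for illustration and are not taken from the paper, and the penalized score uses an assumed form (max ID probability minus `beta` times max outlier probability, from one joint softmax). A hard OOD sample matches one ID label slightly better than a genuine ID sample does, so an ID-only max-softmax score ranks it as more ID-like; once an envisioned outlier label it resembles enters the softmax, the ranking flips, even though that label is not the sample's true class.

```python
import math
from typing import List, Sequence


def softmax(xs: Sequence[float], tau: float) -> List[float]:
    """Temperature-scaled softmax over similarity scores."""
    m = max(xs)
    exps = [math.exp((x - m) / tau) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def id_only_score(id_sims: Sequence[float], tau: float) -> float:
    """Max softmax probability over the ID labels alone."""
    return max(softmax(id_sims, tau))


def penalized_score(id_sims: Sequence[float], outlier_sims: Sequence[float],
                    tau: float, beta: float) -> float:
    """Assumed form: max ID probability minus beta * max outlier probability."""
    probs = softmax(list(id_sims) + list(outlier_sims), tau)
    k = len(id_sims)
    return max(probs[:k]) - beta * max(probs[k:])


# Made-up cosine similarities: ([ID label 1, ID label 2], [one envisioned outlier label]).
id_sample = ([0.30, 0.25], [0.20])
hard_ood = ([0.32, 0.24], [0.40])  # resembles the outlier label more than any ID label

tau, beta = 0.1, 0.25
for name, (sims_id, sims_out) in [("ID sample", id_sample), ("hard OOD", hard_ood)]:
    print(name,
          round(id_only_score(sims_id, tau), 3),
          round(penalized_score(sims_id, sims_out, tau, beta), 3))
```

Running it prints both scores per sample: the hard OOD sample has the higher ID-only score but a clearly lower penalized score.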

To support this argument, we visualize the softmax outputs of the label-matching scores with t-SNE. Figure 6 compares our EOE with the baseline MCM. Given the ID class labels of ImageNet-10, the LLM generates the potential outlier label "submarine" based on the visual similarity rules. When the OOD class "steam locomotive" (a class in ImageNet-20) is encountered, "steam locomotive" has the highest similarity to "submarine". EOE therefore clusters it with "submarine" and detects it as OOD. Without potential outlier class labels, by contrast, MCM tends to cluster all OOD samples together, which may cause hard OOD samples to be identified as ID classes. In summary, under our EOE framework, 1) OOD samples belonging to the same class tend to cluster together, and 2) samples from the same group are assigned to the envisioned outlier class they visually resemble (e.g., "steam locomotive" and "submarine"). These observations suggest that EOE can enhance OOD detection without touching the actual OOD classes, and that its behavior is also semantically easier to interpret. We hope this work offers a new perspective for future research on OOD detection.

Figure 6. Visualization results
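The grouping behavior described above can be mimicked with a simple nearest-label assignment: each sample goes to whichever label, ID or envisioned, it matches best, and samples whose best match is an envisioned outlier label are treated as OOD. This is a simplified sketch, not the paper's t-SNE analysis or its scoring rule; apart from "submarine" and "steam locomotive", the label names and all similarity values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def assign_label(sims: Dict[str, float], outlier_labels: Sequence[str]) -> Tuple[str, bool]:
    """Return the best-matching label and whether it is an envisioned outlier label.
    Softmax is monotonic, so the argmax of raw similarities equals the argmax of the softmax."""
    best = max(sims, key=sims.get)
    return best, best in outlier_labels


def group_by_assignment(samples: Dict[str, Dict[str, float]],
                        outlier_labels: Sequence[str]) -> Dict[str, List[str]]:
    """Group sample names by the label each one is assigned to."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, sims in samples.items():
        label, _ = assign_label(sims, outlier_labels)
        groups[label].append(name)
    return dict(groups)


outlier_labels = ["submarine"]
samples = {
    "steam locomotive #1": {"container ship": 0.24, "warplane": 0.21, "submarine": 0.27},
    "steam locomotive #2": {"container ship": 0.23, "warplane": 0.22, "submarine": 0.26},
    "container ship photo": {"container ship": 0.31, "warplane": 0.18, "submarine": 0.25},
}
print(group_by_assignment(samples, outlier_labels))
```

A hard assignment like this discards the confidence information that a score function keeps, so it only shows where samples land, not how EOE makes its final decision.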

References

[1] Hendrycks, D. and Gimpel, K. A baseline for detecting misclassified and out-of-distribution examples in neural networks. In ICLR, 2017.

[2] Yang, J., Zhou, K., Li, Y., and Liu, Z. Generalized out-of-distribution detection: A survey. arXiv preprint arXiv:2110.11334, 2021.

[3] Liu, W., Wang, X., Owens, J., and Li, Y. Energy-based out-of-distribution detection. In NeurIPS, 2020.

[4] Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., et al. Learning transferable visual models from natural language supervision. In ICML, 2021.

[5] Ming, Y., Cai, Z., Gu, J., Sun, Y., Li, W., and Li, Y. Delving into out-of-distribution detection with vision-language representations. In NeurIPS, 2022.

[6] Esmaeilpour, S., Liu, B., Robertson, E., and Shu, L. Zero-shot out-of-distribution detection based on the pre-trained model CLIP. In AAAI, 2022.

Introduction to the research group

The Trustworthy Machine Learning and Reasoning Research Group (TMLR Group) at Hong Kong Baptist University consists of several young faculty members, postdoctoral researchers, doctoral students, visiting doctoral students and research assistants, and is affiliated with the Department of Computer Science, School of Science. The group specializes in trustworthy representation learning, trustworthy learning based on causal reasoning, trustworthy foundation models and related algorithms, theory and system design, as well as applications in the natural sciences. Specific research directions and results can be found on the group's GitHub (https://github.com/tmlr-group). The group is funded by government and industry research grants, such as the Hong Kong Research Grants Council Outstanding Young Scholars Program, general and youth projects of the National Natural Science Foundation of China, and research funds from Microsoft, NVIDIA, Baidu, Alibaba, Tencent and other companies. Young faculty and senior researchers work closely together, and GPU computing resources are ample. The group recruits postdoctoral researchers, doctoral students, research assistants and research interns on an ongoing basis. It also welcomes applications from self-funded visiting postdoctoral fellows, doctoral students and research assistants for stays of at least 3-6 months; remote visits are supported. Interested candidates should send a CV and a preliminary research plan to bhanml@comp.hkbu.edu.hk.

The above is the detailed content of ICML 2024| Large language model helps CLIP-based out-of-distribution detection tasks. For more information, please follow other related articles on the PHP Chinese website!

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

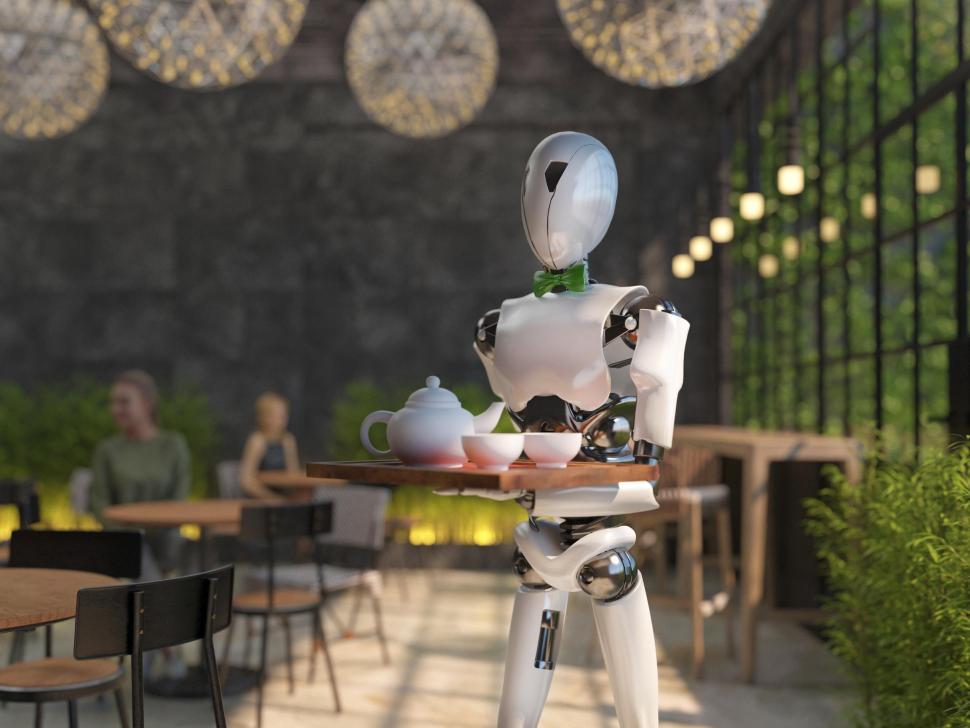

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

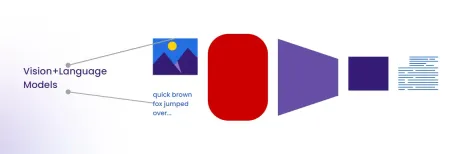

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Notepad++7.3.1

Easy-to-use and free code editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SublimeText3 Chinese version

Chinese version, very easy to use