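I want to store the data my crawler scrapes in separate columns of a TSV file. I have tried many approaches, but none of them managed to save it as two columns.

I want the numbered entries in the first column and the category shown beneath each of them in the second column.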

from urllib.request import urlopen
from bs4 import BeautifulSoup
import re
import csv

html = urlopen("http://www.app12345.com/?area=tw&store=Apple%20Store")
bs0bj = BeautifulSoup(html)

def GPname():
    GPnameList = bs0bj.find_all("dd",{"class":re.compile("ddappname")})
    str = ''
    for name in GPnameList:
        str += name.get_text()
        str += '\n'
        print(name.get_text())
    return str

def GPcompany():
    GPcompanyname = bs0bj.find_all("dd",{"style":re.compile("color")})
    str = ''
    for cpa in GPcompanyname:
        str += cpa.get_text()
        str += '\n'
        print(cpa.get_text())
    return str

with open('0217.tsv','w',newline='',encoding='utf-8') as f:
    f.write(GPname())
    f.write(GPcompany())
    f.close()

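I may not be familiar enough with zip: when I tried it, the saved file ended up with one character per cell.

I also found the post below for reference, but no matter what I tried I could not get it to work.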

https://segmentfault.com/q/10...

怪我咯 2017-04-18 10:27:51

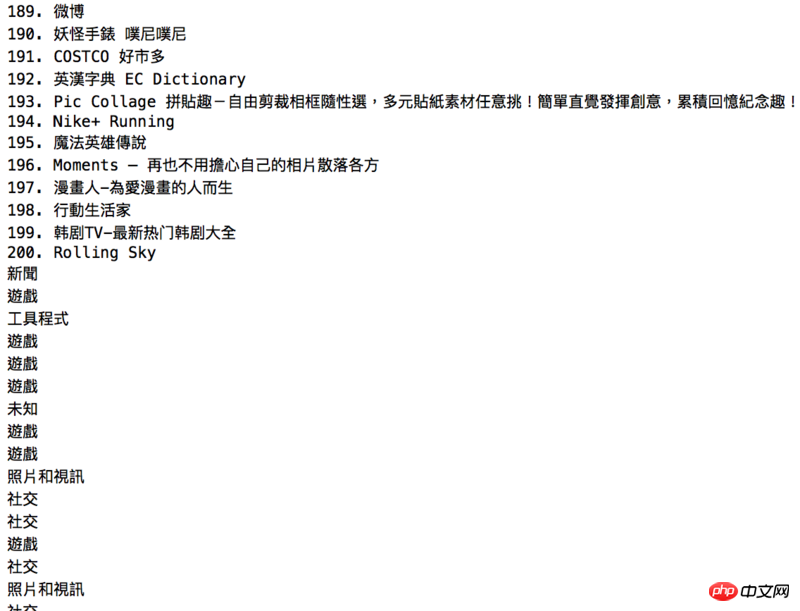

Writing a CSV file is fairly simple. Your data needs to be structured as a list of rows, like this: [["1. 东森新闻云","新闻"],["2. 创世黎明(Dawn of world)","游戏"]]
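To illustrate why the row structure matters, here is a minimal sketch that writes to an in-memory buffer instead of a file. It assumes the "one character per cell" result came from handing `csv.writer` a bare string, which the question does not actually show, so treat that as a guess. The helper `to_tsv` and the sample rows are made up for illustration.

```python
import csv
import io

# Sample rows copied from the structure described above (illustrative data only).
rows = [["1. 东森新闻云", "新闻"], ["2. 创世黎明(Dawn of world)", "游戏"]]

def to_tsv(write_rows):
    """Run write_rows against an in-memory tab-delimited writer and return the text."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    write_rows(writer)
    return buf.getvalue()

# A bare string is iterated character by character, so each character becomes a cell.
per_char = to_tsv(lambda w: w.writerow("新闻"))

# A list of rows, each row a list of fields, gives one cell per field.
per_field = to_tsv(lambda w: w.writerows(rows))

print(repr(per_char))
print(repr(per_field))
```

If the first `repr` shows a tab between the two characters, that is the same symptom as a file with one character per cell.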

from urllib.request import urlopen  # Python 3 location of urlopen, matching the question
from bs4 import BeautifulSoup
import re
import csv

html = urlopen("http://www.app12345.com/?area=tw&store=Apple%20Store")
bs0bj = BeautifulSoup(html)

# Collect the text of each matching tag into two parallel lists
GPnameList = [name.get_text() for name in bs0bj.find_all("dd",{"class":re.compile("ddappname")})]
GPcompanyname = [cpa.get_text() for cpa in bs0bj.find_all("dd",{"style":re.compile("color")})]

# zip pairs the i-th name with the i-th category; each pair becomes one comma-separated line
data = '\n'.join([','.join(d) for d in zip(GPnameList, GPcompanyname)])

with open('C:/Users/sa/Desktop/0217.csv','wb') as f:
    f.write(data.encode('utf-8'))
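Since the question asked for a TSV file, the same pairing can also go through the `csv` module with a tab delimiter. This also avoids a limitation of joining with `','` by hand, which does not quote fields that themselves contain a comma. The following is only a sketch under assumptions: `write_two_columns` is a hypothetical helper, and the two lists are stand-ins for `GPnameList` and `GPcompanyname` so that the example runs without fetching the page.

```python
import csv

def write_two_columns(path, names, categories):
    """Write paired names and categories as two tab-separated columns."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f, delimiter="\t")
        # zip pairs the i-th name with the i-th category and stops at the shorter list.
        writer.writerows(zip(names, categories))

# Stand-in data; in the real script these would be GPnameList and GPcompanyname.
names = ["1. 东森新闻云", "2. 创世黎明(Dawn of world)"]
categories = ["新闻", "游戏"]
write_two_columns("0217.tsv", names, categories)
```

Because `zip` stops at the shorter input, it is worth checking that both lists have the same length before writing. Otherwise trailing entries are dropped silently.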