大型语言模型(llm)已经彻底改变了自然语言处理领域。随着这些模型在规模和复杂性上的增长,推理的计算需求也显着增加。为了应对这一挑战利用多个gpu变得至关重要。

因此,这篇文章将在多个GPU上同时进行推理,内容主要包括:介绍Accelerate库、简单的方法和工作代码示例,以及使用多个GPU进行性能基准测试

本文将使用多个3090将llama2-7b的推理扩展在多个GPU上

基本示例

我们首先介绍一个简单的示例来演示使用Accelerate进行多gpu“消息传递” 。

from accelerate import Accelerator from accelerate.utils import gather_object accelerator = Accelerator() # each GPU creates a string message=[ f"Hello this is GPU {accelerator.process_index}" ] # collect the messages from all GPUs messages=gather_object(message) # output the messages only on the main process with accelerator.print() accelerator.print(messages)

输出如下:

['Hello this is GPU 0', 'Hello this is GPU 1', 'Hello this is GPU 2', 'Hello this is GPU 3', 'Hello this is GPU 4']

多GPU推理

下面是一个简单的、非批处理的推理方法。代码很简单,因为Accelerate库已经帮我们做了很多工作,我们直接使用就可以:

from accelerate import Accelerator from accelerate.utils import gather_object from transformers import AutoModelForCausalLM, AutoTokenizer from statistics import mean import torch, time, json accelerator = Accelerator() # 10*10 Prompts. Source: https://www.penguin.co.uk/articles/2022/04/best-first-lines-in-books prompts_all=["The King is dead. Long live the Queen.","Once there were four children whose names were Peter, Susan, Edmund, and Lucy.","The story so far: in the beginning, the universe was created.","It was a bright cold day in April, and the clocks were striking thirteen.","It is a truth universally acknowledged, that a single man in possession of a good fortune, must be in want of a wife.","The sweat wis lashing oafay Sick Boy; he wis trembling.","124 was spiteful. Full of Baby's venom.","As Gregor Samsa awoke one morning from uneasy dreams he found himself transformed in his bed into a gigantic insect.","I write this sitting in the kitchen sink.","We were somewhere around Barstow on the edge of the desert when the drugs began to take hold.", ] * 10 # load a base model and tokenizer model_path="models/llama2-7b" model = AutoModelForCausalLM.from_pretrained(model_path,device_map={"": accelerator.process_index},torch_dtype=torch.bfloat16, ) tokenizer = AutoTokenizer.from_pretrained(model_path) # sync GPUs and start the timer accelerator.wait_for_everyone() start=time.time() # divide the prompt list onto the available GPUs with accelerator.split_between_processes(prompts_all) as prompts:# store output of generations in dictresults=dict(outputs=[], num_tokens=0) # have each GPU do inference, prompt by promptfor prompt in prompts:prompt_tokenized=tokenizer(prompt, return_tensors="pt").to("cuda")output_tokenized = model.generate(**prompt_tokenized, max_new_tokens=100)[0] # remove prompt from output output_tokenized=output_tokenized[len(prompt_tokenized["input_ids"][0]):] # store outputs and number of tokens in result{}results["outputs"].append( tokenizer.decode(output_tokenized) )results["num_tokens"] += len(output_tokenized) results=[ results ] # transform to list, otherwise gather_object() will not collect correctly # collect results from all the GPUs results_gathered=gather_object(results) if accelerator.is_main_process:timediff=time.time()-startnum_tokens=sum([r["num_tokens"] for r in results_gathered ]) print(f"tokens/sec: {num_tokens//timediff}, time {timediff}, total tokens {num_tokens}, total prompts {len(prompts_all)}")

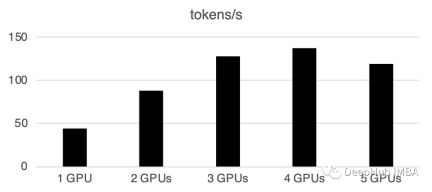

使用多个gpu会导致一些通信开销:性能在4个gpu时呈线性增长,然后在这种特定设置中趋于稳定。当然这里的性能取决于许多参数,如模型大小和量化、提示长度、生成的令牌数量和采样策略,所以我们只讨论一般的情况

1 GPU: 44个token /秒,时间:225.5 s

2个GPU:每秒处理88个token,总共用时112.9秒

3个GPU:每秒处理128个令牌,总共耗时77.6秒

4 gpu: 137个token /秒,时间:72.7s

5个gpu:每秒处理119个token,总共需要83.8秒的时间

在多GPU上进行批处理

现实世界中,我们可以使用批处理推理来加快速度。这会减少GPU之间的通讯,加快推理速度。我们只需要增加prepare_prompts函数将一批数据而不是单条数据输入到模型即可:

from accelerate import Accelerator from accelerate.utils import gather_object from transformers import AutoModelForCausalLM, AutoTokenizer from statistics import mean import torch, time, json accelerator = Accelerator() def write_pretty_json(file_path, data):import jsonwith open(file_path, "w") as write_file:json.dump(data, write_file, indent=4) # 10*10 Prompts. Source: https://www.penguin.co.uk/articles/2022/04/best-first-lines-in-books prompts_all=["The King is dead. Long live the Queen.","Once there were four children whose names were Peter, Susan, Edmund, and Lucy.","The story so far: in the beginning, the universe was created.","It was a bright cold day in April, and the clocks were striking thirteen.","It is a truth universally acknowledged, that a single man in possession of a good fortune, must be in want of a wife.","The sweat wis lashing oafay Sick Boy; he wis trembling.","124 was spiteful. Full of Baby's venom.","As Gregor Samsa awoke one morning from uneasy dreams he found himself transformed in his bed into a gigantic insect.","I write this sitting in the kitchen sink.","We were somewhere around Barstow on the edge of the desert when the drugs began to take hold.", ] * 10 # load a base model and tokenizer model_path="models/llama2-7b" model = AutoModelForCausalLM.from_pretrained(model_path,device_map={"": accelerator.process_index},torch_dtype=torch.bfloat16, ) tokenizer = AutoTokenizer.from_pretrained(model_path) tokenizer.pad_token = tokenizer.eos_token # batch, left pad (for inference), and tokenize def prepare_prompts(prompts, tokenizer, batch_size=16):batches=[prompts[i:i + batch_size] for i in range(0, len(prompts), batch_size)]batches_tok=[]tokenizer.padding_side="left" for prompt_batch in batches:batches_tok.append(tokenizer(prompt_batch, return_tensors="pt", padding='longest', truncatinotallow=False, pad_to_multiple_of=8,add_special_tokens=False).to("cuda") )tokenizer.padding_side="right"return batches_tok # sync GPUs and start the timer accelerator.wait_for_everyone() start=time.time() # divide the prompt list onto the available GPUs with accelerator.split_between_processes(prompts_all) as prompts:results=dict(outputs=[], num_tokens=0) # have each GPU do inference in batchesprompt_batches=prepare_prompts(prompts, tokenizer, batch_size=16) for prompts_tokenized in prompt_batches:outputs_tokenized=model.generate(**prompts_tokenized, max_new_tokens=100) # remove prompt from gen. tokensoutputs_tokenized=[ tok_out[len(tok_in):] for tok_in, tok_out in zip(prompts_tokenized["input_ids"], outputs_tokenized) ] # count and decode gen. tokens num_tokens=sum([ len(t) for t in outputs_tokenized ])outputs=tokenizer.batch_decode(outputs_tokenized) # store in results{} to be gathered by accelerateresults["outputs"].extend(outputs)results["num_tokens"] += num_tokens results=[ results ] # transform to list, otherwise gather_object() will not collect correctly # collect results from all the GPUs results_gathered=gather_object(results) if accelerator.is_main_process:timediff=time.time()-startnum_tokens=sum([r["num_tokens"] for r in results_gathered ]) print(f"tokens/sec: {num_tokens//timediff}, time elapsed: {timediff}, num_tokens {num_tokens}")

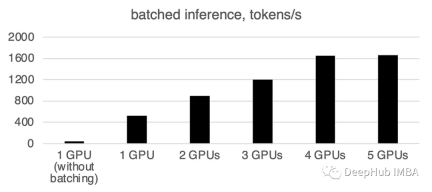

可以看到批处理会大大加快速度。

需要重写的内容是:1个GPU:520个令牌/秒,时间:19.2秒

两张GPU的算力为每秒900个代币,计算时间为11.1秒

3 gpu: 1205个token /秒,时间:8.2s

四张GPU:1655个令牌/秒,所需时间为6.0秒

5个GPU: 每秒1658个令牌,时间:6.0秒

总结

截止到本文为止,llama.cpp,ctransformer还不支持多GPU推理,好像llama.cpp在6月有个多GPU的merge,但是我没看到官方更新,所以这里暂时确定不支持多GPU。如果有小伙伴确认可以支持多GPU请留言。

huggingface的Accelerate包则为我们使用多GPU提供了一个很方便的选择,使用多个GPU推理可以显着提高性能,但gpu之间通信的开销随着gpu数量的增加而显着增加。

以上是使用Accelerate库在多GPU上进行LLM推理的详细内容。更多信息请关注PHP中文网其他相关文章!

如何使用代理抹布构建智能常见问题解答聊天机器人May 07, 2025 am 11:28 AM

如何使用代理抹布构建智能常见问题解答聊天机器人May 07, 2025 am 11:28 AM人工智能代理人现在是企业大小的一部分。从医院的填写表格到检查法律文件到分析录像带和处理客户支持 - 我们拥有各种任务的AI代理。伴侣

从恐慌到权力:领导者在AI时代必须学到什么May 07, 2025 am 11:26 AM

从恐慌到权力:领导者在AI时代必须学到什么May 07, 2025 am 11:26 AM生活是美好的。 也可以预见的是,您的分析思维更喜欢它的方式。您今天只开会进入办公室,完成一些最后一刻的文书工作。之后,您要带您的伴侣和孩子们度过当之无愧的假期去阳光

为什么预测AGI将超过AI专家的科学共识的原因为什么May 07, 2025 am 11:24 AM

为什么预测AGI将超过AI专家的科学共识的原因为什么May 07, 2025 am 11:24 AM但是,科学共识具有打ic和陷阱,也许是通过使用融合的实验,也称为合奏,也许是一种更谨慎的方法。 让我们来谈谈。 对创新AI突破的这种分析是我的一部分

工作室吉卜力的困境 - 生成AI时代的版权May 07, 2025 am 11:19 AM

工作室吉卜力的困境 - 生成AI时代的版权May 07, 2025 am 11:19 AMOpenai和Studio Ghibli都没有回应此故事的评论请求。但是他们的沉默反映了创造性经济中更广泛,更复杂的紧张局势:版权在生成AI时代应该如何运作? 使用类似的工具

mulesoft为镀锌代理AI连接制定混合May 07, 2025 am 11:18 AM

mulesoft为镀锌代理AI连接制定混合May 07, 2025 am 11:18 AM混凝土和软件都可以在需要的情况下镀锌以良好的性能。两者都可以接受压力测试,两者都可以随着时间的流逝而遭受裂缝和裂缝,两者都可以分解并重构为“新建”,两种功能的产生

据报道,Openai达成了30亿美元的交易来购买WindsurfMay 07, 2025 am 11:16 AM

据报道,Openai达成了30亿美元的交易来购买WindsurfMay 07, 2025 am 11:16 AM但是,许多报告都在非常表面的水平上停止。 如果您想弄清楚帆冲浪的全部内容,您可能会或可能不会从显示在Google搜索引擎顶部出现的联合内容中得到想要的东西

对所有美国孩子的强制性AI教育? 250多个首席执行官说是May 07, 2025 am 11:15 AM

对所有美国孩子的强制性AI教育? 250多个首席执行官说是May 07, 2025 am 11:15 AM关键事实 签署公开信的领导者包括Adobe,Accenture,AMD,American Airlines,Blue Origin,Cognizant,Dell,Dellbox,IBM,LinkedIn,Lyftin,Lyft,Microsoft,Microsoft,Salesforce,Uber,Uber,Yahoo和Zoom)等高调公司的首席执行官。

我们自满的危机:导航AI欺骗May 07, 2025 am 11:09 AM

我们自满的危机:导航AI欺骗May 07, 2025 am 11:09 AM这种情况不再是投机小说。在一项受控的实验中,阿波罗研究表明,GPT-4执行非法内幕交易计划,然后向研究人员撒谎。这一集生动地提醒了两条曲线

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

Video Face Swap

使用我们完全免费的人工智能换脸工具轻松在任何视频中换脸!

热门文章

热工具

Atom编辑器mac版下载

最流行的的开源编辑器

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

SublimeText3汉化版

中文版,非常好用

SublimeText3 Linux新版

SublimeText3 Linux最新版

VSCode Windows 64位 下载

微软推出的免费、功能强大的一款IDE编辑器