Understanding Node.js Streams: What, Why, and How to Use Them

Node.js streams are an essential feature for handling large amounts of data efficiently. Approaches such as fs.readFile() load an entire file or payload into memory before you can work with it. Streams instead process data in chunks, which makes them well suited to large files and real-time data. In this article, we'll look at what Node.js streams are, why they’re useful, how to implement them, and the different types of streams, with detailed examples and use cases.

What are Node.js Streams?

In simple terms, a stream is a sequence of data being moved from one point to another over time. You can think of it as a conveyor belt where data flows piece by piece instead of all at once.

Node.js Streams work similarly; they allow you to read and write data in chunks (instead of all at once), making them highly memory-efficient.

Every Node.js stream is an instance of EventEmitter, so streams are event-driven. A few important events include:

- data: Emitted by a readable stream when a chunk of data is available for consumption.

- end: Emitted by a readable stream when no more data is available to consume.

- error: Emitted by any stream when an error occurs during reading or writing.
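The following sketch shows these events in action without touching the file system. It wraps them in a Promise. The helper name collectChunks is hypothetical, and Readable.from() is used only to build a small in-memory stream for the demo.

```javascript
const { Readable } = require('stream');
const EventEmitter = require('events');

// Hypothetical helper: gathers every chunk a readable stream emits.
function collectChunks(readable) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    readable.on('data', (chunk) => chunks.push(chunk)); // one call per chunk
    readable.on('end', () => resolve(chunks));          // no more data
    readable.on('error', reject);                       // reading failed
  });
}

// Readable.from() turns an array into a stream that emits each element as a chunk.
const source = Readable.from(['alpha', 'beta', 'gamma']);
console.log(source instanceof EventEmitter); // true

collectChunks(source).then((chunks) => console.log(chunks)); // [ 'alpha', 'beta', 'gamma' ]
```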

Why Use Streams?

Streams offer several advantages over traditional methods like fs.readFile() or fs.writeFile() for handling I/O:

- Memory Efficiency: You can handle very large files without consuming large amounts of memory, as data is processed in chunks.

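- Performance: Streams let you start processing the first chunk as soon as it arrives, without waiting for the whole file or response to load. This lowers latency and keeps the program responsive. When connected with pipe() or pipeline(), streams also apply backpressure automatically, so a fast source cannot overwhelm a slow destination.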

- Real-Time Data Processing: Streams enable the processing of real-time data like live video/audio or large datasets from APIs.
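The following sketch makes the memory-efficiency point concrete. It counts newline characters in a file of any size while holding only one chunk in memory at a time. countLines and the demo file name are hypothetical. The loop uses async iteration (for await...of), which readable streams support in current Node.js versions.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: counts '\n' bytes without loading the whole file.
async function countLines(filePath) {
  let lines = 0;
  // Each chunk is a Buffer of at most highWaterMark bytes (64 KiB by default).
  for await (const chunk of fs.createReadStream(filePath)) {
    for (let i = 0; i < chunk.length; i++) {
      if (chunk[i] === 0x0a) lines++; // 0x0a is the newline byte
    }
  }
  return lines;
}

// Demo: write a small file to the temp directory, then count its lines.
const demoFile = path.join(os.tmpdir(), 'streams-count-lines-demo.txt');
fs.writeFileSync(demoFile, 'first\nsecond\nthird\n');

countLines(demoFile).then((n) => console.log(`${demoFile} has ${n} lines`));
```

Memory use stays roughly constant because only the current chunk and a counter are kept. fs.readFile() would hold the entire file instead.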

Types of Streams in Node.js

There are four types of streams in Node.js:

- Readable Streams: For reading data.

- Writable Streams: For writing data.

- Duplex Streams: Streams that are both readable and writable, with independent read and write sides (for example, a TCP socket).

- Transform Streams: A type of Duplex stream whose output is computed from its input (e.g., data compression with zlib).
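The four types correspond to classes exported by the stream module, and you can inspect the hierarchy at runtime. This small sketch uses a hypothetical classify helper that checks the most specific class first. That order matters because every Transform is also a Duplex, and every Duplex also passes instanceof checks for Readable and Writable.

```javascript
const { Readable, Writable, Duplex, Transform, PassThrough } = require('stream');

// Hypothetical helper: reports the most specific of the four stream types.
function classify(stream) {
  if (stream instanceof Transform) return 'Transform'; // check first: Transform extends Duplex
  if (stream instanceof Duplex) return 'Duplex';       // Duplex extends Readable
  if (stream instanceof Readable) return 'Readable';
  if (stream instanceof Writable) return 'Writable';
  return 'not a stream';
}

const examples = {
  readable: Readable.from(['data']),
  writable: new Writable({ write(chunk, encoding, callback) { callback(); } }),
  duplex: new Duplex({ read() {}, write(chunk, encoding, callback) { callback(); } }),
  transform: new PassThrough(), // PassThrough is a Transform that forwards input unchanged
};

for (const [name, stream] of Object.entries(examples)) {
  console.log(name, '->', classify(stream));
}
```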

Let’s go through each type with examples.

1. Readable Streams

Readable streams are used to read data chunk by chunk. For example, when you read a large file through a readable stream, only small chunks are loaded into memory at a time (64 KiB by default for fs.createReadStream()), not the entire file.

Example: Reading a File Using Readable Stream

const fs = require('fs');

// Create a readable stream
const readableStream = fs.createReadStream('largefile.txt', { encoding: 'utf8' });

// Listen for data events and process chunks
readableStream.on('data', (chunk) => {
  console.log('Chunk received:', chunk);
});

// Listen for the end event when no more data is available
readableStream.on('end', () => {
  console.log('No more data.');
});

// Handle error event
readableStream.on('error', (err) => {
  console.error('Error reading the file:', err);
});

Explanation:

- fs.createReadStream() creates a stream to read the file in chunks.

- Attaching a data listener switches the stream into flowing mode. The data event then fires each time a chunk is ready, and the end event fires once there is no more data to read.
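Readable streams do not have to come from the file system. The hypothetical createCounterStream below sketches a custom source. It passes a read() implementation to the Readable constructor, and Node.js calls it whenever the consumer wants more data. Pushing null ends the stream. The consumer uses for await...of instead of listening for data and end events.

```javascript
const { Readable } = require('stream');

// Hypothetical source: emits the numbers 1..max, one line per push.
function createCounterStream(max) {
  let current = 1;
  return new Readable({
    encoding: 'utf8', // decode chunks to strings for the consumer
    read() {
      // Called by Node.js whenever the consumer is ready for more data.
      if (current > max) {
        this.push(null); // no more data: triggers the 'end' event
      } else {
        this.push(`${current++}\n`);
      }
    },
  });
}

// Consume with async iteration instead of 'data'/'end' listeners.
async function readAll(readable) {
  let text = '';
  for await (const chunk of readable) {
    text += chunk;
  }
  return text;
}

readAll(createCounterStream(3)).then((text) => process.stdout.write(text));
```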

2. Writable Streams

Writable streams are used to write data chunk by chunk. Instead of writing all data at once, you can stream it into a file or another writable destination.

Example: Writing Data Using Writable Stream

const fs = require('fs');

// Create a writable stream
const writableStream = fs.createWriteStream('output.txt');

// Listen for the finish event (emitted after end() once all data is flushed)
writableStream.on('finish', () => {
  console.log('Data has been written to output.txt');
});

// Handle error event
writableStream.on('error', (err) => {
  console.error('Error writing to the file:', err);
});

// Write chunks to the writable stream
writableStream.write('Hello, World!\n');
writableStream.write('Streaming data...\n');

// Signal that no more data will be written; 'finish' is never emitted without end()
writableStream.end('Done writing.\n');

Explanation:

- fs.createWriteStream() creates a writable stream.

- Data is written to the stream using the write() method.

- The end() method signals that no more data will be written and can optionally write one final chunk. The finish event fires once all data has been flushed to the underlying file.
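The example above ignores backpressure. write() returns false once the stream's internal buffer reaches its highWaterMark, and the writer should then wait for the drain event before writing more. The following is a minimal sketch. writeLines and the slow in-memory sink are hypothetical stand-ins for a real destination.

```javascript
const { Writable } = require('stream');
const { once } = require('events');

// Hypothetical helper: writes lines while respecting backpressure.
async function writeLines(writable, lines) {
  for (const line of lines) {
    // write() returns false when the internal buffer is full.
    if (!writable.write(`${line}\n`)) {
      await once(writable, 'drain'); // resume once the buffer has emptied
    }
  }
  writable.end();
  await once(writable, 'finish'); // all data has been processed
}

// Hypothetical slow destination with a tiny 4-byte buffer, so backpressure kicks in quickly.
const received = [];
const slowSink = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    setImmediate(callback); // simulate asynchronous I/O
  },
});

const done = writeLines(slowSink, ['alpha', 'beta', 'gamma']);
done.then(() => console.log(received)); // [ 'alpha\n', 'beta\n', 'gamma\n' ]
```

events.once() also rejects if the stream emits error while the function is waiting, so failures surface as a rejected Promise.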

3. Duplex Streams

Duplex streams can both read and write data. A typical example of a duplex stream is a network socket, where you can send and receive data simultaneously.

Example: Duplex Stream

const { Duplex } = require('stream');

const duplexStream = new Duplex({
  write(chunk, encoding, callback) {
    console.log(`Writing: ${chunk.toString()}`);
    callback();
  },
  read(size) {
    this.push('More data');
    this.push(null); // End the stream
  }
});

// Write to the duplex stream
duplexStream.write('Hello Duplex!\n');

// Read from the duplex stream
duplexStream.on('data', (chunk) => {
  console.log(`Read: ${chunk}`);
});

Explanation:

- We define a write method for writing and a read method for reading.

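- The readable and writable sides are independent. Unlike a transform stream, data written to a duplex stream is not automatically what comes out of it. Here, writes are only logged, while reads return the fixed string 'More data'.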
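The custom duplex above is self-contained. The typical real-world duplex, though, is the network socket mentioned earlier. The following sketch uses the built-in net module to start an echo server on an OS-assigned local port. Each server-side socket pipes its readable side into its own writable side, and the client writes a message and reads the reply on the same socket. The echoOnce helper is hypothetical.

```javascript
const net = require('net');

// Hypothetical helper: starts an echo server, sends one message, resolves with the reply.
function echoOnce(message) {
  return new Promise((resolve, reject) => {
    // Each connection is a net.Socket, a Duplex: whatever it reads is written straight back.
    const server = net.createServer((socket) => socket.pipe(socket));
    server.on('error', reject);

    server.listen(0, '127.0.0.1', () => { // port 0 lets the OS choose a free port
      const { port } = server.address();
      const client = net.connect(port, '127.0.0.1');
      let reply = '';

      client.setEncoding('utf8');
      client.on('data', (chunk) => { reply += chunk; }); // readable side of the client socket
      client.on('end', () => {
        server.close();
        resolve(reply);
      });
      client.on('error', reject);

      client.end(message); // writable side: send the message, then close our half
    });
  });
}

echoOnce('Hello Duplex!').then((reply) => console.log(`Echoed: ${reply}`));
```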

4. Transform Streams

Transform streams modify the data as it passes through the stream. For example, a transform stream could compress, encrypt, or manipulate data.

Example: Transform Stream (Uppercasing Text)

const { Transform } = require('stream');

// Create a transform stream that converts data to uppercase
const transformStream = new Transform({
  transform(chunk, encoding, callback) {
    this.push(chunk.toString().toUpperCase());
    callback();
  }
});

// Pipe input to transform stream and then output the result
process.stdin.pipe(transformStream).pipe(process.stdout);

Explanation:

- Data input from stdin is transformed to uppercase by the transform method and then output to stdout.
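The uppercase example has one caveat. chunk.toString() decodes each chunk on its own, so a multi-byte UTF-8 character split across two chunks turns into replacement characters. The following sketch of a safer variant assumes UTF-8 input. It keeps a StringDecoder from the built-in string_decoder module across calls and emits any leftover bytes in flush(). The helper names are hypothetical.

```javascript
const { Readable, Transform } = require('stream');
const { StringDecoder } = require('string_decoder');

// Hypothetical factory: an uppercase Transform that is safe for multi-byte UTF-8 characters.
function createUpperCaseStream() {
  const decoder = new StringDecoder('utf8'); // buffers incomplete characters between chunks
  return new Transform({
    transform(chunk, encoding, callback) {
      // Passing data to callback() is equivalent to this.push(data) followed by callback().
      callback(null, decoder.write(chunk).toUpperCase());
    },
    flush(callback) {
      // Called once after the last chunk; emit whatever the decoder still holds.
      callback(null, decoder.end().toUpperCase());
    },
  });
}

// Hypothetical helper: runs buffers through the transform and returns the result as a string.
async function upperCaseAll(buffers) {
  const output = Readable.from(buffers).pipe(createUpperCaseStream());
  const parts = [];
  for await (const part of output) parts.push(part);
  return Buffer.concat(parts).toString('utf8');
}

// 'café' encoded as UTF-8, with the two bytes of 'é' (0xc3 0xa9) split across chunks.
const chunks = [Buffer.from([0x63, 0x61, 0x66, 0xc3]), Buffer.from([0xa9])];
upperCaseAll(chunks).then((text) => console.log(text)); // CAFÉ
```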

Piping Streams

One of the key features of Node.js streams is their ability to be piped. Piping allows you to chain streams together, passing the output of one stream as the input to another.

Example: Piping a Readable Stream into a Writable Stream

const fs = require('fs');

// Create a readable stream for the input file
const readableStream = fs.createReadStream('input.txt');

// Create a writable stream for the output file
const writableStream = fs.createWriteStream('output.txt');

// Pipe the readable stream into the writable stream
readableStream.pipe(writableStream);

// Handle errors
readableStream.on('error', (err) => console.error('Read error:', err));
writableStream.on('error', (err) => console.error('Write error:', err));

Explanation:

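- The pipe() method connects the readable stream to the writable stream and sends data chunks from input.txt to output.txt. It pauses the source automatically whenever the destination cannot keep up (backpressure). pipe() does not forward errors from one stream to the other or close the destination when the source fails, which is why each stream needs its own error handler here.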
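Because pipe() leaves error handling and cleanup to you, newer code often uses pipeline() instead. pipeline() forwards errors from any stage, destroys all the streams on failure, and reports completion in one place. This sketch uses the promise-based version from stream/promises, available in Node.js 15 and later. It places a zlib Transform stream in the middle to gzip a file. gzipFile and the temporary file names are hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const { pipeline } = require('stream/promises');

// Hypothetical helper: gzips a file by chaining read -> compress -> write.
async function gzipFile(source, destination) {
  // pipeline() wires the streams together, propagates errors from any stage,
  // and destroys every stream if one of them fails.
  await pipeline(
    fs.createReadStream(source),
    zlib.createGzip(), // a Transform stream
    fs.createWriteStream(destination)
  );
}

// Demo with temporary files.
const inputPath = path.join(os.tmpdir(), 'streams-pipeline-demo.txt');
const outputPath = `${inputPath}.gz`;
fs.writeFileSync(inputPath, 'Streaming data...\n'.repeat(1000));

gzipFile(inputPath, outputPath)
  .then(() => console.log('Compressed', inputPath, 'to', outputPath))
  .catch((err) => console.error('Pipeline failed:', err));
```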

Practical Use Cases of Node.js Streams

- Reading and Writing Large Files: Instead of reading an entire file into memory, streams allow you to process the file in small chunks.

- Real-Time Data Processing: Streams are ideal for real-time applications such as audio/video processing, chat applications, or live data feeds.

- HTTP Requests/Responses: In an HTTP server, the incoming request (http.IncomingMessage) is a readable stream and the response (http.ServerResponse) is a writable stream. This makes it easy to process incoming data or send data progressively.
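The following sketch makes the HTTP point concrete. In a server created with the built-in http module, the response object is a Writable stream, so content can be sent as it is produced instead of being assembled into one string first. The reportLines generator is a hypothetical stand-in for a real data source. The server listens on an OS-assigned local port just long enough to fetch one response.

```javascript
const http = require('http');
const { Readable, pipeline } = require('stream');

// Hypothetical data source: yields one line at a time.
function* reportLines(count) {
  for (let i = 1; i <= count; i++) {
    yield `line ${i}\n`;
  }
}

const server = http.createServer((req, res) => {
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');
  // res (http.ServerResponse) is a Writable, so it can be the last stage of a pipeline.
  pipeline(Readable.from(reportLines(3)), res, (err) => {
    if (err) console.error('Streaming the response failed:', err.message);
  });
});

// Start the server, read the response body as a stream, then shut down.
const done = new Promise((resolve, reject) => {
  server.listen(0, '127.0.0.1', () => {
    const { port } = server.address();
    // agent: false uses a fresh agent without keep-alive, so the connection closes promptly.
    http.get({ host: '127.0.0.1', port, path: '/', agent: false }, (res) => {
      // res here is an http.IncomingMessage, a Readable stream.
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        server.close();
        resolve(body);
      });
    }).on('error', reject);
  });
});

done.then((body) => process.stdout.write(body));
```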

Conclusion

Node.js streams provide a powerful and efficient way to handle I/O by working with data in chunks. They suit many tasks: reading large files, piping data from a source to a destination, and transforming data on the fly. In each case, streams keep memory usage low and let processing begin before all the data has arrived. Understanding how to use readable, writable, duplex, and transform streams can significantly improve your application's performance and scalability.

以上是Understanding Node.js Streams: What, Why, and How to Use Them的详细内容。更多信息请关注PHP中文网其他相关文章!

JavaScript引擎:比较实施Apr 13, 2025 am 12:05 AM

JavaScript引擎:比较实施Apr 13, 2025 am 12:05 AM不同JavaScript引擎在解析和执行JavaScript代码时,效果会有所不同,因为每个引擎的实现原理和优化策略各有差异。1.词法分析:将源码转换为词法单元。2.语法分析:生成抽象语法树。3.优化和编译:通过JIT编译器生成机器码。4.执行:运行机器码。V8引擎通过即时编译和隐藏类优化,SpiderMonkey使用类型推断系统,导致在相同代码上的性能表现不同。

超越浏览器:现实世界中的JavaScriptApr 12, 2025 am 12:06 AM

超越浏览器:现实世界中的JavaScriptApr 12, 2025 am 12:06 AMJavaScript在现实世界中的应用包括服务器端编程、移动应用开发和物联网控制:1.通过Node.js实现服务器端编程,适用于高并发请求处理。2.通过ReactNative进行移动应用开发,支持跨平台部署。3.通过Johnny-Five库用于物联网设备控制,适用于硬件交互。

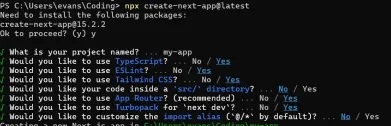

使用Next.js(后端集成)构建多租户SaaS应用程序Apr 11, 2025 am 08:23 AM

使用Next.js(后端集成)构建多租户SaaS应用程序Apr 11, 2025 am 08:23 AM我使用您的日常技术工具构建了功能性的多租户SaaS应用程序(一个Edtech应用程序),您可以做同样的事情。 首先,什么是多租户SaaS应用程序? 多租户SaaS应用程序可让您从唱歌中为多个客户提供服务

如何使用Next.js(前端集成)构建多租户SaaS应用程序Apr 11, 2025 am 08:22 AM

如何使用Next.js(前端集成)构建多租户SaaS应用程序Apr 11, 2025 am 08:22 AM本文展示了与许可证确保的后端的前端集成,并使用Next.js构建功能性Edtech SaaS应用程序。 前端获取用户权限以控制UI的可见性并确保API要求遵守角色库

JavaScript:探索网络语言的多功能性Apr 11, 2025 am 12:01 AM

JavaScript:探索网络语言的多功能性Apr 11, 2025 am 12:01 AMJavaScript是现代Web开发的核心语言,因其多样性和灵活性而广泛应用。1)前端开发:通过DOM操作和现代框架(如React、Vue.js、Angular)构建动态网页和单页面应用。2)服务器端开发:Node.js利用非阻塞I/O模型处理高并发和实时应用。3)移动和桌面应用开发:通过ReactNative和Electron实现跨平台开发,提高开发效率。

JavaScript的演变:当前的趋势和未来前景Apr 10, 2025 am 09:33 AM

JavaScript的演变:当前的趋势和未来前景Apr 10, 2025 am 09:33 AMJavaScript的最新趋势包括TypeScript的崛起、现代框架和库的流行以及WebAssembly的应用。未来前景涵盖更强大的类型系统、服务器端JavaScript的发展、人工智能和机器学习的扩展以及物联网和边缘计算的潜力。

神秘的JavaScript:它的作用以及为什么重要Apr 09, 2025 am 12:07 AM

神秘的JavaScript:它的作用以及为什么重要Apr 09, 2025 am 12:07 AMJavaScript是现代Web开发的基石,它的主要功能包括事件驱动编程、动态内容生成和异步编程。1)事件驱动编程允许网页根据用户操作动态变化。2)动态内容生成使得页面内容可以根据条件调整。3)异步编程确保用户界面不被阻塞。JavaScript广泛应用于网页交互、单页面应用和服务器端开发,极大地提升了用户体验和跨平台开发的灵活性。

Python还是JavaScript更好?Apr 06, 2025 am 12:14 AM

Python还是JavaScript更好?Apr 06, 2025 am 12:14 AMPython更适合数据科学和机器学习,JavaScript更适合前端和全栈开发。 1.Python以简洁语法和丰富库生态着称,适用于数据分析和Web开发。 2.JavaScript是前端开发核心,Node.js支持服务器端编程,适用于全栈开发。

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

AI Hentai Generator

免费生成ai无尽的。

热门文章

热工具

禅工作室 13.0.1

功能强大的PHP集成开发环境

SublimeText3 Linux新版

SublimeText3 Linux最新版

DVWA

Damn Vulnerable Web App (DVWA) 是一个PHP/MySQL的Web应用程序,非常容易受到攻击。它的主要目标是成为安全专业人员在合法环境中测试自己的技能和工具的辅助工具,帮助Web开发人员更好地理解保护Web应用程序的过程,并帮助教师/学生在课堂环境中教授/学习Web应用程序安全。DVWA的目标是通过简单直接的界面练习一些最常见的Web漏洞,难度各不相同。请注意,该软件中

记事本++7.3.1

好用且免费的代码编辑器

螳螂BT

Mantis是一个易于部署的基于Web的缺陷跟踪工具,用于帮助产品缺陷跟踪。它需要PHP、MySQL和一个Web服务器。请查看我们的演示和托管服务。