YOLOv10 Is Here! Truly Real-Time End-to-End Object Detection

- WBOY (original)

- 2024-06-09 17:29:31 · 1178 views

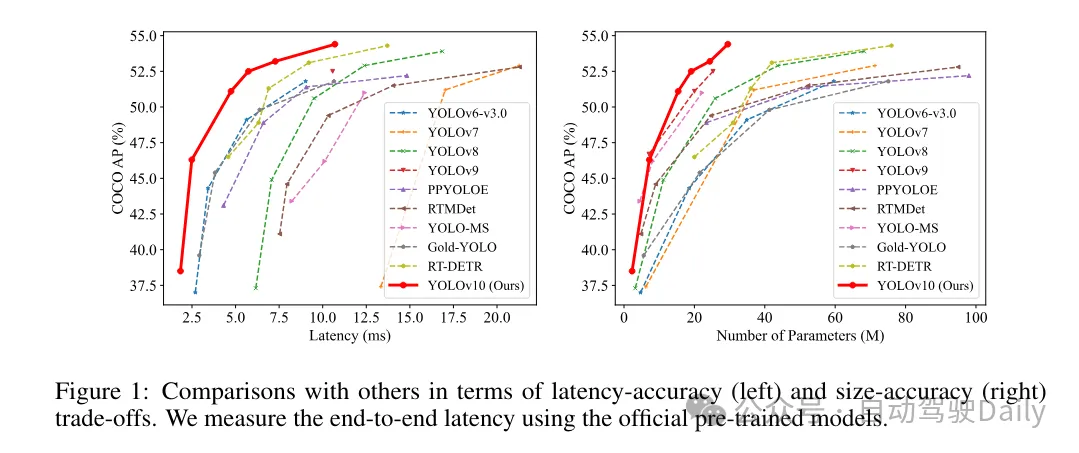

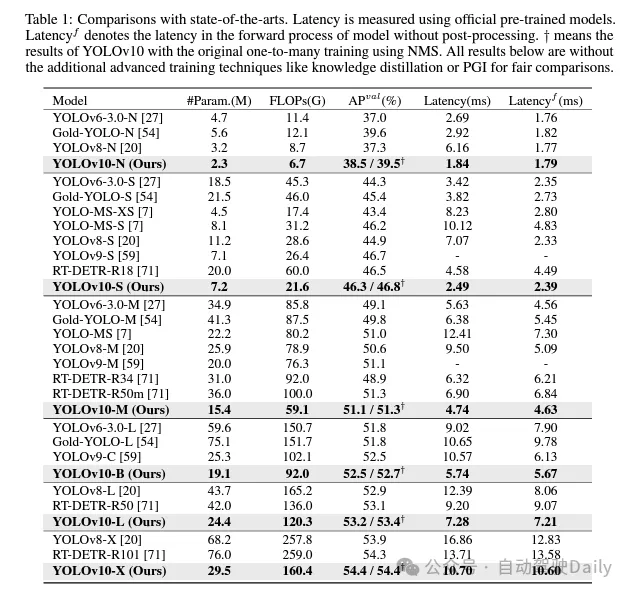

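Over the past few years, YOLOs have become the dominant paradigm in real-time object detection because they strike an effective balance between computational cost and detection performance. Researchers have explored their architectural designs, optimization objectives, data augmentation strategies, and more, and have made notable progress. However, the reliance on non-maximum suppression (NMS) for post-processing hampers the end-to-end deployment of YOLOs and adversely affects inference latency. In addition, the individual components of YOLOs have never been inspected comprehensively, which leaves noticeable computational redundancy and limits model capability. The result is suboptimal efficiency, along with considerable room for performance improvement. In this work, we aim to further advance the performance-efficiency boundary of YOLOs from both the post-processing and the model architecture. To this end, we first present consistent dual assignments for NMS-free training of YOLOs, which brings competitive performance and low inference latency at the same time. We also introduce a holistic efficiency-accuracy driven model design strategy for YOLOs. We comprehensively optimize the various components of YOLOs from both the efficiency and the accuracy perspective, which greatly reduces computational overhead and enhances capability. The outcome is a new generation of the YOLO series for real-time end-to-end object detection, named YOLOv10. Extensive experiments show that YOLOv10 achieves state-of-the-art performance and efficiency across model scales. For example, on the COCO dataset, YOLOv10-S is 1.8× faster than RT-DETR-R18 at a similar AP, with 2.8× fewer parameters and FLOPs. Compared with YOLOv9-C, YOLOv10-B has 46% lower latency and 25% fewer parameters at the same performance. Code: https://github.com/THU-MIG/yolov10.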

What does YOLOv10 improve?

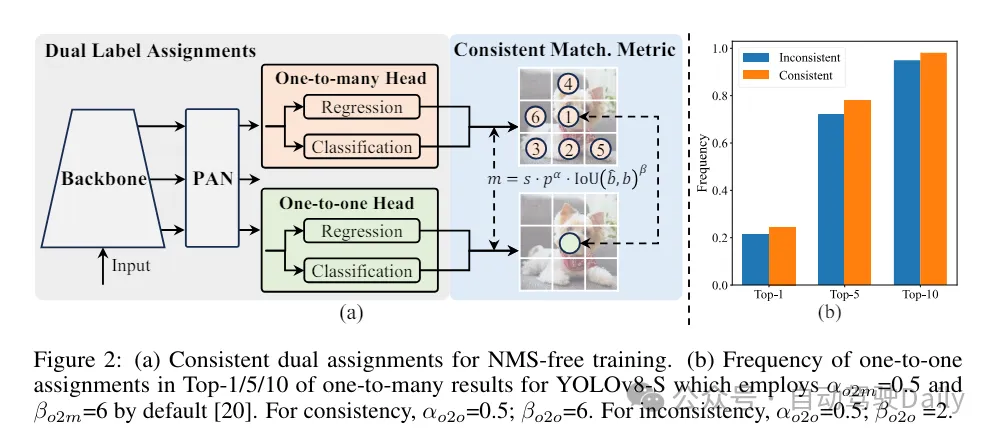

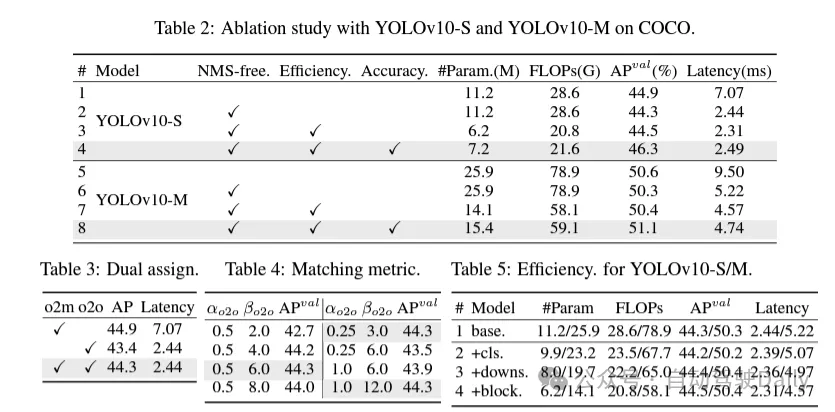

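First, a consistent dual assignments strategy for NMS-free YOLOs addresses the problem of redundant predictions in post-processing. The strategy consists of dual label assignments and a consistent matching metric. It lets the model receive rich and harmonious supervision during training while removing the need for NMS at inference, which yields competitive performance with high efficiency.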

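Second, a holistic efficiency-accuracy driven model design strategy is proposed for the architecture, with a comprehensive inspection of the individual components of YOLOs. For efficiency, the paper proposes a lightweight classification head, spatial-channel decoupled downsampling, and a rank-guided block design, which reduce the evident computational redundancy and give a more efficient architecture.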

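For accuracy, the paper explores large-kernel convolution and proposes an effective partial self-attention module to enhance model capability, tapping the potential for performance gains at low cost.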

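Building on these methods, the authors obtain a family of real-time end-to-end detectors at different model scales: YOLOv10-N / S / M / B / L / X. Extensive experiments on standard object detection benchmarks show that YOLOv10 outperforms previous state-of-the-art models in the computation-accuracy trade-off across model scales. As shown in Figure 1, YOLOv10-S / X are 1.8× / 1.3× faster than RT-DETR-R18 / R101, respectively, at similar performance. Compared with YOLOv9-C, YOLOv10-B achieves a 46% reduction in latency at the same performance. YOLOv10 also uses its parameters very efficiently. YOLOv10-L / X outperform YOLOv8-L / X by 0.3 AP and 0.5 AP with 1.8× and 2.3× fewer parameters, respectively. YOLOv10-M reaches an AP similar to YOLOv9-M / YOLO-MS with 23% / 31% fewer parameters, respectively.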

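During training, YOLOs usually use TAL (Task Alignment Learning) to assign multiple positive samples to each instance. This one-to-many assignment produces abundant supervision signals, which helps optimization and leads to stronger performance. However, it also forces YOLOs to rely on NMS post-processing, so inference efficiency at deployment is suboptimal. Previous work has explored one-to-one matching to suppress redundant predictions, but it usually adds inference overhead or yields suboptimal performance. In this work, we propose an NMS-free training strategy for YOLOs that combines dual label assignments with a consistent matching metric. With this strategy, our YOLOs no longer need NMS at inference, achieving both high efficiency and competitive performance.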
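The interplay of the two assignments can be sketched in a few lines. This is a minimal illustration, assuming both branches rank candidate predictions with the same metric of the form m = s · p^α · IoU^β (the usual task-aligned form); the function names, the α/β values, and the sample scores are illustrative assumptions and are not taken from the official code.

```python
# Illustrative sketch only: names, alpha/beta values and scores are assumptions.

def matching_metric(cls_score, iou, alpha=0.5, beta=6.0, spatial_prior=1.0):
    """m = s * p^alpha * IoU^beta, a task-aligned style matching metric."""
    return spatial_prior * (cls_score ** alpha) * (iou ** beta)


def assign(preds, top_k):
    """Return the indices of the top_k predictions ranked by the matching metric."""
    ranked = sorted(range(len(preds)),
                    key=lambda i: matching_metric(*preds[i]),
                    reverse=True)
    return ranked[:top_k]


# (classification score, IoU with the ground-truth box) for five candidates
preds = [(0.90, 0.60), (0.70, 0.85), (0.80, 0.80), (0.30, 0.95), (0.95, 0.40)]

one_to_many = assign(preds, top_k=3)  # several positives: rich supervision in training
one_to_one = assign(preds, top_k=1)   # a single positive: no duplicates, so no NMS

print("one-to-many positives:", one_to_many)
print("one-to-one positive:  ", one_to_one)
```

Because both branches share the same metric here, the single one-to-one positive is always the best one-to-many positive, so the two sources of supervision pull in the same direction.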

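Efficiency-driven model design. The components of a YOLO model are the stem, the downsampling layers, the stages with basic building blocks, and the head. The stem incurs little computational cost, so we apply efficiency-driven design to the other three parts.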

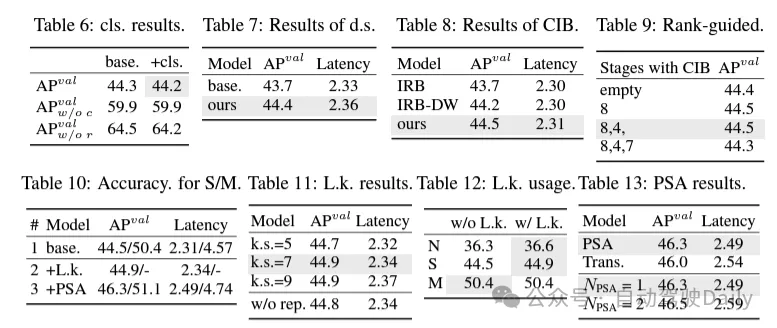

(1) Lightweight classification head. In YOLOs, the classification head and the regression head usually share the same architecture, yet their computational overhead differs markedly. In YOLOv8-S, for example, the FLOPs and parameter count of the classification head (5.95G / 1.51M) are 2.5× and 2.4× those of the regression head (2.34G / 0.64M), respectively. However, an analysis of the impact of classification error and regression error (see Table 6) shows that the regression head matters more for the performance of YOLOs. The overhead of the classification head can therefore be reduced without much risk to performance. We simply adopt a lightweight classification head that consists of two depthwise separable convolutions with a 3×3 kernel, followed by a 1×1 convolution. This simplified head performs classification with lower computational overhead and fewer parameters.
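To get a feel for the saving, the weight counts of the two head layouts can be compared directly. This is a rough sketch: the channel width of 128 and the 80 classes are hypothetical values chosen for illustration, biases and normalization layers are ignored, and the actual channel widths in the official model may differ.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution (bias and normalization ignored)."""
    return (c_in // groups) * c_out * k * k


def standard_head(c, num_classes):
    # two regular 3x3 convolutions followed by a 1x1 classifier
    return 2 * conv_params(c, c, 3) + conv_params(c, num_classes, 1)


def lightweight_head(c, num_classes):
    # each depthwise separable conv = 3x3 depthwise + 1x1 pointwise
    separable = conv_params(c, c, 3, groups=c) + conv_params(c, c, 1)
    return 2 * separable + conv_params(c, num_classes, 1)


c, num_classes = 128, 80  # hypothetical width and class count
std = standard_head(c, num_classes)
light = lightweight_head(c, num_classes)
print(f"standard: {std}, lightweight: {light}, ratio: {std / light:.2f}")
```

The depthwise 3×3 layers contribute only 9 weights per channel, so nearly all remaining parameters sit in the 1×1 convolutions.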

(2) Spatial-channel decoupled downsampling. YOLOs usually use a standard 3×3 convolution with a stride of 2, which performs spatial downsampling (from H × W to H/2 × W/2) and channel transformation (from C to 2C) at the same time. This introduces a non-negligible computational cost of $\mathcal{O}(\tfrac{9}{2}HWC^2)$ and a parameter count of $\mathcal{O}(18C^2)$. Instead, we propose to decouple the spatial reduction and the channel increase for more efficient downsampling. Specifically, a pointwise convolution first modulates the channel dimension, and a depthwise convolution then performs the spatial downsampling. This reduces the computational cost to $\mathcal{O}(2HWC^2 + \tfrac{9}{2}HWC)$ and the parameter count to $\mathcal{O}(2C^2 + 18C)$. It also retains as much information as possible during downsampling, so latency drops while performance stays competitive.
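Counting multiply-accumulates (MACs) and weights for both layouts makes the saving concrete. This is a small sketch that follows the description above (3×3 stride-2 convolution from C to 2C versus a 1×1 pointwise convolution followed by a 3×3 stride-2 depthwise convolution); the feature-map size and channel count are hypothetical, and biases and normalization are ignored.

```python
def standard_downsample(h, w, c):
    """3x3 conv, stride 2, C -> 2C. Returns (MACs, parameters)."""
    macs = (h // 2) * (w // 2) * 9 * c * (2 * c)   # = 9/2 * H * W * C^2
    params = 9 * c * (2 * c)                       # = 18 * C^2
    return macs, params


def decoupled_downsample(h, w, c):
    """1x1 pointwise conv C -> 2C, then 3x3 depthwise conv with stride 2."""
    pw_macs = h * w * c * (2 * c)                  # = 2 * H * W * C^2
    dw_macs = (h // 2) * (w // 2) * 9 * (2 * c)    # = 9/2 * H * W * C
    params = c * (2 * c) + 9 * (2 * c)             # = 2 * C^2 + 18 * C
    return pw_macs + dw_macs, params


h, w, c = 80, 80, 128  # hypothetical feature-map size and channel count
std = standard_downsample(h, w, c)
dec = decoupled_downsample(h, w, c)
print("standard  (MACs, params):", std)
print("decoupled (MACs, params):", dec)
```

For these sizes the decoupled variant needs less than half the MACs and roughly an eighth of the weights.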

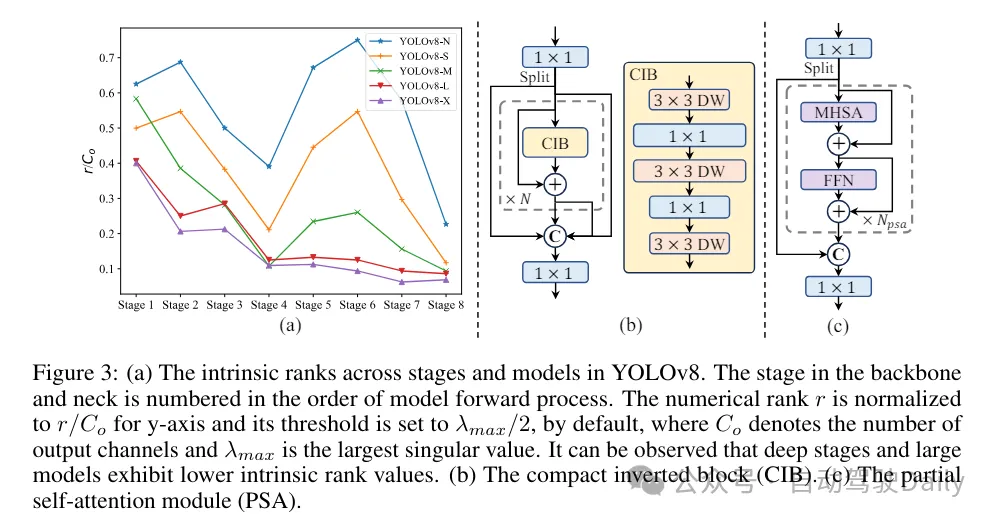

(3) Rank-guided block design. YOLOs typically use the same basic building block in every stage, such as the bottleneck block in YOLOv8. To examine this homogeneous design thoroughly, we use the intrinsic rank to analyze the redundancy of each stage. Specifically, we compute the numerical rank of the last convolution in the last basic block of each stage, that is, the number of singular values larger than a threshold. Figure 3(a) shows the results for YOLOv8: deep stages and large models tend to exhibit more redundancy. This observation suggests that applying the same block design to all stages is suboptimal for the capacity-efficiency trade-off. To address this, we propose a rank-guided block design scheme, which reduces the complexity of the stages shown to be redundant by using a compact architecture.

We first introduce a compact inverted block (CIB) structure, which uses cheap depthwise convolutions for spatial mixing and cost-effective pointwise convolutions for channel mixing, as shown in Figure 3(b). It can serve as an efficient basic building block, for example embedded in the ELAN structure (Figure 3(b)). We then advocate a rank-guided block allocation strategy to achieve the best efficiency while maintaining competitive capacity. Specifically, given a model, we sort all of its stages in ascending order of intrinsic rank. We then inspect how performance changes when the basic block in the leading stage is replaced with CIB. If performance does not degrade compared with the given model, we proceed to replace the next stage; otherwise we stop. As a result, we obtain adaptive compact block designs across stages and model scales, achieving higher efficiency without compromising performance.
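The allocation procedure is essentially a greedy loop, sketched below. Several things here are assumptions for illustration: the threshold of half the largest singular value, the normalization of the rank by the number of singular values, the `evaluate` callback (a stand-in for training and evaluating a model with the given stages replaced by CIB), and the toy singular values and scores.

```python
def numerical_rank(singular_values, ratio=0.5):
    """Count singular values above ratio * largest one (threshold is an assumption)."""
    threshold = ratio * max(singular_values)
    return sum(1 for s in singular_values if s > threshold)


def rank_guided_allocation(stage_svs, evaluate, baseline, tolerance=0.0):
    """Greedily replace stages with CIB, starting from the lowest-rank stage.

    stage_svs: dict mapping stage name -> singular values of its last convolution
    evaluate:  hypothetical callback returning the score of a model whose listed
               stages use CIB
    """
    # normalized rank, so stages of different width are comparable (an assumption)
    order = sorted(stage_svs,
                   key=lambda s: numerical_rank(stage_svs[s]) / len(stage_svs[s]))
    replaced = []
    for stage in order:
        candidate = replaced + [stage]
        if evaluate(candidate) < baseline - tolerance:
            break  # performance degraded: stop replacing
        replaced = candidate
    return replaced


# Toy data: stage3 is the most redundant, stage1 the least.
stage_svs = {
    "stage1": [5.0, 4.0, 3.5, 3.0],
    "stage2": [6.0, 4.0, 2.0, 1.0],
    "stage3": [8.0, 3.0, 1.0, 0.5],
}


def toy_evaluate(cib_stages):
    # Pretend the score only drops once stage1 is replaced.
    return 0.9 if "stage1" in cib_stages else 1.0


cib_stages = rank_guided_allocation(stage_svs, toy_evaluate, baseline=1.0)
print(cib_stages)
```

In practice each call to `evaluate` means training a model variant, which is why the procedure stops at the first degradation instead of searching all combinations.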

Accuracy-driven model design. The paper further explores large-kernel convolution and self-attention for accuracy-driven design, aiming to improve performance at minimal cost.

(1) Large-kernel convolution. Using large-kernel depthwise convolution is an effective way to enlarge the receptive field and enhance model capability. However, using it in every stage may contaminate the shallow features used to detect small objects, and it also introduces significant I/O overhead and latency in the high-resolution stages. The authors therefore propose to use large-kernel depthwise convolutions only in the compact inverted blocks (CIB) of the deep stages. Specifically, the kernel size of the second 3×3 depthwise convolution in CIB is increased to 7×7. In addition, structural reparameterization is used to add another 3×3 depthwise convolution branch, which alleviates the optimization difficulty without adding inference overhead. Furthermore, as the model size grows, its receptive field expands naturally and the benefit of large-kernel convolutions diminishes. Large-kernel convolutions are therefore used only for small model scales.
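Why the extra 3×3 branch costs nothing at inference can be checked numerically: convolution is linear, so a 3×3 kernel zero-padded to 7×7 can be added into the 7×7 kernel once training is done. The sketch below uses a single channel and toy integer weights; all names are illustrative, and the folding of normalization layers that a real reparameterization also performs is left out.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding ('same' output size)."""
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    yy, xx = y + i - pad, x + j - pad
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[i][j]
            out[y][x] = acc
    return out


def merge_kernels(large, small):
    """Zero-pad the small kernel to the size of the large one and add them."""
    offset = (len(large) - len(small)) // 2
    merged = [row[:] for row in large]
    for i, row in enumerate(small):
        for j, value in enumerate(row):
            merged[i + offset][j + offset] += value
    return merged


# Integer-valued toy weights and input, so the comparison is exact.
k7 = [[(i * 7 + j) % 5 - 2 for j in range(7)] for i in range(7)]
k3 = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
image = [[(3 * y + x) % 7 for x in range(9)] for y in range(9)]

# Training-time form: two parallel branches whose outputs are summed.
two_branch = [[a + b for a, b in zip(row7, row3)]
              for row7, row3 in zip(conv2d_same(image, k7), conv2d_same(image, k3))]
# Inference-time form: one 7x7 convolution with the merged kernel.
single = conv2d_same(image, merge_kernels(k7, k3))

assert two_branch == single
```

The same argument applies per channel to a depthwise convolution, which is why only the single merged 7×7 kernel needs to be kept for deployment.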

(2) Partial self-attention (PSA). Self-attention is widely used in visual tasks thanks to its strong global modeling capability, but it has high computational complexity and a large memory footprint. To address this, and in view of the prevalent redundancy among attention heads, the authors propose an efficient partial self-attention (PSA) module, shown in Figure 3(c). Specifically, after a 1×1 convolution the features are split evenly into two parts along the channel dimension. Only one part is fed into the N_PSA blocks, each consisting of a multi-head self-attention module (MHSA) and a feed-forward network (FFN). The two parts are then concatenated and fused by a 1×1 convolution. In addition, the dimensions of the queries and keys in MHSA are set to half that of the values, and LayerNorm is replaced with BatchNorm for fast inference. PSA is placed only after stage 4, which has the lowest resolution, to avoid the excessive overhead caused by the quadratic complexity of self-attention. In this way, global representation learning can be incorporated into YOLOs at low computational cost, which enhances model capability and improves performance.
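A back-of-the-envelope count shows why the placement matters. The sketch below assumes a 640×640 input, feature-map strides of 8, 16, and 32, and a channel dimension of 128; all three are illustrative assumptions. It counts only the two matrix products that scale quadratically with the number of tokens.

```python
def attention_cost(input_size, stride, dim):
    """Rough MACs of the QK^T and attention-times-V products for one attention layer."""
    tokens = (input_size // stride) ** 2
    return tokens, 2 * tokens * tokens * dim


for stride in (8, 16, 32):
    tokens, macs = attention_cost(640, stride, dim=128)
    print(f"stride {stride:2d}: {tokens:5d} tokens, about {macs / 1e9:.2f} GMACs")
```

Each halving of the resolution cuts the token count by 4 and the quadratic term by 16, so attention at the lowest-resolution stage is far cheaper than at the earlier ones.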

Experimental Comparison

I won't go into much detail here. The results speak for themselves: latency goes down while performance keeps going up.
