Recently I have been playing with Hadoop to analyze a data set I scraped from WEIBO.COM. After several attempts, most of which failed because the disk ran out of space, I reduced the volume of the input data set and, luckily, got a completed Hadoop job result. Sadly, though, only 1,000 records were processed.

Here is the Job Summary:

| Counter | Map | Reduce | Total |
|---|---:|---:|---:|
| Bytes Read | 7,945,196 | 0 | 7,945,196 |
| FILE_BYTES_READ | 16,590,565,518 | 8,021,579,181 | 24,612,144,699 |
| HDFS_BYTES_READ | 7,945,580 | 0 | 7,945,580 |
| FILE_BYTES_WRITTEN | 24,612,303,774 | 8,021,632,091 | 32,633,935,865 |
| HDFS_BYTES_WRITTEN | 0 | 2,054,409,494 | 2,054,409,494 |
| Reduce input groups | 0 | 381,696,888 | 381,696,888 |
| Map output materialized bytes | 8,021,579,181 | 0 | 8,021,579,181 |
| Combine output records | 826,399,600 | 0 | 826,399,600 |
| Map input records | 1,000 | 0 | 1,000 |
| Reduce shuffle bytes | 0 | 8,021,579,181 | 8,021,579,181 |
| Physical memory (bytes) snapshot | 1,215,041,536 | 72,613,888 | 1,287,655,424 |
| Reduce output records | 0 | 381,696,888 | 381,696,888 |
| Spilled Records | 1,230,714,511 | 401,113,702 | 1,631,828,213 |
| Map output bytes | 7,667,457,405 | 0 | 7,667,457,405 |
| Total committed heap usage (bytes) | 1,038,745,600 | 29,097,984 | 1,067,843,584 |
| CPU time spent (ms) | 2,957,800 | 2,104,030 | 5,061,830 |
| Virtual memory (bytes) snapshot | 4,112,838,656 | 1,380,306,944 | 5,493,145,600 |
| SPLIT_RAW_BYTES | 384 | 0 | 384 |
| Map output records | 426,010,418 | 0 | 426,010,418 |
| Combine input records | 851,296,316 | 0 | 851,296,316 |
| Reduce input records | 0 | 401,113,702 | 401,113,702 |

From this summary, particularly the Bytes Read, Map input records, Reduce output records and HDFS_BYTES_WRITTEN counters, we can see that I passed in only about 7.9 MB of data (7,945,196 bytes) containing 1,000 records, yet the reducers output 381,696,888 records, which take up 2.1 GB as a gzip-compressed file and some 9 GB as plain text when decompressed.
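To put these counters in perspective, here is a small back-of-the-envelope calculation that uses only the totals from the Job Summary table. The variable names are my own labels, not Hadoop identifiers; the script simply divides output-side counters by input-side ones to show how much the job amplified the data.

```python
# Totals copied from the Job Summary table; names are illustrative labels.
INPUT_BYTES = 7_945_196               # Bytes Read
INPUT_RECORDS = 1_000                 # Map input records
MAP_OUTPUT_RECORDS = 426_010_418      # Map output records
MAP_OUTPUT_BYTES = 7_667_457_405      # Map output bytes
LOCAL_BYTES_WRITTEN = 32_633_935_865  # FILE_BYTES_WRITTEN (map + reduce)

# How many key/value pairs each input record turned into on average.
map_outputs_per_record = MAP_OUTPUT_RECORDS / INPUT_RECORDS
# Average serialized size of one map output record.
bytes_per_map_record = MAP_OUTPUT_BYTES / MAP_OUTPUT_RECORDS
# Growth of the data from input file to map output.
map_amplification = MAP_OUTPUT_BYTES / INPUT_BYTES
# Bytes written to local (non-HDFS) disk per input byte, i.e. spills and shuffle files.
local_disk_amplification = LOCAL_BYTES_WRITTEN / INPUT_BYTES

print(f"map output records per input record: {map_outputs_per_record:,.0f}")
print(f"average map output record size: {bytes_per_map_record:.1f} bytes")
print(f"map output bytes / input bytes: {map_amplification:,.0f}x")
print(f"local disk bytes written / input bytes: {local_disk_amplification:,.0f}x")
```

On these numbers each input record produced roughly 426,000 map output records of about 18 bytes each, and the job wrote more than 4,000 bytes to local disk for every byte of input, which fits the disk-space failures on the larger inputs.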

But it is clearly not a bug in my code that leads to so much disk usage (FILE_BYTES_WRITTEN shows over 32 GB written to local disk). The metrics above are all reasonable, even though the contrast between a 7.9 MB input of only 1,000 records and a 9 GB output of 381,696,888 records may surprise you. The reason is that I'm computing co-occurrence combinations, and the number of combinations grows far faster than the number of input records.
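The post does not show the job's code, so the following is only a hypothetical sketch of a pair-counting co-occurrence job, simulated locally in plain Python rather than run on Hadoop. It assumes each record is a whitespace-separated list of items (for example, the words or user names in one scraped post) and that pairs are formed only within a record; `map_record`, `reduce_pairs` and `run_job` are illustrative names. A record with n distinct items emits n(n-1)/2 pairs, which is how a small input can explode in size.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, Iterator, Tuple

Pair = Tuple[str, str]


def map_record(record: str) -> Iterator[Tuple[Pair, int]]:
    """Emit ((a, b), 1) for every unordered pair of distinct items in one record.

    Hypothetical input format: whitespace-separated items per record.
    """
    items = sorted(set(record.split()))  # dedupe and sort so (a, b) == (b, a)
    for a, b in combinations(items, 2):
        yield (a, b), 1


def reduce_pairs(mapped: Iterable[Tuple[Pair, int]]) -> Counter:
    """Sum the counts per pair, as a combiner or reducer would."""
    totals: Counter = Counter()
    for pair, count in mapped:
        totals[pair] += count
    return totals


def run_job(records: Iterable[str]) -> Counter:
    """Run the map and reduce phases in memory over all records."""
    return reduce_pairs(kv for record in records for kv in map_record(record))
```

For example, `run_job(["a b c", "a b d"])` counts `("a", "b")` twice and four other pairs once each, while a single record of 1,000 distinct items would on its own emit 499,500 map outputs.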

From this experiment I learned that my personal computer really cannot handle the big elephant: I had to cut the input from the first 10,000 records down to 5,000, then 3,000, and finally just 1,000. But the data analysis has to go on, so I need to find a way to make it work; in fact, I have 30 times as much data to process, that is, 30,000 records.
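A rough way to gauge what the full 30,000 records would need is to scale the counters from the Job Summary table linearly. This is a hypothetical estimate: it assumes the remaining records resemble the 1,000 already processed and that pairs are formed within each record, so map-side volume grows in proportion to the record count. `linear_estimate` is an illustrative helper, not part of Hadoop.

```python
# Counters from the 1,000-record run (Job Summary table).
RUN_RECORDS = 1_000
LOCAL_BYTES_WRITTEN = 32_633_935_865     # FILE_BYTES_WRITTEN, total
MAP_OUTPUT_MATERIALIZED = 8_021_579_181  # Map output materialized bytes

GB = 1e9  # decimal gigabytes, matching the "2.1GB" figure in the post


def linear_estimate(counter_value: int, target_records: int,
                    run_records: int = RUN_RECORDS) -> float:
    """Scale a counter in proportion to the number of input records.

    Assumption: every record yields about as many pairs as in the 1,000-record
    run, so map-side data grows linearly with the record count.
    """
    return counter_value * target_records / run_records


for name, value in [("local disk written", LOCAL_BYTES_WRITTEN),
                    ("map output materialized", MAP_OUTPUT_MATERIALIZED)]:
    estimate = linear_estimate(value, 30_000)
    print(f"{name}: ~{estimate / GB:,.0f} GB for 30,000 records")
```

Under that assumption the job would write roughly 979 GB to local disk, with about 241 GB of materialized map output. Reducer output counts distinct pairs, which can never exceed the number of pairs the mappers emit, so it should grow no faster than this.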

There is still a lot of work to do, and I plan to post some articles about what I have done so far with my big data :) and Hadoop.

---EOF---

Original article: My First Lucky and Sad Hadoop Results. Thanks to the original author for sharing.

Java错误:Hadoop错误,如何处理和避免Jun 24, 2023 pm 01:06 PM

Java错误:Hadoop错误,如何处理和避免Jun 24, 2023 pm 01:06 PMJava错误:Hadoop错误,如何处理和避免当使用Hadoop处理大数据时,常常会遇到一些Java异常错误,这些错误可能会影响任务的执行,导致数据处理失败。本文将介绍一些常见的Hadoop错误,并提供处理和避免这些错误的方法。Java.lang.OutOfMemoryErrorOutOfMemoryError是Java虚拟机内存不足的错误。当Hadoop任

在Beego中使用Hadoop和HBase进行大数据存储和查询Jun 22, 2023 am 10:21 AM

在Beego中使用Hadoop和HBase进行大数据存储和查询Jun 22, 2023 am 10:21 AM随着大数据时代的到来,数据处理和存储变得越来越重要,如何高效地管理和分析大量的数据也成为企业面临的挑战。Hadoop和HBase作为Apache基金会的两个项目,为大数据存储和分析提供了一种解决方案。本文将介绍如何在Beego中使用Hadoop和HBase进行大数据存储和查询。一、Hadoop和HBase简介Hadoop是一个开源的分布式存储和计算系统,它可

如何使用PHP和Hadoop进行大数据处理Jun 19, 2023 pm 02:24 PM

如何使用PHP和Hadoop进行大数据处理Jun 19, 2023 pm 02:24 PM随着数据量的不断增大,传统的数据处理方式已经无法处理大数据时代带来的挑战。Hadoop是开源的分布式计算框架,它通过分布式存储和处理大量的数据,解决了单节点服务器在大数据处理中带来的性能瓶颈问题。PHP是一种脚本语言,广泛应用于Web开发,而且具有快速开发、易于维护等优点。本文将介绍如何使用PHP和Hadoop进行大数据处理。什么是HadoopHadoop是

探索Java在大数据领域的应用:Hadoop、Spark、Kafka等技术栈的了解Dec 26, 2023 pm 02:57 PM

探索Java在大数据领域的应用:Hadoop、Spark、Kafka等技术栈的了解Dec 26, 2023 pm 02:57 PMJava大数据技术栈:了解Java在大数据领域的应用,如Hadoop、Spark、Kafka等随着数据量不断增加,大数据技术成为了当今互联网时代的热门话题。在大数据领域,我们常常听到Hadoop、Spark、Kafka等技术的名字。这些技术起到了至关重要的作用,而Java作为一门广泛应用的编程语言,也在大数据领域发挥着巨大的作用。本文将重点介绍Java在大

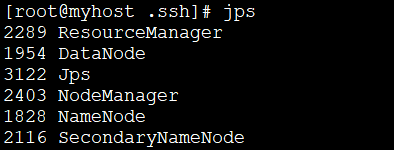

linux下安装Hadoop的方法是什么May 18, 2023 pm 08:19 PM

linux下安装Hadoop的方法是什么May 18, 2023 pm 08:19 PM一:安装JDK1.执行以下命令,下载JDK1.8安装包。wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2.执行以下命令,解压下载的JDK1.8安装包。tar-zxvfjdk-8u151-linux-x64.tar.gz3.移动并重命名JDK包。mvjdk1.8.0_151//usr/java84.配置Java环境变量。echo'

PHP中的数据处理引擎(Spark, Hadoop等)Jun 23, 2023 am 09:43 AM

PHP中的数据处理引擎(Spark, Hadoop等)Jun 23, 2023 am 09:43 AM在当前的互联网时代,海量数据的处理是各个企业和机构都需要面对的问题。作为一种广泛应用的编程语言,PHP同样需要在数据处理方面跟上时代的步伐。为了更加高效地处理海量数据,PHP开发引入了一些大数据处理工具,如Spark和Hadoop等。Spark是一款开源的数据处理引擎,可以用于大型数据集的分布式处理。Spark的最大特点是具有快速的数据处理速度和高效的数据存

hadoop三大核心组件介绍Mar 13, 2024 pm 05:54 PM

hadoop三大核心组件介绍Mar 13, 2024 pm 05:54 PMHadoop的三大核心组件分别是:Hadoop Distributed File System(HDFS)、MapReduce和Yet Another Resource Negotiator(YARN)。

SQL语句中的AND运算符和OR运算符怎么用May 28, 2023 pm 04:34 PM

SQL语句中的AND运算符和OR运算符怎么用May 28, 2023 pm 04:34 PMSQLAND&OR运算符AND和OR运算符用于基于一个以上的条件对记录进行过滤。AND和OR可在WHERE子语句中把两个或多个条件结合起来。如果第一个条件和第二个条件都成立,则AND运算符显示一条记录。如果第一个条件和第二个条件中只要有一个成立,则OR运算符显示一条记录。"Persons"表:LastNameFirstNameAddressCityAdamsJohnOxfordStreetLondonBushGeorgeFifthAvenueNewYorkCarter

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

AI Hentai Generator

免费生成ai无尽的。

热门文章

热工具

禅工作室 13.0.1

功能强大的PHP集成开发环境

Atom编辑器mac版下载

最流行的的开源编辑器

ZendStudio 13.5.1 Mac

功能强大的PHP集成开发环境

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

Dreamweaver Mac版

视觉化网页开发工具