Just two images, with no extra measurements of any kind:

Ta-da, a complete 3D teddy bear appears:

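This new tool, called DUSt3R, has taken off: not long after release it climbed to second place on GitHub Trending.

One user tested it: from two photos it really did reconstruct his kitchen, and the whole process took less than 2 seconds!

(Besides the 3D reconstruction, it also outputs depth maps, confidence maps, and point clouds.)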

The user was so impressed that he exclaimed:

Everyone, forget Sora for now. This is something we can actually see and touch.

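Experiments show that DUSt3R achieves SOTA results on three tasks: monocular depth estimation, multi-view depth estimation, and relative pose estimation.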

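The author team (from Aalto University in Finland and NAVER LABS Europe) has an equally bold "manifesto":

We want to make sure no 3D vision task in the world is hard to handle.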

So how does it do it?

"All-in-one"

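For multi-view stereo (MVS) reconstruction, the first step is to estimate the camera parameters, both intrinsic and extrinsic.

This step is tedious and cumbersome, but it is indispensable for triangulating pixels in 3D space afterwards, and triangulation in turn is something that almost every well-performing MVS algorithm depends on.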
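To make the dependency on camera parameters concrete, here is a minimal sketch of classical linear (DLT) triangulation, the kind of step a traditional pipeline needs. It is not part of DUSt3R; the intrinsics `K`, the two projection matrices, and the test point are all made-up values for illustration.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Triangulate one 3D point from two pixel observations (linear DLT).

    P1, P2: 3x4 projection matrices (intrinsics @ [R | t]).
    x1, x2: (u, v) pixel coordinates of the same point in each view.
    """
    # Each observation contributes two linear constraints on the homogeneous point.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical calibrated two-camera setup (values chosen only for the demo).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
# Second camera sits 0.2 units along +x, so t = -R @ C = (-0.2, 0, 0).
P2 = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

X_true = np.array([0.1, -0.05, 2.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

Without `P1` and `P2`, that is, without intrinsics and poses, this computation cannot even be set up, which is the bottleneck the article describes.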

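DUSt3R, introduced by the authors in this work, takes a radically different approach.

It needs no prior information about camera calibration or viewpoint poses to perform dense, unconstrained 3D reconstruction from arbitrary image collections.

Here, the team formulates the pairwise reconstruction problem as pointmap regression, which unifies the monocular and binocular reconstruction cases.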
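For readers unfamiliar with the term, a pointmap can be thought of as an H x W x 3 array that stores a 3D point for every pixel. The sketch below only illustrates the data structure by building one from a known depth map and known intrinsics; DUSt3R instead regresses pointmaps directly from images. The function name and the toy values are assumptions made for this example.

```python
import numpy as np

def depth_to_pointmap(depth, K):
    """Back-project a depth map (H, W) into a pointmap (H, W, 3) in the camera frame."""
    H, W = depth.shape
    # u indexes columns, v indexes rows; both arrays have shape (H, W).
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).astype(float)
    # Ray through each pixel: K^-1 @ [u, v, 1]^T, applied to every pixel at once.
    rays = pix @ np.linalg.inv(K).T
    # Scale each ray so that its z component equals the pixel's depth.
    return rays * depth[..., None]

# Toy example: a flat wall 2 units in front of a hypothetical camera.
K = np.array([[100.0, 0.0, 3.0],
              [0.0, 100.0, 2.0],
              [0.0, 0.0, 1.0]])
depth = np.full((4, 6), 2.0)
pointmap = depth_to_pointmap(depth, K)
```

Because every pixel carries its own 3D coordinates, depth can be read off as the z channel and pixel correspondences across views can be found by comparing 3D points.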

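When more than two input images are provided, a simple yet effective global alignment strategy expresses all pairwise pointmaps in a common reference frame.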
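The article does not describe how the global alignment is carried out. As a rough intuition only, the sketch below aligns one point set to another with a closed-form similarity transform (the Umeyama method). This is a stand-in technique chosen for illustration, not the authors' procedure, and all names and values are hypothetical.

```python
import numpy as np

def fit_similarity(src, dst):
    """Least-squares scale s, rotation R, translation t with dst ~ s * R @ src + t."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_s, dst - mu_d
    # Cross-covariance between the centered point sets.
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Guard against a reflection so that R is a proper rotation.
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0
    R = U @ D @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(S) @ D) / var_src
    t = mu_d - s * R @ mu_s
    return s, R, t

# Synthetic check: the same points expressed in two frames that differ
# by a known similarity transform (made-up values).
rng = np.random.default_rng(0)
points_a = rng.normal(size=(50, 3))
angle = np.radians(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
s_true, t_true = 2.5, np.array([1.0, -2.0, 0.5])
points_b = s_true * points_a @ R_true.T + t_true

s, R, t = fit_similarity(points_a, points_b)
aligned = s * points_a @ R.T + t  # points_a moved into the frame of points_b
```

Pairwise predictions each live in their own frame and scale, so some transform of this kind has to be estimated per pair before everything can be merged into one scene.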

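As shown in the figure below, given a set of photos with unknown camera poses and intrinsics, DUSt3R outputs a corresponding set of pointmaps. From these, a variety of geometric quantities that are usually hard to estimate together can be recovered directly, such as camera parameters, pixel correspondences, depth maps, and a fully consistent 3D reconstruction.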
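As one example of recovering camera parameters from a pointmap, the sketch below estimates a focal length by plain least squares. It assumes square pixels, a principal point at the image center, and a pointmap expressed in its own camera frame; these assumptions and the solver are simplifications for illustration and are not taken from the article. The depth map comes for free as the z channel.

```python
import numpy as np

def focal_from_pointmap(pointmap):
    """Least-squares focal length, assuming the principal point is the image center."""
    H, W, _ = pointmap.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    # Pixel coordinates relative to the assumed principal point.
    u_c = u - (W - 1) / 2.0
    v_c = v - (H - 1) / 2.0
    # Pinhole model: u_c = f * x / z and v_c = f * y / z.
    a = pointmap[..., 0] / pointmap[..., 2]
    b = pointmap[..., 1] / pointmap[..., 2]
    # Closed-form minimizer of sum (u_c - f*a)^2 + (v_c - f*b)^2 over f.
    return (u_c * a + v_c * b).sum() / (a ** 2 + b ** 2).sum()

# Synthetic pointmap rendered with a known focal length (made-up values).
f_true, H, W = 300.0, 8, 10
u, v = np.meshgrid(np.arange(W), np.arange(H))
z = 1.0 + 0.1 * u + 0.05 * v  # a slanted plane
x = (u - (W - 1) / 2.0) * z / f_true
y = (v - (H - 1) / 2.0) * z / f_true
pointmap = np.stack([x, y, z], axis=-1)

f_est = focal_from_pointmap(pointmap)
depth_map = pointmap[..., 2]  # depth is simply the z coordinate
```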

(The authors note that DUSt3R also works with a single input image.)

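In terms of network architecture, DUSt3R is built on standard Transformer encoders and decoders. It is inspired by CroCo (a study on self-supervised pre-training for 3D vision tasks via cross-view completion) and is trained with a simple regression loss.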

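As shown in the figure below, the two views of a scene (I1, I2) are first encoded in a Siamese manner by a shared ViT encoder.

The resulting token representations (F1 and F2) are then passed to two Transformer decoders, which continually exchange information through cross-attention.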
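The information exchange between the two decoders can be pictured with a toy single-head cross-attention step, in which the tokens of one view query the tokens of the other. This is a bare-bones sketch with random weights and made-up sizes; real decoder blocks also include self-attention, MLPs, normalization, and multiple heads.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(own_tokens, other_tokens, Wq, Wk, Wv):
    """Tokens of one view attend to the tokens of the other view."""
    Q = own_tokens @ Wq      # queries come from this view
    K = other_tokens @ Wk    # keys and values come from the other view
    V = other_tokens @ Wv
    weights = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))
    return weights @ V, weights

rng = np.random.default_rng(0)
d = 8                              # hypothetical token dimension
F1 = rng.normal(size=(5, d))       # 5 tokens for view 1
F2 = rng.normal(size=(7, d))       # 7 tokens for view 2

# One set of projection weights per decoder, since there are two decoders.
dec1 = [rng.normal(size=(d, d)) for _ in range(3)]
dec2 = [rng.normal(size=(d, d)) for _ in range(3)]

out1, w12 = cross_attention(F1, F2, *dec1)  # view 1 reads from view 2
out2, w21 = cross_attention(F2, F1, *dec2)  # view 2 reads from view 1
```

Each row of `w12` says how much one token of view 1 draws from every token of view 2, which is how the two branches come to agree on a shared geometry.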

Finally, two regression heads output the two corresponding pointmaps and their associated confidence maps.
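The article does not spell out how the confidence maps enter training. One common way to combine a regression loss with predicted confidence is sketched below: the per-pixel error is weighted by the confidence, and a log term stops the network from driving all confidences to zero. The exact form and the `alpha` value are assumptions for illustration.

```python
import numpy as np

def confidence_weighted_loss(pred, target, conf, alpha=0.2):
    """Mean of conf * ||pred - target|| - alpha * log(conf) over all pixels.

    pred, target: pointmaps of shape (H, W, 3); conf: positive map of shape (H, W).
    """
    err = np.linalg.norm(pred - target, axis=-1)  # per-pixel Euclidean error
    return float(np.mean(conf * err - alpha * np.log(conf)))

# Toy 2x2 example: three pixels are perfect, one is off by 5 units.
pred = np.zeros((2, 2, 3))
target = np.zeros((2, 2, 3))
target[0, 0] = [3.0, 4.0, 0.0]

conf_uniform = np.ones((2, 2))
conf_hedged = np.ones((2, 2))
conf_hedged[0, 0] = 0.5  # lower confidence on the hard pixel

loss_uniform = confidence_weighted_loss(pred, target, conf_uniform)
loss_hedged = confidence_weighted_loss(pred, target, conf_hedged)
```

In this toy case, lowering the confidence on the badly predicted pixel reduces the loss, so a network trained this way has an incentive to flag regions it cannot reconstruct reliably.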

The key point is that both pointmaps are expressed in the same coordinate frame, that of the first image.
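To show what a shared coordinate frame means, the sketch below takes a pointmap given in the second camera's frame and re-expresses it in the first camera's frame using a known relative pose. The network is described as producing this result directly without being given the pose; the function and values here are hypothetical and only illustrate the target representation.

```python
import numpy as np

def to_first_frame(pointmap_cam2, R_1_from_2, t_1_from_2):
    """Re-express an (H, W, 3) pointmap from camera 2's frame in camera 1's frame."""
    # Applies X1 = R @ X2 + t to every pixel's 3D point.
    return pointmap_cam2 @ R_1_from_2.T + t_1_from_2

# Made-up relative pose: camera 2 is rotated 90 degrees about the y axis
# and offset by 2 units along z relative to camera 1.
R_1_from_2 = np.array([[0.0, 0.0, 1.0],
                       [0.0, 1.0, 0.0],
                       [-1.0, 0.0, 0.0]])
t_1_from_2 = np.array([0.0, 0.0, 2.0])

pointmap_cam2 = np.array([[[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]])  # shape (1, 2, 3)
pointmap_in_cam1 = to_first_frame(pointmap_cam2, R_1_from_2, t_1_from_2)
```

Once both pointmaps live in one frame, they can be concatenated into a single point cloud with no further registration step.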

SOTA on multiple tasks

The experiments first evaluate DUSt3R on the absolute pose estimation task, using the 7Scenes (7 indoor scenes) and Cambridge Landmarks (8 outdoor scenes) datasets. The metrics are translation error and rotation error (lower is better).
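The article does not define the two metrics precisely. A common convention, assumed here, is the geodesic angle between the estimated and ground-truth rotations and the Euclidean distance between the translations. The helper names and sample poses below are made up.

```python
import numpy as np

def rotation_error_deg(R_est, R_gt):
    """Angle in degrees of the rotation that takes R_est to R_gt."""
    cos_angle = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    # Clip to guard against values slightly outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

def translation_error(t_est, t_gt):
    """Euclidean distance between estimated and ground-truth positions."""
    return float(np.linalg.norm(t_est - t_gt))

def rot_z(deg):
    """Rotation about the z axis by the given angle in degrees."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a), np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])

# Hypothetical estimate that is off by 10 degrees and 0.5 units.
rot_err = rotation_error_deg(rot_z(10.0), np.eye(3))
trans_err = translation_error(np.array([0.3, 0.4, 0.0]), np.zeros(3))
```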

The authors state that, compared with existing feature-matching and end-to-end methods, DUSt3R's performance is remarkable.

This is because, first, it was never trained for visual localization and, second, it never saw the query images or the database images during training.

Next comes the multi-view pose regression task, performed on 10 random frames. DUSt3R achieved the best results on both datasets.

On the monocular depth estimation task, DUSt3R also handles indoor and outdoor scenes well, outperforming self-supervised baselines and performing on par with the most advanced supervised baselines.

In terms of multi-view depth estimation, DUSt3R’s performance is also outstanding.

Below are two sets of official 3D reconstruction results. To give you a sense of it, each was produced from only two input images:

(1)

(2)

User tests: it works even when the two images do not overlap

One user gave DUSt3R two images with no overlapping content, and it still output an accurate 3D view within a few seconds:

(The images show his office, so the model cannot have seen them during training.)

In response, some users said this means the method is not making "objective measurements" there, but is behaving more like an AI.

Others wondered whether the method still works when the input images are taken by two different cameras.

Some users actually tried it, and the answer is yes!

Links:

[1] Paper: https://arxiv.org/abs/2312.14132

[2] Code: https://github.com/naver/dust3r

以上是兩張圖2秒鐘3D重建!這款AI工具火爆GitHub,網友:忘掉Sora的詳細內容。更多資訊請關注PHP中文網其他相關文章!

Markitdown MCP可以將任何文檔轉換為Markdowns!Apr 27, 2025 am 09:47 AM

Markitdown MCP可以將任何文檔轉換為Markdowns!Apr 27, 2025 am 09:47 AM處理文檔不再只是在您的AI項目中打開文件,而是將混亂變成清晰度。諸如PDF,PowerPoints和Word之類的文檔以各種形狀和大小淹沒了我們的工作流程。檢索結構化

如何使用Google ADK進行建築代理? - 分析VidhyaApr 27, 2025 am 09:42 AM

如何使用Google ADK進行建築代理? - 分析VidhyaApr 27, 2025 am 09:42 AM利用Google的代理開發套件(ADK)的力量創建具有現實世界功能的智能代理!該教程通過使用ADK來構建對話代理,並支持Gemini和GPT等各種語言模型。 w

在LLM上使用SLM進行有效解決問題-Analytics VidhyaApr 27, 2025 am 09:27 AM

在LLM上使用SLM進行有效解決問題-Analytics VidhyaApr 27, 2025 am 09:27 AM摘要: 小型語言模型 (SLM) 專為效率而設計。在資源匱乏、實時性和隱私敏感的環境中,它們比大型語言模型 (LLM) 更勝一籌。 最適合專注型任務,尤其是在領域特異性、控制性和可解釋性比通用知識或創造力更重要的情況下。 SLM 並非 LLMs 的替代品,但在精度、速度和成本效益至關重要時,它們是理想之選。 技術幫助我們用更少的資源取得更多成就。它一直是推動者,而非驅動者。從蒸汽機時代到互聯網泡沫時期,技術的威力在於它幫助我們解決問題的程度。人工智能 (AI) 以及最近的生成式 AI 也不例

如何將Google Gemini模型用於計算機視覺任務? - 分析VidhyaApr 27, 2025 am 09:26 AM

如何將Google Gemini模型用於計算機視覺任務? - 分析VidhyaApr 27, 2025 am 09:26 AM利用Google雙子座的力量用於計算機視覺:綜合指南 領先的AI聊天機器人Google Gemini擴展了其功能,超越了對話,以涵蓋強大的計算機視覺功能。 本指南詳細說明瞭如何利用

Gemini 2.0 Flash vs O4-Mini:Google可以比OpenAI更好嗎?Apr 27, 2025 am 09:20 AM

Gemini 2.0 Flash vs O4-Mini:Google可以比OpenAI更好嗎?Apr 27, 2025 am 09:20 AM2025年的AI景觀正在充滿活力,而Google的Gemini 2.0 Flash和Openai的O4-Mini的到來。 這些尖端的車型分開了幾週,具有可比的高級功能和令人印象深刻的基準分數。這個深入的比較

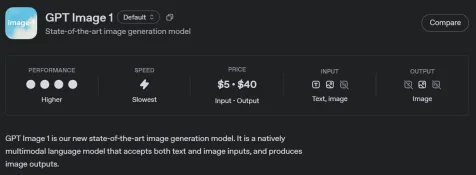

如何使用OpenAI GPT-Image-1 API生成和編輯圖像Apr 27, 2025 am 09:16 AM

如何使用OpenAI GPT-Image-1 API生成和編輯圖像Apr 27, 2025 am 09:16 AMOpenai的最新多模式模型GPT-Image-1徹底改變了Chatgpt和API的形像生成。 本文探討了其功能,用法和應用程序。 目錄 了解gpt-image-1 gpt-image-1的關鍵功能

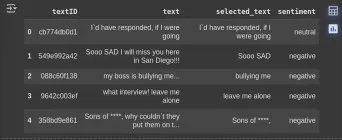

如何使用清潔行執行數據預處理? - 分析VidhyaApr 27, 2025 am 09:15 AM

如何使用清潔行執行數據預處理? - 分析VidhyaApr 27, 2025 am 09:15 AM數據預處理對於成功的機器學習至關重要,但是實際數據集通常包含錯誤。清潔行提供了一種有效的解決方案,它使用其Python軟件包來實施自信的學習算法。 它自動檢測和

AI技能差距正在減慢供應鏈Apr 26, 2025 am 11:13 AM

AI技能差距正在減慢供應鏈Apr 26, 2025 am 11:13 AM經常使用“ AI-Ready勞動力”一詞,但是在供應鏈行業中確實意味著什麼? 供應鏈管理協會(ASCM)首席執行官安倍·埃什肯納齊(Abe Eshkenazi)表示,它表示能夠評論家的專業人員

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

Video Face Swap

使用我們完全免費的人工智慧換臉工具,輕鬆在任何影片中換臉!

熱門文章

熱工具

Atom編輯器mac版下載

最受歡迎的的開源編輯器

SAP NetWeaver Server Adapter for Eclipse

將Eclipse與SAP NetWeaver應用伺服器整合。

Dreamweaver Mac版

視覺化網頁開發工具

VSCode Windows 64位元 下載

微軟推出的免費、功能強大的一款IDE編輯器

WebStorm Mac版

好用的JavaScript開發工具