OpenAI recently announced the launch of their latest generation embedding model embedding v3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large.

Little information is disclosed about how these models are designed and trained, and the models are only accessible through a paid API. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model?

This article will empirically compare the performance of these new models with open source models. We plan to build a data retrieval workflow where the key task is to find the most relevant documents from the corpus based on the user's query.

Our corpus is the European Artificial Intelligence Act, which is currently in the validation phase. This corpus is the world’s first legal framework involving artificial intelligence and is unique in that it is available in 24 languages. This allows us to compare the accuracy of data retrieval in different language backgrounds, providing important support for the cross-cultural application of artificial intelligence.

We plan to create a custom synthetic question/answer dataset using a multilingual text corpus and use this dataset to compare OpenAI with the state-of-the-art The accuracy of open source embedding models. We will share the full code as our approach can be easily adapted to other data corpora.

Generate a custom Q/A data set

First, we can start by creating a custom question and answer (Q/A) data set, The advantage of doing this is to ensure that the data set will not become a bias factor in model training, avoiding situations that may occur in benchmark references such as MTEB. Furthermore, by generating custom datasets, we can tailor the evaluation process to a specific data corpus, which can be important for scenarios like Retrieval Augmentation Applications (RAG).

We will follow the simple process suggested in the Llama Index documentation. First, the corpus is divided into chunks. Next, for each block, a large language model (LLM) is used to generate a series of synthetic questions to ensure that the answer is in the corresponding block.

Implementing this strategy using an LLM data frame like Llama Index is very simple, as shown in the code below.

from llama_index.readers.web import SimpleWebPageReader from llama_index.core.node_parser import SentenceSplitter language = "EN" url_doc = "https://eur-lex.europa.eu/legal-content/"+language+"/TXT/HTML/?uri=CELEX:52021PC0206" documents = SimpleWebPageReader(html_to_text=True).load_data([url_doc]) parser = SentenceSplitter(chunk_size=1000) nodes = parser.get_nodes_from_documents(documents, show_progress=True)

The corpus is the English version of the EU Artificial Intelligence Act, obtained directly from the web using this official URL. This article uses the draft version from April 2021, as the final version is not yet available in all European languages. So the version we chose can replace language in the URL with any of the other 23 official EU languages, retrieving text in different languages (BG for Bulgarian, ES for Spanish, CS for Czech, etc. ).

Use a SentenceSplitter object to split the document into chunks of every 1000 tokens. For English, this generates about 100 chunks. Each block is then provided as context to the following prompt (the default prompt suggested in the Llama Index library):

prompts={} prompts["EN"] = """\ Context information is below. --------------------- {context_str} --------------------- Given the context information and not prior knowledge, generate only questions based on the below query. You are a Teacher/ Professor. Your task is to setup {num_questions_per_chunk} questions for an upcoming quiz/examination. The questions should be diverse in nature across the document. Restrict the questions to the context information provided." """This prompt can generate questions about the documentation block , the number of questions to generate for each data chunk is passed as parameter "num_questions_per_chunk", which we set to 2. Questions can then be generated by calling generate_qa_embedding_pairs in the Llama Index library:

from llama_index.llms import OpenAI from llama_index.legacy.finetuning import generate_qa_embedding_pairs qa_dataset = generate_qa_embedding_pairs(llm=OpenAI(model="gpt-3.5-turbo-0125",additional_kwargs={'seed':42}),nodes=nodes,qa_generate_prompt_tmpl = prompts[language],num_questions_per_chunk=2 )我们依靠OpenAI的GPT-3.5-turbo-0125来完成这项任务,结果对象' qa_dataset '包含问题和答案(块)对。作为生成问题的示例,以下是前两个问题的结果(其中“答案”是文本的第一部分):

- What are the main objectives of the proposal for a Regulation laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) according to the explanatory memorandum?

- How does the proposal for a Regulation on artificial intelligence aim to address the risks associated with the use of AI while promoting the uptake of AI in the European Union, as outlined in the context information?

OpenAI嵌入模型

评估函数也是遵循Llama Index文档:首先所有答案(文档块)的嵌入都存储在VectorStoreIndex中,以便有效检索。然后评估函数循环遍历所有查询,检索前k个最相似的文档,并根据MRR (Mean Reciprocal Rank)评估检索的准确性,代码如下:

def evaluate(dataset, embed_model, insert_batch_size=1000, top_k=5):# Get corpus, queries, and relevant documents from the qa_dataset objectcorpus = dataset.corpusqueries = dataset.queriesrelevant_docs = dataset.relevant_docs # Create TextNode objects for each document in the corpus and create a VectorStoreIndex to efficiently store and retrieve embeddingsnodes = [TextNode(id_=id_, text=text) for id_, text in corpus.items()]index = VectorStoreIndex(nodes, embed_model=embed_model, insert_batch_size=insert_batch_size)retriever = index.as_retriever(similarity_top_k=top_k) # Prepare to collect evaluation resultseval_results = [] # Iterate over each query in the dataset to evaluate retrieval performancefor query_id, query in tqdm(queries.items()):# Retrieve the top_k most similar documents for the current query and extract the IDs of the retrieved documentsretrieved_nodes = retriever.retrieve(query)retrieved_ids = [node.node.node_id for node in retrieved_nodes] # Check if the expected document was among the retrieved documentsexpected_id = relevant_docs[query_id][0]is_hit = expected_id in retrieved_ids # assume 1 relevant doc per query # Calculate the Mean Reciprocal Rank (MRR) and append to resultsif is_hit:rank = retrieved_ids.index(expected_id) + 1mrr = 1 / rankelse:mrr = 0eval_results.append(mrr) # Return the average MRR across all queries as the final evaluation metricreturn np.average(eval_results)

嵌入模型通过' embed_model '参数传递给评估函数,对于OpenAI模型,该参数是一个用模型名称和模型维度初始化的OpenAIEmbedding对象。

from llama_index.embeddings.openai import OpenAIEmbedding embed_model = OpenAIEmbedding(model=model_spec['model_name'],dimensinotallow=model_spec['dimensions'])

dimensions参数可以缩短嵌入(即从序列的末尾删除一些数字),而不会失去嵌入的概念表示属性。OpenAI在他们的公告中建议,在MTEB基准测试中,嵌入可以缩短到256大小,同时仍然优于未缩短的text-embedding-ada-002嵌入(大小为1536)。

我们在四种不同的嵌入模型上运行评估函数:

两个版本的text-embedding-3-large:一个具有最低可能维度(256),另一个具有最高可能维度(3072)。它们被称为“OAI-large-256”和“OAI-large-3072”。

OAI-small:text-embedding-3-small,维数为1536。

OAI-ada-002:传统的文本嵌入text-embedding-ada-002,维度为1536。

每个模型在四种不同的语言上进行评估:英语(EN),法语(FR),捷克语(CS)和匈牙利语(HU),分别涵盖日耳曼语,罗曼语,斯拉夫语和乌拉尔语的例子。

embeddings_model_spec = { } embeddings_model_spec['OAI-Large-256']={'model_name':'text-embedding-3-large','dimensions':256} embeddings_model_spec['OAI-Large-3072']={'model_name':'text-embedding-3-large','dimensions':3072} embeddings_model_spec['OAI-Small']={'model_name':'text-embedding-3-small','dimensions':1536} embeddings_model_spec['OAI-ada-002']={'model_name':'text-embedding-ada-002','dimensions':None} results = [] languages = ["EN", "FR", "CS", "HU"] # Loop through all languages for language in languages: # Load datasetfile_name=language+"_dataset.json"qa_dataset = EmbeddingQAFinetuneDataset.from_json(file_name) # Loop through all modelsfor model_name, model_spec in embeddings_model_spec.items(): # Get modelembed_model = OpenAIEmbedding(model=model_spec['model_name'],dimensinotallow=model_spec['dimensions']) # Assess embedding score (in terms of MRR)score = evaluate(qa_dataset, embed_model) results.append([language, model_name, score]) df_results = pd.DataFrame(results, columns = ["Language" ,"Embedding model", "MRR"])MRR精度如下:

嵌入尺寸越大,性能越好。

开源嵌入模型

围绕嵌入的开源研究也是非常活跃的,Hugging Face 的 MTEB leaderboard会经常发布最新的嵌入模型。

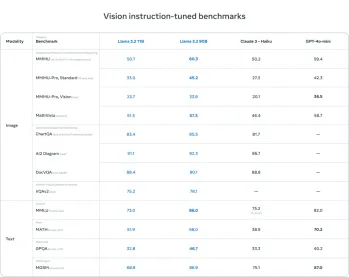

为了在本文中进行比较,我们选择了一组最近发表的四个嵌入模型(2024)。选择的标准是他们在MTEB排行榜上的平均得分和他们处理多语言数据的能力。所选模型的主要特性摘要如下。

e5-mistral-7b-instruct:微软的这个E5嵌入模型是从Mistral-7B-v0.1初始化的,并在多语言混合数据集上进行微调。模型在MTEB排行榜上表现最好,但也是迄今为止最大的(14GB)。

multilingual-e5-large-instruct(ML-E5-large):微软的另一个E5模型,可以更好地处理多语言数据。它从xlm-roberta-large初始化,并在多语言数据集的混合上进行训练。它比E5-Mistral小得多(10倍),上下文大小也小得多(514)。

BGE-M3:该模型由北京人工智能研究院设计,是他们最先进的多语言数据嵌入模型,支持100多种工作语言。截至2024年2月22日,它还没有进入MTEB排行榜。

nomic-embed-text-v1 (Nomic- embed):该模型由Nomic设计,其性能优于OpenAI Ada-002和text-embedding-3-small,而且大小仅为0.55GB。该模型是第一个完全可复制和可审计的(开放数据和开源训练代码)的模型。

用于评估这些开源模型的代码类似于用于OpenAI模型的代码。主要的变化在于模型参数:

embeddings_model_spec = { } embeddings_model_spec['E5-mistral-7b']={'model_name':'intfloat/e5-mistral-7b-instruct','max_length':32768, 'pooling_type':'last_token', 'normalize': True, 'batch_size':1, 'kwargs': {'load_in_4bit':True, 'bnb_4bit_compute_dtype':torch.float16}} embeddings_model_spec['ML-E5-large']={'model_name':'intfloat/multilingual-e5-large','max_length':512, 'pooling_type':'mean', 'normalize': True, 'batch_size':1, 'kwargs': {'device_map': 'cuda', 'torch_dtype':torch.float16}} embeddings_model_spec['BGE-M3']={'model_name':'BAAI/bge-m3','max_length':8192, 'pooling_type':'cls', 'normalize': True, 'batch_size':1, 'kwargs': {'device_map': 'cuda', 'torch_dtype':torch.float16}} embeddings_model_spec['Nomic-Embed']={'model_name':'nomic-ai/nomic-embed-text-v1','max_length':8192, 'pooling_type':'mean', 'normalize': True, 'batch_size':1, 'kwargs': {'device_map': 'cuda', 'trust_remote_code' : True}} results = [] languages = ["EN", "FR", "CS", "HU"] # Loop through all models for model_name, model_spec in embeddings_model_spec.items(): print("Processing model : "+str(model_spec)) # Get modeltokenizer = AutoTokenizer.from_pretrained(model_spec['model_name'])embed_model = AutoModel.from_pretrained(model_spec['model_name'], **model_spec['kwargs']) if model_name=="Nomic-Embed":embed_model.to('cuda') # Loop through all languagesfor language in languages: # Load datasetfile_name=language+"_dataset.json"qa_dataset = EmbeddingQAFinetuneDataset.from_json(file_name) start_time_assessment=time.time() # Assess embedding score (in terms of hit rate at k=5)score = evaluate(qa_dataset, tokenizer, embed_model, model_spec['normalize'], model_spec['max_length'], model_spec['pooling_type']) # Get duration of score assessmentduration_assessment = time.time()-start_time_assessment results.append([language, model_name, score, duration_assessment]) df_results = pd.DataFrame(results, columns = ["Language" ,"Embedding model", "MRR", "Duration"])結果如下:

BGE-M3的表現最好,其次是ML-E5-Large、E5-mistral- 7b和Nomic-Embed。 BGE-M3模型尚未在MTEB排行榜上進行基準測試,我們的結果表明它可能比其他模型排名更高。雖然BGE-M3針對多語言資料進行了最佳化,但它在英語方面的表現也比其他模型更好。

因為式開源模型所以一般都需要本地運行,所以我們也刻意記錄了每個嵌入模型的處理時間。

E5-mistral-7b比其他模型大10倍以上,所以最慢是很正常的

總結

我們把所有的結果做一個匯總

採用開源模型獲得了最好的性能,BGE-M3模型表現最佳。模型具有與OpenAI模型相同的上下文長度(8K),大小為2.2GB。

OpenAI的large(3072)、small 和ada模型的表現非常相似。縮小large的嵌入尺寸(256)會導致效能下降,並且沒有像OpenAI說的那樣比ada更好。

幾乎所有型號(ML-E5-large除外)在英語上都表現最好。在捷克語和匈牙利語等語言中,表現有顯著差異,可能是因為訓練的資料比較少。

我們應該付費訂閱OpenAI,還是託管一個開源嵌入模型?

OpenAI最近的價格調整使得他們的API變得更加實惠,現在每百萬代幣的成本為0.13美元。如果每個月處理一百萬個查詢(假設每個查詢涉及大約1K令牌),沒那麼成本約為130美元。所以可以根據實際需要計算來選擇是否要託管開源嵌入模型。

當然成本效益並不是唯一的考慮因素。也可能需要考慮延遲、隱私和對資料處理工作流程的控制等其他因素。開源模型提供了完全資料控制的優勢,增強了隱私性和客製化。

說到延遲,OpenAI的API也存在延遲問題,有時會導致回應時間延長,所有有時候OpenAI的API不一定是最快的選擇。

總之,在開源模型和像OpenAI這樣的專有解決方案之間做出選擇並不是一個簡單的答案。開源嵌入提供了一個非常好的選項,它將效能與對資料的更好控制結合在一起。而OpenAI的產品可能仍然會吸引那些優先考慮便利性的人,特別是如果隱私問題是次要的。

本文程式碼:https://github.com/Yannael/multilingual-embeddings

以上是選擇最適合資料的嵌入模型:OpenAI 和開源多語言嵌入的對比測試的詳細內容。更多資訊請關注PHP中文網其他相關文章!

閱讀AI索引2025:AI是您的朋友,敵人還是副駕駛?Apr 11, 2025 pm 12:13 PM

閱讀AI索引2025:AI是您的朋友,敵人還是副駕駛?Apr 11, 2025 pm 12:13 PM斯坦福大學以人為本人工智能研究所發布的《2025年人工智能指數報告》對正在進行的人工智能革命進行了很好的概述。讓我們用四個簡單的概念來解讀它:認知(了解正在發生的事情)、欣賞(看到好處)、接納(面對挑戰)和責任(弄清我們的責任)。 認知:人工智能無處不在,並且發展迅速 我們需要敏銳地意識到人工智能發展和傳播的速度有多快。人工智能係統正在不斷改進,在數學和復雜思維測試中取得了優異的成績,而就在一年前,它們還在這些測試中慘敗。想像一下,人工智能解決複雜的編碼問題或研究生水平的科學問題——自2023年

開始使用Meta Llama 3.2 -Analytics VidhyaApr 11, 2025 pm 12:04 PM

開始使用Meta Llama 3.2 -Analytics VidhyaApr 11, 2025 pm 12:04 PMMeta的Llama 3.2:多模式和移動AI的飛躍 Meta最近公佈了Llama 3.2,這是AI的重大進步,具有強大的視覺功能和針對移動設備優化的輕量級文本模型。 以成功為基礎

AV字節:Meta' llama 3.2,Google的雙子座1.5等Apr 11, 2025 pm 12:01 PM

AV字節:Meta' llama 3.2,Google的雙子座1.5等Apr 11, 2025 pm 12:01 PM本週的AI景觀:進步,道德考慮和監管辯論的旋風。 OpenAI,Google,Meta和Microsoft等主要參與者已經釋放了一系列更新,從開創性的新車型到LE的關鍵轉變

與機器交談的人類成本:聊天機器人真的可以在乎嗎?Apr 11, 2025 pm 12:00 PM

與機器交談的人類成本:聊天機器人真的可以在乎嗎?Apr 11, 2025 pm 12:00 PM連接的舒適幻想:我們在與AI的關係中真的在蓬勃發展嗎? 這個問題挑戰了麻省理工學院媒體實驗室“用AI(AHA)”研討會的樂觀語氣。事件展示了加油

了解Python的Scipy圖書館Apr 11, 2025 am 11:57 AM

了解Python的Scipy圖書館Apr 11, 2025 am 11:57 AM介紹 想像一下,您是科學家或工程師解決複雜問題 - 微分方程,優化挑戰或傅立葉分析。 Python的易用性和圖形功能很有吸引力,但是這些任務需要強大的工具

3種運行Llama 3.2的方法-Analytics VidhyaApr 11, 2025 am 11:56 AM

3種運行Llama 3.2的方法-Analytics VidhyaApr 11, 2025 am 11:56 AMMeta's Llama 3.2:多式聯運AI強力 Meta的最新多模式模型Llama 3.2代表了AI的重大進步,具有增強的語言理解力,提高的準確性和出色的文本生成能力。 它的能力t

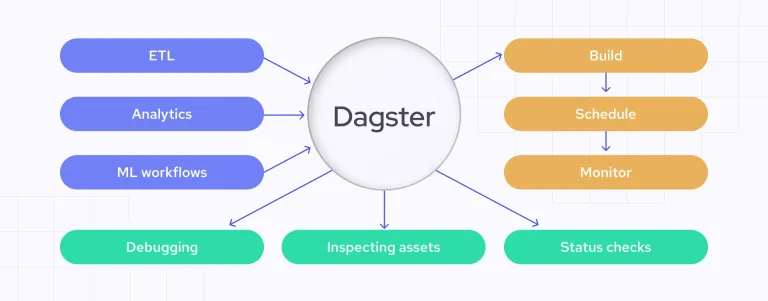

使用dagster自動化數據質量檢查Apr 11, 2025 am 11:44 AM

使用dagster自動化數據質量檢查Apr 11, 2025 am 11:44 AM數據質量保證:與Dagster自動檢查和良好期望 保持高數據質量對於數據驅動的業務至關重要。 隨著數據量和源的增加,手動質量控制變得效率低下,容易出現錯誤。

大型機在人工智能時代有角色嗎?Apr 11, 2025 am 11:42 AM

大型機在人工智能時代有角色嗎?Apr 11, 2025 am 11:42 AM大型機:AI革命的無名英雄 雖然服務器在通用應用程序上表現出色並處理多個客戶端,但大型機是專為關鍵任務任務而建立的。 這些功能強大的系統經常在Heavil中找到

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

AI Hentai Generator

免費產生 AI 無盡。

熱門文章

熱工具

EditPlus 中文破解版

體積小,語法高亮,不支援程式碼提示功能

記事本++7.3.1

好用且免費的程式碼編輯器

SecLists

SecLists是最終安全測試人員的伙伴。它是一個包含各種類型清單的集合,這些清單在安全評估過程中經常使用,而且都在一個地方。 SecLists透過方便地提供安全測試人員可能需要的所有列表,幫助提高安全測試的效率和生產力。清單類型包括使用者名稱、密碼、URL、模糊測試有效載荷、敏感資料模式、Web shell等等。測試人員只需將此儲存庫拉到新的測試機上,他就可以存取所需的每種類型的清單。

MinGW - Minimalist GNU for Windows

這個專案正在遷移到osdn.net/projects/mingw的過程中,你可以繼續在那裡關注我們。 MinGW:GNU編譯器集合(GCC)的本機Windows移植版本,可自由分發的導入函式庫和用於建置本機Windows應用程式的頭檔;包括對MSVC執行時間的擴展,以支援C99功能。 MinGW的所有軟體都可以在64位元Windows平台上運作。

ZendStudio 13.5.1 Mac

強大的PHP整合開發環境