一、背景

首先來介紹百度綜合資訊流推薦的業務背景、資料背景,以及基本的演算法策略。

1、百度綜合資訊流推薦

#百度的綜合資訊流包含在手百APP中搜尋框的清單頁以及沉浸頁的形態,涵蓋了多種產品類型。從上圖可以看到,推薦內容的形式有類似抖音的沉浸態推薦,也有單列和雙列推薦,類似小紅書筆記的版面。用戶與內容的交互方式也非常多樣,可以在落地頁上進行評論、點讚、收藏,還可以進入作者頁查看相關信息並進行交互,用戶還可以提供負向的反饋等等。整個綜合資訊流的設計非常豐富多樣,能夠滿足使用者不同的需求和互動方式。

2、資料背景

從建模的角度來看,主要面對三方面的挑戰:

- 大規模##。每天的展現量級超過了百億級,因此模型需要有天級別百億的吞吐能力。每天的 DAU 過億,這也決定了整個模型需要有高吞吐、高可擴展性的設計。對於排序模型來說,在線每秒鐘有數億次的計算,因此模型設計時不僅要考慮效果,同時也要考慮性能,需要做到很好的性能和效果的折中。使用者互動形態以及場景的多樣化,也要求模型可以預估多類型任務。

- 高要求。 整個系統的回應時間需求非常高,端到端都是毫秒的運算,超過了預定的時間,就會回傳失敗。這也造成了另一個問題,就是複雜結構上線困難。

- 馬太效應強。 從資料樣本角度來看,馬太效應非常強,少量的頭部活躍用戶貢獻了大多數的分發量,頭部的熱門資源也覆蓋到了大多數的展現量。無論是使用者側或資源側,馬太效應都是非常強的。因此,系統設計時就需要弱化馬太效應,使得推薦更公平。

3、基本演算法策略

#在整個產業界的推廣在搜場景上,特徵設計通常採用離散化的方式,以確保記憶和泛化兩方面的效果。特徵透過哈希方式轉換為one-hot編碼進行離散化處理。對於頭部用戶,需要進行精細刻畫,以實現準確的記憶。而對於佔比較大的稀疏長尾用戶,則需要進行良好的泛化處理。此外,在使用者的點擊和消費決策序列中,session扮演著非常重要的角色。

模型設計需要平衡頭部和長尾的資料分佈,確保準確性和泛化能力。特徵設計已經考慮到了這一點,因此模型設計也需要同時考慮到泛化和準確性。百度推薦漏斗對效能要求非常嚴格,因此需要在架構和策略上進行聯合設計,找到效能和效果的平衡點。此外,還需要平衡模型的高吞吐性和精確度。

架構的設計需要從效能和效果兩個維度綜合考慮。一個模型無法處理數千萬的資源庫,因此必須進行分層設計,核心思想是分治法。各層之間存在關聯,因此需要進行多階段的聯訓,以提升多階段漏斗之間的效率。此外,還需要採用彈性計算法,在資源幾乎不變的情況下,能夠上線複雜的模型。

上圖中右側的漢諾塔項目,在粗排這一層非常巧妙地實現了用戶與資源的分離建模。還有CTR3.0 聯合訓練,實現了多層多階段的聯訓,例如精排,是整個系統中最複雜、最精緻的模型,精度是相當高的,重排是在精排之上做list wise 的建模,精排跟重排的關係是很緊密的,我們提出的基於這兩個模型聯訓的方式,取得了非常好的線上效果。

接下來,將分別從特徵、演算法和架構三個角度進一步展開介紹。

##1、用戶-系統互動決策過程

特徵描述了使用者與系統之間的互動決策過程。

下圖中展示了使用者-資源-場景-狀態時空關係交互矩陣圖。

首先將所有訊號切分為使用者、資源、場景和狀態這四個維度,因為本質上是要建模使用者與資源之間的關係。在每個維度上,可以做各種各樣的畫像資料。

使用者維度上,最基礎的年齡、性別、興趣點畫像。在此基礎上還會有一些細微的特徵,例如相似用戶,以及用戶歷史上對不同資源類型的偏好行為等。 session 特徵,主要是長短期行為序列。業界有很多做序列的模型,在此不作贅述。但無論做何種類型的序列模型,都缺少不了特徵層面的離散 session 特徵。在百度的搜尋廣告上,從10 多年前就已經引入了這一種細粒度的序列特徵,對用戶在不同的時間窗口上,對不同資源類型的點擊行為、消費行為等等都細緻地刻畫了多組序列特徵。

資源維度上,也會有 ID 類別特徵來記錄資源本身的情況,主導的是記憶。還有明文畫像特徵來實現基礎的泛化能力。除了粗粒度的特徵以外,也會有更細緻的資源特徵,例如 Embedding 畫像特徵,是基於多模態等預訓練模型產出的,更細緻地建模離散 embedding 空間中資源之間的關係。還有統計畫像類別的特徵,描述資源各種情況下的後驗如何。以及 lookalike 特徵,透過使用者來反向表徵資源進而提升精度。

在場景維度上,有單列、沉浸式、雙列等不同的場景特徵。

使用者在不同的狀態下,對於 feed 資訊的消費也是不同的。例如刷新狀態是如何的,是從什麼樣的網路過來的,以及落地頁上的互動形態是怎麼樣的,都會影響到使用者未來的決策,所以也會從狀態維度來描述特徵。

透過使用者、資源、狀態、場景四個維度,全面刻畫使用者與系統互動的決策過程。很多時候也會做多個維度之間的組合。

2、離散特徵設計原理

#接下來介紹離散特徵設計原理。

優質的特徵通常有三個特點:區分度高、覆蓋率高、穩健性強。

- 區分度高:加入特徵後,後驗有著很大差異。例如加入 a 特徵的樣本,後驗點擊率跟沒有命中 a 特徵的後驗點擊率差距是非常大的。

- 覆蓋率高:如果加入的特徵在整個樣本中的覆蓋率只有萬分之幾、十萬分之幾,那麼即使特徵很有區分度,但大機率也是沒有效果的。

- 穩健性強:特徵本身的分佈要是相對穩定的,不能隨著時間發生非常劇烈的變化。

除了上述三個標準,還可以做單一特徵的 AUC 判斷。例如只用某一特徵來訓練模型,看特徵跟目標的關係。也可以去掉某個特徵,看少了特徵之後的 AUC 變化。

基於上述設計原則,我們來重點討論三類重要特徵:即交叉、偏移和序列特徵。

- Regarding cross-features, there are hundreds of related works in the industry. In practice, it has been found that no type of implicit feature cross-over can completely replace explicit feature cross-over, nor can it combine all All cross-features are deleted and only implicit representations are used. Explicit feature intersection can depict relevant information that implicit feature intersection cannot express. Of course, if you go deeper, you can use AutoML to automatically search the possible feature combination space. Therefore, in practice, the cross between features is done mainly by explicit feature cross and supplemented by implicit feature cross.

-

The bias feature refers to the fact that user clicks do not equal user satisfaction, because there are various biases in the display of resources, such as the most common The problem is position bias. Resources displayed in the header are naturally more likely to be clicked. There is also system bias. The system gives priority to showing what it thinks is the best, but it is not necessarily the real best. For example, newly released resources may be at a disadvantage due to lack of posterior information.

There is a very classic structure for biased features, which is the Wide&Deep structure proposed by Google. Various biased features are usually placed on the Wide side, which can be cropped directly online. out, and achieve the effect of unbiased estimation through this partial ordering method. - #The last is the sequence feature, which is a very important type of user personalized feature. The current mainstream in the industry is to model very long sequences. In specific experiments, it will be found that the storage overhead of long sequences is usually very large. As mentioned in the previous article, we need to achieve a compromise between performance and effect. Long sequences can be pre-calculated offline, and short sequences can be calculated online in real time, so we often combine the two methods. The gating network is used to decide whether the user currently prefers short sequences or long sequences to balance long-term interests and short-term interests. At the same time, it should be noted that the marginal benefit decreases as the sequence lengthens.

3. Optimized feature system of recommendation funnel

Entire recommendation The funnel is designed in layers, with filtering and truncation at each layer. How to achieve maximum efficiency in a layered design with filter truncation? As mentioned earlier, we will do joint training of models. In addition, related designs can also be done in the dimension of feature design. There are also some problems here:

- First of all, in order to improve the funnel pass rate, recall and rough sorting are directly fitted to fine ranking or fine sorting, which will lead to further strengthening of the Matthew effect. At this time , the recall/rough ranking model does not drive the learning process based on user behavior, but rather a fitting funnel. This is not the result we want to see. The correct approach is to recommend the decoupling design of each layer of the funnel model, rather than directly fitting the lower layer of the funnel.

- The second aspect is rough sorting, which is theoretically closer to recall and is essentially the outlet for unified recall. Therefore, at the level of rough sorting, more recall signals can be introduced, such as crowd voting signals for collaborative recommendation, graph index paths, etc., so that rough sorting can be jointly optimized with the recall queue, so that the recall efficiency of resources entering fine sorting can be improved optimize.

- The third is calculation reuse, which can improve the robustness of the model while reducing the amount of calculation. It should be noted here that there are often cascaded models. The second-level model uses the scores of the first-level model as features. This approach is very risky because the final estimated value of the model is an unstable distribution. If the estimated value of the first-level model is directly used as a feature, the lower-level model will be severely coupled, causing system instability.

##3. Algorithm

The following introduces the core algorithm the design of.

1. Sorting model from a system perspective

First let’s look at the recommended sorting model. It is generally believed that fine ranking is the most accurate model in the recommendation system. There is a view in the industry that rough layout is attached to fine layout and can be learned from fine layout. However, in actual practice, it has been found that rough layout cannot be directly learned from fine layout, which may cause many problems.

As you can see from the picture above, the positioning of rough sorting and fine sorting is different. Generally speaking, the rough sorting training samples are the same as the fine sorting samples, which are also display samples. Each time there are tens of thousands of candidates recalled for rough ranking, more than 99% of the resources are not displayed, and the model only uses a dozen or so resources that are finally displayed for training, which breaks the independence Under the assumption of identical distribution, the distribution of offline models varies greatly. This situation is most serious in recall, because the recall candidate sets are millions, tens of millions or even hundreds of millions, and most of the final returned results are not displayed. Rough sorting is also relatively serious. Because the candidate set is usually in the tens of thousands. The fine sorting is relatively better. After passing through the two-layer funnel of recall and rough sorting, the basic quality of resources is guaranteed. It mainly does the work of selecting the best from the best. Therefore, the problem of offline distribution inconsistency in fine ranking is not so serious, and there is no need to consider too much the problem of sample selection bias (SSB). At the same time, because the candidate set is small, heavy calculations can be done. Fine ranking focuses on feature intersection, sequence modeling, etc. .

However, the level of rough sorting cannot be directly learned from fine sorting, nor can it be directly recalculated similar to fine sorting, because the calculation amount is dozens of times that of fine sorting. Times, if you directly use the design idea of fine layout, the online machine will be completely unbearable, so rough layout requires a high degree of skill to balance performance and effect. It is a lightweight module. The focus of rough sorting iteration is different from fine sorting, and it mainly solves problems such as sample selection bias and recall queue optimization. Since rough sorting is closely related to recall, more attention is paid to the average quality of thousands of resources returned to fine sorting rather than the precise sorting relationship. Fine ranking is more closely related to rearrangement and focuses more on the AUC accuracy of a single point.

Therefore, in the design of rough ranking, it is more about the selection and generation of samples, and the design of generalization features and networks. The refined design can do complex multi-order intersection features, ultra-long sequence modeling, etc.

2. Generalization of very large-scale discrete DNN

The previous introduction is at the macro level, let’s take a look at the micro level.

# Specific to the model training process, the current mainstream in the industry is to use ultra-large-scale discrete DNN, and the generalization problem will be more serious. Because ultra-large-scale discrete DNN, through the embedding layer, mainly performs the memory function. See the figure above. The entire embedding space is a very large matrix, usually with hundreds of billions or trillions of rows and 1,000 columns. Therefore, model training is fully distributed, with dozens or even hundreds of GPUs doing distributed training.

Theoretically, for such a large matrix, brute force calculations will not be performed directly, but operations similar to matrix decomposition will be used. Of course, this matrix decomposition is different from the standard SVD matrix decomposition. The matrix decomposition here first learns the low-dimensional representation, and reduces the amount of calculation and storage through the sharing of parameters between slots, that is, it is decomposed into two matrices. the process of learning. The first is the feature and representation matrix, which will learn the relationship between the feature and the low-dimensional embedding. This embedding is very low, and an embedding of about ten dimensions is usually selected. The other one is the embedding and neuron matrix, and the weights between each slot are shared. In this way, the storage volume is reduced and the effect is improved.

Low-dimensional embedding learning is the key to optimizing the generalization ability of offline DNN. It is equivalent to doing sparse matrix decomposition. Therefore, the key to improving the generalization ability of the entire model lies in how to make it Parameter size can be better matched with the number of samples.

Optimize from multiple aspects:

- #First of all, from the embedding dimension, because of the display of different features The quantity difference is very large. The display quantity of some features is very high, such as head resources and head users. You can use longer embedding dimensions. This is the common idea of dynamic embedding dimensions, that is, the more fully the embedding dimensions are displayed. The longer. Of course, if you want to be more fancy, you can use autoML and other methods to do reinforcement learning and automatically search for the optimal embedding length.

- #The second aspect is to create thresholds. Since different resources display different amounts, when to create embedded representations for features also needs to be considered.

3. Overfitting problem

The industry usually adopts a two-stage training method to resist overfitting. The entire model consists of two layers, one is a large discrete matrix layer, and the other is a small dense parameter layer. The discrete matrix layer is very easy to overfit, so industry practice usually uses One Pass Training, that is, online learning, where all the data is passed through, and batch training is not done like in academia.

In addition, the industry usually uses timing validation set to solve the overfitting problem of sparse layers. Divide the entire training data set into many Deltas, T0, T1, T2, and T3, according to the time dimension. Each training is fixed with the discrete parameter layer trained a few hours ago, and then the next Delta data is used to finetune the dense network. That is, by fixing the sparse layer and retraining other parameters, the overfitting problem of the model can be alleviated.

This approach will also bring another problem, because the training is divided, and the discrete parameters at time T0 need to be fixed each time, and then the join is retrained at time t 1 stage, this will slow down the entire training speed and bring scalability challenges. Therefore, in recent years, single-stage training has been adopted, that is, the discrete representation layer and the dense network layer are updated simultaneously in a Delta. There is also a problem with single-stage training, because in addition to embedding features, the entire model also has many continuous-valued features. These continuous-valued features will count the display clicks of each discrete feature. Therefore, it may bring the risk of data crossing. Therefore, in actual practice, the first step will be to remove the statistical features, and the second step will be to train the dense network together with the discrete representation, using a single-stage training method. In addition, the entire embedded length is automatically scalable. Through this series of methods, model training can be accelerated by about 30%. Practice shows that the degree of overfitting of this method is very slight, and the difference between the AUC of training and testing is 1/1000 or lower.

##4. Architecture

Next, we will introduce the architecture design thoughts and experiences.

1. Principle of system layered design

effect. Because fine ranking is not the ground truth, user behavior is. You need to learn user behavior well, not learn fine ranking. This is a very important tip.

2. Multi-stage model joint training

#The relationship between fine ranking and rearrangement is It is very close. In the early years, rearrangements were directly trained using the scores of fine lineups. On the one hand, the coupling was very serious. On the other hand, the scores of fine lineups were directly used for training, which easily caused online fluctuations.

Baidu Fengchao CTR 3.0 joint training project of fine ranking and rearrangement very cleverly uses models to train at the same time to avoid the problem of scoring coupling. This project uses the hidden layer and internal scoring of the fine-ranking sub-network as characteristics of the rearrangement sub-network. Then, the fine-ranking and rearrangement sub-networks are separated and deployed in their respective modules. On the one hand, the intermediate results can be reused well without the fluctuation problem caused by scoring coupling. At the same time, the accuracy of rearrangement will be improved by a percentile. This was also one of the sub-projects that received Baidu’s highest award that year.

In addition, please note that this project is not ESSM. ESSM is CTCVR modeling and multi-objective modeling. CTR3.0 joint training mainly solves the problems of scoring coupling and rearrangement model accuracy. .

In addition, recall and rough sorting must be decoupled, because new queues are added, which may not be fair to the new queues. Therefore, a random masking method is proposed, that is, randomly masking out some features so that the coupling degree is not so strong.

3. Sparse routing network

Finally, let’s take a look at the online deployment process. The scale of model parameters is in the order of hundreds of billions to trillions, and there are many targets. Direct online deployment is very expensive, and we cannot only consider the effect without considering the performance. A better way is elastic calculation, similar to the idea of Sparse MOE.

Rough sorting has access to a lot of queues, with dozens or even hundreds of queues. The online value (LTV) of these queues is different. The traffic value layer calculates the value of different recall queues to online click duration. The core idea is that the greater the overall contribution of the recall queue, the more complex calculations can be enjoyed. This allows limited computing power to serve higher value traffic. Therefore, we did not use the traditional distillation method, but adopted an idea similar to Sparse MOE for elastic computing, that is, the design of strategy and architecture co-design, so that different recall queues can use the most suitable resource network for calculation.

##5. Future plans

As we all know, now Entering the era of LLM large models. Baidu's exploration of the next generation recommendation system based on LLM large language model will be carried out from three aspects.

The first aspect is to upgrade the model from basic prediction to being able to make decisions. For example, important issues such as efficient exploration of classic cold start resources, immersive sequence recommendation feedback, and the decision-making chain from search to recommendation can all be made with the help of large models.

The second aspect is from discrimination to generation. Now the entire model is discriminative. In the future, we will explore generative recommendation methods, such as automatically generating recommendation reasons, and based on long-tail data prompt for automatic data enhancement and generative retrieval model.

The third aspect is from black box to white box. In the traditional recommendation system, people often say that neural network is an alchemy and a black box. Is it possible to move towards white box? Exploration is also one of the important tasks in the future. For example, based on cause and effect, we can explore the reasons behind user behavior state transitions, make better unbiased estimates of recommendation fairness, and perform better scene adaptation in Multi Task Machine Learning scenarios.

以上是百度排序技術的探索與應用的詳細內容。更多資訊請關注PHP中文網其他相關文章!

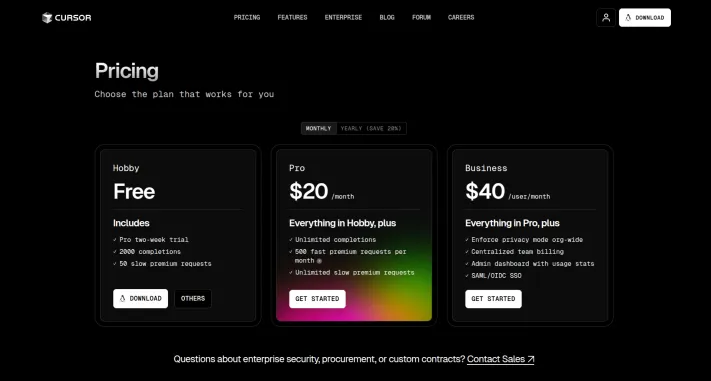

我嘗試了使用光標AI編碼的Vibe編碼,這太神奇了!Mar 20, 2025 pm 03:34 PM

我嘗試了使用光標AI編碼的Vibe編碼,這太神奇了!Mar 20, 2025 pm 03:34 PMVibe編碼通過讓我們使用自然語言而不是無盡的代碼行創建應用程序來重塑軟件開發的世界。受Andrej Karpathy等有遠見的人的啟發,這種創新的方法使Dev

如何使用DALL-E 3:技巧,示例和功能Mar 09, 2025 pm 01:00 PM

如何使用DALL-E 3:技巧,示例和功能Mar 09, 2025 pm 01:00 PMDALL-E 3:生成的AI圖像創建工俱生成的AI正在革新內容創建,而OpenAI最新的圖像生成模型Dall-E 3處於最前沿。它於2023年10月發行,建立在其前任Dall-E和Dall-E 2上

2025年2月的Genai推出前5名:GPT-4.5,Grok-3等!Mar 22, 2025 am 10:58 AM

2025年2月的Genai推出前5名:GPT-4.5,Grok-3等!Mar 22, 2025 am 10:58 AM2025年2月,Generative AI又是一個改變遊戲規則的月份,為我們帶來了一些最令人期待的模型升級和開創性的新功能。從Xai的Grok 3和Anthropic的Claude 3.7十四行詩到Openai的G

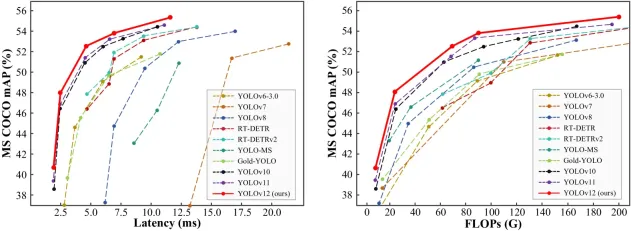

如何使用Yolo V12進行對象檢測?Mar 22, 2025 am 11:07 AM

如何使用Yolo V12進行對象檢測?Mar 22, 2025 am 11:07 AMYolo(您只看一次)一直是領先的實時對象檢測框架,每次迭代都在以前的版本上改善。最新版本Yolo V12引入了進步,可顯著提高準確性

Elon Musk&Sam Altman衝突超過5000億美元的星際之門項目Mar 08, 2025 am 11:15 AM

Elon Musk&Sam Altman衝突超過5000億美元的星際之門項目Mar 08, 2025 am 11:15 AM這項耗資5000億美元的星際之門AI項目由OpenAI,Softbank,Oracle和Nvidia等科技巨頭支持,並得到美國政府的支持,旨在鞏固美國AI的領導力。 這項雄心勃勃

Sora vs veo 2:哪個創建更現實的視頻?Mar 10, 2025 pm 12:22 PM

Sora vs veo 2:哪個創建更現實的視頻?Mar 10, 2025 pm 12:22 PMGoogle的VEO 2和Openai的Sora:哪個AI視頻發電機佔據了至尊? 這兩個平台都產生了令人印象深刻的AI視頻,但它們的優勢在於不同的領域。 使用各種提示,這種比較揭示了哪種工具最適合您的需求。 t

Google的Gencast:Gencast Mini Demo的天氣預報Mar 16, 2025 pm 01:46 PM

Google的Gencast:Gencast Mini Demo的天氣預報Mar 16, 2025 pm 01:46 PMGoogle DeepMind的Gencast:天氣預報的革命性AI 天氣預報經歷了巨大的轉變,從基本觀察到復雜的AI驅動預測。 Google DeepMind的Gencast,開創性

哪個AI比Chatgpt更好?Mar 18, 2025 pm 06:05 PM

哪個AI比Chatgpt更好?Mar 18, 2025 pm 06:05 PM本文討論了AI模型超過Chatgpt,例如Lamda,Llama和Grok,突出了它們在準確性,理解和行業影響方面的優勢。(159個字符)

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

AI Hentai Generator

免費產生 AI 無盡。

熱門文章

熱工具

Atom編輯器mac版下載

最受歡迎的的開源編輯器

Dreamweaver Mac版

視覺化網頁開發工具

VSCode Windows 64位元 下載

微軟推出的免費、功能強大的一款IDE編輯器

SAP NetWeaver Server Adapter for Eclipse

將Eclipse與SAP NetWeaver應用伺服器整合。

EditPlus 中文破解版

體積小,語法高亮,不支援程式碼提示功能