本文主要為大家帶來一篇Node.js+jade抓取部落格所有文章產生靜態html檔案的實例。小編覺得蠻不錯的,現在就分享給大家,也給大家做個參考。一起跟著小編過來看看吧,希望能幫助大家。

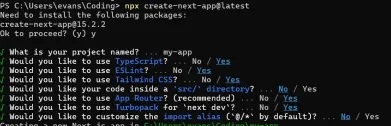

專案結構:

#好了,接下來,我們就來講解下,這篇文章主要實現的功能:

1,抓取文章,主要抓取文章的標題,內容,超鏈接,文章id(用於生成靜態html文件)

2,根據jade模板產生html檔

一、抓取文章如何實現?

非常簡單,跟上文抓取文章清單的實作差不多

function crawlerArc( url ){

var html = '';

var str = '';

var arcDetail = {};

http.get(url, function (res) {

res.on('data', function (chunk) {

html += chunk;

});

res.on('end', function () {

arcDetail = filterArticle( html );

str = jade.renderFile('./views/layout.jade', arcDetail );

fs.writeFile( './html/' + arcDetail['id'] + '.html', str, function( err ){

if( err ) {

console.log( err );

}

console.log( 'success:' + url );

if ( aUrl.length ) crawlerArc( aUrl.shift() );

} );

});

});

}參數url就是文章的位址,把文章的內容抓取完畢之後,呼叫filterArticle ( html ) 過濾出需要的文章資訊(id, 標題,超鏈接,內容),然後用jade的renderFile這個api,實現模板內容的替換,

模板內容替換完之後,肯定就需要生成html檔了, 所以用writeFile寫入文件,寫入文件時候,用id當html檔名。這就是產生一篇靜態html檔的實現,

接下來就是循環產生靜態html檔了, 就是下面這行:

if ( aUrl.length ) crawlerArc( aUrl.shift() );

aUrl保存的是我的部落格所有文章的url, 每次採集完一篇文章之後,就把目前文章的url刪除,讓下一篇文章的url出來,繼續採集

完整的實作程式碼server.js:

var fs = require( 'fs' );

var http = require( 'http' );

var cheerio = require( 'cheerio' );

var jade = require( 'jade' );

var aList = [];

var aUrl = [];

function filterArticle(html) {

var $ = cheerio.load( html );

var arcDetail = {};

var title = $( "#cb_post_title_url" ).text();

var href = $( "#cb_post_title_url" ).attr( "href" );

var re = /\/(\d+)\.html/;

var id = href.match( re )[1];

var body = $( "#cnblogs_post_body" ).html();

return {

id : id,

title : title,

href : href,

body : body

};

}

function crawlerArc( url ){

var html = '';

var str = '';

var arcDetail = {};

http.get(url, function (res) {

res.on('data', function (chunk) {

html += chunk;

});

res.on('end', function () {

arcDetail = filterArticle( html );

str = jade.renderFile('./views/layout.jade', arcDetail );

fs.writeFile( './html/' + arcDetail['id'] + '.html', str, function( err ){

if( err ) {

console.log( err );

}

console.log( 'success:' + url );

if ( aUrl.length ) crawlerArc( aUrl.shift() );

} );

});

});

}

function filterHtml(html) {

var $ = cheerio.load(html);

var arcList = [];

var aPost = $("#content").find(".post-list-item");

aPost.each(function () {

var ele = $(this);

var title = ele.find("h2 a").text();

var url = ele.find("h2 a").attr("href");

ele.find(".c_b_p_desc a").remove();

var entry = ele.find(".c_b_p_desc").text();

ele.find("small a").remove();

var listTime = ele.find("small").text();

var re = /\d{4}-\d{2}-\d{2}\s*\d{2}[:]\d{2}/;

listTime = listTime.match(re)[0];

arcList.push({

title: title,

url: url,

entry: entry,

listTime: listTime

});

});

return arcList;

}

function nextPage( html ){

var $ = cheerio.load(html);

var nextUrl = $("#pager a:last-child").attr('href');

if ( !nextUrl ) return getArcUrl( aList );

var curPage = $("#pager .current").text();

if( !curPage ) curPage = 1;

var nextPage = nextUrl.substring( nextUrl.indexOf( '=' ) + 1 );

if ( curPage < nextPage ) crawler( nextUrl );

}

function crawler(url) {

http.get(url, function (res) {

var html = '';

res.on('data', function (chunk) {

html += chunk;

});

res.on('end', function () {

aList.push( filterHtml(html) );

nextPage( html );

});

});

}

function getArcUrl( arcList ){

for( var key in arcList ){

for( var k in arcList[key] ){

aUrl.push( arcList[key][k]['url'] );

}

}

crawlerArc( aUrl.shift() );

}

var url = 'http://www.cnblogs.com/ghostwu/';

crawler( url );layout.jade檔:

doctype html

html

head

meta(charset='utf-8')

title jade+node.js express

link(rel="stylesheet", href='./css/bower_components/bootstrap/dist/css/bootstrap.min.css')

body

block header

p.container

p.well.well-lg

h3 ghostwu的博客

p js高手之路

block container

p.container

h3

a(href="#{href}" rel="external nofollow" ) !{title}

p !{body}

block footer

p.container

footer 版权所有 - by ghostwu後續的打算:

1,採用mongodb入庫

#2,支援斷點採集

3,採集圖片

4,採集小說

等等....

相關推薦:

以上是Node.js、jade產生靜態html檔實例的詳細內容。更多資訊請關注PHP中文網其他相關文章!

Python vs. JavaScript:學習曲線和易用性Apr 16, 2025 am 12:12 AM

Python vs. JavaScript:學習曲線和易用性Apr 16, 2025 am 12:12 AMPython更適合初學者,學習曲線平緩,語法簡潔;JavaScript適合前端開發,學習曲線較陡,語法靈活。 1.Python語法直觀,適用於數據科學和後端開發。 2.JavaScript靈活,廣泛用於前端和服務器端編程。

Python vs. JavaScript:社區,圖書館和資源Apr 15, 2025 am 12:16 AM

Python vs. JavaScript:社區,圖書館和資源Apr 15, 2025 am 12:16 AMPython和JavaScript在社區、庫和資源方面的對比各有優劣。 1)Python社區友好,適合初學者,但前端開發資源不如JavaScript豐富。 2)Python在數據科學和機器學習庫方面強大,JavaScript則在前端開發庫和框架上更勝一籌。 3)兩者的學習資源都豐富,但Python適合從官方文檔開始,JavaScript則以MDNWebDocs為佳。選擇應基於項目需求和個人興趣。

從C/C到JavaScript:所有工作方式Apr 14, 2025 am 12:05 AM

從C/C到JavaScript:所有工作方式Apr 14, 2025 am 12:05 AM從C/C 轉向JavaScript需要適應動態類型、垃圾回收和異步編程等特點。 1)C/C 是靜態類型語言,需手動管理內存,而JavaScript是動態類型,垃圾回收自動處理。 2)C/C 需編譯成機器碼,JavaScript則為解釋型語言。 3)JavaScript引入閉包、原型鍊和Promise等概念,增強了靈活性和異步編程能力。

JavaScript引擎:比較實施Apr 13, 2025 am 12:05 AM

JavaScript引擎:比較實施Apr 13, 2025 am 12:05 AM不同JavaScript引擎在解析和執行JavaScript代碼時,效果會有所不同,因為每個引擎的實現原理和優化策略各有差異。 1.詞法分析:將源碼轉換為詞法單元。 2.語法分析:生成抽象語法樹。 3.優化和編譯:通過JIT編譯器生成機器碼。 4.執行:運行機器碼。 V8引擎通過即時編譯和隱藏類優化,SpiderMonkey使用類型推斷系統,導致在相同代碼上的性能表現不同。

超越瀏覽器:現實世界中的JavaScriptApr 12, 2025 am 12:06 AM

超越瀏覽器:現實世界中的JavaScriptApr 12, 2025 am 12:06 AMJavaScript在現實世界中的應用包括服務器端編程、移動應用開發和物聯網控制:1.通過Node.js實現服務器端編程,適用於高並發請求處理。 2.通過ReactNative進行移動應用開發,支持跨平台部署。 3.通過Johnny-Five庫用於物聯網設備控制,適用於硬件交互。

使用Next.js(後端集成)構建多租戶SaaS應用程序Apr 11, 2025 am 08:23 AM

使用Next.js(後端集成)構建多租戶SaaS應用程序Apr 11, 2025 am 08:23 AM我使用您的日常技術工具構建了功能性的多租戶SaaS應用程序(一個Edtech應用程序),您可以做同樣的事情。 首先,什麼是多租戶SaaS應用程序? 多租戶SaaS應用程序可讓您從唱歌中為多個客戶提供服務

如何使用Next.js(前端集成)構建多租戶SaaS應用程序Apr 11, 2025 am 08:22 AM

如何使用Next.js(前端集成)構建多租戶SaaS應用程序Apr 11, 2025 am 08:22 AM本文展示了與許可證確保的後端的前端集成,並使用Next.js構建功能性Edtech SaaS應用程序。 前端獲取用戶權限以控制UI的可見性並確保API要求遵守角色庫

JavaScript:探索網絡語言的多功能性Apr 11, 2025 am 12:01 AM

JavaScript:探索網絡語言的多功能性Apr 11, 2025 am 12:01 AMJavaScript是現代Web開發的核心語言,因其多樣性和靈活性而廣泛應用。 1)前端開發:通過DOM操作和現代框架(如React、Vue.js、Angular)構建動態網頁和單頁面應用。 2)服務器端開發:Node.js利用非阻塞I/O模型處理高並發和實時應用。 3)移動和桌面應用開發:通過ReactNative和Electron實現跨平台開發,提高開發效率。

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

AI Hentai Generator

免費產生 AI 無盡。

熱門文章

熱工具

DVWA

Damn Vulnerable Web App (DVWA) 是一個PHP/MySQL的Web應用程序,非常容易受到攻擊。它的主要目標是成為安全專業人員在合法環境中測試自己的技能和工具的輔助工具,幫助Web開發人員更好地理解保護網路應用程式的過程,並幫助教師/學生在課堂環境中教授/學習Web應用程式安全性。 DVWA的目標是透過簡單直接的介面練習一些最常見的Web漏洞,難度各不相同。請注意,該軟體中

SublimeText3漢化版

中文版,非常好用

MantisBT

Mantis是一個易於部署的基於Web的缺陷追蹤工具,用於幫助產品缺陷追蹤。它需要PHP、MySQL和一個Web伺服器。請查看我們的演示和託管服務。

SublimeText3 英文版

推薦:為Win版本,支援程式碼提示!

mPDF

mPDF是一個PHP庫,可以從UTF-8編碼的HTML產生PDF檔案。原作者Ian Back編寫mPDF以從他的網站上「即時」輸出PDF文件,並處理不同的語言。與原始腳本如HTML2FPDF相比,它的速度較慢,並且在使用Unicode字體時產生的檔案較大,但支援CSS樣式等,並進行了大量增強。支援幾乎所有語言,包括RTL(阿拉伯語和希伯來語)和CJK(中日韓)。支援嵌套的區塊級元素(如P、DIV),