Using a JIT Compiler Made My Python Loops Slower?

- Original by PHPz

- 2024-08-29 06:35:07

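If you haven't heard, Python loops can be slow, especially when you're working with large datasets. If you're running calculations across millions of data points, execution time can quickly become a bottleneck. Fortunately, Numba has a just-in-time (JIT) compiler that we can use to speed up numerical computations and loops in Python.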

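The other day, I found myself needing a simple exponential smoothing function in Python. The function had to accept an array and return an array of the same length containing the smoothed values. Normally I try to avoid loops in Python wherever I can (especially when working with Pandas DataFrames). At my current skill level, though, I couldn't work out how to exponentially smooth an array of values without a loop.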
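For what it's worth, pandas can compute this particular recurrence without an explicit Python loop. With `adjust=False`, `Series.ewm(alpha=..., adjust=False).mean()` computes y[0] = x[0] and y[t] = (1 - alpha) * y[t-1] + alpha * x[t]. Below is a minimal sketch that assumes pandas is installed. `ewm_smoothing` is a hypothetical helper name, and the default alpha of 1/3 mirrors the 0.33333333 used later in this post.

```python
import numpy as np
import pandas as pd


def ewm_smoothing(values, alpha=1 / 3):
    """Exponential smoothing without an explicit Python loop.

    With adjust=False, pandas uses the recursive form:
    y[0] = x[0], y[t] = (1 - alpha) * y[t-1] + alpha * x[t]
    """
    series = pd.Series(np.asarray(values, dtype=np.float64))
    # Returns a NumPy array with the same length as the input
    return series.ewm(alpha=alpha, adjust=False).mean().to_numpy()


print(ewm_smoothing([3, 6, 9], alpha=0.5))
```

The loop still runs, but it runs inside pandas' compiled code rather than the Python interpreter.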

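I'll walk through building this exponential smoothing function and test it with and without JIT compilation. I'll also briefly cover what JIT is and how I made sure the loop was written in a way that works in nopython mode.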

What is JIT?

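A just-in-time (JIT) compiler translates code into machine code while the program is running rather than ahead of time. Numba, for example, compiles a function the first time it is called. JIT compilers are especially useful for high-level languages such as Python, JavaScript, and Java. These languages are known for their flexibility and ease of use, but they can run more slowly than lower-level languages such as C or C++. JIT compilation helps close that gap by optimizing code as it runs, making it faster without giving up the advantages of a high-level language.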

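When you use `nopython=True` mode with Numba's JIT compiler, the Python interpreter is bypassed entirely and Numba must compile everything to machine code. If it can't compile something in this mode, it raises an error rather than quietly falling back to slower Python code. Removing the overhead of Python's dynamic typing and other interpreter operations is what makes the compiled code run faster.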

Building a fast exponential smoothing function

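Exponential smoothing is a technique for smoothing data by taking a weighted average in which older observations receive exponentially decreasing weights. The formula for exponential smoothing is: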

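$$S_t = \alpha V_t + (1 - \alpha) S_{t-1}$$

Where:

- $S_t$: the smoothed value at time $t$.
- $V_t$: the original value at time $t$ from the values array.
- $\alpha$: the smoothing factor, which determines the weight of the current value $V_t$ in the smoothing process.
- $S_{t-1}$: the smoothed value at time $t-1$, i.e., the previous smoothed value.

The first smoothed value is initialized as $S_0 = V_0$. The smoothing factor $\alpha$ determines how much influence the current value $V_t$ has on the smoothed value $S_t$ compared with the previous smoothed value $S_{t-1}$.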
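Starting from $S_0 = V_0$ and repeatedly substituting $S_t = \alpha V_t + (1 - \alpha) S_{t-1}$ shows why the weights are called exponential. Each older value's weight shrinks by a factor of $(1 - \alpha)$ per step:

$$S_t = (1 - \alpha)^t V_0 + \alpha \sum_{k=1}^{t} (1 - \alpha)^{t-k} V_k$$

The weights also add up to one, so $S_t$ is a true weighted average:

$$(1 - \alpha)^t + \alpha \sum_{j=0}^{t-1} (1 - \alpha)^j = (1 - \alpha)^t + \bigl(1 - (1 - \alpha)^t\bigr) = 1$$

For example, with $\alpha = 1/3$, a value 10 steps back carries a weight of $\tfrac{1}{3}(2/3)^{10} \approx 0.0058$.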

```python
import time

import numpy as np
from numba import jit


@jit(nopython=True)
def fast_exponential_smoothing(values, alpha=0.33333333):
    # Float array the same length as values (without dtype, integer input
    # would produce an integer array and truncate the smoothed values)
    smoothed_values = np.zeros_like(values, dtype=np.float64)
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        # Weighted average of the current value and the previous smoothed value
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values


# Generate a large random array of a million integers
large_array = np.random.randint(1, 100, size=1_000_000)

# Test the speed of fast_exponential_smoothing
# (this first call also includes Numba's compilation time)
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing(large_array)
end_time = time.perf_counter()

print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with 1,000,000 sample array.")
```

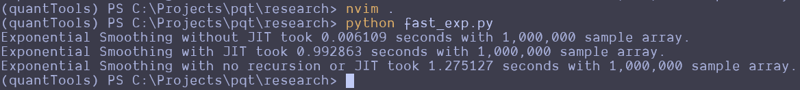

This can be repeated and altered just a bit to test the function without the JIT decorator. Here are the results that I got:

Wait, what the f***?

I thought JIT was supposed to speed it up. It looks like the standard Python function beat the JIT version and a version that attempts to use no recursion. That's strange. I guess you can't just slap the JIT decorator on something and make it go faster? Perhaps simple array loops and NumPy operations are already pretty efficient? Perhaps I don't understand the use case for JIT as well as I should? Maybe we should try this on a more complex loop?

Here is the entire Python file I created for testing:

```python
import time

import numpy as np
from numba import jit


@jit(nopython=True)
def fast_exponential_smoothing(values, alpha=0.33333333):
    # Float array the same length as values (without dtype, integer input
    # would produce an integer array and truncate the smoothed values)
    smoothed_values = np.zeros_like(values, dtype=np.float64)
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values


def fast_exponential_smoothing_nojit(values, alpha=0.33333333):
    # Same body as above, but executed by the regular Python interpreter
    smoothed_values = np.zeros_like(values, dtype=np.float64)
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values


def non_recursive_exponential_smoothing(values, alpha=0.33333333):
    # Caution: the weight applied to values[k] here depends on its distance from the
    # end of the array (n - k) rather than from each position t (t - k), so the
    # output does not match the loop versions above.
    n = len(values)
    smoothed_values = np.zeros(n)

    # Initialize the first value
    smoothed_values[0] = values[0]

    # Calculate the rest of the smoothed values
    decay_factors = (1 - alpha) ** np.arange(1, n)
    cumulative_weights = alpha * decay_factors
    smoothed_values[1:] = np.cumsum(values[1:] * np.flip(cumulative_weights)) + (1 - alpha) ** np.arange(1, n) * values[0]

    return smoothed_values


# Generate a large random array of ten million integers
large_array = np.random.randint(1, 1000, size=10_000_000)

# Test the speed of fast_exponential_smoothing_nojit
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing_nojit(large_array)
end_time = time.perf_counter()

print(f"Exponential Smoothing without JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

# Test the speed of fast_exponential_smoothing (this first call also includes JIT compilation)
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing(large_array)
end_time = time.perf_counter()

print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

# Test the speed of non_recursive_exponential_smoothing
start_time = time.perf_counter()
smoothed_result = non_recursive_exponential_smoothing(large_array)
end_time = time.perf_counter()

print(f"Exponential Smoothing with no recursion or JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")
```

I created the non-recursive version to see whether vectorized operations across arrays would make it go faster, but the plain loop seems to be pretty damn fast as it is. These results stayed the same all the way up until I ran out of memory to create the array of random integers.
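A vectorized version is only a fair speed comparison if it returns the same numbers as the loop. The minimal sketch below vectorizes the recurrence through its closed form, $S_t = (1-\alpha)^t V_0 + \alpha \sum_{k=1}^{t} (1-\alpha)^{t-k} V_k$, and checks it against a reference loop with `np.allclose`. `smooth_loop` and `smooth_closed_form` are hypothetical names. The closed form rescales by $(1-\alpha)^{-k}$, which overflows float64 on long arrays, so treat this as a correctness check for short inputs rather than a replacement for the loop on ten million values. Running the post's `non_recursive_exponential_smoothing` through the same `np.allclose` check is a quick way to see whether it matches the loop.

```python
import numpy as np


def smooth_loop(values, alpha=1 / 3):
    """Reference loop: S_0 = V_0, S_t = alpha * V_t + (1 - alpha) * S_{t-1}."""
    out = np.empty(len(values), dtype=np.float64)
    if len(values) == 0:
        return out
    out[0] = values[0]
    for i in range(1, len(values)):
        out[i] = alpha * values[i] + (1 - alpha) * out[i - 1]
    return out


def smooth_closed_form(values, alpha=1 / 3):
    """Vectorized closed form of the same recurrence.

    S_t = (1 - alpha)**t * (V_0 + alpha * sum_{k=1..t} V_k / (1 - alpha)**k)

    The rescaling by (1 - alpha)**-k grows exponentially, so float64 overflows
    on long inputs (with alpha = 1/3, typically before 2,000 elements).
    """
    x = np.asarray(values, dtype=np.float64)
    decay = (1 - alpha) ** np.arange(len(x))  # (1 - alpha)**t for t = 0..n-1
    scaled = x / decay                         # V_k / (1 - alpha)**k
    scaled[1:] *= alpha                        # V_0 keeps weight 1, later terms get alpha
    return decay * np.cumsum(scaled)


rng = np.random.default_rng(0)
sample = rng.integers(1, 1000, size=500)
print(np.allclose(smooth_loop(sample), smooth_closed_form(sample)))
```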

Let me know what you think in the comments. I am by no means a professional developer, so I welcome any comments, criticism, or educational opportunities.

Until next time.

Happy coding!
