There is my solutions to tackle the disk spaces shortage problem I described in the previous post. The core principle of the solution is to reduce the number of output records at Mapper stage; the method I used is Filter , adding a filter,

There is my solutions to tackle the disk spaces shortage problem I described in the previous post. The core principle of the solution is to reduce the number of output records at Mapper stage; the method I used is Filter, adding a filter, which I will explain later, to decrease the output records of Mapper, which in turn significantly decrease the Mapper’s Spill records, and fundamentally decrease the disk space usages. After applying the filter, with 30,661 records. some 200MB data set as inputs, the total Spill Records is 25,471,725, and it only takes about 509MB disk spaces!

Followed Filter

And now I’m going to reveal what’s kinda Filter it looks like, and how did I accomplish that filter. The true face of the FILTER is called Followed Filter, it filters users from computing co-followed combinations if their followed number does not satisfy a certain number, called Followed Threshold.

Followed Filter is used to reduce the co-followed combinations at Mapper stage. Say we set the followed threshold to 100, meaning users who doesn’t own 100 fans(be followed by 100 other users) will be ignored during co-followed combinations computing stage(to get the actual number of the threshold we need analyze statistics of user’s followed number of our data set).

Reason

Choosing followed filter is reasonable because how many user follows is a metric of user’s popularity/famousness.

HOW

In order to accomplish it, we need:

First, counting user’s followed number among our data set, which needs a new MapReduce Job;

Second, choosing a followed threshold after analyze the statistics perspective of followed number data set got in first step;

Third, using DistrbutedCache of Hadoop to cache users who satisfy the filter to all Mappers;

Forth, adding followed filter to Mapper class, only users satisfy filter condition will be passed into co-followed combination computing phrase;

Fifth, adding co-followed filter/threshold in Reducer side if necessary.

Outcomes

Here is the Hadoop Job Summary, after applying the followed filter with followed threshold of 1000, that means only users who are followed by 1000 users will have the opportunity to co-followed combinations, compared with the Job Summary in my previous post, most all metrics have significant improvements:

| Counter | Map | Reduce | Total |

| Bytes Written | 0 | 1,798,185 | 1,798,185 |

| Bytes Read | 203,401,876 | 0 | 203,401,876 |

| FILE_BYTES_READ | 405,219,906 | 52,107,486 | 457,327,392 |

| HDFS_BYTES_READ | 203,402,751 | 0 | 203,402,751 |

| FILE_BYTES_WRITTEN | 457,707,759 | 52,161,704 | 509,869,463 |

| HDFS_BYTES_WRITTEN | 0 | 1,798,185 | 1,798,185 |

| Reduce input groups | 0 | 373,680 | 373,680 |

| Map output materialized bytes | 52,107,522 | 0 | 52,107,522 |

| Combine output records | 22,202,756 | 0 | 22,202,756 |

| Map input records | 30,661 | 0 | 30,661 |

| Reduce shuffle bytes | 0 | 52,107,522 | 52,107,522 |

| Physical memory (bytes) snapshot | 2,646,589,440 | 116,408,320 | 2,762,997,760 |

| Reduce output records | 0 | 373,680 | 373,680 |

| Spilled Records | 22,866,351 | 2,605,374 | 25,471,725 |

| Map output bytes | 2,115,139,050 | 0 | 2,115,139,050 |

| Total committed heap usage (bytes) | 2,813,853,696 | 84,738,048 | 2,898,591,744 |

| CPU time spent (ms) | 5,766,680 | 11,210 | 5,777,890 |

| Virtual memory (bytes) snapshot | 9,600,737,280 | 1,375,002,624 | 10,975,739,904 |

| SPLIT_RAW_BYTES | 875 | 0 | 875 |

| Map output records | 117,507,725 | 0 | 117,507,725 |

| Combine input records | 137,105,107 | 0 | 137,105,107 |

| Reduce input records | 0 | 2,605,374 | 2,605,374 |

P.S.

Frankly Speaking, chances are I am on the wrong way to Hadoop Programming, since I’m palying Pesudo Distribution Hadoop with my personal computer, which has 4 CUPs and 4G RAM, in real Hadoop Cluster disk spaces might never be a trouble, and all the tuning work I have done may turn into meaningless efforts. Before the Followed Filter, I also did some Hadoop tuning like customed Writable class, RawComparator, block size and io.sort.mb, etc.

---EOF---

原文地址:Adding Filter in Hadoop Mapper Class, 感谢原作者分享。

Java错误:Hadoop错误,如何处理和避免Jun 24, 2023 pm 01:06 PM

Java错误:Hadoop错误,如何处理和避免Jun 24, 2023 pm 01:06 PMJava错误:Hadoop错误,如何处理和避免当使用Hadoop处理大数据时,常常会遇到一些Java异常错误,这些错误可能会影响任务的执行,导致数据处理失败。本文将介绍一些常见的Hadoop错误,并提供处理和避免这些错误的方法。Java.lang.OutOfMemoryErrorOutOfMemoryError是Java虚拟机内存不足的错误。当Hadoop任

idea springBoot项目自动注入mapper为空报错如何解决May 17, 2023 pm 06:49 PM

idea springBoot项目自动注入mapper为空报错如何解决May 17, 2023 pm 06:49 PM在SpringBoot项目中,如果使用了MyBatis作为持久层框架,使用自动注入时可能会遇到mapper报空指针异常的问题。这是因为在自动注入时,SpringBoot无法正确识别MyBatis的Mapper接口,需要进行一些额外的配置。解决这个问题的方法有两种:1.在Mapper接口上添加注解在Mapper接口上添加@Mapper注解,告诉SpringBoot这个接口是一个Mapper接口,需要进行代理。示例如下:@MapperpublicinterfaceUserMapper{//...}2

![解决“[Vue warn]: Failed to resolve filter”错误的方法](https://img.php.cn/upload/article/000/887/227/169243040583797.jpg) 解决“[Vue warn]: Failed to resolve filter”错误的方法Aug 19, 2023 pm 03:33 PM

解决“[Vue warn]: Failed to resolve filter”错误的方法Aug 19, 2023 pm 03:33 PM解决“[Vuewarn]:Failedtoresolvefilter”错误的方法在使用Vue进行开发的过程中,我们有时候会遇到一个错误提示:“[Vuewarn]:Failedtoresolvefilter”。这个错误提示通常出现在我们在模板中使用了一个未定义的过滤器的情况下。本文将介绍如何解决这个错误并给出相应的代码示例。当我们在Vue的

springboot如何实现指定mybatis中mapper文件扫描路径May 17, 2023 pm 10:25 PM

springboot如何实现指定mybatis中mapper文件扫描路径May 17, 2023 pm 10:25 PM指定mybatis中mapper文件扫描路径所有的mapper映射文件mybatis.mapper-locations=classpath*:com/springboot/mapper/*.xml或者resource下的mapper映射文件mybatis.mapper-locations=classpath*:mapper/**/*.xmlmybatis配置多个扫描路径写法百度得到,但是很乱,稍微整理下:最近拆项目,遇到个小问题,稍微记录下:

在Beego中使用Hadoop和HBase进行大数据存储和查询Jun 22, 2023 am 10:21 AM

在Beego中使用Hadoop和HBase进行大数据存储和查询Jun 22, 2023 am 10:21 AM随着大数据时代的到来,数据处理和存储变得越来越重要,如何高效地管理和分析大量的数据也成为企业面临的挑战。Hadoop和HBase作为Apache基金会的两个项目,为大数据存储和分析提供了一种解决方案。本文将介绍如何在Beego中使用Hadoop和HBase进行大数据存储和查询。一、Hadoop和HBase简介Hadoop是一个开源的分布式存储和计算系统,它可

如何使用PHP和Hadoop进行大数据处理Jun 19, 2023 pm 02:24 PM

如何使用PHP和Hadoop进行大数据处理Jun 19, 2023 pm 02:24 PM随着数据量的不断增大,传统的数据处理方式已经无法处理大数据时代带来的挑战。Hadoop是开源的分布式计算框架,它通过分布式存储和处理大量的数据,解决了单节点服务器在大数据处理中带来的性能瓶颈问题。PHP是一种脚本语言,广泛应用于Web开发,而且具有快速开发、易于维护等优点。本文将介绍如何使用PHP和Hadoop进行大数据处理。什么是HadoopHadoop是

探索Java在大数据领域的应用:Hadoop、Spark、Kafka等技术栈的了解Dec 26, 2023 pm 02:57 PM

探索Java在大数据领域的应用:Hadoop、Spark、Kafka等技术栈的了解Dec 26, 2023 pm 02:57 PMJava大数据技术栈:了解Java在大数据领域的应用,如Hadoop、Spark、Kafka等随着数据量不断增加,大数据技术成为了当今互联网时代的热门话题。在大数据领域,我们常常听到Hadoop、Spark、Kafka等技术的名字。这些技术起到了至关重要的作用,而Java作为一门广泛应用的编程语言,也在大数据领域发挥着巨大的作用。本文将重点介绍Java在大

linux下安装Hadoop的方法是什么May 18, 2023 pm 08:19 PM

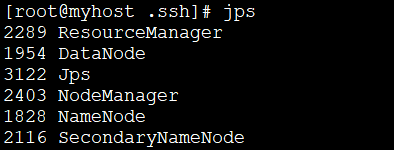

linux下安装Hadoop的方法是什么May 18, 2023 pm 08:19 PM一:安装JDK1.执行以下命令,下载JDK1.8安装包。wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2.执行以下命令,解压下载的JDK1.8安装包。tar-zxvfjdk-8u151-linux-x64.tar.gz3.移动并重命名JDK包。mvjdk1.8.0_151//usr/java84.配置Java环境变量。echo'

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

AI Hentai Generator

免費產生 AI 無盡。

熱門文章

熱工具

MantisBT

Mantis是一個易於部署的基於Web的缺陷追蹤工具,用於幫助產品缺陷追蹤。它需要PHP、MySQL和一個Web伺服器。請查看我們的演示和託管服務。

SecLists

SecLists是最終安全測試人員的伙伴。它是一個包含各種類型清單的集合,這些清單在安全評估過程中經常使用,而且都在一個地方。 SecLists透過方便地提供安全測試人員可能需要的所有列表,幫助提高安全測試的效率和生產力。清單類型包括使用者名稱、密碼、URL、模糊測試有效載荷、敏感資料模式、Web shell等等。測試人員只需將此儲存庫拉到新的測試機上,他就可以存取所需的每種類型的清單。

PhpStorm Mac 版本

最新(2018.2.1 )專業的PHP整合開發工具

Dreamweaver CS6

視覺化網頁開發工具

禪工作室 13.0.1

強大的PHP整合開發環境