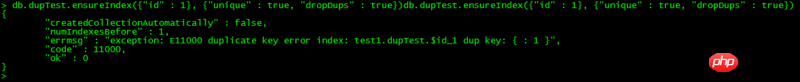

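A field in my database already contains duplicate values. I want to create a unique index on this field and delete the redundant documents, so I set dropDups to true when creating the index, but it still reports a duplicate key error. What's going on? Or is there a quick way to delete the redundant data?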

仅有的幸福 2017-04-28 09:05:35

Duplicates can be removed with a Python script:

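```python
from pymongo import MongoClient

client = MongoClient()
db = client.dbname               # replace "dbname" with your database name
documentname = db.documentname   # replace "documentname" with your collection name

seen = set()  # values of 'field' already encountered

for doc in documentname.find():
    # 'field' is the field that should become unique; a missing field counts as None
    key = doc.get('field')
    if key in seen:
        # Second or later occurrence: delete this document
        print('duplicate key %s' % key)
        documentname.delete_one({'_id': doc['_id']})
    else:
        # First occurrence: remember the value and keep the document
        print('first record key %s' % key)
        seen.add(key)
```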

The idea is simple: iterate over the collection, record each value in a set, and delete a document whenever its value has already been seen.

However, this gives you no control over which document is kept and which are deleted: whichever document the cursor returns first for a given value survives. Adjust the script to suit your scenario.
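One way to control which document survives, sketched here under assumptions: instead of keeping whichever document comes first, keep the one that scores highest under a key function. `plan_deletions`, its `prefer` parameter, and the `email`/`updated` fields in the example are hypothetical names for illustration; the function only computes which `_id`s to delete and does not talk to MongoDB itself.

```python
def plan_deletions(docs, field, prefer):
    """Return the _ids to delete so exactly one document per `field` value remains.

    For each value, the document with the largest prefer(doc) is kept.
    A missing field is treated as None, matching how a unique index
    treats a missing field as null.
    """
    keep = {}        # field value -> document currently chosen to keep
    to_delete = []   # _ids of documents that lost to a preferred one
    for doc in docs:
        key = doc.get(field)
        if key not in keep:
            keep[key] = doc                      # first document for this value
        elif prefer(doc) > prefer(keep[key]):
            to_delete.append(keep[key]['_id'])   # new document wins, drop the old one
            keep[key] = doc
        else:
            to_delete.append(doc['_id'])         # existing choice wins
    return to_delete


# Example: keep the most recently updated document per email (hypothetical fields).
docs = [
    {'_id': 1, 'email': 'a@example.com', 'updated': 5},
    {'_id': 2, 'email': 'a@example.com', 'updated': 9},
    {'_id': 3, 'email': 'b@example.com', 'updated': 1},
    {'_id': 4, 'email': 'a@example.com', 'updated': 7},
]
print(plan_deletions(docs, 'email', prefer=lambda d: d['updated']))  # [1, 4]
```

With pymongo, the input could come from `documentname.find()` and the result could be passed to `documentname.delete_many({'_id': {'$in': ids}})`. Note that this keeps one document per distinct value in memory.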

我想大声告诉你 2017-04-28 09:05:35

I have also encountered this situation. I don’t know how to solve it. Can you give me some advice?

伊谢尔伦 2017-04-28 09:05:35

With more than 100,000 documents, can a script still process them quickly? And how does the script cope with heavy concurrent writes?
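On the speed question, a likely cost in the script above is one delete round trip per duplicate. A minimal sketch, assuming pymongo 3 or later (`find` with a projection, `delete_many` returning a result with `deleted_count`): fetch only `_id` and the target field, and delete the collected `_id`s in batches. `remove_duplicates_batched` and `batch_size` are hypothetical names. This does not handle concurrent writes; documents inserted while it runs can still introduce new duplicates.

```python
def remove_duplicates_batched(collection, field, batch_size=1000):
    """Keep the first document seen for each `field` value and delete the rest.

    Returns the number of documents deleted.
    """
    seen = set()   # values already encountered
    batch = []     # pending _ids of duplicates
    deleted = 0
    # Fetch only the target field; _id is included by default.
    for doc in collection.find({}, {field: 1}):
        key = doc.get(field)
        if key in seen:
            batch.append(doc['_id'])
            if len(batch) >= batch_size:
                # One round trip deletes a whole batch of duplicates.
                deleted += collection.delete_many({'_id': {'$in': batch}}).deleted_count
                batch = []
        else:
            seen.add(key)
    if batch:
        # Flush the final partial batch.
        deleted += collection.delete_many({'_id': {'$in': batch}}).deleted_count
    return deleted
```

Usage would look like `remove_duplicates_batched(MongoClient().dbname.documentname, 'field')`, with the same placeholder names as the script above.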

PHP中文网 2017-04-28 09:05:35

MongoDB 3.0 removed the dropDups option, so duplicate data can no longer be deleted this way when building the index.

http://blog.chinaunix.net/xmlrpc.php?r=blog/article&id=4865696&uid=15795819
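Since dropDups is no longer available, one workaround is to remove the duplicates first and then create the unique index without it. A sketch, assuming pymongo and a server supporting `$sort`/`$group` with `allowDiskUse`: group on the server, keep the smallest `_id` in each group, delete the rest with one `delete_many` per duplicated value, then call `create_index(field, unique=True)`. The function names are hypothetical. If new duplicates are written before the index is built, the index build would be expected to fail with a duplicate key error, in which case the cleanup can be rerun.

```python
def duplicate_groups(collection, field):
    """Return an aggregation cursor with one result per `field` value occurring more than once."""
    pipeline = [
        # Sort first so each group's ids are collected in ascending _id order
        # (assumes $push preserves the order documents enter $group).
        {'$sort': {'_id': 1}},
        {'$group': {'_id': '$' + field,
                    'ids': {'$push': '$_id'},
                    'count': {'$sum': 1}}},
        # Keep only values that appear more than once.
        {'$match': {'count': {'$gt': 1}}},
    ]
    # allowDiskUse lets large $sort/$group stages spill to disk.
    return collection.aggregate(pipeline, allowDiskUse=True)


def dedupe_then_unique_index(collection, field):
    """Delete all but the smallest _id per value, then build the unique index."""
    removed = 0
    for group in duplicate_groups(collection, field):
        extra = group['ids'][1:]   # everything after the first (smallest) _id
        removed += collection.delete_many({'_id': {'$in': extra}}).deleted_count
    # No dropDups: this should raise an error if duplicates remain
    # (for example, ones written concurrently during the cleanup).
    collection.create_index(field, unique=True)
    return removed
```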