I've downloaded this code from the web. How do I deploy and run it?

I downloaded some distributed-crawling code from GitHub and don't know how to use it. Could any experts give me some pointers...

Here is the URL:

https://github.com/rolando/scrapy-redis

I've set up the environment as described there, but I don't know how to actually make it distributed.

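Here is how I understand distribution: there is a Redis server; URL seeds are collected from a web page and put into the Redis server, and those seeds are then handed out to the other machines. Along the way there are scheduling issues, plus communication between the server and the machines.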
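A minimal sketch of the flow described above, under stated assumptions: a "master" parses one page for links and pushes them as seeds into a shared list, and each worker pops the next seed. `FakeRedis` is a hypothetical in-memory stand-in that mimics the redis-py `lpush`/`rpop` calls so the example runs without a server; `HrefCollector`, `seed_from_page` and `worker_pop` are illustrative names, not part of scrapy-redis.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class HrefCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links such as "/a" against the page URL.
                self.links.append(urljoin(self.base_url, value))


class FakeRedis:
    """Hypothetical in-memory stand-in for the Redis list commands used here."""

    def __init__(self):
        self.lists = {}

    def lpush(self, name, *values):
        lst = self.lists.setdefault(name, [])
        for v in values:
            lst.insert(0, v)  # LPUSH adds each value at the head
        return len(lst)

    def rpop(self, name):
        lst = self.lists.get(name)
        return lst.pop() if lst else None  # RPOP removes from the tail


def seed_from_page(server, key, base_url, html):
    """Master side: extract links from one page and push them as seeds."""
    parser = HrefCollector(base_url)
    parser.feed(html)
    parser.close()
    if parser.links:
        server.lpush(key, *parser.links)
    return parser.links


def worker_pop(server, key):
    """Worker side: take the next seed, or None when the list is empty."""
    return server.rpop(key)
```

Pushing with LPUSH and popping with RPOP gives first-in, first-out order, and because a pop removes the item, no two workers receive the same seed. With a real redis-py client, `rpop` returns bytes unless the client is created with `decode_responses=True`.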

Thanks...

PHP中文网 2017-04-24 09:13:57

I don't think this can be explained clearly in a sentence or two.

Here is a blog post I referred to earlier; I hope it helps.

Here is my own understanding.

Scrapy stores the requests waiting to be crawled in an improved version of Python's built-in collections.deque. So how can two or more spiders share this deque?
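To make the question concrete, here is a toy illustration (plain Python, not Scrapy's actual scheduler; `LocalSpider` is hypothetical): each spider keeps pending URLs in its own `collections.deque`, so a URL scheduled by one spider is invisible to another. Across machines, the deques do not even share an address space.

```python
from collections import deque


class LocalSpider:
    """Hypothetical spider holding its pending URLs in a process-local deque."""

    def __init__(self, name):
        self.name = name
        self.pending = deque()

    def schedule(self, url):
        self.pending.append(url)

    def next_url(self):
        # FIFO: take from the left end; None when nothing is pending.
        return self.pending.popleft() if self.pending else None


spider_a = LocalSpider("a")
spider_b = LocalSpider("b")
spider_a.schedule("http://example.com/page1")

print(spider_b.next_url())  # None: b never sees a's queue
print(spider_a.next_url())  # http://example.com/page1
```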

If the queue of pending requests can't be shared, distribution is out of the question. scrapy-redis provides a solution: it replaces collections.deque with a Redis database, and multiple crawlers store the requests to be crawled on the same Redis server. Multiple spiders can then read from the same database, which solves the main problem of distribution.
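A sketch of that fix, assuming only that every spider connects to the same Redis server: the queue's storage moves from process memory to a Redis list, so any spider can pop what another pushed. `FakeRedis` is an in-memory stand-in for redis-py's `lpush`/`rpop` so the snippet runs without a server, and the key `myspider:requests` is illustrative. scrapy-redis itself stores serialized `Request` objects rather than bare URL strings.

```python
class FakeRedis:
    """In-memory stand-in for one Redis server (LPUSH/RPOP only)."""

    def __init__(self):
        self.lists = {}

    def lpush(self, name, *values):
        lst = self.lists.setdefault(name, [])
        for v in values:
            lst.insert(0, v)
        return len(lst)

    def rpop(self, name):
        lst = self.lists.get(name)
        return lst.pop() if lst else None


class SharedQueue:
    """FIFO queue whose storage lives on the server, not in the process."""

    def __init__(self, server, key):
        self.server = server
        self.key = key

    def push(self, url):
        self.server.lpush(self.key, url)

    def pop(self):
        return self.server.rpop(self.key)


server = FakeRedis()  # plays the single server every spider connects to
queue_a = SharedQueue(server, "myspider:requests")  # spider on machine A
queue_b = SharedQueue(server, "myspider:requests")  # spider on machine B

queue_a.push("http://example.com/page1")
queue_a.push("http://example.com/page2")
print(queue_b.pop())  # http://example.com/page1 (pushed by A, popped by B)
print(queue_a.pop())  # http://example.com/page2
```

On real machines, each process would pass its own `redis.Redis(host=..., port=...)` client pointing at the same server instead of the shared `FakeRedis()` object; the queue code stays the same, only the storage becomes shared.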

Note: simply switching to Redis to store requests does not make Scrapy distributed by itself!

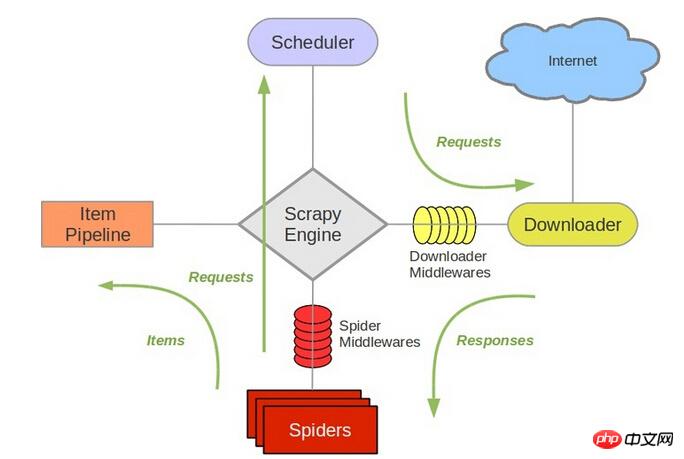

In Scrapy, the component directly tied to the queue of pending requests is the Scheduler.

Reference: Scrapy architecture

The Scheduler is responsible for enqueuing new requests, dequeuing the next request to crawl, and similar operations. So once the queue is moved to Redis, other components have to change as well.
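One component that has to change along with the queue is duplicate filtering: a per-process "seen" set would let two machines crawl the same URL. The toy scheduler below keeps both the queue and a set of URL fingerprints on the shared server. Its method names echo Scrapy's scheduler interface (`enqueue_request`, `next_request`), but it is a standalone sketch, not a Scrapy component; `fingerprint` is a simplified, hypothetical stand-in for Scrapy's request fingerprint, and `FakeRedis` again mimics the redis-py calls in memory. As far as I know, scrapy-redis's duplicate filter also relies on a Redis set and SADD's return value (0 means the member already existed).

```python
import hashlib


class FakeRedis:
    """In-memory stand-in for the Redis commands used below."""

    def __init__(self):
        self.lists = {}
        self.sets = {}

    def lpush(self, name, *values):
        lst = self.lists.setdefault(name, [])
        for v in values:
            lst.insert(0, v)
        return len(lst)

    def rpop(self, name):
        lst = self.lists.get(name)
        return lst.pop() if lst else None

    def sadd(self, name, *values):
        s = self.sets.setdefault(name, set())
        added = len(set(values) - s)  # like SADD: count only new members
        s.update(values)
        return added


def fingerprint(url):
    """Hypothetical, simplified fingerprint: SHA1 of the URL string."""
    return hashlib.sha1(url.encode("utf-8")).hexdigest()


class SharedScheduler:
    """Toy scheduler: queue and seen-set both live on the shared server."""

    def __init__(self, server, spider_name):
        self.server = server
        self.queue_key = f"{spider_name}:requests"
        self.seen_key = f"{spider_name}:dupefilter"

    def enqueue_request(self, url):
        # SADD returns 0 when the fingerprint is already in the set,
        # i.e. some machine has scheduled this URL before.
        if self.server.sadd(self.seen_key, fingerprint(url)) == 0:
            return False
        self.server.lpush(self.queue_key, url)
        return True

    def next_request(self):
        return self.server.rpop(self.queue_key)


server = FakeRedis()
machine1 = SharedScheduler(server, "myspider")
machine2 = SharedScheduler(server, "myspider")
print(machine1.enqueue_request("http://example.com/a"))  # True
print(machine2.enqueue_request("http://example.com/a"))  # False: seen on machine1
print(machine2.next_request())  # http://example.com/a
```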

So my understanding is: deploy the same crawler on multiple machines and set up a Redis deployment that they all share (reference: my blog, which is fairly simple).

All of this work, including URL deduplication, is already implemented by the scrapy-redis framework.
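As a sketch of the multi-machine deployment, this is roughly the `settings.py` the scrapy-redis README describes (verify the setting names against the version you install): the same file is copied to every machine, and only `REDIS_URL` must point at the one shared Redis server. The address below is a hypothetical placeholder.

```python
# settings.py, identical on every crawler machine (sketch).

# Replace Scrapy's in-memory scheduler with the Redis-backed one,
# so every machine shares one request queue.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Share the duplicate filter through Redis as well, so a URL seen
# by one machine is not crawled again by another.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the Redis queue and seen-set when a crawler stops,
# which allows pausing and resuming the crawl.
SCHEDULER_PERSIST = True

# Optional: push scraped items into Redis for later processing.
ITEM_PIPELINES = {
    "scrapy_redis.pipelines.RedisPipeline": 300,
}

# Placeholder address of the single shared Redis server (hypothetical).
REDIS_URL = "redis://192.0.2.10:6379"
```

Then run `scrapy crawl` with the same spider on each machine; all of them pull from and push to the same Redis queue.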

The reference link is here; you can download the example to see the concrete implementation. I've been working with scrapy-redis recently and will update this answer once I've deployed it.

If you make any progress, please share it so we can compare notes.

黄舟 2017-04-24 09:13:57

@伟兴 Hi, I saw this comment from 15.10.11. Have you had any results since then?

Could you recommend some of your blog posts? Thanks~

You can contact me at chenjian158978@gmail.com