On a collection of about 700,000 (70W) documents, running the query 'name': /Mamacitas / takes 17.358767 seconds to complete.

Sample document:

```javascript
{
    "Attitude_low": NumberInt(0),
    "Comments": "i",
    "file": [
        "mamacitas-7-scene3.avi",
        "mamacitas-7-scene4.avi",
        "mamacitas-7-scene5.avi",
        "mamacitas-7-scene2.avi",
        "mamacitas-7-scene1.avi",
        "14968frontbig.jpg",
        "[000397].gif",
        "mamacitas-7-bonus-scene1.avi",
        "14968backbig.jpg"
    ],
    "Announce": "http://exodus.desync.com/announce",
    "View": NumberInt(0),
    "Hash": "9E3842903C56E8BBC0C7AF7A0A8636590491923C",
    "name": "Mamacitas 7[SILVERDUST]",
    "Encoding": "!",
    "EntryTime": 1403169286.9712,
    "Attitude_top": NumberInt(0),
    "CreatedBy": "ruTorrent (PHP Class - Adrien Gibrat)",
    "CreationDate": NumberInt(1365851919)
}
```

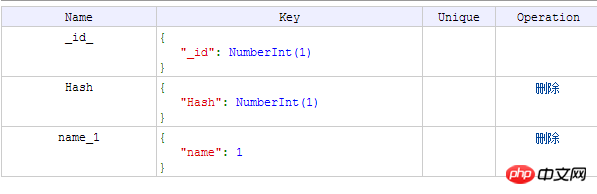

About the indexes:

How can I make this match faster?

怪我咯 2017-04-24 09:11:39

Hash-type indexes don't help with fuzzy queries. What you really need is a search engine that first segments the text into words and then builds its index over those words.

One option is to use a dedicated search engine such as Elasticsearch and build a separate index there.

Alternatively, use a word segmentation library to split your fields into words, store those words in a separate MongoDB collection, and then rely on MongoDB's ordinary (default) indexes on that collection.
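A minimal sketch of that second option, assuming a plain regex tokenizer as a hypothetical stand-in for a real word segmentation library and a hypothetical side collection of `{token, hash}` documents. In MongoDB you would insert these token documents, create an ordinary index with something like `db.name_tokens.createIndex({token: 1})` (`name_tokens` is a hypothetical name), and look words up with an exact match such as `{token: "mamacitas"}`. Here an in-memory dict stands in for that indexed collection so the idea can be run on its own.

```python
import re
from collections import defaultdict

# Hypothetical tokenizer: lowercase the text and keep runs of ASCII letters/digits.
# Swap in a real word segmentation library for CJK or other unspaced text.
TOKEN_RE = re.compile(r"[a-z0-9]+")


def tokenize(text):
    """Split a title into lowercase word tokens."""
    return TOKEN_RE.findall(text.lower())


def build_token_docs(doc):
    """One {token, hash} document per distinct word in doc["name"].

    These are what you would insert into a hypothetical side collection
    (e.g. "name_tokens") that has an ordinary index on "token".
    """
    return [{"token": t, "hash": doc["Hash"]} for t in sorted(set(tokenize(doc["name"])))]


def build_index(docs):
    """In-memory stand-in for the indexed token collection: token -> set of hashes."""
    index = defaultdict(set)
    for doc in docs:
        for token_doc in build_token_docs(doc):
            index[token_doc["token"]].add(token_doc["hash"])
    return index


def search(index, word):
    """Exact token lookup, like find({"token": word.lower()}) on the indexed field."""
    return sorted(index.get(word.lower(), set()))


docs = [
    {"Hash": "9E3842903C56E8BBC0C7AF7A0A8636590491923C", "name": "Mamacitas 7[SILVERDUST]"},
]
index = build_index(docs)
print(search(index, "Mamacitas"))
```

Because each lookup is an exact match on an indexed field, MongoDB can use the index directly instead of testing a regex against every value; the trade-off is that the token collection must be kept in sync whenever `name` changes.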

天蓬老师 2017-04-24 09:11:39

You could try MongoDB text indexes. It looks like you only need to match whole words, which is exactly the use case this index type is designed for.
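A minimal sketch of the text index approach, assuming `collection` is a pymongo `Collection` (it is duck-typed here so the helpers have no hard dependency; `pymongo.TEXT` is simply the string `"text"`). The index name `name_text` and the projected fields are illustrative choices, not requirements.

```python
# Sketch only: assumes `collection` is a pymongo Collection. pymongo.TEXT is the
# string "text", so it is written literally here to avoid a hard import.
TEXT_INDEX_KEYS = [("name", "text")]


def ensure_name_text_index(collection):
    """Create a text index on "name". MongoDB allows only one text index per collection."""
    return collection.create_index(TEXT_INDEX_KEYS, name="name_text")


def text_search_filter(words):
    """Build a $text filter; MongoDB tokenizes (and stems) the search string itself."""
    return {"$text": {"$search": words}}


def find_by_words(collection, words, limit=20):
    """Return matching documents with their relevance score attached."""
    projection = {"name": 1, "Hash": 1, "score": {"$meta": "textScore"}}
    cursor = collection.find(text_search_filter(words), projection).limit(limit)
    return list(cursor)
```

Usage would look like `find_by_words(client.mydb.torrents, "Mamacitas")`, where `mydb` and `torrents` are hypothetical names. Note that a text index matches whole (stemmed) words, not arbitrary substrings, so it fits a query like `/Mamacitas /` but will not replace every possible regex.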

PHPz 2017-04-24 09:11:39

You can pair Lucene or Sphinx with MongoDB to handle search queries. MongoDB's own efficiency for this kind of query is admittedly fairly low.
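In such a combined setup the search engine typically answers the fuzzy part and MongoDB only serves an exact lookup. A minimal sketch, assuming the engine stores each document's `Hash` as its ID and returns hits best match first; `search_engine_query` is a hypothetical client call, and the `$in` lookup is only fast if `Hash` has an ordinary index.

```python
# Sketch only: assumes the search engine (Lucene, Sphinx, Elasticsearch, ...)
# indexes "name" and returns the matching documents' "Hash" values, best match first.


def mongo_filter_for_hits(hit_hashes):
    """Turn the search engine's hits into a single MongoDB query on "Hash"."""
    return {"Hash": {"$in": list(hit_hashes)}}


def order_like_hits(docs, hit_hashes):
    """$in does not return documents in list order, so restore the engine's ranking."""
    rank = {h: i for i, h in enumerate(hit_hashes)}
    return sorted((d for d in docs if d.get("Hash") in rank), key=lambda d: rank[d["Hash"]])


# Hypothetical usage with a pymongo collection:
# hits = search_engine_query("Mamacitas")  # hypothetical client call
# docs = order_like_hits(list(collection.find(mongo_filter_for_hits(hits))), hits)
```

Keeping MongoDB as the source of truth and the search engine as a secondary index means the engine only needs the searchable fields plus the ID, at the cost of having to reindex whenever documents change.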