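I downloaded a Sina Weibo dataset, but the raw data is in CSV format and I can't open it directly on my Mac. Reading it with pandas also fails with:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0x84 in position 36: invalid start byte

After some googling, I found that read_csv('file', encoding = "ISO-8859-1") reads the file without any errors.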

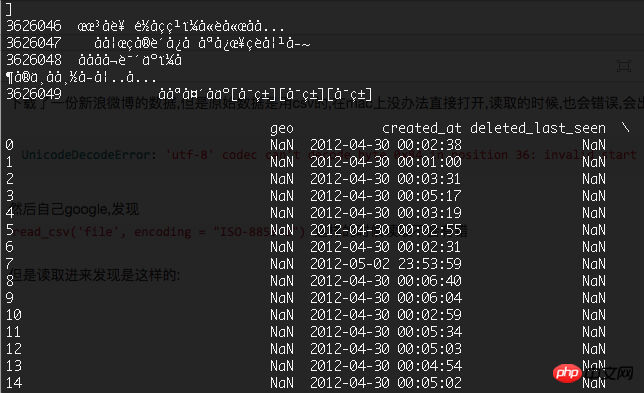

But what gets read in looks like this:

All of the Chinese text is garbled.
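Why ISO-8859-1 "works" here: it maps each of the 256 possible byte values to exactly one character, so decoding can never fail, but every multibyte Chinese character comes out as several unrelated Latin-1 characters. A small illustration, assuming for the demo that the text was stored as UTF-8 (the thread never establishes the file's real encoding); the function name is hypothetical:

```python
def latin1_roundtrip_demo(text):
    """Show why encoding="ISO-8859-1" never raises but garbles Chinese text."""
    raw = text.encode("utf-8")          # assumed on-disk bytes (UTF-8 for this demo)
    garbled = raw.decode("iso-8859-1")  # one character per byte, so no error is possible
    # The byte-to-character mapping is one-to-one, so it can be undone exactly.
    restored = garbled.encode("iso-8859-1").decode("utf-8")
    return garbled, restored


garbled, restored = latin1_roundtrip_demo("中文")
print(repr(garbled))  # six Latin-1 characters instead of two Chinese ones
print(restored)       # 中文
```

The round trip only recovers the text if the bytes really are valid UTF-8; the 0x84 error above suggests at least part of this file is not, so this explains the symptom rather than fixing it.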

Then I tried read_csv('file', encoding = "gbk"), read_csv('file', encoding = "utf8"), and read_csv('file', encoding = "gb18030").

None of them worked. The typical error looks like this:

UnicodeDecodeError: 'gb18030' codec can't decode byte 0xaf in position 12: incomplete multibyte sequence

Has anyone run into something similar?

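Someone asked for the data. It's fairly large, but if you're willing to help you can take a look; I think it's quite useful.

Here is the Weibo data:

Link: http://pan.baidu.com/s/1jHCOwCI Password: x58f

Adding the code as well:

Download any one of the files above (they are CSV files) and open it with pandas, and you get the error: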

import pandas

df = pandas.read_csv("week1.csv")  # fails with the UnicodeDecodeError shown above

伊谢尔伦 2017-04-18 10:30:36

Give me the code and original data

Just post a minimal piece of code that reproduces the problem plus a representative sample of the data; don't hand us several gigabytes of data~

Who is going to dig through all of that?

大家讲道理2017-04-18 10:30:36

I'm in the same situation as you. I tried a lot of encodings and none of them worked. However, if I decode the data as UTF-8, part of it converts successfully. So the workaround I can think of for now is to read the file line by line with open() and simply discard whatever fails during the encoding conversion; there is plenty of data anyway, so losing a little is acceptable.
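One way to read this line-by-line workaround, as a minimal sketch: open the file in binary mode, try to decode each line as UTF-8, and drop the lines that fail. The function names are hypothetical, and dropping whole lines (rather than individual bad bytes) is an assumption about what "discard the errors" means.

```python
def decode_lines(raw_lines, encoding="utf-8"):
    """Decode byte lines one by one, dropping any line that fails to decode.

    Returns (decoded_lines, skipped_count).
    """
    decoded, skipped = [], 0
    for raw in raw_lines:
        try:
            decoded.append(raw.decode(encoding))
        except UnicodeDecodeError:
            skipped += 1  # discard the whole line instead of guessing at its bytes
    return decoded, skipped


def read_decodable_lines(path, encoding="utf-8"):
    # Binary mode: iterating yields byte lines split at b"\n", and the
    # decode step (with its failures) stays under our control.
    with open(path, "rb") as f:
        return decode_lines(f, encoding)
```

The surviving lines can then be handed to pandas, e.g. `pd.read_csv(io.StringIO("".join(lines)))`. Two caveats: a quoted CSV field that spans several lines gets split by line-based reading, and each dropped line is a lost record. If losing individual bytes is preferable to losing whole lines, `open(path, encoding="utf-8", errors="ignore")` does that, and pandas 1.3+ exposes the same idea as `read_csv(..., encoding_errors="ignore")`.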

高洛峰2017-04-18 10:30:36

You can also try cp1252. Better still, first use the chardet package (https://pypi.python.org/pypi/...) to detect which encoding the file actually uses.
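Before (or alongside) chardet, a quick standard-library check is to see which candidate encodings decode a sample of the file without raising. This is a swapped-in technique, not chardet itself; the candidate list and function names below are hypothetical choices for this thread.

```python
from itertools import islice

# Hypothetical candidates; ISO-8859-1 is left out because it decodes any bytes.
CANDIDATES = ("utf-8", "gb18030", "gbk", "cp1252")


def probe_encodings(sample, candidates=CANDIDATES):
    """Return the candidate encodings that decode `sample` without raising."""
    ok = []
    for enc in candidates:
        try:
            sample.decode(enc)
        except UnicodeDecodeError:
            continue
        ok.append(enc)
    return ok


def probe_file(path, max_lines=1000, candidates=CANDIDATES):
    # Take whole lines in binary mode so the sample does not end mid-character
    # (true for UTF-8 and GBK-family files, not for UTF-16).
    with open(path, "rb") as f:
        sample = b"".join(islice(f, max_lines))
    return probe_encodings(sample, candidates)
```

Decoding without an error does not prove an encoding is correct (cp1252 accepts most byte values), so an empty result or a suspiciously long list is itself informative. With chardet installed, `chardet.detect(sample)` returns a dict with `encoding` and `confidence` keys for the same sample.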

天蓬老师2017-04-18 10:30:36

I tried it and had no problem. My guess is that it's an encoding issue in your environment. You can try the following code:

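#coding=utf-8
# Python 2 code: reload() is a builtin and sys.setdefaultencoding() exists only in Python 2
import pandas as pd
import sys

reload(sys)  # re-exposes setdefaultencoding, which site.py deletes at startup
sys.setdefaultencoding("utf-8")  # implicit str/unicode conversions now use UTF-8

# Read only the first 10 rows as a quick check
df = pd.read_csv('week1.csv', encoding='utf-8', nrows=10)

print df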
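For reference, in Python 3 the `reload(sys)` / `sys.setdefaultencoding` lines are neither needed nor available: strings are Unicode and the file's encoding is passed explicitly, so the pandas part reduces to `pd.read_csv('week1.csv', encoding='utf-8', nrows=10)` followed by `print(df)`. To tell whether the file itself decodes, independent of pandas, a sketch with the standard-library csv module (function names hypothetical) reads the same first rows:

```python
import csv
from itertools import islice


def head_rows(lines, n=10):
    """Parse the first n CSV records from an iterable of text lines."""
    return list(islice(csv.reader(lines), n))


def head_rows_from_file(path, n=10, encoding="utf-8"):
    # Python 3: the encoding is given per file; there is no global default to patch.
    # newline="" is what the csv module documentation recommends for csv.reader.
    # A UnicodeDecodeError here means the file is not valid `encoding`, pandas aside.
    with open(path, encoding=encoding, newline="") as f:
        return head_rows(f, n)
```

If this raises on the same byte as pandas did, the problem lies in the file's bytes rather than in the Python or pandas environment.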